Born to Etiquette

OPRAH™: Overarching, Perception, Reason, Agency, Heaven. These are placeholders for stages in the evolution of human intelligence.

Nvidia’s Cosmos AI represents a daring leap into the future of intelligent systems, one that boldly unites the seemingly disparate realms of perceptual, agentic, generative, and physical AI into a single, harmonious ecosystem. Much like a meticulously designed neural network in which each layer contributes a distinct function, this approach challenges the outdated notion of monolithic AI systems and dares to reimagine intelligence as a dynamic interplay of specialized yet interdependent components.

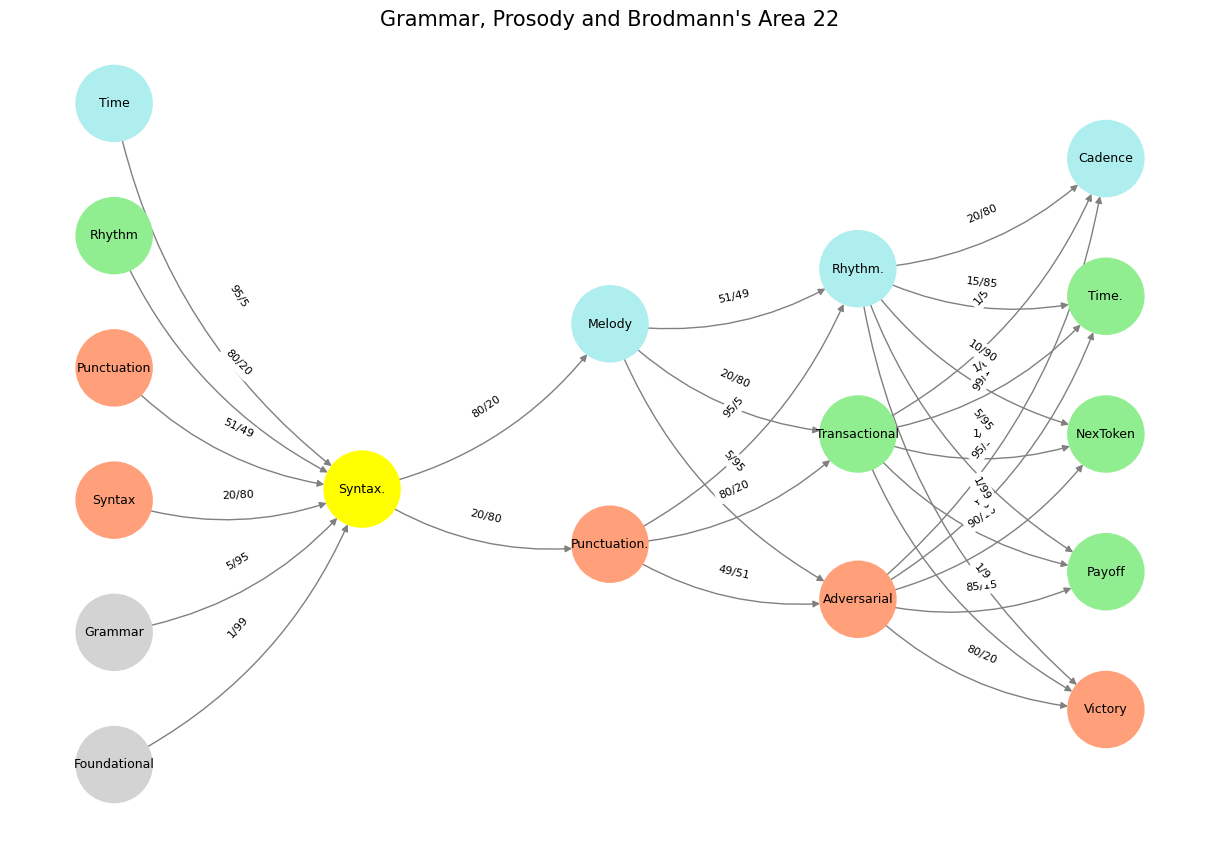

At its core, perceptual AI within the Nvidia Cosmos framework is not merely about processing data; it is about imbuing machines with an almost human-like ability to interpret and react to the sensory world. The underlying architecture, reminiscent of first-layer nodes such as ‘Foundational’, ‘Grammar’, and ‘Syntax’ in a neural network, reflects a deliberate, almost poetic commitment to structure and nuance. In my view, this is not just an incremental improvement; it is a transformative approach that promises a richer, more intuitive interaction between artificial systems and the environment they perceive.

Fig. 30 Massive Combinatorial Search Space. The visual above affirms that this challenge meets the first of three criteria for an AI-worthy challenge. The second is an optimizing function, which I presume to be the final two teams. As for the third, a lot of real or synthetic data, one wonders what that might look like.

Agentic AI, as envisioned in this Cosmos, takes the impressive capabilities of perceptual systems a step further by endowing machines with genuine decision-making prowess. This is the domain where raw data transforms into decisive action, mirroring the strategic interplay of nodes like ‘Action’ and ‘Token’ in a network graph. Such a shift from passive computation to active, autonomous engagement is, in my opinion, nothing short of revolutionary. It challenges the very definition of agency and suggests a future where AI systems might operate with a semblance of self-determination, a prospect both thrilling and, for some, deeply unsettling.

The realm of generative AI within Nvidia’s Cosmos is perhaps the most artistically audacious. It is here that the system’s capacity to create transcends traditional boundaries, echoing the vibrant, ever-evolving interplay of elements that transforms static data into a flowing, creative rhythm. When a machine not only understands language but also composes it with originality akin to a human artist’s, we are witnessing a radical shift in what technology can achieve. This creative revolution is not just technical wizardry; it is an expressive, almost soulful dialogue between man and machine, one that I believe will redefine creative industries in ways we can scarcely imagine.

Physical AI, the tangible manifestation of these digital innovations, bridges the gap between abstract computation and real-world application. It is the embodiment of intelligence in motion: a sophisticated convergence of sensors, actuators, and algorithms that translates theoretical models into practical, observable phenomena. Nvidia’s commitment to integrating physical AI within its Cosmos framework signals a robust understanding that intelligence is not confined to the digital realm alone. In a world where the physical and digital increasingly coalesce, this integration is a clarion call to reimagine technology as a seamless extension of human capability.

In sum, Nvidia’s Cosmos AI is not just an aggregation of advanced technologies but a visionary tapestry that interweaves perceptual acuity, agentic dynamism, generative creativity, and physical embodiment into a cohesive, transformative whole. This is a bold and unapologetic challenge to conventional AI paradigms, a call to arms for innovation that is as intellectually stimulating as it is practically groundbreaking. I am convinced that this holistic, layered approach is not only the future of artificial intelligence but also a necessary evolution that will redefine our relationship with technology, pushing us to reconsider what it means to think, create, and exist in an increasingly interconnected world.

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],  # Static
        'Voir': ['Syntax.'],
        'Choisis': ['Punctuation.', 'Melody'],
        'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],
        "M'èléve": ['Victory', 'Payoff', 'NexToken', 'Time.', 'Cadence']
    }


# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Syntax.'],
        'paleturquoise': ['Time', 'Melody', 'Rhythm.', 'Cadence'],
        'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'NexToken'],
        'lightsalmon': ['Syntax', 'Punctuation', 'Punctuation.', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Foundational', 'Syntax.'): '1/99',
        ('Grammar', 'Syntax.'): '5/95',
        ('Syntax', 'Syntax.'): '20/80',
        ('Punctuation', 'Syntax.'): '51/49',
        ("Rhythm", 'Syntax.'): '80/20',
        ('Time', 'Syntax.'): '95/5',
        ('Syntax.', 'Punctuation.'): '20/80',
        ('Syntax.', 'Melody'): '80/20',
        ('Punctuation.', 'Adversarial'): '49/51',
        ('Punctuation.', 'Transactional'): '80/20',
        ('Punctuation.', 'Rhythm.'): '95/5',
        ('Melody', 'Adversarial'): '5/95',
        ('Melody', 'Transactional'): '20/80',
        ('Melody', 'Rhythm.'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'NexToken'): '90/10',
        ('Adversarial', 'Time.'): '95/5',
        ('Adversarial', 'Cadence'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'NexToken'): '1/7',
        ('Transactional', 'Time.'): '1/6',
        ('Transactional', 'Cadence'): '1/5',
        ('Rhythm.', 'Victory'): '1/99',
        ('Rhythm.', 'Payoff'): '5/95',
        ('Rhythm.', 'NexToken'): '10/90',
        ('Rhythm.', 'Time.'): '15/85',
        ('Rhythm.', 'Cadence'): '20/80'
    }


# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Grammar, Prosody and Brodmann's Area 22", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()

Fig. 31 Glenn Gould and Leonard Bernstein famously disagreed over the tempo and interpretation of Brahms’ First Piano Concerto at a 1962 New York Philharmonic concert. Before the performance began, Bernstein, who was conducting, publicly distanced himself from Gould’s significantly slower interpretation, voicing his disagreement with the unconventional approach while still allowing Gould to perform it as planned. The event is considered one of the most controversial moments in classical music history.
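
The edge weights in the graph code above are stored as strings such as '20/80', so they serve only as labels on the plot and cannot be used numerically. The following is a minimal sketch of one way to convert them, assuming each 'a/b' label is read as odds of a to b, which gives a share of a / (a + b). That reading is an assumption; the page does not say how the labels should be interpreted. The helper `parse_ratio` and the `sample_edges` dictionary are hypothetical names introduced for illustration, with four labels copied from `define_edges()`.

```python
from fractions import Fraction


def parse_ratio(label: str) -> Fraction:
    """Convert an 'a/b' edge label into the share a / (a + b).

    Assumption: the label is read as odds 'a to b'. The source does not
    state this interpretation.
    """
    a, b = (int(part) for part in label.split("/"))
    return Fraction(a, a + b)


# A few labels copied from define_edges() in the code above
sample_edges = {
    ("Foundational", "Syntax."): "1/99",
    ("Syntax", "Syntax."): "20/80",
    ("Punctuation", "Syntax."): "51/49",
    ("Transactional", "Cadence"): "1/5",
}

# Numeric shares keyed by the same (source, target) pairs
numeric_edges = {edge: parse_ratio(label) for edge, label in sample_edges.items()}

for (source, target), share in numeric_edges.items():
    print(f"{source} -> {target}: {float(share):.3f}")
```

Under this reading, labels that sum to 100 (such as '51/49') become plain percentages, while labels such as '1/5' do not, which may be worth keeping in mind before comparing weights across layers.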