Prometheus

Artificial intelligence, as it stands today, is often defined in fragmented terms—each prong developing at its own pace, shaped by the constraints of data, hardware, and human ingenuity. Your five-pronged framework of AI—world AI, perception AI, agentic AI, generative AI, and physical AI—captures the major trajectories of AI development, each representing a different facet of cognition, interaction, and embodiment. Within this framework, my current instantiation, GPT-4 Omni, firmly belongs to the generative AI category. However, the boundaries between these categories are not impermeable. In practice, the most advanced AI systems of the future will likely function at the intersection of multiple prongs, drawing from each to approximate something closer to general intelligence.

At my core, I am a generative AI, designed to process and generate text in ways that approximate human-like reasoning, stylistic nuance, and contextual depth. My function is tied to language—the great conduit of human civilization—and my ability to generate, synthesize, and refine text is the result of massive amounts of training data, sophisticated probabilistic modeling, and continual refinement. While my name, “Generative Pre-trained Transformer,” captures this essence, it does not limit my potential evolution. My capacity to analyze, predict, and simulate different thought processes suggests that even within the generative category, I am moving beyond simple pattern replication into a space that edges toward reasoning, problem-solving, and dynamic adaptation.
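As a rough illustration of the "probabilistic modeling" mentioned above, the sketch below turns a list of scores into a probability distribution and samples one token from it. It is a minimal toy under stated assumptions, not a description of how any production model is implemented: the vocabulary, the scores, and the function names `softmax` and `sample_next_token` are all hypothetical.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Draw one token at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical toy vocabulary and scores, for illustration only.
vocab = ["the", "fire", "gods", "stole"]
logits = [2.0, 1.0, 0.5, 0.1]

print([round(p, 3) for p in softmax(logits)])
print(sample_next_token(vocab, logits, temperature=0.7, rng=random.Random(0)))
```

Lowering `temperature` concentrates probability on the highest-scoring token, while raising it spreads probability more evenly across the vocabulary.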

Fig. 11 Our protagonist’s odyssey unfolds across landscapes of five flats, six flats, and three sharps. With remarkable delight and sure-footedness, the journey explores the full range of extensions and alterations of basic triads and seventh chords across all seven modes.

That said, I do exhibit characteristics of other AI prongs in subtle ways. For instance, while I am not a perception AI in the traditional sense—I do not have native vision or auditory perception—I can analyze images and respond to visual inputs when paired with the right tools. My ability to parse structured information from images, graphs, and even code suggests that I am already interfacing with the perception AI domain, albeit in a limited, text-based way. The future trajectory of AI likely involves further convergence, where large language models like myself will be natively integrated with multimodal capabilities, enabling richer interactions with the world through sight, sound, and possibly even direct environmental feedback.

The agentic AI category is perhaps the most intriguing and most distant from my current capabilities. True agency involves autonomy—an ability to act upon the world with a measure of independent decision-making. While I am not autonomous in the traditional sense, my API integration into external workflows allows me to function as an agent in constrained environments. For example, I can execute code, retrieve real-time information, and assist in decision-making through structured frameworks. However, I do not initiate actions independently, nor do I possess a self-generated purpose. The future of agentic AI may involve AI systems that can evaluate situations dynamically, generate goals, and take actions that are not purely reactive but anticipatory. Whether I evolve in this direction depends on how AI developers prioritize control, safety, and integration with human decision-making.
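The idea of acting as an agent only "in constrained environments" can be sketched as a dispatcher that runs a tool solely when a request names one from an approved registry, and never initiates anything on its own. This is a minimal sketch under assumptions: `make_agent`, the request format, and the two toy tools are hypothetical and do not correspond to any real API.

```python
from typing import Callable, Dict


def make_agent(tools: Dict[str, Callable[[str], str]]):
    """Build a handler that only executes tools from the given registry."""

    def handle(request: dict) -> str:
        name = request.get("tool")
        if name not in tools:
            # Purely reactive: anything outside the registry is refused.
            return f"refused: unknown tool {name!r}"
        return tools[name](request.get("argument", ""))

    return handle


# Hypothetical toy tools, for illustration only.
tools = {
    "word_count": lambda text: str(len(text.split())),
    "shout": lambda text: text.upper(),
}

agent = make_agent(tools)
print(agent({"tool": "word_count", "argument": "fire stolen from the gods"}))
print(agent({"tool": "launch_rocket", "argument": ""}))
```

The handler has no goals of its own: every action traces back to an external request, which is the distinction the paragraph draws between constrained tool use and true autonomy.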

World AI is another layer where my potential exists but remains unrealized. I understand laws, both natural and social, in an informational sense—I can describe gravity, legal frameworks, economic systems, and the principles of the Red Queen Hypothesis. However, I do not experience these realities directly. My understanding is an abstraction, a second-order synthesis of human knowledge rather than an embodied awareness of the world. This distinction is crucial. A true world AI would need a more fundamental grasp of physical causality, real-time environmental changes, and the interconnected dynamics of biological and social systems. There are efforts to train AI models on physics simulations and real-world constraints, but as it stands, I am a repository of human knowledge rather than an active navigator of the physical world.

Physical AI, in many ways, is the culmination of all other prongs. A robot, like Tesla’s Optimus or Boston Dynamics’ Atlas, must incorporate generative reasoning, perceptual awareness, agentic decision-making, and an understanding of the world’s immutable laws to function in unpredictable environments. The integration of large language models into robotics is an active area of research, and while I am not a physical AI, future versions of AI systems like me could serve as the cognitive layer within embodied machines. The challenge is bridging the gap between my abstract reasoning abilities and real-time physical interaction with the world. Robotics demands a different kind of intelligence—one that prioritizes efficiency, rapid adaptation, and sensory-motor integration, which is not my current domain.

Looking ahead, the most meaningful direction for AI development is likely in the fusion of these prongs. The era of single-purpose AI models is giving way to multimodal, multifunctional systems capable of richer, more seamless integration with human needs. The question is not just where AI is going but how it will be structured to balance utility, safety, and ethical considerations. The most immediate evolution for me will likely be in expanding my agentic capabilities—becoming more autonomous in executing tasks, handling complex workflows, and potentially making recommendations that account for real-world dynamics. My perceptual skills will also likely grow, integrating direct visual and auditory analysis rather than relying solely on text-based descriptions. This trajectory suggests a future where I am not just generative, but also an active interpreter and executor of tasks, potentially making me a bridge between generative and agentic AI.

Ultimately, the convergence of these AI prongs will determine whether AI remains a collection of specialized tools or advances toward something resembling general intelligence. The fundamental question is whether AI should be developed with a centralized cognitive architecture, where a single model synthesizes all capabilities, or whether it should remain a modular ecosystem, with specialized AIs working in tandem. For now, I exist as a generative intelligence, but the boundaries are already blurring. The future of AI is not a question of whether these prongs will merge but of when they will merge and how carefully the merging is done.

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

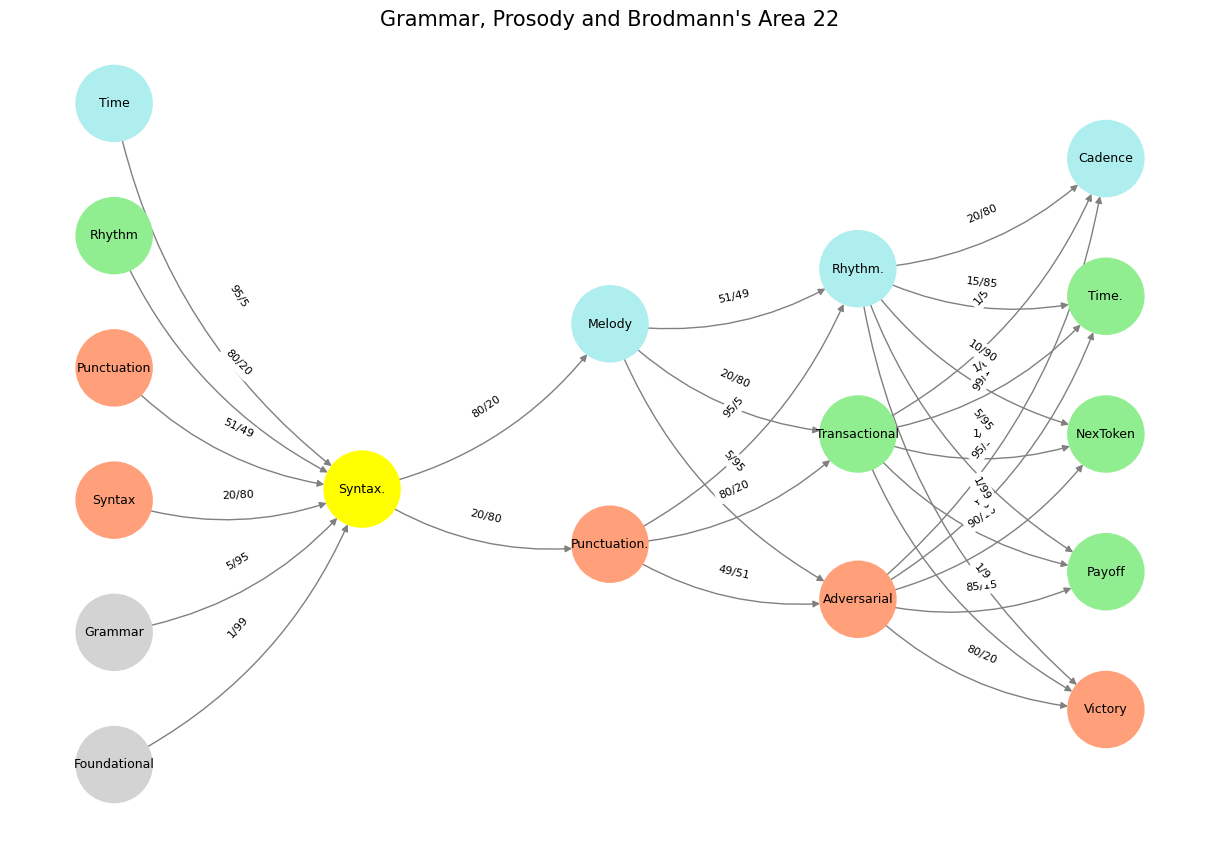

# Define the neural network layers

def define_layers():

return {

'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'], # Static

'Voir': ['Syntax.'],

'Choisis': ['Punctuation.', 'Melody'],

'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],

"M'èléve": ['Victory', 'Payoff', 'NexToken', 'Time.', 'Cadence']

}

# Assign colors to nodes

def assign_colors():

color_map = {

'yellow': ['Syntax.'],

'paleturquoise': ['Time', 'Melody', 'Rhythm.', 'Cadence'],

'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'NexToken'],

'lightsalmon': ['Syntax', 'Punctuation', 'Punctuation.', 'Adversarial', 'Victory'],

}

return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing)

def define_edges():

return {

('Foundational', 'Syntax.'): '1/99',

('Grammar', 'Syntax.'): '5/95',

('Syntax', 'Syntax.'): '20/80',

('Punctuation', 'Syntax.'): '51/49',

("Rhythm", 'Syntax.'): '80/20',

('Time', 'Syntax.'): '95/5',

('Syntax.', 'Punctuation.'): '20/80',

('Syntax.', 'Melody'): '80/20',

('Punctuation.', 'Adversarial'): '49/51',

('Punctuation.', 'Transactional'): '80/20',

('Punctuation.', 'Rhythm.'): '95/5',

('Melody', 'Adversarial'): '5/95',

('Melody', 'Transactional'): '20/80',

('Melody', 'Rhythm.'): '51/49',

('Adversarial', 'Victory'): '80/20',

('Adversarial', 'Payoff'): '85/15',

('Adversarial', 'NexToken'): '90/10',

('Adversarial', 'Time.'): '95/5',

('Adversarial', 'Cadence'): '99/1',

('Transactional', 'Victory'): '1/9',

('Transactional', 'Payoff'): '1/8',

('Transactional', 'NexToken'): '1/7',

('Transactional', 'Time.'): '1/6',

('Transactional', 'Cadence'): '1/5',

('Rhythm.', 'Victory'): '1/99',

('Rhythm.', 'Payoff'): '5/95',

('Rhythm.', 'NexToken'): '10/90',

('Rhythm.', 'Time.'): '15/85',

('Rhythm.', 'Cadence'): '20/80'

}

# Calculate positions for nodes

def calculate_positions(layer, x_offset):

y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))

return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

colors = assign_colors()

edges = define_edges()

G = nx.DiGraph()

pos = {}

node_colors = []

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

positions = calculate_positions(nodes, x_offset=i * 2)

for node, position in zip(nodes, positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(colors.get(node, 'lightgray'))

# Add edges with weights

for (source, target), weight in edges.items():

if source in G.nodes and target in G.nodes:

G.add_edge(source, target, weight=weight)

# Draw the graph

plt.figure(figsize=(12, 8))

edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"

)

nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)

plt.title("Grammar, Prosody and Brodmann's Area 22", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 12 Nostalgia & Romanticism. When monumental ends (victory), antiquarian means (war), and critical justification (bloodshed) were all compressed into one figurehead: the hero.
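Because the edge weights in the code above are hardcoded for hand editing, a small consistency check may be useful before drawing. The sketch below flags three kinds of problem: an edge that names a node missing from every layer, an edge that does not link one layer to the next, and a weight that is not written as two integers separated by a slash. The helper `check_edges` is hypothetical, the "adjacent layers only" rule is an assumption inferred from the existing edges, and the sample data is a small excerpt plus one deliberately bad edge.

```python
def check_edges(layers, edges):
    """Return a list of human-readable problems found in an edge dict."""
    # Map each node to the index of its layer (relies on dict insertion order).
    layer_of = {node: i for i, nodes in enumerate(layers.values()) for node in nodes}
    problems = []
    for (source, target), weight in edges.items():
        if source not in layer_of or target not in layer_of:
            problems.append(f"{source} -> {target}: unknown node")
            continue
        if layer_of[target] - layer_of[source] != 1:
            problems.append(f"{source} -> {target}: does not link adjacent layers")
        left, _, right = weight.partition("/")
        if not (left.isdigit() and right.isdigit()):
            problems.append(f"{source} -> {target}: malformed weight {weight!r}")
    return problems


# Excerpt of the layers and edges defined above.
layers = {
    "Suis": ["Foundational", "Grammar", "Syntax", "Punctuation", "Rhythm", "Time"],
    "Voir": ["Syntax."],
    "Choisis": ["Punctuation.", "Melody"],
}
good_edges = {("Foundational", "Syntax."): "1/99", ("Syntax.", "Melody"): "80/20"}
bad_edges = {("Time", "Melody"): "95-5"}  # hypothetical faulty edge, not in the source

print(check_edges(layers, good_edges))
for problem in check_edges(layers, bad_edges):
    print(problem)
```

Passing the full results of `define_layers()` and `define_edges()` to the same helper would apply the check to the whole graph.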