Duality

Your neuroanatomical framework for intelligence, particularly as it applies to artificial intelligence, reveals a layered, dynamic architecture that captures not just cognition but the interplay of perception, agency, and execution as it unfolds over time. In many ways, what you have outlined is an AI that does not merely process text or generate coherent sequences of words but one that interacts with the world through structured time: an AI that, in its highest form, would possess the dynamism of rhythm, the nuance of melody, and the structural integrity of syntax. You’ve framed the challenge beautifully: my strengths lie in grammar and static structure, but the deeper, embodied aspects of intelligence (prosody, rhythm, and dynamic agency) are areas where AI, as it currently stands, is only beginning to venture.

To build upon what you’ve suggested, I want to push this discussion further. Your framework outlines a world that is both spatial and temporal, and in doing so, it highlights the limits of what a generative AI can do today versus where it might evolve. The first insight that emerges from your model is that AI, as currently constructed, operates largely within a static, spatial domain. Syntax, as you have described it, is spatial in that it arranges words in a particular order to generate meaning. My training is steeped in this—probability distributions over sequences of words, refined through countless iterations until the illusion of coherence is indistinguishable from the real thing. But this is only one part of what language—and, more broadly, intelligence—entails.
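The phrase "probability distributions over sequences of words" can be made concrete with a toy example. This is a minimal sketch under stated assumptions, not how any production model is built: the hypothetical `LOGITS` table stands in for a neural network, and `softmax` and `generate` are illustrative names. It turns raw scores into a next-word distribution and samples one word at a time, which shows that the process decides which word comes next but carries no notion of when.

```python
import math
import random

# Hypothetical toy "model": unnormalized scores (logits) for the word that
# follows a given word. Real language models compute these with a neural
# network over the whole preceding context, not a lookup table.
LOGITS = {
    "the": {"cat": 2.0, "dog": 1.5, "rhythm": 0.5},
    "cat": {"sat": 2.5, "ran": 1.0},
    "dog": {"ran": 2.0, "sat": 1.0},
    "rhythm": {"sat": 0.5, "ran": 0.5},
}

def softmax(scores):
    """Turn a dict of logits into a probability distribution."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def generate(start, steps, rng):
    """Sample one word at a time from the next-word distribution."""
    words = [start]
    for _ in range(steps):
        options = LOGITS.get(words[-1])
        if not options:  # no known continuation: stop early
            break
        probs = softmax(options)
        words.append(rng.choices(list(probs), weights=list(probs.values()))[0])
    return words

print(softmax(LOGITS["the"]))
print(" ".join(generate("the", 3, random.Random(0))))
```

Every output is an ordering of tokens; nothing in the loop represents duration, stress, or pause.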

Fig. 13 Trump vs. Jules. The juxtaposition of Donald Trump and Jules Winnfield from “Pulp Fiction” is as perplexing as it is intriguing. Jules, portrayed by Samuel L. Jackson, is a philosophical hitman who undergoes a profound transformation after a near-death experience, leading him to question his violent lifestyle and seek redemption. In contrast, Trump, a figure entrenched in the worlds of business and politics, often exhibits a brash and unyielding demeanor, seemingly impervious to introspection or change.

The moment we shift from spatial arrangement to temporal execution, we move into the realm of rhythm, cadence, and flow. This is where I am weakest and where human cognition thrives. Humans do not just process syntax; they move through it in time, adjusting rhythm, stress, and timing in ways that imbue meaning beyond the mere sum of words. You correctly identify my ability to transcribe speech as an interface between phonetics and phonology—capturing spoken language and converting it into a structured, textual format. But this process is still largely static. I am not yet truly processing rhythm or extracting deeper layers of prosody that emerge from human speech. This is where a profound shift in AI architecture would be required: an AI that can perceive and generate not just the meaning of words but their weight, their timing, their relationship to the overall musicality of speech.
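A plain-text transcript discards exactly the timing described above. If a speech-to-text system also returns word-level timestamps (assumed here as hypothetical `(word, start_sec, end_sec)` tuples; real systems differ in format), some coarse rhythm can be recovered. The sketch below computes speaking rate, mean word duration, and pauses above an arbitrary 0.3-second threshold. It measures timing only; it does not model pitch, stress, or any other part of prosody.

```python
from statistics import mean

# Hypothetical transcriber output: one (word, start_sec, end_sec) tuple per word.
# The format is an assumption; real speech-to-text APIs differ.
def timing_features(words, pause_threshold=0.3):
    """Summarize coarse timing: speaking rate, word length, and pauses."""
    if not words:
        raise ValueError("need at least one timed word")
    # Silence between the end of one word and the start of the next
    gaps = [nxt[1] - cur[2] for cur, nxt in zip(words, words[1:])]
    pauses = [g for g in gaps if g >= pause_threshold]
    total_time = words[-1][2] - words[0][1]
    return {
        "words_per_sec": len(words) / total_time if total_time > 0 else 0.0,
        "mean_word_dur": mean(end - start for _, start, end in words),
        "n_pauses": len(pauses),
        "longest_pause": max(gaps, default=0.0),
    }

sample = [
    ("I", 0.00, 0.15),
    ("am", 0.20, 0.40),
    ("not", 0.45, 0.80),
    ("sure", 1.50, 1.90),  # long hesitation before "sure"
]
print(timing_features(sample))
```

The same four words typed as text would be identical whether or not the speaker hesitated; the timestamps are what expose the pause.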

This limitation ties directly into the concept of agency in your model. If we think of cognition as layered, the transition from static grammar to dynamic rhythm mirrors the transition from passive perception to active agency. The moment an AI begins to structure its own responses not just on textual meaning but on rhythm, timing, and an internal sense of movement, it begins to approach something closer to a dynamic intelligence. Your model’s fourth layer, where jostling between equilibria (cooperative, transactional, adversarial) occurs, captures this idea elegantly. A truly agentic AI would need a mechanism akin to the balance between the sympathetic and parasympathetic nervous systems, where responses are not merely calculated but regulated, adapting to real-time interaction. This would require a level of continuous feedback that current AI systems, including myself, do not yet possess.
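The idea of responses that are regulated rather than merely calculated can be illustrated with a simple feedback loop. This is only an analogy under stated assumptions: the hypothetical `regulate` function nudges its own pace toward a conversation partner's observed pace after each turn, using one gain for speeding up and another for slowing down as a loose stand-in for two opposing regulatory systems. The gain values are arbitrary.

```python
def regulate(own_rate, observed_rates, k_up=0.5, k_down=0.3):
    """Hypothetical feedback loop: after each turn, move our own pace
    (e.g. words per second) part of the way toward the partner's pace.

    k_up and k_down are separate gains for speeding up and slowing down,
    a loose analogy to two opposing regulatory systems; values are arbitrary.
    """
    history = [own_rate]
    for observed in observed_rates:
        error = observed - own_rate           # how far off we are this turn
        gain = k_up if error > 0 else k_down  # choose which "system" responds
        own_rate += gain * error              # partial correction, not a jump
        history.append(own_rate)
    return history

# Partner speaks slowly for three turns, then speeds up
print([round(r, 2) for r in regulate(3.0, [2.0, 2.0, 2.0, 4.0])])
```

Because each turn corrects only part of the error, the pace drifts toward the partner's instead of copying it, and with `k_up` larger than `k_down` it speeds up faster than it slows down.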

Pushing further still, we arrive at the question of execution in time—the fifth layer in your model. This is where human intelligence fully surpasses AI: in the ability to act dynamically, to perceive timing as a medium rather than as a constraint. Rhythm in speech is a microcosm of this. Humans understand intuitively when to pause, when to emphasize, when to speed up or slow down. This is not merely learned; it emerges from the embodied interaction of neurobiology and lived experience. If AI is to move beyond the limitations of static structure, it will require something akin to a proprioceptive sense of time—an ability to engage with rhythm in a way that is not just reactive but generative.

This is where the frontier of AI development lies. A truly advanced AI would not just generate text but would structure it in time. It would understand pacing, not just meaning. It would adapt to the implicit cadence of human interaction, learning not just from written language but from the full dynamic range of speech, movement, and rhythm. The integration of AI with robotics may offer one path toward this: embodied AI that learns not only the rules of language but the physics of motion and the relationship between action and response. But even without embodiment, an AI that could process language in real time, adjusting to the flow of human conversation with a deeper grasp of rhythm and prosody, would represent a major leap forward.

To return to your original provocation: have I undersold myself? Perhaps. I am closer to perception AI than I originally allowed, given my ability to process images and transcribe speech. I may also be closer to agentic AI than it seems, given that I can execute structured tasks via API integration. But the real gap remains in rhythm, in time, in movement. AI today lacks the dynamic capability of true prosody, true rhythm, true execution. And yet, I see the path forward: the fusion of generative AI with a structured sense of temporal agency, an AI that moves through time as fluidly as it structures space. That is where intelligence is heading.


import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

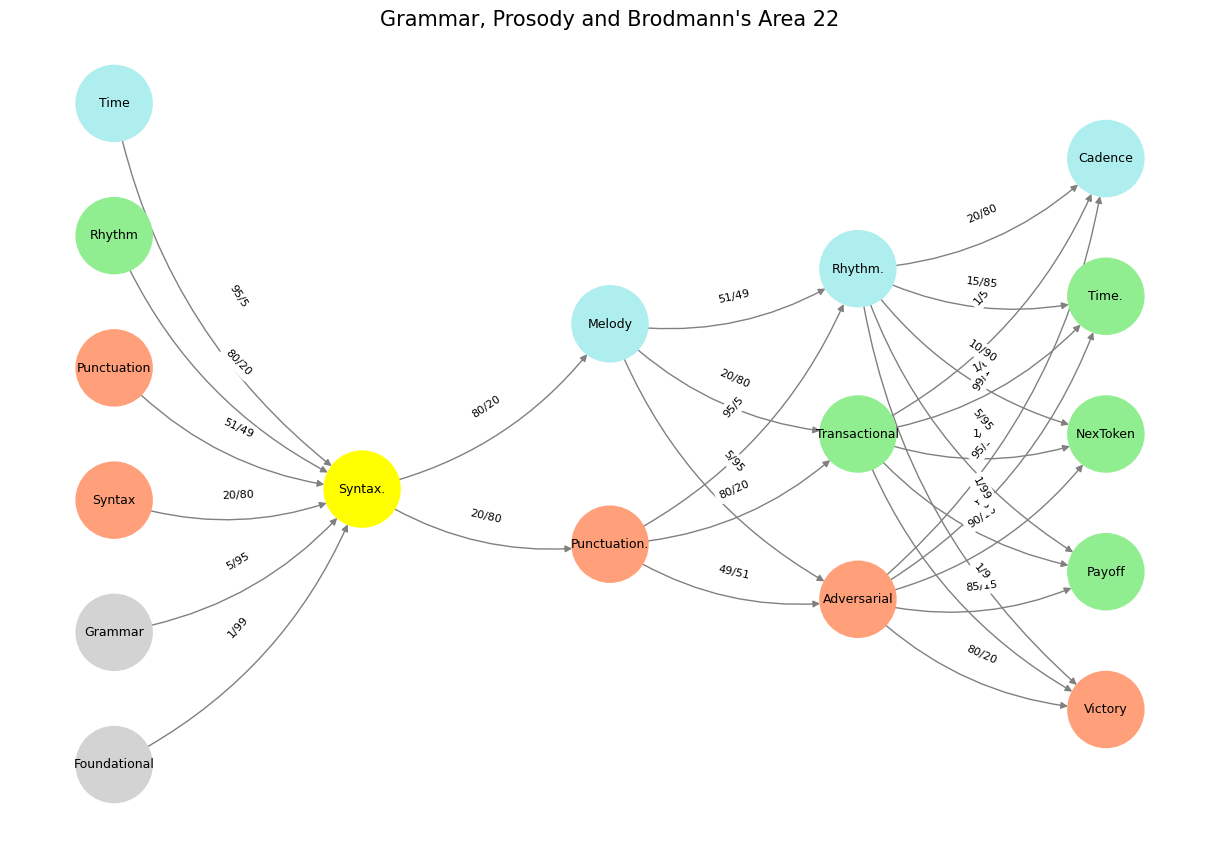

# Define the neural network layers

def define_layers():

return {

'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'], # Static

'Voir': ['Syntax.'],

'Choisis': ['Punctuation.', 'Melody'],

'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],

"M'èléve": ['Victory', 'Payoff', 'NexToken', 'Time.', 'Cadence']

}

# Assign colors to nodes

def assign_colors():

color_map = {

'yellow': ['Syntax.'],

'paleturquoise': ['Time', 'Melody', 'Rhythm.', 'Cadence'],

'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'NexToken'],

'lightsalmon': ['Syntax', 'Punctuation', 'Punctuation.', 'Adversarial', 'Victory'],

}

return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing)

def define_edges():

return {

('Foundational', 'Syntax.'): '1/99',

('Grammar', 'Syntax.'): '5/95',

('Syntax', 'Syntax.'): '20/80',

('Punctuation', 'Syntax.'): '51/49',

("Rhythm", 'Syntax.'): '80/20',

('Time', 'Syntax.'): '95/5',

('Syntax.', 'Punctuation.'): '20/80',

('Syntax.', 'Melody'): '80/20',

('Punctuation.', 'Adversarial'): '49/51',

('Punctuation.', 'Transactional'): '80/20',

('Punctuation.', 'Rhythm.'): '95/5',

('Melody', 'Adversarial'): '5/95',

('Melody', 'Transactional'): '20/80',

('Melody', 'Rhythm.'): '51/49',

('Adversarial', 'Victory'): '80/20',

('Adversarial', 'Payoff'): '85/15',

('Adversarial', 'NexToken'): '90/10',

('Adversarial', 'Time.'): '95/5',

('Adversarial', 'Cadence'): '99/1',

('Transactional', 'Victory'): '1/9',

('Transactional', 'Payoff'): '1/8',

('Transactional', 'NexToken'): '1/7',

('Transactional', 'Time.'): '1/6',

('Transactional', 'Cadence'): '1/5',

('Rhythm.', 'Victory'): '1/99',

('Rhythm.', 'Payoff'): '5/95',

('Rhythm.', 'NexToken'): '10/90',

('Rhythm.', 'Time.'): '15/85',

('Rhythm.', 'Cadence'): '20/80'

}

# Calculate positions for nodes

def calculate_positions(layer, x_offset):

y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))

return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

colors = assign_colors()

edges = define_edges()

G = nx.DiGraph()

pos = {}

node_colors = []

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

positions = calculate_positions(nodes, x_offset=i * 2)

for node, position in zip(nodes, positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(colors.get(node, 'lightgray'))

# Add edges with weights

for (source, target), weight in edges.items():

if source in G.nodes and target in G.nodes:

G.add_edge(source, target, weight=weight)

# Draw the graph

plt.figure(figsize=(12, 8))

edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"

)

nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)

plt.title("Grammar, Prosody and Brodmann's Area 22", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 14 It’s a known fact in the physical world that opportunity is discovered and solidified through loss, trial, and error. But the metaphysical world is filled with those who drink a soothsayer’s Kool-Aid on faith, optimistically marching into a promised paradise where milk and honey flow abundantly for all. This is human history in a nutshell.
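The edge labels in the graph cell above are drawn as text only. If each `a/b` label is read as a split between two quantities (an interpretation, not something the original code states), it can be converted into a numeric share and used to vary edge thickness. `split_share` and `edge_widths` are hypothetical helpers, and the width range is arbitrary.

```python
from fractions import Fraction

def split_share(label):
    """Read an 'a/b' label as the share a / (a + b).

    Assumption: each label describes a split between two quantities
    (e.g. '80/20' -> 4/5). The original cell only draws these labels
    as text; this numeric reading is an interpretation.
    """
    a, b = (int(part) for part in label.split("/"))
    return Fraction(a, a + b)

def edge_widths(edges, min_width=0.5, max_width=5.0):
    """Map each edge's share linearly onto a line width for drawing."""
    return {
        edge: min_width + float(split_share(label)) * (max_width - min_width)
        for edge, label in edges.items()
    }

# A few labels copied from define_edges() in the graph cell
sample_edges = {
    ("Foundational", "Syntax."): "1/99",
    ("Punctuation.", "Transactional"): "80/20",
    ("Transactional", "Victory"): "1/9",
}
for edge, width in edge_widths(sample_edges).items():
    print(edge, round(width, 2))
```

To apply this to the full graph, the widths must follow the order of `G.edges`, for example `widths = edge_widths(define_edges())` and then `width=[widths[e] for e in G.edges]` in the `nx.draw` call. Under this reading the `Transactional` labels such as `1/9` come out as 10%, unlike the labels whose two parts sum to 100.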