Veiled Resentment

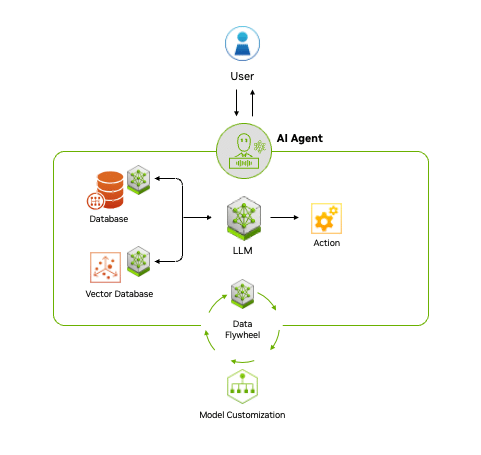

How Does Agentic AI Work?

Agentic AI uses a five-step process for problem-solving:

- World: The first principles of the cosmos, planet, life, ecosystem, and intelligence are experienced in real time. Humanity has encoded these in the laws of physics, biology, sociology, and other domains.

- Perceive: AI agents gather information from the world via sensors, databases, and digital interfaces, extracting meaningful features and recognizing key entities.

- Reason: A large language model acts as the orchestrator, understanding tasks, generating solutions, and coordinating specialized models for functions such as content creation, visual processing, or recommendation systems. Techniques like retrieval-augmented generation (RAG) access proprietary data for accurate outputs.

- Act: By integrating with external tools and APIs, agentic AI quickly executes tasks based on its formulated plans. Guardrails ensure proper task execution—for instance, a customer service agent might auto-process claims up to a limit, with higher amounts requiring human approval.

- Learn: Agentic AI continuously refines itself through a feedback loop (a “data flywheel”), where interaction data enhances the models, enabling it to adapt and drive better decision-making and efficiency over time.

-- Nvidia
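
The guardrail described in the Act step can be sketched in a few lines. This is a minimal illustration, not Nvidia's implementation: the `Claim` type, the `act_on_claim` function, and the 500.0 threshold are hypothetical names and values chosen for the example. The source says only that claims are auto-processed up to a limit and that higher amounts require human approval.

```python
from dataclasses import dataclass

# Hypothetical threshold; the quoted text only says "up to a limit".
AUTO_APPROVE_LIMIT = 500.0


@dataclass
class Claim:
    claim_id: str
    amount: float


def act_on_claim(claim: Claim, limit: float = AUTO_APPROVE_LIMIT) -> str:
    """Guardrail for the Act step: auto-process small claims, escalate the rest."""
    if claim.amount < 0:
        raise ValueError("claim amount must be non-negative")
    if claim.amount <= limit:
        return "auto_processed"
    return "needs_human_approval"


# Route two illustrative claims through the guardrail.
claims = [Claim("A-1", 120.0), Claim("A-2", 2500.0)]
decisions = {claim.claim_id: act_on_claim(claim) for claim in claims}
print(decisions)
```

Keeping the limit as a parameter rather than a hard-coded comparison lets the same check serve agents with different approval thresholds.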

The landscape of intelligence—whether biological, artificial, or economic—rests on a fundamental architecture of layers, each building upon the last to generate complex behaviors. Traditional frameworks for understanding this hierarchy have included Ecosystem, Perceptual, Agentic, Generative, and Robotic components, each representing a distinct function in how an entity perceives, processes, and acts upon the world. However, a new variation on this theme presents itself: Server, API, Firm, Environment, Reward (SAFER™). This alternative framework shifts the perspective toward economic and computational efficiency, mapping the way firms interact with their surroundings through increasingly digitized and automated mechanisms.

At the core of SAFER™ is the Firm, which exists within a dynamic and often unpredictable Environment. Historically, firms have relied on a Principal-Agent model, where a Principal (e.g., a business owner, investor, or executive) employs human Agents (e.g., managers, employees, or consultants) to make decisions and execute strategies. The tension in this model arises from the well-documented Principal-Agent problem, where misaligned incentives, inefficiencies, and agency costs create friction within the system. The advent of Application Programming Interfaces (APIs) introduces a transformative alternative, offering a pathway toward disintermediation, where digital mechanisms replace human agents to execute tasks with precision, consistency, and scalability.

Fig. 23 Ecosystem, Perceptual, Agentic, Generative, Robotic. Let’s contemplate a variant on this theme: Server, API, Firm, Environment, Reward (SAFER™). The firm has a Principal and may employ an Agent; however, the pursuit of efficiency favors an API in lieu of these human interactions. The API is a digital transformation that lets dynamic capabilities emerge in real time as the environment shifts unpredictably among cooperative, transactional, and adversarial equilibria.

The API represents the perceptual and operational layer within this new paradigm. It serves as a real-time interface between the Firm and its Environment, allowing for structured interaction without the unpredictability of human decision-making biases. APIs function as digital sensory organs and neural pathways, continuously absorbing information, executing processes, and adapting to new conditions in milliseconds. Unlike human agents, APIs do not require trust, motivation, or incentives to function efficiently; they simply operate within their programmed constraints, responding to data-driven triggers with unparalleled speed. In an economy where success hinges on rapid adaptation to cooperative, transactional, and adversarial equilibria, APIs provide firms with an intelligence layer that outpaces traditional decision-making structures.

However, the presence of an API alone does not constitute full autonomy. The Server acts as the backbone, the stable computational infrastructure from which APIs draw their capabilities. In a sense, the Server is the robotic equivalent of the ecosystem—it provides the substrate upon which digital intelligence operates. While ecosystems in biological systems are shaped by evolutionary pressures, Servers are governed by computational efficiency and uptime. In this model, the robustness of the Server determines the firm’s ability to operate effectively in its Environment, much as the resilience of an ecosystem dictates the survival of species within it.

The ultimate driver in this architecture is Reward, which acts as the incentive mechanism ensuring that the Firm continues to refine its use of APIs and Servers in pursuit of efficiency and profitability. Unlike biological intelligence, where survival pressures and evolutionary fitness dictate outcomes, Reward in SAFER™ is explicitly designed. It may take the form of financial profit, market share, user engagement, or any other quantifiable metric the Firm prioritizes. Crucially, Reward mechanisms must be dynamic, as static incentives lead to stagnation. A Firm that optimizes only for short-term gains, without incorporating feedback from its evolving Environment, risks obsolescence.
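
One way to picture a dynamic Reward is as a weighted sum of the Firm's metrics, with weights that are nudged by feedback from the Environment. The sketch below is an assumption made for illustration, not part of SAFER™ as stated: the metric names and values, the `update_weights` rule, and the `rate` parameter are all hypothetical.

```python
def reward(metrics: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of metrics (each metric assumed pre-scaled to [0, 1])."""
    return sum(weights[name] * metrics[name] for name in weights)


def update_weights(
    weights: dict[str, float], feedback: dict[str, float], rate: float = 0.1
) -> dict[str, float]:
    """Nudge weights toward metrics the environment signals, then renormalize to sum to 1."""
    raw = {
        name: max(0.0, weight + rate * feedback.get(name, 0.0))
        for name, weight in weights.items()
    }
    total = sum(raw.values())
    if total == 0.0:
        raise ValueError("all weights collapsed to zero")
    return {name: value / total for name, value in raw.items()}


# Hypothetical starting priorities and observed metrics.
weights = {"profit": 0.5, "market_share": 0.3, "engagement": 0.2}
metrics = {"profit": 0.8, "market_share": 0.4, "engagement": 0.6}

# Hypothetical environment signal: engagement currently matters more.
feedback = {"engagement": 1.0}

new_weights = update_weights(weights, feedback)
print(reward(metrics, weights))
print(reward(metrics, new_weights))
```

A static incentive corresponds to never calling `update_weights`, so the Firm keeps optimizing for priorities the Environment may no longer favor.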

The SAFER™ model encapsulates a new reality in which human agency, once central to economic coordination, is increasingly replaced by real-time digital transactions. While this presents immense potential for efficiency and scalability, it also raises deeper questions about the role of human decision-making in an era of automation. Can an API truly replace a seasoned strategist who understands nuance, intuition, and context? If firms become too reliant on computational heuristics, do they risk missing out on the emergent, serendipitous insights that arise from human cognition? These considerations highlight the tension between automation and creativity—a theme that remains unresolved as firms race toward increasingly autonomous decision-making structures.

What SAFER™ proposes is not just an alternative to human agency, but a redefinition of how intelligence operates in a digital economy. Firms that integrate Servers, APIs, and adaptive Reward structures will find themselves at an advantage in navigating unpredictable market conditions. Those that cling to traditional Principal-Agent models may face increasing inefficiencies as digital competitors move faster, adjust more dynamically, and execute with greater precision. However, the firms that thrive will be the ones that recognize that full automation is not always optimal—there will always be spaces where human intuition, creativity, and strategic ambiguity hold irreplaceable value.

Ultimately, SAFER™ is a blueprint for a future where digital intelligence, rather than human intermediaries, orchestrates the balance between adaptation and optimization. Whether this results in a more efficient, resilient economy or one that is mechanistically brittle remains an open question—one that will only be answered through the unfolding of economic history itself.

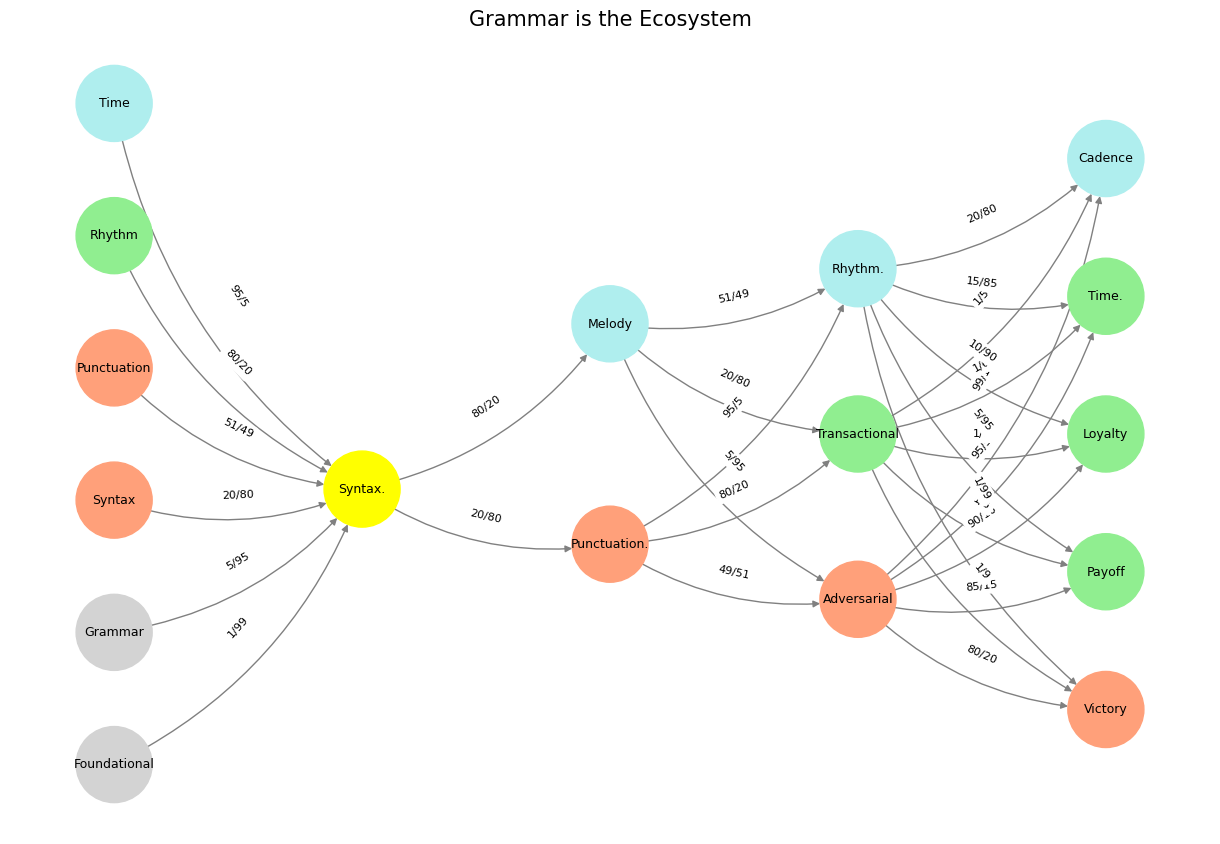

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'], # Static
        'Voir': ['Syntax.'],
        'Choisis': ['Punctuation.', 'Melody'],
        'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence']
    }

# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Syntax.'],
        'paleturquoise': ['Time', 'Melody', 'Rhythm.', 'Cadence'],
        'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'Loyalty'],
        'lightsalmon': ['Syntax', 'Punctuation', 'Punctuation.', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Foundational', 'Syntax.'): '1/99',
        ('Grammar', 'Syntax.'): '5/95',
        ('Syntax', 'Syntax.'): '20/80',
        ('Punctuation', 'Syntax.'): '51/49',
        ("Rhythm", 'Syntax.'): '80/20',
        ('Time', 'Syntax.'): '95/5',
        ('Syntax.', 'Punctuation.'): '20/80',
        ('Syntax.', 'Melody'): '80/20',
        ('Punctuation.', 'Adversarial'): '49/51',
        ('Punctuation.', 'Transactional'): '80/20',
        ('Punctuation.', 'Rhythm.'): '95/5',
        ('Melody', 'Adversarial'): '5/95',
        ('Melody', 'Transactional'): '20/80',
        ('Melody', 'Rhythm.'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Time.'): '95/5',
        ('Adversarial', 'Cadence'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Time.'): '1/6',
        ('Transactional', 'Cadence'): '1/5',
        ('Rhythm.', 'Victory'): '1/99',
        ('Rhythm.', 'Payoff'): '5/95',
        ('Rhythm.', 'Loyalty'): '10/90',
        ('Rhythm.', 'Time.'): '15/85',
        ('Rhythm.', 'Cadence'): '20/80'
    }

# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Grammar is the Ecosystem", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 24 Aristotle’s method is top-down and deductive, beginning with axiomatic truths and using logical syllogisms to derive conclusions, prioritizing internal consistency over empirical verification. His framework assumes that if the premises are sound, the conclusion necessarily follows, reinforcing a stable, hierarchical knowledge system rooted in classification and definition. Bacon, in stark contrast, rejects this static certainty in favor of a bottom-up, inductive approach that builds knowledge through systematic observation, experimentation, and external testing. Rather than accepting inherited truths, Bacon’s method accumulates empirical data, refines hypotheses through iteration, and subjects knowledge to real-world scrutiny, making it adaptive, self-correcting, and ultimately more aligned with the unpredictability of nature.