Resilience 🗡️❤️💰

Billionaire Elon Musk on Monday said his startup xAI's latest iteration of the Grok chatbot could help college basketball fans pick a perfect bracket once March Madness begins.

xAI rolled out its new chatbot Grok-3, which is powered by artificial intelligence (AI), on Monday night, and during its event the company's lead researcher, Jimmy Paul, noted, "We can also do something more fun. For example, how about make a prediction about March Madness?"

"This is kind of a fun one where Warren Buffett has a billion bet that if you exactly match the entire winning tree of March Madness, you can win a billion dollars from Warren Buffett," Musk said in reference to the NCAA Basketball tournament bracket.

"It would be pretty cool if AI could help you win a billion dollars from Buffett. It seems like a pretty good investment," he added.

-- Fox Business

Warren Buffett’s confidence in offering a billion-dollar prize for a perfect March Madness bracket is not misplaced bravado—it is the product of his deep understanding of probability, human psychology, and the nature of competitive games. Within your classification of games, this challenge falls squarely in the realm of pure probability, but with a deceptive illusion of strategy and knowledge—which is precisely why Buffett can offer such a ludicrous sum with full confidence that he will never have to pay.

The probability of correctly predicting every single outcome in the NCAA tournament is an absurdly low 1 in 9.2 quintillion (1 in 2^63 for the 63 games of a 64-team bracket), assuming random guessing. Even with expert knowledge (factoring in rankings, team dynamics, and historical trends), optimistic estimates place the odds closer to 1 in a few billion. That is still longer than the roughly 1-in-292-million odds of hitting a single Powerball jackpot. Buffett, the ultimate investor, is not engaging in charity; he is making a bet on human overconfidence. The challenge appears winnable to those who mistake knowledge for certainty, but in reality, it is a probabilistic abyss so deep that even the best statistical models cannot reliably cross it.
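To make those orders of magnitude concrete, here is a minimal sketch that computes the random-guess odds and back-solves the per-game accuracy implied by a "1 in N" estimate. It assumes a 64-team field (63 games), treats games as independent with one uniform accuracy, and uses 2 billion purely as an illustrative stand-in for "a few billion"; the function names are hypothetical.

```python
import math

GAMES = 63  # a 64-team single-elimination bracket has 63 games


def random_guess_odds(games: int = GAMES) -> int:
    """Number of equally likely brackets when every game is a coin flip."""
    return 2 ** games


def implied_per_game_accuracy(one_in_n: float, games: int = GAMES) -> float:
    """Uniform per-game accuracy p such that p**games == 1 / one_in_n.

    Assumes independent games, which real tournaments are not.
    """
    return math.exp(-math.log(one_in_n) / games)


if __name__ == "__main__":
    print(f"Random guessing: 1 in {random_guess_odds():,}")
    p = implied_per_game_accuracy(2e9)  # illustrative value, not a sourced estimate
    print(f"1-in-2-billion odds imply about {p:.1%} accuracy per game")
```

Under these assumptions, an expert-level bracket corresponds to getting roughly seven games in ten right, which shows how quickly even good per-game accuracy decays over 63 consecutive picks.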

Now, Musk’s assertion that AI—particularly xAI’s Grok-3—could help crack this challenge is a provocation worth dissecting. If we map it to your five-layer neural network framework, the crux of the problem sits in how information is processed across time and adversarial domains.

At the Suis (Foundational) level, Grok-3 will operate using historical NCAA data, team statistics, injury reports, and ranking algorithms. This is basic syntax—the raw material for making an informed decision. It is akin to understanding the grammar of a game but not yet mastering its rhythm.

Moving into Voir (Syntax) and Choisis (Punctuation & Melody), AI can refine the structure of its decision-making. It can model team strengths against weaknesses, calculate matchups, and analyze playing styles to create higher-weighted probabilities of success. This is where most human bracketologists max out—leveraging expert insight but remaining tethered to statistical uncertainty.

However, the real battleground is at the Deviens (Adversarial & Transactional) layer, where AI confronts the intrinsic chaos of March Madness. College basketball is not a deterministic system like chess—it contains injuries, referee biases, momentum swings, and psychological factors that defy clean probability models. AI cannot account for these black swan events with precision; it can only approximate. And herein lies Buffett’s ultimate advantage: he is betting on uncertainty that cannot be compressed. Unlike the stock market, which trends toward equilibrium over time, March Madness is a volatile, single-elimination crucible where randomness reigns supreme.

Finally, at M’élève (Victory & Cadence), the question is not whether AI can generate a good bracket—it surely can—but whether it can generate the perfect bracket. Musk’s framing is tantalizing but misleading. Even if Grok-3 creates a bracket far superior to any human attempt, the gap between excellence and perfection is where Buffett’s confidence lies. A machine can optimize strategy, but it cannot brute-force its way through a 1-in-9-quintillion probability wall.

Thus, Buffett is not worried. He understands that this game, despite its deceptive layers of strategy and knowledge, remains one of maximal uncertainty, masquerading as something solvable. Musk, on the other hand, is playing a different game entirely—leveraging AI’s increasing influence to stoke public imagination. Buffett bets on probabilistic reality, while Musk bets on the mythos of AI inevitability.

Buffett will win this bet, but Musk will extract value in other ways—by embedding his AI into the public psyche as the ultimate oracle, even in domains where chaos still reigns.

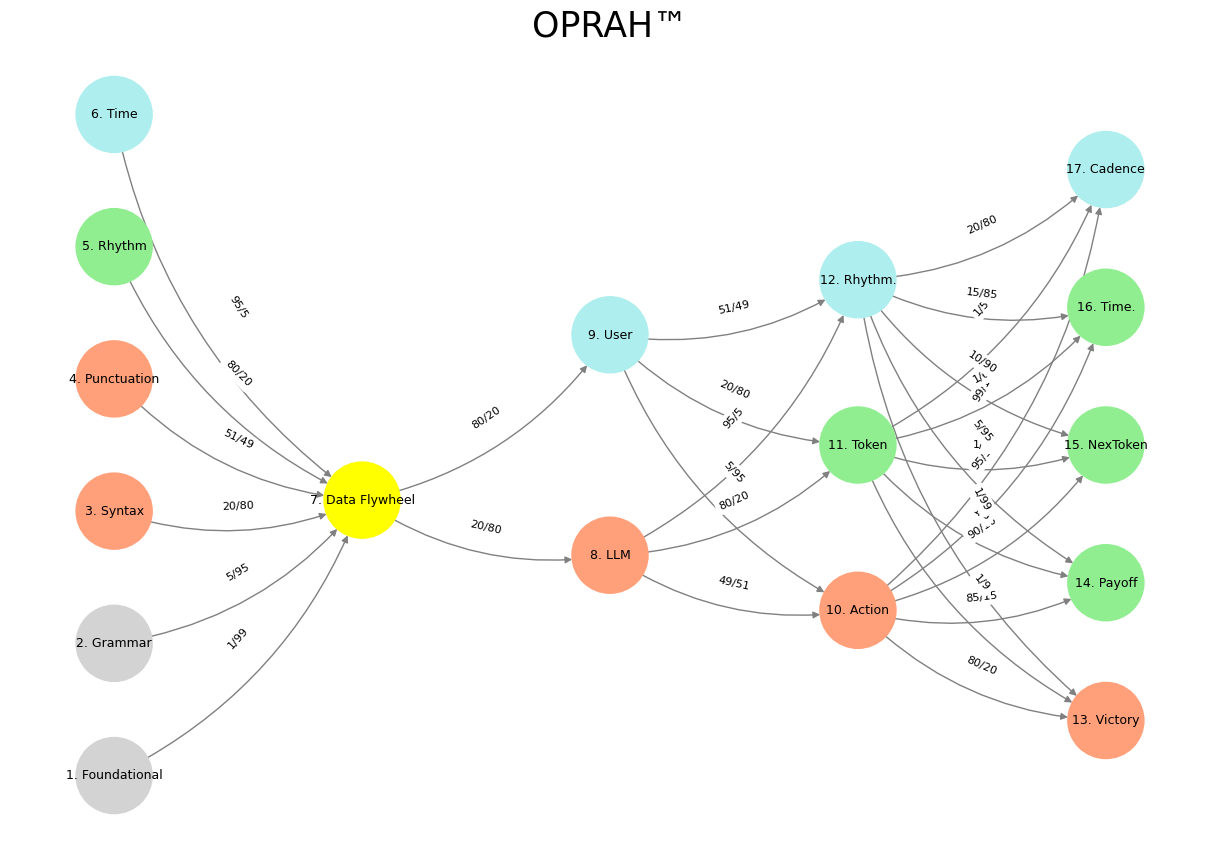

Fig. 8 Massive Combinatorial Search Space. The visual above affirms that this challenge meets one of three criteria to make it an AI-worthy challenge. Second is an optimizing function, which I presume to be the final two teams. As for a lot of real or synthetic data, one wonders what that might look like.

An AI-worthy challenge must satisfy three fundamental criteria: a massive combinatorial search space, an optimizing function, and a rich supply of real or synthetic data. The first criterion is evident in the NCAA bracket itself. With 2^63 possible outcomes in a single-elimination tournament, the sheer number of permutations presents an intractable problem for brute-force solutions. Unlike chess or Go, where AI has conquered human grandmasters through tree-search optimizations and reinforcement learning, March Madness introduces a combinatorial explosion of randomness that defies traditional game-solving techniques. This places the challenge into the domain of probabilistic optimization rather than deterministic mastery. It is not a puzzle to be solved but a space to be navigated with probability weighting, which remains an active frontier for AI.
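The size of that search space can be sketched in a few lines. The example below counts brackets for any power-of-two field and estimates how long exhaustive enumeration would take; the rate of one billion brackets per second is an arbitrary assumption, and the function names are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def bracket_count(teams: int) -> int:
    """Possible brackets for a single-elimination field of `teams` (a power of two)."""
    if teams < 2 or teams & (teams - 1):
        raise ValueError("teams must be a power of two, at least 2")
    return 2 ** (teams - 1)  # teams - 1 games, two outcomes each


def years_to_enumerate(teams: int, brackets_per_second: float) -> float:
    """Years needed to list every bracket at an assumed enumeration rate."""
    return bracket_count(teams) / brackets_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"64-team brackets: {bracket_count(64):,}")
    print(f"Years at 1e9 brackets/s: {years_to_enumerate(64, 1e9):.0f}")
```

Enumeration is beside the point in practice, since only the one submitted bracket counts; the sketch is only meant to convey scale.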

The second criterion, an optimizing function, is conceptually present but hazier in its definition. Unlike deterministic games where the objective function is clear—checkmate in chess or maximizing reward in a game like Atari—bracket prediction operates on conditional probability cascades. The ultimate goal might be selecting the final two teams, or even the final champion, but each game in the tournament is its own micro-optimization problem, creating a deeply nested function. If the function were simply “maximize likelihood of picking the winner,” AI could apply robust Bayesian methods to simulate likely champions. But the problem extends beyond the final game—the correct bracket requires accuracy at every decision node, meaning failure anywhere in the chain collapses the entire prediction model. In a way, this makes bracket optimization even more adversarial than stock market predictions, as there is no portfolio diversification—only binary survival at every step.
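The "binary survival at every step" idea can be illustrated with a small sketch. It contrasts two candidate objective functions (expected number of correct picks versus the probability that every pick is correct) and tracks how the chance of a still-perfect bracket shrinks round by round. Independence between games and a uniform per-game accuracy are simplifying assumptions, and all names are hypothetical.

```python
import math

ROUND_SIZES = [32, 16, 8, 4, 2, 1]  # games per round in a 64-team bracket


def expected_correct(pick_probs):
    """Expected number of correct picks (linearity of expectation)."""
    return sum(pick_probs)


def perfect_probability(pick_probs):
    """Probability that every pick is right, assuming independent games."""
    return math.prod(pick_probs)


def survival_by_round(per_game_accuracy, round_sizes=ROUND_SIZES):
    """Chance the bracket is still perfect after each completed round."""
    surviving, curve = 1.0, []
    for games in round_sizes:
        surviving *= per_game_accuracy ** games
        curve.append(surviving)
    return curve


if __name__ == "__main__":
    probs = [0.75] * 63  # illustrative accuracy, not an empirical figure
    print(f"Expected correct picks: {expected_correct(probs):.2f} of 63")
    print(f"Perfect-bracket probability: {perfect_probability(probs):.3e}")
    for rnd, s in enumerate(survival_by_round(0.75), start=1):
        print(f"Still perfect after round {rnd}: {s:.3e}")
```

A bracket can score well on the first objective while being almost certain to fail the second, which is the gap between a good bracket and a perfect one.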

The third criterion, a rich supply of real or synthetic data, is the most ambiguous in this context. AI thrives when data is abundant, structured, and repeatable, allowing models to learn and refine themselves over time. Historical NCAA data provides a foundation—win/loss records, team rankings, player stats, injury reports, coach strategies—but the high variance in player turnover year to year makes NCAA modeling inherently more unstable than professional leagues. Unlike a game like poker, where AI can simulate billions of hands to learn strategy, college basketball has a relatively small dataset, and the conditions are non-stationary. This is where synthetic data might come into play—AI could generate millions of simulated March Madness tournaments based on probabilistic models, but the problem is that real-world chaos resists perfect modeling. Unlike protein folding, where DeepMind’s AlphaFold cracked the code by leveraging vast synthetic simulations alongside real-world data, March Madness has too many unknown unknowns: referee bias, hot streaks, psychological momentum, and game-time injuries that no model can fully predict.
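As a rough illustration of what synthetic tournament data could look like, the sketch below simulates single-elimination tournaments from made-up team ratings. Everything here is an assumption for illustration: the ratings are random draws, the logistic win model is a generic Bradley-Terry-style choice rather than anything Grok-3 is known to use, and the seeding simply pairs adjacent teams.

```python
import math
import random
from collections import Counter


def win_probability(rating_a, rating_b, scale=1.0):
    """Logistic (Bradley-Terry style) chance that team A beats team B."""
    return 1.0 / (1.0 + math.exp(-(rating_a - rating_b) / scale))


def play_bracket(ratings, pick_winner):
    """Run one bracket; return the winner of every game in playing order."""
    field = list(range(len(ratings)))  # adjacent teams meet in round one
    winners = []
    while len(field) > 1:
        next_round = []
        for a, b in zip(field[::2], field[1::2]):
            winner = pick_winner(a, b)
            next_round.append(winner)
            winners.append(winner)
        field = next_round
    return winners


def simulate_tournament(ratings, rng):
    """Sample one tournament outcome from the win model."""
    def sample(a, b):
        return a if rng.random() < win_probability(ratings[a], ratings[b]) else b
    return play_bracket(ratings, sample)


def chalk_bracket(ratings):
    """Bracket that always picks the higher-rated team."""
    return play_bracket(ratings, lambda a, b: a if ratings[a] >= ratings[b] else b)


def run(n_sims=10_000, teams=64, seed=0):
    """Simulate many tournaments; count champions and perfect chalk brackets."""
    rng = random.Random(seed)
    ratings = [rng.gauss(0.0, 1.0) for _ in range(teams)]  # synthetic strengths
    chalk = chalk_bracket(ratings)
    champions, perfect = Counter(), 0
    for _ in range(n_sims):
        results = simulate_tournament(ratings, rng)
        champions[results[-1]] += 1
        perfect += results == chalk
    return ratings, champions, perfect


if __name__ == "__main__":
    _, champions, perfect = run()
    print("Most frequent champions:", champions.most_common(3))
    print("Simulations where the chalk bracket was perfect:", perfect)
```

Even in this idealized world, where the model generating the games is known exactly, the favorite-only bracket should almost never come out perfect; real tournaments add the non-stationarity and unmodeled events described above.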

Thus, while the first criterion (combinatorial complexity) is clearly met and the second (an optimizing function) is at least conceptually present, the third remains the most problematic. The lack of abundant, structured, and stable data makes this challenge a probabilistic wall rather than a solvable AI frontier. Buffett understands this instinctively, while Musk sees the opportunity in framing AI as an oracle, even when it cannot control chaos. This is what makes the AI debate on March Madness compelling: not because the problem is unsolvable, but because it exposes the limits of AI's predictive power in fundamentally uncertain environments.

```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers (layer name -> node names)
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],  # Static
        'Voir': ['Data Flywheel'],
        'Choisis': ['LLM', 'User'],
        'Deviens': ['Action', 'Token', 'Rhythm.'],
        "M'élève": ['Victory', 'Payoff', 'NexToken', 'Time.', 'Cadence']
    }


# Assign colors to nodes (nodes not listed fall back to light gray when drawn)
def assign_colors():
    color_map = {
        'yellow': ['Data Flywheel'],
        'paleturquoise': ['Time', 'User', 'Rhythm.', 'Cadence'],
        'lightgreen': ["Rhythm", 'Token', 'Payoff', 'Time.', 'NexToken'],
        'lightsalmon': ['Syntax', 'Punctuation', 'LLM', 'Action', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing); weights are display labels
def define_edges():
    return {
        ('Foundational', 'Data Flywheel'): '1/99',
        ('Grammar', 'Data Flywheel'): '5/95',
        ('Syntax', 'Data Flywheel'): '20/80',
        ('Punctuation', 'Data Flywheel'): '51/49',
        ("Rhythm", 'Data Flywheel'): '80/20',
        ('Time', 'Data Flywheel'): '95/5',
        ('Data Flywheel', 'LLM'): '20/80',
        ('Data Flywheel', 'User'): '80/20',
        ('LLM', 'Action'): '49/51',
        ('LLM', 'Token'): '80/20',
        ('LLM', 'Rhythm.'): '95/5',
        ('User', 'Action'): '5/95',
        ('User', 'Token'): '20/80',
        ('User', 'Rhythm.'): '51/49',
        ('Action', 'Victory'): '80/20',
        ('Action', 'Payoff'): '85/15',
        ('Action', 'NexToken'): '90/10',
        ('Action', 'Time.'): '95/5',
        ('Action', 'Cadence'): '99/1',
        ('Token', 'Victory'): '1/9',
        ('Token', 'Payoff'): '1/8',
        ('Token', 'NexToken'): '1/7',
        ('Token', 'Time.'): '1/6',
        ('Token', 'Cadence'): '1/5',
        ('Rhythm.', 'Victory'): '1/99',
        ('Rhythm.', 'Payoff'): '5/95',
        ('Rhythm.', 'NexToken'): '10/90',
        ('Rhythm.', 'Time.'): '15/85',
        ('Rhythm.', 'Cadence'): '20/80'
    }


# Calculate positions for nodes: one column per layer, nodes spread vertically
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Create mapping from original node names to numbered labels
    mapping = {}
    counter = 1
    for layer in layers.values():
        for node in layer:
            mapping[node] = f"{counter}. {node}"
            counter += 1

    # Add nodes with new numbered labels and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            new_node = mapping[node]
            G.add_node(new_node, layer=layer_name)
            pos[new_node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with updated node labels
    for (source, target), weight in edges.items():
        if source in mapping and target in mapping:
            new_source = mapping[source]
            new_target = mapping[target]
            G.add_edge(new_source, new_target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("OPRAH™", fontsize=25)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 9 Resources, Needs, Costs, Means, Ends. This is an updated version of the script with annotations tying the neural network layers, colors, and nodes to specific moments in Vita è Bella, enhancing the connection to the film’s narrative and themes.