Born to Etiquette#

In personalized or precision medicine, the exponential term in a multivariable Cox regression plays a role analogous to c² in Einstein’s equation \( E = mc^2 \): it is the transformation that amplifies differences in risk across patient populations.

Compression and the Role of the Exponentiation Function in Medicine#

At the core of precision medicine is risk stratification, where we take raw biological, genetic, and clinical data and compress it into meaningful survival predictions. The Cox proportional hazards model does this by scaling a baseline hazard rate with an exponential term, incorporating multiple patient-specific risk factors into a unified prediction equation:

\[ h(t \mid X) = h_0(t) \, e^{(\beta_1 X_1 + ... + \beta_n X_n)} \]

Here, \( h_0(t) \) is the baseline hazard, and \( e^{(\beta_1 X_1 + ... + \beta_n X_n)} \) functions as the c² of medicine, multiplying baseline risk by an exponential function of the patient-specific variables.
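As a minimal sketch of how this prediction works numerically, assume the standard Cox form in which the hazard is the baseline hazard times the exponential of the linear predictor. The function name `cox_hazard`, the coefficient values, the covariate values, and the baseline hazard are all hypothetical and chosen only for illustration.

```python
import numpy as np

def cox_hazard(baseline_hazard, beta, x):
    """Hazard h(t | x) = h0(t) * exp(beta . x) at one fixed time point."""
    linear_predictor = np.dot(x, beta)
    return baseline_hazard * np.exp(linear_predictor)

# Hypothetical coefficients for three covariates (illustrative values only).
beta = np.array([0.5, -0.2, 0.1])

# Two hypothetical patients; the first sits at the reference level (all zeros).
patients = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 2.0, 3.0],
])

h0 = 0.01  # assumed baseline hazard at some fixed time t

hazards = cox_hazard(h0, beta, patients)
relative_risk = hazards[1] / hazards[0]  # equals exp(0.5 - 0.4 + 0.3) = exp(0.4)
print(hazards, relative_risk)
```

The reference patient keeps the baseline hazard unchanged, while the second patient's hazard is scaled by exp(0.4), roughly 1.49, whatever the value of the baseline.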

Search Space (S) → The set of all possible risk factors and patient variables (genetics, biomarkers, demographics, lifestyle factors, clinical history).

Optimization Function (α) → The exponentiation transformation, where each variable’s coefficient β determines its impact on survival.

Data/Simulation (D) → Vast datasets of patient outcomes, where real-world data refines the search space and optimizes the function.
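To make the interplay of S, α, and D concrete, here is a hedged sketch of how outcome data can pin down a coefficient β. It assumes the usual Cox partial log-likelihood with no tied event times, which the text does not spell out. The dataset is made up, and a crude grid search stands in for a real optimizer.

```python
import numpy as np

def cox_partial_log_likelihood(beta, X, times, events):
    """Cox partial log-likelihood, assuming no tied event times."""
    eta = X @ beta  # linear predictor for every patient
    total = 0.0
    for i in np.flatnonzero(events):
        at_risk = times >= times[i]  # patients still under observation
        total += eta[i] - np.log(np.sum(np.exp(eta[at_risk])))
    return total

# Made-up data: one binary covariate, four patients, one of them censored.
X = np.array([[1.0], [0.0], [1.0], [0.0]])
times = np.array([2.0, 4.0, 6.0, 8.0])
events = np.array([1, 1, 0, 1])  # 1 = event observed, 0 = censored

# Crude grid search over beta (illustrative, not an efficient fitting method).
grid = np.linspace(-3.0, 3.0, 121)
scores = [cox_partial_log_likelihood(np.array([b]), X, times, events) for b in grid]
beta_hat = grid[int(np.argmax(scores))]
print(beta_hat)
```

For this toy dataset the maximum can be worked out by hand and lies at β = ln(2)/2, so the grid search lands within one grid step of that value. In practice a dedicated survival-analysis library would handle ties, censoring patterns, and standard errors.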

Thus, precision medicine is intelligence acting through compression—rather than treating all patients equally, we compress multidimensional data into a personalized survival function, optimizing treatments based on an individual’s unique risk profile.
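The personalized survival function mentioned above follows directly from the hazard under the proportional hazards assumption. As a sketch, with \( H_0(t) = \int_0^t h_0(u)\,du \) the cumulative baseline hazard and \( S_0(t) = e^{-H_0(t)} \) the baseline survival (notation introduced here, not in the original text):

\[
S(t \mid X) = \exp\!\left(-H_0(t)\, e^{(\beta_1 X_1 + ... + \beta_n X_n)}\right) = S_0(t)^{\,e^{(\beta_1 X_1 + ... + \beta_n X_n)}}
\]

The exponential term therefore appears as a power applied to the baseline survival curve: values above 1 pull the curve down, and values below 1 lift it.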

Exponentiation as the Key to Personalized Health Optimization#

The real power of \( e^{(\beta X)} \) in the Cox model is that it acts multiplicatively: a difference in a biological or behavioral variable scales the hazard by a constant factor, and those factors compound across variables and over time into large disparities in survival outcomes. The analogy is to c² in Einstein’s equation, where a tiny change in mass, multiplied by the square of the speed of light, corresponds to an astronomical amount of energy.

In medicine:

A small genetic predisposition (X), once exponentiated, may double or triple a patient’s risk of cardiovascular disease.

A lifestyle intervention (X, like smoking cessation or diet changes) may not seem transformative until exponentiation reveals its long-term survival impact.

A biomarker (X) that differs by a fraction in normal lab results may, once exponentiated, identify high-risk patients invisible to standard diagnostics.
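The arithmetic behind these examples can be sketched as follows, assuming the standard reading of a Cox coefficient in which a change of ΔX in a covariate multiplies the hazard by exp(β·ΔX). The helper names and the numeric values are hypothetical.

```python
import math

def hazard_ratio(beta, delta_x=1.0):
    """Factor by which the hazard changes when X shifts by delta_x."""
    return math.exp(beta * delta_x)

def beta_for_ratio(ratio, delta_x=1.0):
    """Coefficient needed for a given hazard ratio per delta_x change in X."""
    return math.log(ratio) / delta_x

# Coefficients that double or triple the hazard per unit of X.
beta_double = beta_for_ratio(2.0)  # ln 2, about 0.693
beta_triple = beta_for_ratio(3.0)  # ln 3, about 1.099

# A fractional shift in X (0.1 units) under a modest and a large coefficient.
modest = hazard_ratio(beta=0.5, delta_x=0.1)  # exp(0.05), about a 5% increase
steep = hazard_ratio(beta=7.0, delta_x=0.1)   # exp(0.7), roughly a doubling

print(beta_double, beta_triple, modest, steep)
```

A fractional difference in a biomarker only translates into a large change in risk when its coefficient is correspondingly large, so the size of β matters as much as the exponentiation itself.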

Precision Medicine as the Future of Compression#

This means the future of medicine isn’t just in collecting more data—it’s about optimizing the exponentiation function to refine how we personalize risk. Just as Einstein didn’t need to collect experimental data at light speed to derive his equation, medicine must move toward a model where simulated patient trajectories (AI-driven risk stratification, synthetic patient models, etc.) stand in for exhaustive real-world trials.

Can we redefine the exponentiation function using machine learning?

Can we identify new “c²-like” variables in medicine that exponentially amplify differences in survival and treatment efficacy?

What if we found a way to compress all of genomic medicine into a single personalized function for health optimization?

Compression as the Core of Scientific Intelligence#

Just like in Black-Scholes, equal temperament, and Einstein’s equation, the Cox model’s exponentiation function is the point where intelligence happens. The baseline hazard is just raw data—the exponentiation compresses the space of possible patient outcomes into a simple, personalized risk score.

This is the same principle behind all intelligence—whether in finance, music, physics, or epidemiology, the key is always:

Find the broadest possible search space.

Define an optimizing function.

Apply the correct exponentiation to transform raw data into an efficient, predictive model.

Precision medicine is simply the next frontier of compression, where we aren’t just applying intelligence to physics, finance, or sound—but directly to human survival.

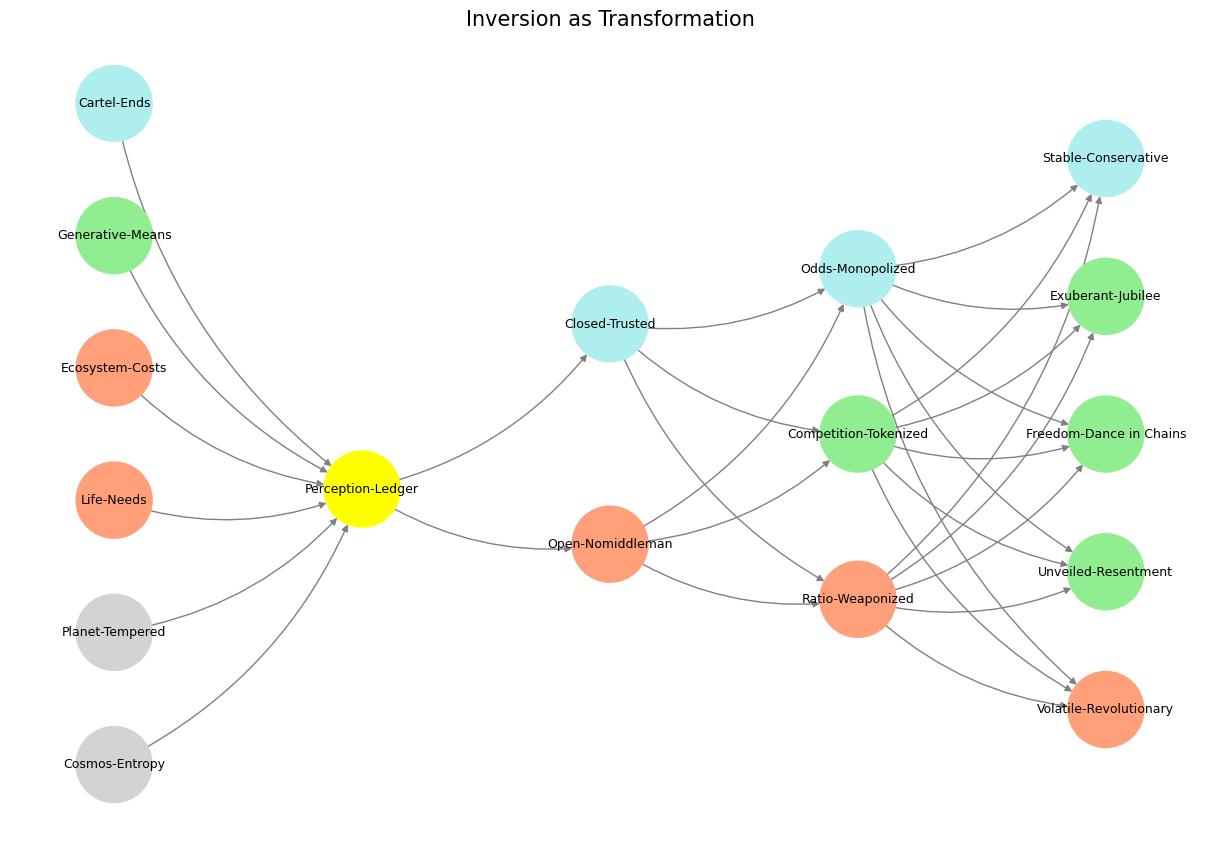

Fig. 36 I got my hands on every recording by Hendrix, Joni Mitchell, CSN, etc. (foundations). Thou shalt inherit the kingdom (yellow node). And so why would Bankman-Fried’s FTX go about rescuing other artists failing to keep up with the Hendrixes? Why worry about the fate of the music industry if some unknown Joe somewhere in their parents’ basement may encounter an unknown-unknown that blows them out? Indeed, why did he take on such responsibility? Great question by the interviewer. The tonal inflections and overuse of “ecosystem” (a node in our layer 1), as well as a clichéd variant of one of our output-layer nodes (unknown), tell us something: our neural network digests everything and is coherent. It’s based on our neural anatomy!#


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network fractal
def define_layers():
    return {
        'World': ['Cosmos-Entropy', 'Planet-Tempered', 'Life-Needs', 'Ecosystem-Costs', 'Generative-Means', 'Cartel-Ends'],  # Polytheism, Olympus, Kingdom
        'Perception': ['Perception-Ledger'],  # God, Judgement Day, Key
        'Agency': ['Open-Nomiddleman', 'Closed-Trusted'],  # Evil & Good
        'Generative': ['Ratio-Weaponized', 'Competition-Tokenized', 'Odds-Monopolized'],  # Dynamics, Compromises
        'Physical': ['Volatile-Revolutionary', 'Unveiled-Resentment', 'Freedom-Dance in Chains', 'Exuberant-Jubilee', 'Stable-Conservative']  # Values
    }

# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Perception-Ledger'],
        'paleturquoise': ['Cartel-Ends', 'Closed-Trusted', 'Odds-Monopolized', 'Stable-Conservative'],
        'lightgreen': ['Generative-Means', 'Competition-Tokenized', 'Exuberant-Jubilee', 'Freedom-Dance in Chains', 'Unveiled-Resentment'],
        'lightsalmon': [
            'Life-Needs', 'Ecosystem-Costs', 'Open-Nomiddleman',  # Ecosystem = Red Queen = Prometheus = Sacrifice
            'Ratio-Weaponized', 'Volatile-Revolutionary'
        ],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))  # Default color fallback

    # Add edges (automated for consecutive layers)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Inversion as Transformation", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 37 Glenn Gould and Leonard Bernstein famously disagreed over the tempo and interpretation of Brahms’ First Piano Concerto at a 1962 New York Philharmonic concert. Before the performance began, Bernstein, who was conducting, publicly distanced himself from Gould’s significantly slower interpretation, voicing his disagreement with the unconventional approach while still allowing Gould to perform it as planned. The event is considered one of the most controversial moments in classical music history.#