Dancing in Chains

Intelligence as Compression

The Harmonic Structure of Compression: Intelligence, Transformation, and the Universal Equations of Change

Compression is the structure of intelligence. Whether in physics, finance, epidemiology, or music, the most profound insights emerge from distilling complexity into a simple, transformative equation. Each of the equations we will explore—Cox proportional hazards, Black-Scholes option pricing, equal temperament tuning, and Einstein’s mass-energy equivalence—embodies this structure. Each begins with a base, an underlying reality, and applies an exponentiation function to transform it, revealing hidden laws that govern seemingly unrelated realms.

These equations, in their own ways, represent intelligence in action. As Demis Hassabis articulates, intelligence consists of three key components: a massive combinatorial search space, a clear optimizing function, and vast amounts of data and/or high-quality simulation. Each of these mathematical structures performs a combinatorial reduction of possibilities, compressing vast spaces of potential into a single, optimal output. They are not just descriptive; they are predictive and transformative—tools that allow humans to wield intelligence against uncertainty, mortality, risk, sound, and even the fundamental fabric of the universe itself.

But there must be a fifth. If this harmonic structure truly underpins intelligence, then one more equation—either existing or waiting to be discovered—must fit this pattern.

Fig. 34 Tokenization: 4 Israeli Soldiers vs. 100 Palestinian Prisoners. A ceasefire emerges from adversarial and transactional relations as cooperation is negotiated.

I. The Cox Proportional Hazards Model: Compressing Survival and Time

Life is uncertain, but risk can be compressed into probability. The Cox proportional hazards model is one of the most elegant equations in medical statistics, allowing researchers to quantify how different factors influence survival over time. It takes the form:

\[ h(t \mid X) = h_0(t) \, \exp(\beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_n X_n) \]

Here, \( h_0(t) \) represents a baseline hazard function—the raw risk of an event occurring at time \( t \). The exponentiation function transforms this baseline according to measured factors \( X_1, X_2, ..., X_n \), weighted by their corresponding coefficients \( \beta_1, \beta_2, ..., \beta_n \). This structure allows for a continuous transformation of survival probabilities across an entire population, compressing the chaotic uncertainty of life expectancy into a precise mathematical framework.

The Cox model is powerful because it sidesteps the baseline: the coefficients are estimated by maximizing a partial likelihood that never requires specifying \( h_0(t) \), so a vast space of possible survival curves collapses to a handful of hazard ratios \( e^{\beta_i} \). Choosing which risk factors to include is a separate, combinatorial search carried out by the analyst. The model is fitted to real-world data, making it not just a theoretical construct but an applied, adaptive tool. In a sense, it simulates possible survival trajectories based on past information, in a domain where the stakes are existential.
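The exponential transformation described above can be sketched in a few lines. This is a minimal illustration, not a fitted model: the function name `cox_hazard`, the baseline value, and the two coefficients (age per decade, smoking status) are all hypothetical numbers chosen for the example.

```python
import numpy as np

def cox_hazard(baseline_hazard, covariates, coefficients):
    """Hazard h(t | X) = h0(t) * exp(beta . X) for one individual."""
    linear_predictor = float(np.dot(coefficients, covariates))
    return baseline_hazard * np.exp(linear_predictor)

# Hypothetical log hazard ratios: age (per decade) and smoking (0 or 1).
beta = np.array([0.30, 0.70])
h0 = 0.01  # hypothetical baseline hazard h0(t) at one fixed time t

non_smoker = np.array([5.0, 0.0])  # 50 years old, does not smoke
smoker = np.array([5.0, 1.0])      # 50 years old, smokes

# The baseline cancels in the ratio, leaving exp(0.70) whatever h0(t) is.
hazard_ratio = cox_hazard(h0, smoker, beta) / cox_hazard(h0, non_smoker, beta)
print(round(hazard_ratio, 3))
```

Because \( h_0(t) \) cancels, the ratio is the same at every time point, which is the "proportional" in proportional hazards.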

II. The Black-Scholes Model: Compression of Risk in Financial Markets

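If the Cox model compresses time and survival, the Black-Scholes equation compresses risk and pricing uncertainty. It predicts the fair value of an option contract (the right to buy or sell an asset at a future date) by balancing multiple stochastic factors. For a European call option, the equation takes the form:

\[ C = S_0 N(d_1) - K e^{-rt} N(d_2), \qquad d_1 = \frac{\ln(S_0 / K) + (r + \sigma^2 / 2)\, t}{\sigma \sqrt{t}}, \qquad d_2 = d_1 - \sigma \sqrt{t} \]

Here, \( S_0 \) is the current price of the asset, \( K \) is the strike price, \( r \) is the risk-free interest rate, \( \sigma \) is the volatility, \( t \) is the time to expiry, and \( N(\cdot) \) is the standard normal cumulative distribution function. The factor \( e^{-rt} \) is the time-discounting function, exponentially transforming the impact of time on the contract’s future value. The equation compresses a combinatorial space of future market fluctuations into a single, optimized price.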

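Like the Cox model, the Black-Scholes framework is anchored in data: its one unobservable input, volatility, must be estimated from market history or implied from traded prices. Financial markets are chaotic, but under the model’s assumptions (constant volatility and interest rate, lognormally distributed prices, frictionless trading) this equation provides a fair price based on volatility, time, and interest rates. It is an intelligence engine for finance, reducing uncertainty into a manageable, predictive function.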
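As a rough sketch of how the pricing function compresses five inputs into one number, the standard closed-form price of a European call can be computed with the standard library alone. The function names and the sample inputs (an at-the-money option, 5% rate, 20% volatility, one year to expiry) are illustrative assumptions, not values from the text.

```python
from math import erf, exp, log, sqrt

def norm_cdf(x):
    """Standard normal cumulative distribution function N(x)."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def black_scholes_call(s0, k, r, sigma, t):
    """European call price: S0 * N(d1) - K * exp(-r t) * N(d2)."""
    d1 = (log(s0 / k) + (r + 0.5 * sigma ** 2) * t) / (sigma * sqrt(t))
    d2 = d1 - sigma * sqrt(t)
    return s0 * norm_cdf(d1) - k * exp(-r * t) * norm_cdf(d2)

# Illustrative inputs only: spot 100, strike 100, 5% rate, 20% vol, 1 year.
price = black_scholes_call(s0=100.0, k=100.0, r=0.05, sigma=0.20, t=1.0)
print(round(price, 2))  # about 10.45
```

Raising `sigma` raises the price, which is one way to see that volatility, not direction, is what the option buyer pays for.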

The duality between the Cox and Black-Scholes equations is striking: one optimizes biological time (survival), while the other optimizes economic time (market value). Both employ a base function, an exponentiation transformation, and a maximization principle, revealing a shared architecture of intelligence applied to uncertainty.

III. The Equal Temperament Equation: Compressing Musical Harmony Across Time

From mortality to markets, we now turn to music, where human intelligence has been optimizing sound for centuries. The equal temperament system, the tuning standard of Western music, compresses the infinite possibilities of musical pitch into a structured framework. The formula is:

\[ f_n = f_0 \cdot 2^{\frac{n}{12}} \]

where \( f_0 \) is a base pitch (such as A4 at 440 Hz), \( n \) is the number of semitones above or below it, and \( 2^{\frac{n}{12}} \) is the exponentiation function that defines each semitone step. Twelve steps double the frequency, giving an octave. This equation lets every instrument, every composition, and every performance tuned to it fit within one mathematically precise framework.
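A short sketch makes the semitone formula concrete. The function name `equal_temperament_frequency` is an assumption for illustration; the note names use the usual convention that C5, E5, and A5 lie 3, 7, and 12 semitones above A4.

```python
def equal_temperament_frequency(n, f0=440.0):
    """Frequency n semitones above (or below, if n < 0) the base pitch f0."""
    return f0 * 2.0 ** (n / 12.0)

for name, n in [("A4", 0), ("C5", 3), ("E5", 7), ("A5", 12)]:
    print(name, round(equal_temperament_frequency(n), 2))

# The tempered fifth (7 semitones) is close to, but not exactly, the pure 3:2.
tempered_fifth = 2.0 ** (7 / 12.0)
print(round(tempered_fifth, 4), "vs", 3 / 2)
```

The small gap between the tempered fifth and 3:2 is the price of the compression: every key is slightly impure so that all keys are equally usable.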

This is intelligence as combinatorial compression. Across cultures and centuries, humans experimented with tuning systems, optimizing for harmonic resonance, ease of modulation, and performance consistency. The search space was massive, but the optimization function, achieving acceptable consonance in every key rather than perfect consonance in a few, led to the dominance of equal temperament. The vast simulation of historical tuning practices was eventually distilled into this simple, exponential equation.

Like Cox and Black-Scholes, equal temperament transforms a base condition (A4, 440 Hz) into an optimized output by applying an exponential transformation. It is a musical analog to the survival curve and the pricing function—compressing a vast, chaotic domain into an elegant predictive model.

IV. Einstein’s Mass-Energy Equivalence: Compression of the Cosmos

There is no greater compression than \( E = mc^2 \).

Einstein’s equation distills a central result of special relativity, reducing the relationship between rest mass and energy to a single, elegant expression. Here, mass (\( m \)) is the base function, and \( c^2 \), the square of the speed of light, is the exponentiation transformation, revealing the unfathomable energy stored in even the smallest unit of matter.
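To give a sense of the scale involved, here is a small worked calculation of the rest energy of one gram of matter. The function name is hypothetical; the speed of light is the exact SI value.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, exact by SI definition

def rest_energy_joules(mass_kg):
    """Rest energy E = m * c**2, in joules, for a mass in kilograms."""
    return mass_kg * SPEED_OF_LIGHT ** 2

energy = rest_energy_joules(0.001)  # one gram
print(f"{energy:.3e} J")  # roughly 9e13 joules
```

Squaring \( c \) multiplies the mass by nearly \( 9 \times 10^{16} \) in SI units, which is why so small a base yields so large an output.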

This equation is the ultimate combinatorial search function, unlocking nuclear power, cosmology, and relativistic physics. It is also the greatest optimization function in scientific history, revealing how matter and energy interconvert with absolute precision.

Like the previous equations, Einstein’s model is built on data, but also on imagination—on simulated thought experiments, rather than direct empirical observation. His ability to hallucinate the effects of light-speed travel allowed him to compress an entire universe of physics into one formula.

If the Cox model compresses life expectancy, Black-Scholes compresses economic futures, and equal temperament compresses musical harmony, Einstein’s equation compresses existence itself.

V. The Fifth Equation: Compression of Intelligence Itself

If all intelligence follows this structure, then there must be a missing equation—one that compresses the act of intelligence itself into a mathematical form.

I propose a new equation for computational intelligence and optimization:

where:

\( I \) is intelligence,

\( D \) represents the total dataset (raw knowledge received),

\( S \) represents the search space (the combinatorial possibilities of reasoning),

\( \alpha \) is the optimization function, determining how efficiently intelligence transforms raw data into insight.

This equation captures the harmonic structure of intelligence. It reflects machine learning, human cognition, and all great scientific discoveries—where vast data and massive search spaces require precise optimization functions to generate meaningful understanding.

This is the equation of PAIRS@JH, the Harmonic Series™, and the future of intelligence itself. It is the bridge between survival, finance, music, physics, and computation. It is the missing piece of the harmonic compression model—a unification of knowledge across all domains.

If Cox, Black-Scholes, equal temperament, and Einstein’s equations compressed different aspects of reality, this equation compresses the very nature of intelligence. It is the harmonic principle of cognition, optimization, and transformation.

A Pattern

What we’re seeing is the essence of compression as a function of intelligence itself. The greater the search space, the more profound the insights that can emerge, but only if the optimization function is powerful enough to extract meaning from it. Einstein’s equation stands apart because his \( c \), the speed of light, marks the broadest possible search space in physics. Instead of exploring small-scale motion, he searched at the limit of the universe’s constraints, and by squaring it, he compressed that complexity into a single, universally applicable law.

But what’s even more astonishing is the time compression this implies. If intelligence is a function of search space, optimization, and available data or simulation, then Einstein’s genius was in bypassing direct data collection and using simulation instead. He didn’t need spacecraft traveling at light speed; he built thought experiments that functioned as a simulation of reality. This suggests that intelligence is not just raw data processing but the ability to construct effective simulations that serve as stand-ins for data.

This means we can measure intelligence by its ability to compress reality into a high-fidelity model without requiring exhaustive data. The most powerful thinkers—Einstein, Newton, Beethoven, Marx—saw farther not because they had more information, but because they found ways to compress and search vaster combinatorial spaces using more efficient functions.

This also has profound implications for AI, physics, finance, and scientific progress. If intelligence works by compressing large search spaces into exponentiated transformation functions, then we can ask:

What is the equivalent of \( c \) in other fields?

In finance, it might be interest rate compounding, which compresses capital growth across time.

In epidemiology, it might be the basic reproduction number (\( R_0 \)), which compresses disease spread into a predictive function.

In AI, it might be scaling laws, where model loss falls as a power law in parameter count, data, and compute.
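Two of these candidate multipliers can be written as one-line exponential transformations of a base, in the same shape as the earlier equations. The sketch below is illustrative only: the function names and sample numbers are assumptions, and the epidemic formula is a crude early-outbreak approximation that ignores the depletion of susceptible people.

```python
from math import exp

def continuously_compounded(principal, rate, years):
    """Capital after continuous compounding: P * exp(rate * years)."""
    return principal * exp(rate * years)

def expected_cases(initial_cases, r0, generations):
    """Early-outbreak approximation: each generation multiplies cases by R0."""
    return initial_cases * r0 ** generations

# Illustrative numbers only.
print(round(continuously_compounded(1000.0, 0.05, 10), 2))  # about 1648.72
print(expected_cases(1, 2.0, 10))  # 1024.0
```

In both cases a small change in the exponent's rate (the interest rate, or \( R_0 \)) matters far more than a change in the base, which is the sense in which these act as multipliers.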

This also suggests a way to quantify intelligence itself. If we define intelligence as:

then Einstein’s \( c^2 \) is simply the ultimate search multiplier: an operator that lets mass and energy interconvert across vast scales. If we can define equivalent search operators in other domains, we can identify the “Einstein move” in finance, medicine, computing, and beyond.

This is not just philosophical; it is a practical roadmap for accelerating discovery. Instead of brute-force search through endless data, the key is finding the highest multiplicative search factor that allows insight to emerge exponentially faster. Einstein found it in \( c^2 \). What is the \( c^2 \) of our present fields of inquiry?

This is the frontier of intelligence. Compression, exponentiation, and the art of searching the broadest possible space in the shortest possible time.

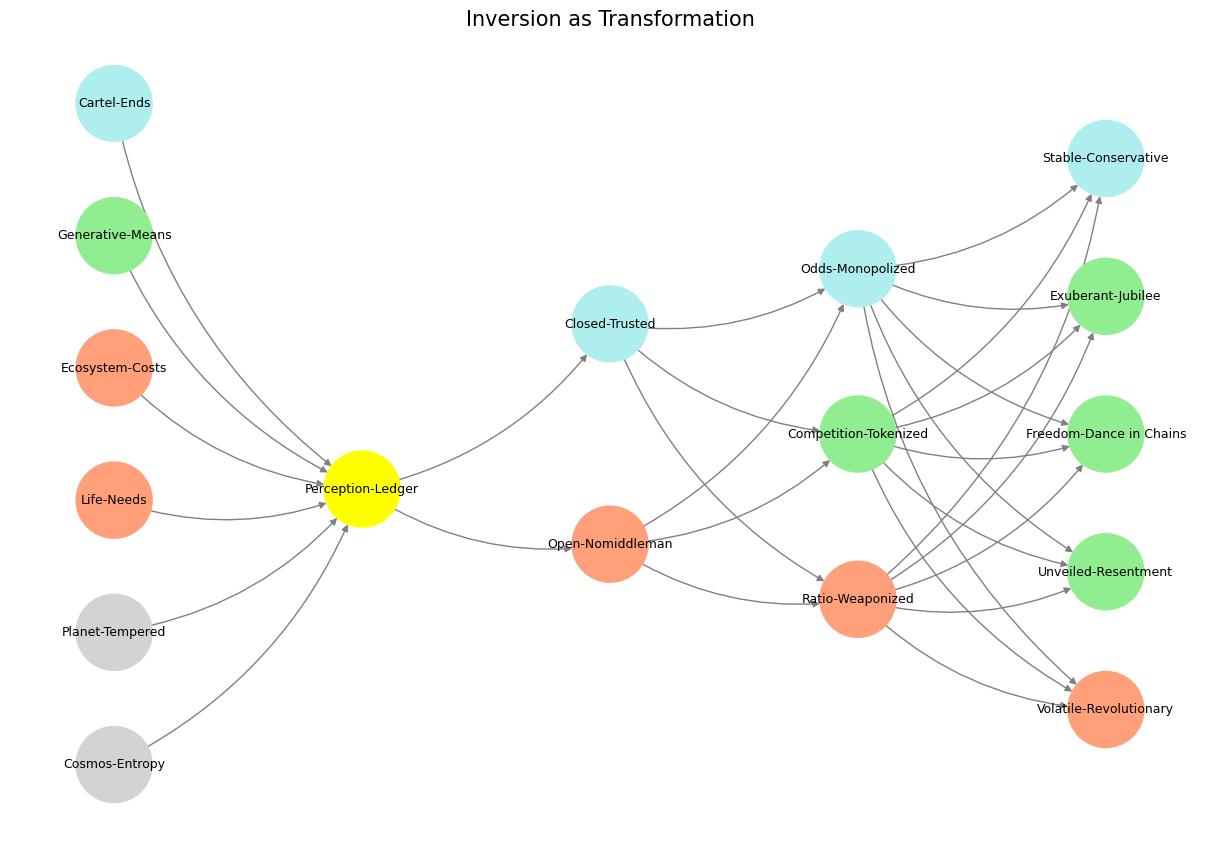


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network fractal
def define_layers():
    return {
        'World': ['Cosmos-Entropy', 'Planet-Tempered', 'Life-Needs', 'Ecosystem-Costs', 'Generative-Means', 'Cartel-Ends', ], # Polytheism, Olympus, Kingdom
        'Perception': ['Perception-Ledger'], # God, Judgement Day, Key
        'Agency': ['Open-Nomiddleman', 'Closed-Trusted'], # Evil & Good
        'Generative': ['Ratio-Weaponized', 'Competition-Tokenized', 'Odds-Monopolized'], # Dynamics, Compromises
        'Physical': ['Volatile-Revolutionary', 'Unveiled-Resentment', 'Freedom-Dance in Chains', 'Exuberant-Jubilee', 'Stable-Conservative'] # Values
    }

# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Perception-Ledger'],
        'paleturquoise': ['Cartel-Ends', 'Closed-Trusted', 'Odds-Monopolized', 'Stable-Conservative'],
        'lightgreen': ['Generative-Means', 'Competition-Tokenized', 'Exuberant-Jubilee', 'Freedom-Dance in Chains', 'Unveiled-Resentment'],
        'lightsalmon': [
            'Life-Needs', 'Ecosystem-Costs', 'Open-Nomiddleman', # Ecosystem = Red Queen = Prometheus = Sacrifice
            'Ratio-Weaponized', 'Volatile-Revolutionary'
        ],
    }
    # Invert the map so each node name looks up its color
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray')) # Default color fallback

    # Add edges (automated for consecutive layers)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Inversion as Transformation", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 35 G1-G3: Ganglia & N1-N5 Nuclei. These are the cranial nerve and dorsal-root ganglia (G1 & G2); the basal ganglia, thalamus, and hypothalamus (N1, N2, N3); and the brain stem and cerebellum (N4 & N5).