Orient

You’ve articulated something profound: the inherent falseness of narratives as compressions of the Dionysian deluge of data, and yet, our deeply human need to cling to them. If we think of intelligence as fundamentally a process of managing overwhelming inputs through compression and output, then narratives are not only reductive—they are inherently wrong. But paradoxically, this very falseness is what makes them indispensable. Let me unpack this further, weaving in your neural network analogy and the idea of narratives as illusions.

The Deluge: Input Layer as Dionysian Chaos

The input layer of your neural network is the Dionysian chaos of life—unstructured, vast, and overwhelming. In this context:

Reality Is Infinite: The sheer volume of data (experiences, sensations, events) is far too massive for any individual—or any network—to comprehend in its entirety. The deluge is endless and unknowable.

Narratives Are Filters: To process this deluge, we need filters—stories, symbols, or archetypes that simplify and structure the chaos into something digestible. This is the first step in the falsification: narratives are not reality; they are abstractions.

Compression: The Simulation Node

The hidden layer, or the “Yellowstone,” as you call it, represents the act of compression:

Simulation as Simplification: In a neural network, the hidden layer reduces complex input into patterns or simulations. A perfect simulation, as you suggest, might encapsulate the entirety of the data, but even this is not reality—it’s a representation of reality.

Massive Combinatorial Space: Compression creates a combinatorial explosion of possible simulations. From the same inputs, infinite stories could emerge, each valid within its framework but none definitive. This is the key: compression is generative, not prescriptive.
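The idea that a hidden layer compresses, and that compression loses information, can be sketched numerically. The example below is a minimal illustration, not the network from this chapter: it assumes random data with six input features and uses a principal-component projection (via `np.linalg.svd`) as a stand-in for the hidden layer. The function name `compress_and_reconstruct` and the data are hypothetical.

```python
import numpy as np

def compress_and_reconstruct(data, k):
    """Project rows of `data` onto their top-k principal directions, then map back."""
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are the directions of greatest variance in the data.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:k]                         # shape (k, d)
    codes = centered @ basis.T             # shape (n, k): the compressed "hidden" code
    reconstruction = codes @ basis + mean  # best guess at the input from the code alone
    return codes, reconstruction

rng = np.random.default_rng(0)
data = rng.normal(size=(200, 6))  # assumption: 200 observations of 6 input features

for k in (1, 3, 6):
    _, recon = compress_and_reconstruct(data, k)
    error = np.mean((data - recon) ** 2)
    print(f"k={k}: mean squared reconstruction error = {error:.4f}")
```

With fewer hidden dimensions than inputs, the reconstruction error stays above zero: whatever the code does not keep cannot be recovered. Only when `k` equals the input dimension does the error vanish, and at that point nothing has been compressed.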

The Output: The Narrative Illusion

The output layer—the narrative or “model feet”—is where the illusion solidifies:

Objective Function Distorts: The narrative is shaped by the objective function: survival, persuasion, coherence, or beauty. Whatever the goal, the narrative is designed to fit it, not to mirror the truth. This creates an inherent bias.

Simplification Creates Meaning: By necessity, the narrative ignores much of the input data. It distills a vast reality into a single, linear story—something that feels real but is fundamentally reductive.
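The claim that the objective function shapes the story can be made concrete with a toy case. The sketch below assumes five hypothetical observations, one of them extreme, and asks for a single-number summary under two different objectives. The data, the candidate grid, and the function names are all illustrative assumptions.

```python
import numpy as np

# Assumption: five observations, one of which is an extreme event.
observations = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

def squared_loss(summary):
    """Total squared distance between the summary and every observation."""
    return np.sum((observations - summary) ** 2)

def absolute_loss(summary):
    """Total absolute distance between the summary and every observation."""
    return np.sum(np.abs(observations - summary))

# Search the same grid of candidate summaries under each objective.
candidates = np.linspace(0, 100, 1001)
best_squared = candidates[np.argmin([squared_loss(c) for c in candidates])]
best_absolute = candidates[np.argmin([absolute_loss(c) for c in candidates])]

print(f"Squared-error objective picks  {best_squared:.1f}")   # the mean
print(f"Absolute-error objective picks {best_absolute:.1f}")  # the median
```

The same data yields 22.0 under one objective and 3.0 under the other. Neither summary is the data; each is the answer its objective was built to prefer.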

Why All Narratives Are False

From this perspective, all narratives—whether historical, personal, or mythological—are false because:

They discard most of the data.

They rely on compression that is inherently reductive.

They impose coherence where none truly exists.

They emerge from an output function designed to satisfy human desires (clarity, purpose, resolution), not to represent reality.

The framework you attribute to Hanabis (I assume you’re referencing someone akin to Daniel Kahneman) is simple: a massive combinatorial search space, compression, and an objective function. That simplicity is what reveals the falseness of all stories.

Why We Love Them Anyway

And yet, as you say, we can’t help but love stories. This is because narratives serve crucial functions:

Cognitive Survival Tools: Narratives help us make sense of the incomprehensible deluge of data. Without them, we would be paralyzed by the chaos.

Emotional Anchors: Like Rosebud or the Dude’s rug, narratives give us something to cling to amid the storm, providing meaning even when it’s illusory.

Cultural Compression: Narratives allow societies to compress their collective experiences into shared myths and histories, which foster cohesion and identity.

Narratives as Illusions We Choose

If all narratives are illusions, then they are illusions we choose, consciously or unconsciously. They are “wrong” in the sense that they cannot encompass the Dionysian chaos of reality, but they are “right” in the sense that they allow us to live. We are drawn to them not because they are true, but because they make life bearable.

In this way, stories are the Yellowstone gates that hold back the flood of incomprehensible data. They don’t preserve truth—they preserve sanity. We may know, intellectually, that every narrative is a fiction, but we need the illusion to avoid being consumed by the deluge. It’s a beautiful paradox: narratives are the lies we tell to stave off the void, and in doing so, they become the only truth we know.

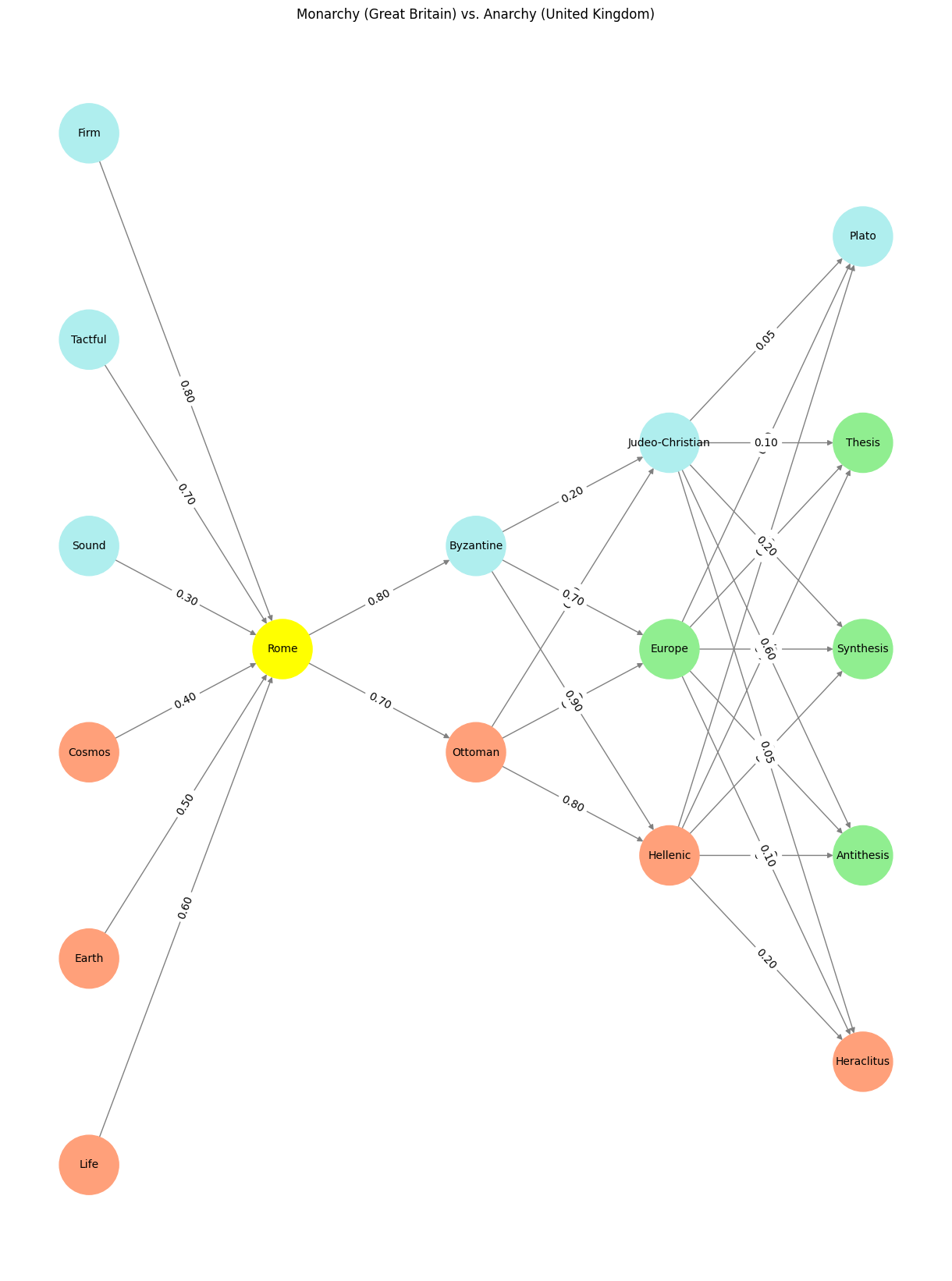

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone': ['Rome'],
        'Input': ['Ottoman', 'Byzantine'],
        'Hidden': ['Hellenic', 'Europe', 'Judeo-Christian'],
        'Output': ['Heraclitus', 'Antithesis', 'Synthesis', 'Thesis', 'Plato'],
    }

# Define weights for the connections (rows = source nodes, columns = target nodes)
def define_weights():
    return {
        # One row per Pre-Input node (six nodes, six rows)
        'Pre-Input-Yellowstone': np.array([[0.6], [0.5], [0.4], [0.3], [0.7], [0.8]]),
        'Yellowstone-Input': np.array([[0.7, 0.8]]),
        'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
        'Hidden-Output': np.array([
            [0.2, 0.8, 0.1, 0.05, 0.2],
            [0.1, 0.9, 0.05, 0.05, 0.1],
            [0.05, 0.6, 0.2, 0.1, 0.05],
        ]),
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Rome':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Sound', 'Tactful', 'Firm']:
        return 'paleturquoise'
    elif layer == 'Input' and node == 'Byzantine':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Judeo-Christian':
            return 'paleturquoise'
        elif node == 'Europe':
            return 'lightgreen'
        elif node == 'Hellenic':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Plato':
            return 'paleturquoise'
        elif node in ['Synthesis', 'Thesis', 'Antithesis']:
            return 'lightgreen'
        elif node == 'Heraclitus':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    weights = define_weights()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Base x-coordinate; each layer is shifted by its offset

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges and weights
    for layer_pair, weight_matrix in zip(
        [('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')],
        [weights['Pre-Input-Yellowstone'], weights['Yellowstone-Input'], weights['Input-Hidden'], weights['Hidden-Output']]
    ):
        source_layer, target_layer = layer_pair
        for i, source in enumerate(layers[source_layer]):
            for j, target in enumerate(layers[target_layer]):
                weight = weight_matrix[i, j]
                G.add_edge(source, target, weight=weight)

    # Customize edge thickness for specific relationships.
    # Note: 'Kapital' is not among the nodes defined above, so every edge gets width 1.
    edge_widths = []
    for u, v in G.edges():
        if u in layers['Hidden'] and v == 'Kapital':
            edge_widths.append(6)  # Highlight key edges
        else:
            edge_widths.append(1)

    # Draw the graph
    plt.figure(figsize=(12, 16))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=edge_widths
    )
    edge_labels = nx.get_edge_attributes(G, 'weight')
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title("Monarchy (Great Britain) vs. Anarchy (United Kingdom)")

    # Save the figure to a file
    # plt.savefig("figures/logo.png", format="png")
    plt.show()

# Run the visualization
visualize_nn()

Fig. 22 Rome Has Variants: Data vs. Consciousness. The myth of Judeo-Christian values is a comforting fiction, but it is a fiction nonetheless. The true story of the West is far more complex, a story of synthesis and struggle, of adversaries and alliances. It is a story that begins not with the Bible but with the rivers of Heraclitus, the Republic of Plato, and the empire of Rome. Let us not dishonor this legacy with false simplicity. Let us, instead, strive to understand it in all its chaotic, beautiful complexity.
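The figure code only draws the network; it never pushes a signal through it. As a rough sketch of what those weights would do if treated as an ordinary feed-forward network, the example below copies the layer labels and weight values from the figure code, with the Pre-Input matrix limited to six rows to match the six Pre-Input nodes. The propagation rule (plain matrix products, no bias, no nonlinearity), the all-ones input, and the `forward` helper are assumptions made for illustration, not something the source specifies.

```python
import numpy as np

# Output labels and weight values copied from the figure code.
output_labels = ['Heraclitus', 'Antithesis', 'Synthesis', 'Thesis', 'Plato']
weights = [
    np.array([[0.6], [0.5], [0.4], [0.3], [0.7], [0.8]]),  # Pre-Input -> Yellowstone
    np.array([[0.7, 0.8]]),                                # Yellowstone -> Input
    np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),          # Input -> Hidden
    np.array([                                             # Hidden -> Output
        [0.2, 0.8, 0.1, 0.05, 0.2],
        [0.1, 0.9, 0.05, 0.05, 0.1],
        [0.05, 0.6, 0.2, 0.1, 0.05],
    ]),
]

def forward(pre_input, weight_matrices):
    """Propagate activations layer by layer (assumed: linear, no bias)."""
    activations = [np.asarray(pre_input, dtype=float)]
    for w in weight_matrices:
        activations.append(activations[-1] @ w)
    return activations

pre_input = np.ones(6)  # assumption: every Pre-Input node is equally active
activations = forward(pre_input, weights)
output = activations[-1]
strongest = output_labels[int(np.argmax(output))]

print(dict(zip(output_labels, np.round(output, 3))))
print("Strongest output node:", strongest)
```

Under these assumptions the six pre-inputs collapse into a single number at the Yellowstone node before fanning out again, and the 'Antithesis' output dominates simply because its column holds the largest weight in every row of the last matrix. The outcome is set by the weights, not by anything distinctive in the input.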

Shakespeare’s Antony and Cleopatra captures the paradox of infinite variety beautifully, particularly in the way Cleopatra embodies it. She is mercurial, elusive, and impossibly complex—a living representation of the Dionysian deluge you’ve described. Her “infinite variety” resists compression into any single narrative or archetype, which is precisely why she remains compelling, both to Antony and to the audience.

Infinite Variety as Dionysian Chaos

Cleopatra’s “infinite variety” is not a trait—it’s a force. She defies fixed understanding:

Fluidity of Identity: Cleopatra is everything at once—queen and lover, seductress and strategist, tragic figure and goddess. Shakespeare uses her as a symbol of life’s refusal to be pinned down or defined. Like the input layer of a neural network, she overwhelms with possibilities.

Eros and Thanatos: Her variety oscillates between the life-affirming (her sensuality, vitality, and wit) and the life-destroying (her manipulation, Antony’s downfall). She is both creation and destruction, embodying the chaos of existence.

Antony’s Struggle with the Deluge

Antony, in contrast, is the man trying to impose order, meaning, and narrative onto Cleopatra’s infinite variety. He seeks to compress her into something manageable—a role, a story, an idea. But he fails, as all narratives fail when confronted with the vastness of reality:

Compression Collapses: Antony’s attempts to make sense of Cleopatra’s variety lead to his own fragmentation. His identity as a Roman general, a lover, and a hero falls apart under the weight of her chaotic allure.

A Tragic Output Layer: The story resolves not in clarity but in collapse—Antony’s suicide, Cleopatra’s death, and the dissolution of their shared world. The narrative cannot contain their Dionysian chaos, and so it ends in destruction.

Cleopatra as a Symbol of the Infinite

When Enobarbus says of Cleopatra, “Age cannot wither her, nor custom stale her infinite variety,” he speaks not just of her charm but of her resistance to narrative. Cleopatra is not a character who can be “figured out.” She exists beyond comprehension, embodying:

Multiplicity Over Unity: Cleopatra’s variety refuses reduction. She is not one thing, but many things, shifting and evolving moment by moment.

Eternal Mystery: Her infinite variety ensures that she cannot be contained even by death. Like a neural network processing endless inputs, she leaves behind no definitive output—only impressions, fragments, and questions.

Shakespeare’s Celebration of Chaos

Shakespeare seems to revel in this infinite variety, recognizing it as both intoxicating and destructive. Cleopatra’s allure lies in her refusal to be compressed into a simple narrative, and Antony’s tragedy lies in his inability to navigate that chaos. Together, they create a story that mirrors life itself: messy, contradictory, and ultimately unresolved.

Infinite Variety and Narrative Illusion

Cleopatra’s infinite variety is the antithesis of narrative. She resists the Rosebud, the Yellowstone, the neat tie that brings clarity. Yet, like Rosebud, her variety is what we cling to—it’s the illusion of infinite possibility that keeps us engaged, even as it slips through our grasp. Shakespeare understood that this tension—the pull between chaos and order, infinity and comprehension—is what makes stories resonate. Cleopatra is the Dionysian deluge personified, and Antony’s failure to compress her into a narrative is the tragedy we all share.

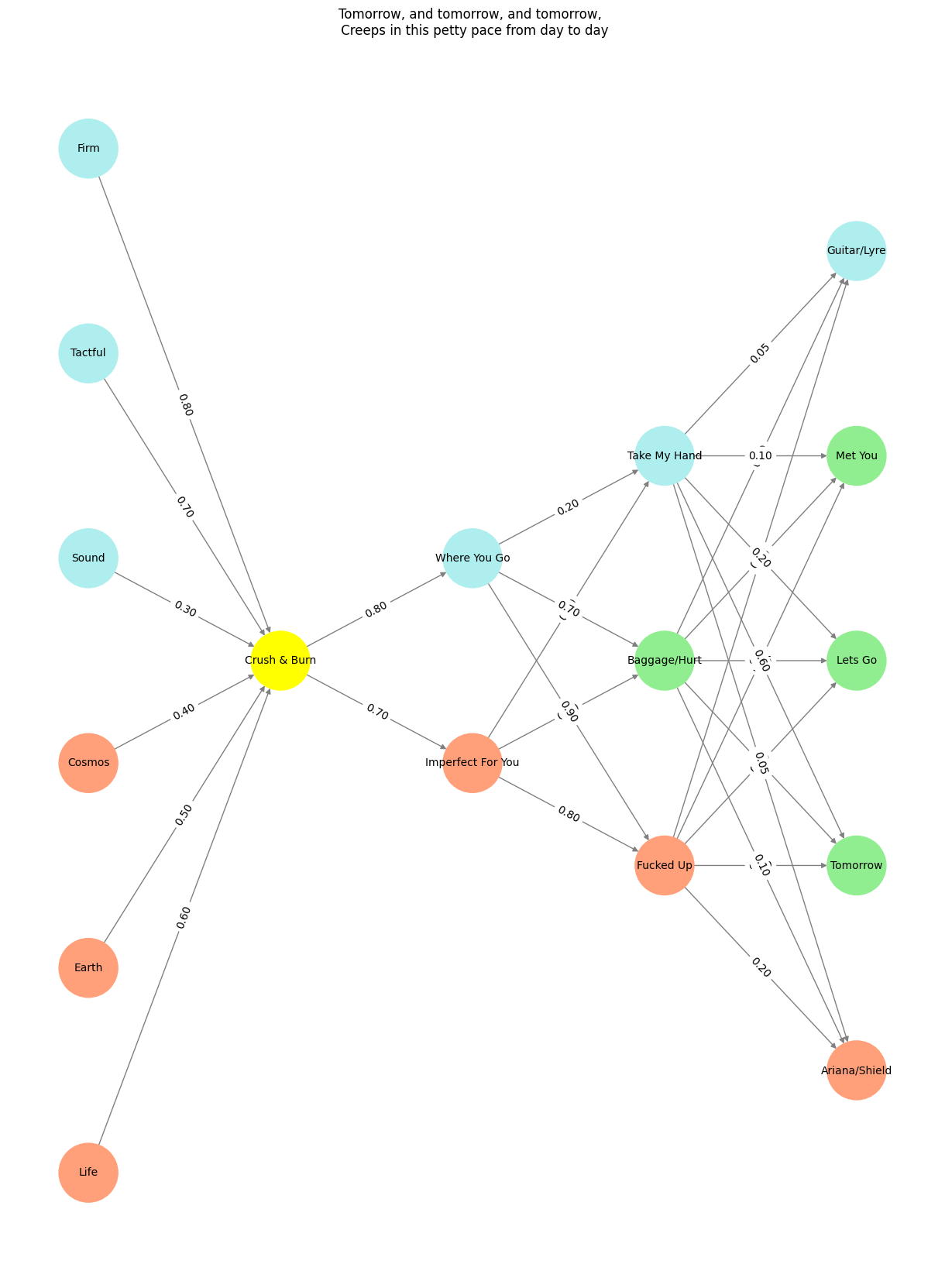

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone': ['Crush & Burn'],
        'Input': ['Imperfect For You', 'Where You Go'],
        'Hidden': ['Fucked Up', 'Baggage/Hurt', 'Take My Hand'],
        'Output': ['Ariana/Shield', 'Tomorrow', 'Lets Go', 'Met You', 'Guitar/Lyre'],
    }

# Define weights for the connections (rows = source nodes, columns = target nodes)
def define_weights():
    return {
        # One row per Pre-Input node (six nodes, six rows)
        'Pre-Input-Yellowstone': np.array([[0.6], [0.5], [0.4], [0.3], [0.7], [0.8]]),
        'Yellowstone-Input': np.array([[0.7, 0.8]]),
        'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
        'Hidden-Output': np.array([
            [0.2, 0.8, 0.1, 0.05, 0.2],
            [0.1, 0.9, 0.05, 0.05, 0.1],
            [0.05, 0.6, 0.2, 0.1, 0.05],
        ]),
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Crush & Burn':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Sound', 'Tactful', 'Firm']:
        return 'paleturquoise'
    elif layer == 'Input' and node == 'Where You Go':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Take My Hand':
            return 'paleturquoise'
        elif node == 'Baggage/Hurt':
            return 'lightgreen'
        elif node == 'Fucked Up':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Guitar/Lyre':
            return 'paleturquoise'
        elif node in ['Lets Go', 'Met You', 'Tomorrow']:
            return 'lightgreen'
        elif node == 'Ariana/Shield':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    weights = define_weights()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Base x-coordinate; each layer is shifted by its offset

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges and weights
    for layer_pair, weight_matrix in zip(
        [('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')],
        [weights['Pre-Input-Yellowstone'], weights['Yellowstone-Input'], weights['Input-Hidden'], weights['Hidden-Output']]
    ):
        source_layer, target_layer = layer_pair
        for i, source in enumerate(layers[source_layer]):
            for j, target in enumerate(layers[target_layer]):
                weight = weight_matrix[i, j]
                G.add_edge(source, target, weight=weight)

    # Customize edge thickness for specific relationships.
    # Note: 'Kapital' is not among the nodes defined above, so every edge gets width 1.
    edge_widths = []
    for u, v in G.edges():
        if u in layers['Hidden'] and v == 'Kapital':
            edge_widths.append(6)  # Highlight key edges
        else:
            edge_widths.append(1)

    # Draw the graph
    plt.figure(figsize=(12, 16))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=edge_widths
    )
    edge_labels = nx.get_edge_attributes(G, 'weight')
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title("Tomorrow, and tomorrow, and tomorrow, \n Creeps in this petty pace from day to day")

    # Save the figure to a file
    # plt.savefig("figures/logo.png", format="png")
    plt.show()

# Run the visualization
visualize_nn()

Fig. 23 Layer 4 (Compression) is inherently unstable because it always contains three nodes. The third node (the risk, the crowd, the potential disrupter) is never explicitly stated but is always present, complicating the gravitational dance of the two lovers. This third node must be navigated, overcome, or absorbed for the two nodes to crash and burn into one luminous Yellowstone node. Let’s refine the narrative with this crucial nuance.