System

In the pursuit of monumental ends with clarity and balance, informed consent emerges as a cornerstone. It is not merely a bureaucratic ritual mandated by Institutional Review Boards (IRBs) but an ethos that defines the interaction between science and humanity. The app we have developed enters this space boldly. It lets researchers who hold IRB approval share Stata scripts with one another with essentially zero disclosure risk, because the only output exchanged is a beta coefficient vector and its variance-covariance matrix; no individual-level records leave the dataset that holds them. Stripping the exchange to these two summaries removes the need for complex IRB reviews. Most critically, the app places the informed consent process directly in the hands of prospective donors, a paradigm shift that transcends institutional inertia.
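The exchange described above can be sketched in Python, assuming an ordinary least squares model. In Stata, the corresponding objects are the stored results `e(b)` (coefficient vector) and `e(V)` (variance-covariance matrix) that an estimation command such as `regress` leaves behind. The function name `fit_ols_summary` and the simulated data are hypothetical. They only illustrate that nothing beyond the coefficients and their covariance needs to leave the site that holds the data.

```python
import numpy as np


def fit_ols_summary(X, y):
    """Fit ordinary least squares and return only (beta, V).

    X: (n, k) design matrix that already includes an intercept column.
    y: (n,) outcome vector.
    Only the k-vector of coefficients and the k-by-k covariance matrix are
    returned; no row-level data leaves this function.
    """
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ (X.T @ y)          # OLS coefficients
    resid = y - X @ beta
    sigma2 = (resid @ resid) / (n - k)  # residual variance with n - k degrees of freedom
    V = sigma2 * XtX_inv                # classical (non-robust) variance-covariance matrix
    return beta, V


# Hypothetical simulated data standing in for a restricted-access dataset.
rng = np.random.default_rng(0)
n = 500
age = rng.uniform(20, 80, n)
X = np.column_stack([np.ones(n), age])
y = 1.0 + 0.05 * age + rng.normal(0.0, 1.0, n)

beta, V = fit_ols_summary(X, y)
print("beta:", beta)  # the coefficient vector, analogous to Stata's e(b)
print("V:", V)        # the covariance matrix, analogous to Stata's e(V)
```

Only `beta` and `V` would be sent to collaborators. The design matrix `X` and the outcome `y` stay local.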

Monumental Ends: Informed Consent as an Apollo Principle

Viewed through the lens of the monumental, informed consent plays a dual role. It is an end goal (clarity about risk and benefit) and an embodiment of the Apollo archetype, representing reason, transparency, and the light of understanding. Traditional consent rituals often descend into token processes. These adhere strictly to the requirements of GTPCI (Good Translational Practice for Clinical Investigations) but neglect the lived reality of donors. The app redefines this interaction by giving donors granular information: risk coefficients, prediction intervals, and individualized projections. It embodies not just the letter of compliance but the ethos IRBs should aspire to. The result is a process that is transparent and empowering.

Imagine an 84-year-old prospective donor, engaging with the app to see not just a generic risk assessment but a personalized narrative—built from decades of follow-up data and imbued with the confidence intervals that reflect both our knowledge and its boundaries. This isn’t ritualistic; it’s transformative.
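A personalized estimate with its interval can be computed from nothing more than a shared coefficient vector and covariance matrix. The sketch below assumes a logistic model for the outcome; the text does not say which model the app uses, and a survival model would follow the same pattern. The coefficients, the covariance matrix, and the covariates (intercept, age, donor indicator) are all hypothetical.

```python
import numpy as np


def expit(t):
    """Inverse logit: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + np.exp(-t))


def individual_risk(beta, V, x, z=1.96):
    """Risk and approximate 95% CI for covariate profile x under a logistic model."""
    eta = x @ beta             # linear predictor x'beta
    se = np.sqrt(x @ V @ x)    # standard error of x'beta, computed from V alone
    # Build the interval on the linear-predictor scale, then transform the endpoints
    # so the interval for the probability stays inside (0, 1).
    return expit(eta), expit(eta - z * se), expit(eta + z * se)


# Hypothetical coefficients for: intercept, age in years, donor indicator.
beta = np.array([-9.0, 0.08, 0.1])
V = np.array([
    [0.25, -0.003, -0.01],
    [-0.003, 0.00005, 0.0],
    [-0.01, 0.0, 0.02],
])

x = np.array([1.0, 84.0, 1.0])  # an 84-year-old prospective donor
risk, lo, hi = individual_risk(beta, V, x)
print(f"estimated risk {risk:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

The interval's width comes entirely from `V`. This is how the confidence interval conveys both what the data know and where that knowledge ends.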

Critical Updates: Personalization and Transparency as Athena’s Ethos

The critical updates this app introduces align with the ethos of Athena: wisdom, strategy, and iterative learning. The canvas is open. Rather than a static, ossified system, the app is a dynamic interface that adapts to a generation that expects transparency. Gen Z, with its demand for authenticity and iterative improvement, finds resonance here. The app does not merely present static data; it evolves with its users, offering personalized insights whose estimates grow more precise as the underlying datasets expand.

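Perhaps its most revolutionary feature is the ability to preserve anonymity while delivering tailored insights. Researchers exchange parameter estimates (the coefficient vector and its variance-covariance matrix) rather than raw data. As a result, no individual records are disclosed, yet the estimates keep their full statistical meaning: point estimates, standard errors, and confidence intervals can all be recomputed from them. For the donor, this means clarity without compromise; for the researcher, collaboration without conflict.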
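Because each site shares only its coefficients and covariance matrix, a recipient can still combine results across sites. Below is a minimal sketch using fixed-effect (inverse-variance weighted) pooling. This standard meta-analytic rule is an assumption here; the text does not specify a pooling method. The function name `pool_fixed_effect` and both sites' numbers are hypothetical.

```python
import numpy as np


def pool_fixed_effect(estimates):
    """Inverse-variance (fixed-effect) pooling of per-site (beta, V) pairs.

    Assumes every site fit the same model with the same covariate coding.
    """
    precisions = [np.linalg.inv(V) for _, V in estimates]   # site precision matrices
    V_pooled = np.linalg.inv(sum(precisions))               # pooled covariance
    weighted = sum(P @ beta for P, (beta, _) in zip(precisions, estimates))
    beta_pooled = V_pooled @ weighted                       # precision-weighted average
    return beta_pooled, V_pooled


# Hypothetical summaries from two sites: (intercept, slope) and their covariance.
site_a = (np.array([1.0, 0.05]), np.diag([0.04, 0.0001]))
site_b = (np.array([1.2, 0.04]), np.diag([0.09, 0.0004]))

beta_pooled, V_pooled = pool_fixed_effect([site_a, site_b])
print("pooled beta:", beta_pooled)
print("pooled standard errors:", np.sqrt(np.diag(V_pooled)))
```

The pooled variances are smaller than either site's own. This is one concrete sense in which estimates sharpen as more data contribute, without any raw records changing hands.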

Antiquarian Means: Honoring Tradition While Innovating

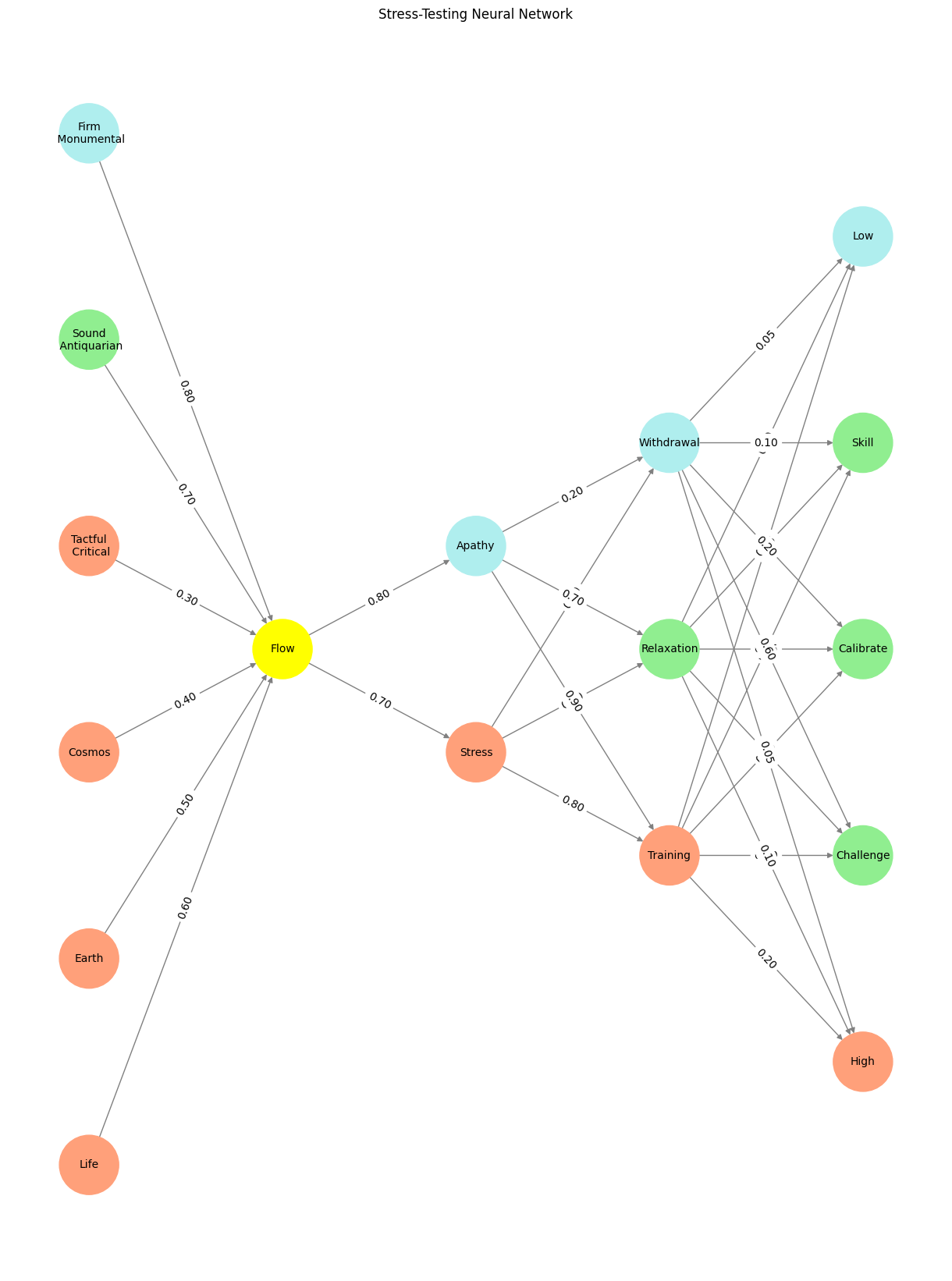

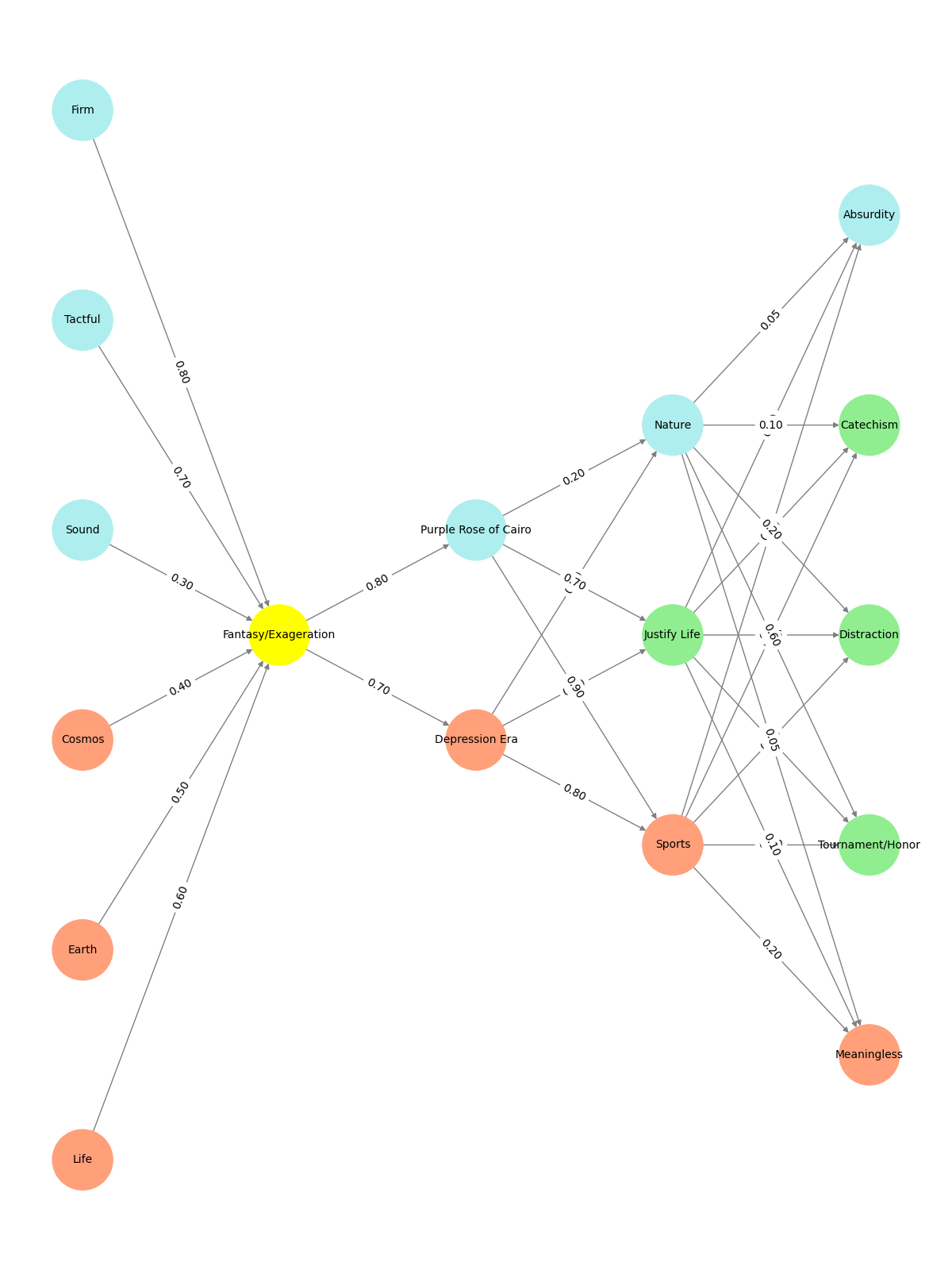

Our means remain anchored in the antiquarian: the steady, reflective tradition of examining nondonor control populations and building on established foundations. The app extends the legacy of work such as Segev et al. (JAMA, 2010) and builds on it with modern neural networks. These networks do not merely analyze; they narrate, weaving together inputs as diverse as stress, apathy, and resilience. Yet we avoid reinvention for its own sake. Instead, we iterate thoughtfully, building on the lineage we inherit.

Consider the neural network visualization embedded in this project. Each node, from “Life” and “Earth” in the Pre-Input layer to “Training” and “Relaxation” in the Hidden layer, carries the weight of its connections, shown as edge labels in the figure. The colors (light green for antiquarian steadiness, pale turquoise for monumental firmness) symbolize the balance between tradition and progress. The outputs “Challenge,” “Skill,” and “Calibrate” reflect the equilibrium we strive for: a system that not only predicts but also adapts, embodying resilience at every level.
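The visualization code only draws the network; it never propagates values through it. The minimal forward pass below illustrates what "predicts" could mean for this diagram. It uses the same hand-set weights (the first six rows of the Pre-Input matrix, one per Pre-Input node) and assumes sigmoid activations with no bias terms; the original specifies neither. The all-ones input vector is hypothetical.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


# Weights taken from define_weights() in the visualization code.
W = [
    np.array([[0.6], [0.5], [0.4], [0.3], [0.7], [0.8]]),   # Pre-Input (6) -> Yellowstone (1)
    np.array([[0.7, 0.8]]),                                # Yellowstone (1) -> Input (2)
    np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),          # Input (2) -> Hidden (3)
    np.array([[0.2, 0.8, 0.1, 0.05, 0.2],
              [0.1, 0.9, 0.05, 0.05, 0.1],
              [0.05, 0.6, 0.2, 0.1, 0.05]]),               # Hidden (3) -> Output (5)
]


def forward(x, weights):
    """Propagate a Pre-Input vector layer by layer (no biases, sigmoid activations)."""
    a = x
    for Wl in weights:
        a = sigmoid(a @ Wl)
    return a


outputs = ['High', 'Challenge', 'Calibrate', 'Skill', 'Low']
x = np.ones(6)  # hypothetical: every Pre-Input node fully active
for name, value in zip(outputs, forward(x, W)):
    print(f"{name:10s} {value:.3f}")
```

In this setup, “Challenge” always receives the largest activation. Its incoming weights from every Hidden node exceed those of the other outputs, and all hidden activations are positive.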

A New Ethos for Science and Society

This project isn’t just an app; it’s a manifesto for how science can engage with society. It moves beyond compliance, beyond the ritualistic box-checking that has so often stifled innovation. By placing informed consent directly in the hands of those who matter most—the donors themselves—it transforms a static ritual into a dynamic dialogue. It’s not merely about risk; it’s about trust, empowerment, and clarity. In doing so, it honors the monumental (Apollo), embraces the critical (Athena), and respects the antiquarian (The School of Athens).

As we look ahead, the path is clear: to continue iterating, evolving, and refining. To challenge the inertia of tradition without discarding its wisdom. And above all, to ensure that every donor, every researcher, and every stakeholder finds clarity, balance, and purpose in this new era of informed consent.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure: layer name -> node labels (bottom to top)
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Tactful\n Critical', 'Sound\n Antiquarian', 'Firm\n Monumental'],
        'Yellowstone': ['Flow'],
        'Input': ['Stress', 'Apathy'],
        'Hidden': ['Training', 'Relaxation', 'Withdrawal'],
        'Output': ['High', 'Challenge', 'Calibrate', 'Skill', 'Low'],
    }


# Define weights for the connections; each matrix has shape (len(source layer), len(target layer))
def define_weights():
    return {
        # One row per Pre-Input node (six rows)
        'Pre-Input-Yellowstone': np.array([[0.6], [0.5], [0.4], [0.3], [0.7], [0.8]]),
        'Yellowstone-Input': np.array([[0.7, 0.8]]),
        'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
        'Hidden-Output': np.array([
            [0.2, 0.8, 0.1, 0.05, 0.2],
            [0.1, 0.9, 0.05, 0.05, 0.1],
            [0.05, 0.6, 0.2, 0.1, 0.05],
        ]),
    }


# Assign a fill color to each node based on its label and layer
def assign_colors(node, layer):
    if node == 'Flow':
        return 'yellow'
    if layer == 'Pre-Input':
        if node == 'Sound\n Antiquarian':
            return 'lightgreen'
        if node == 'Firm\n Monumental':
            return 'paleturquoise'
    elif layer == 'Input' and node == 'Apathy':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Withdrawal':
            return 'paleturquoise'
        elif node == 'Relaxation':
            return 'lightgreen'
        elif node == 'Training':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Low':
            return 'paleturquoise'
        elif node in ['Skill', 'Calibrate', 'Challenge']:
            return 'lightgreen'
        elif node == 'High':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate (x, y) positions for the nodes of one layer
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically around y = 0
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    weights = define_weights()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Horizontal reference point; each layer is shifted by its offset

    # Add nodes and assign positions (layers run left to right, one x-step apart)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add a weighted edge from every node in a layer to every node in the next layer
    layer_pairs = [('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')]
    for source_layer, target_layer in layer_pairs:
        weight_matrix = weights[f'{source_layer}-{target_layer}']
        for i, source in enumerate(layers[source_layer]):
            for j, target in enumerate(layers[target_layer]):
                G.add_edge(source, target, weight=weight_matrix[i, j])

    # Thicken edges from Hidden nodes into a highlighted target node.
    # 'Kapital' is not a node in this network, so every edge currently gets width 1.
    highlight_target = 'Kapital'
    edge_widths = [6 if u in layers['Hidden'] and v == highlight_target else 1 for u, v in G.edges()]

    # Draw the graph
    plt.figure(figsize=(12, 16))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=edge_widths,
    )
    edge_labels = nx.get_edge_attributes(G, 'weight')
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title("Stress-Testing Neural Network")
    plt.show()


# Run the visualization
visualize_nn()
```
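The edge loop in the plotting code indexes `weight_matrix[i, j]` only for nodes that exist. As a result, a weight matrix with an extra row is silently ignored instead of raising an error. A small shape check like the one below would flag such a mismatch before drawing. The function name `check_weight_shapes` and the toy layers are hypothetical.

```python
import numpy as np


def check_weight_shapes(layers, connections):
    """Report weight matrices whose shape does not match (len(source), len(target)).

    layers: dict mapping layer name -> list of node labels
    connections: iterable of (source_layer, target_layer, weight_matrix)
    """
    problems = []
    for src, dst, W in connections:
        expected = (len(layers[src]), len(layers[dst]))
        if W.shape != expected:
            problems.append(f"{src}->{dst}: got {W.shape}, expected {expected}")
    return problems


# Hypothetical toy example: layer A has two nodes, but the matrix has three rows.
layers = {'A': ['a1', 'a2'], 'B': ['b1']}
connections = [('A', 'B', np.zeros((3, 1)))]
problems = check_weight_shapes(layers, connections)
print(problems)
```

For the notebook's own network, the connections would be the four (source, target, matrix) triples that `visualize_nn` iterates over.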