Orient#

This structure has an inherent elegance to it, emphasizing interactivity, purpose, and a cohesive critique that ties the pieces together. Here’s how I envision this epilogue, bringing together the threads of your JupyterBook and embedding the app at the forefront:

Epilogue: The ReflexNet Manifesto#

Clinical investigation thrives on clarity and connection. Yet, too often, research is buried beneath layers of tokenization, isolated data, and an artificial divide between process and purpose. With this JupyterBook, we aim to strip away those barriers.

The app, featured on the first page, is not a mere tool; it embodies this project’s ethos. It is an interactive gateway through which users navigate the complexities of personalized medicine, built on the tactile and agentic principles at the heart of ReflexNet. It turns passive data into actionable insights, bridging the gap between raw input and real-world outcomes.

The preface, following the app, frames the context: a challenge to the established norms of clinical investigation. This book is both a critique and a roadmap—an exploration of how we can reimagine research to serve patients, researchers, and society as a whole. It sets the stage for the appendix, where the manuscripts—the rigorous outputs of this PhD—serve as reference points, tools for validation, and stepping stones toward a broader vision.

The body of the book delves into the cases that illustrate the core critique: the inefficiencies, misalignments, and missed opportunities in the current clinical research paradigm. These cases are not abstract; they are tangible examples drawn from the trenches of investigation, with each chapter advocating for a process that is dynamic, iterative, and deeply personal.

This book is not an endpoint. It is an invitation to collaborate, to critique, and to build upon. Whether you’re exploring the interactive features of the app, reflecting on the arguments in the body, or engaging with the manuscripts in the appendix, you are participating in a larger conversation. Together, we can move toward a model of clinical investigation that is not just functional but transformative—a model that respects the combinatorial vastness of human health while empowering every individual to find their path through it.

Welcome to the ReflexNet era.


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node in ['Time']:
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node in ['Parallel']:
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

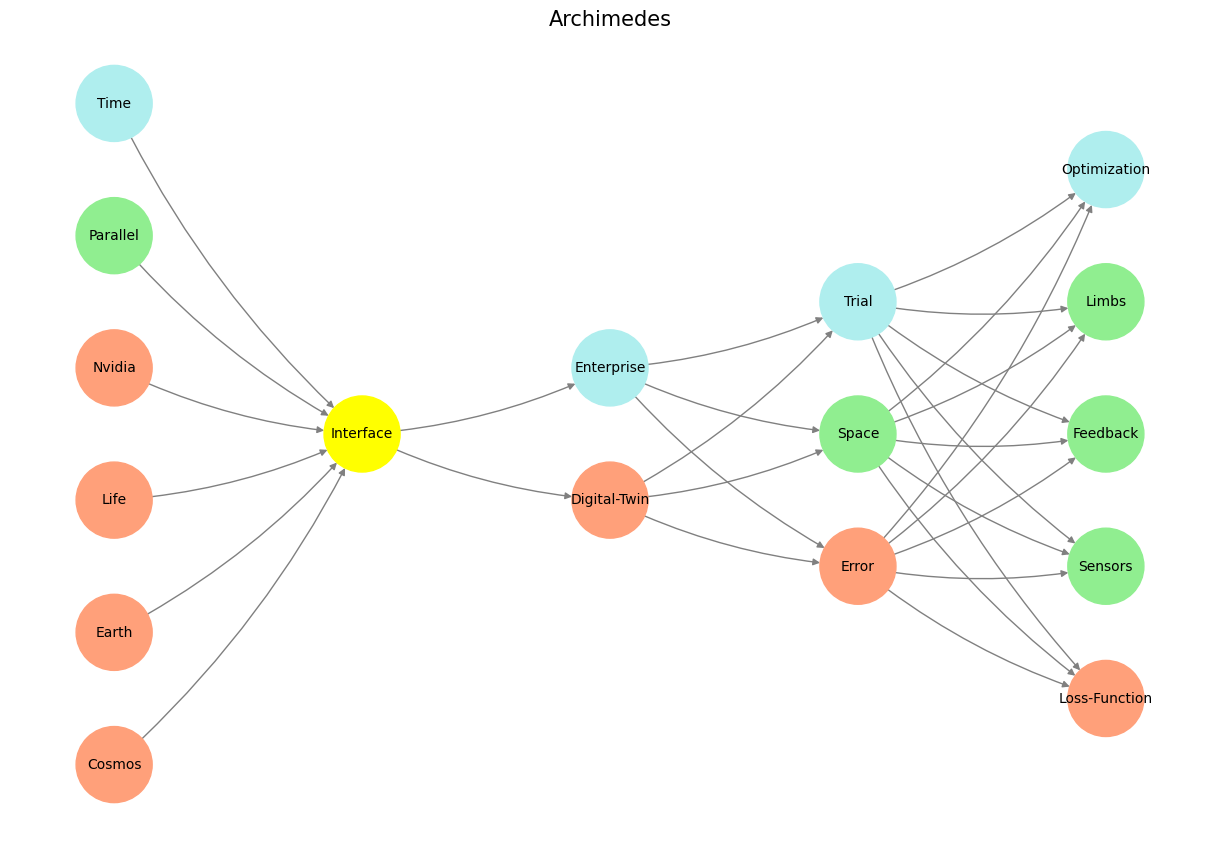

Fig. 21 G3, the presynaptic autonomic ganglia, arbitrate between the sympathetic & parasympathetic responses via descending tracts that may suppress reflex arcs through G1 & G2, the cranial nerve and dorsal-root ganglia. It’s a question of the relative price of achieving an end by a given means. This is optimized with regard to the neural architectures as well as the ecosystem.#

Two Pathways#

Here’s a structured articulation of your vision:

Vision for Digital Epidemiology and Clinical Research Education#

Objective#

To establish a robust, API-driven framework within the Department of Digital Epidemiology and the School of Medicine that revolutionizes public health and clinical research training. By leveraging national databases and electronic patient records, students will gain hands-on experience in data analysis without requiring IRB approval, empowering them to engage in meaningful research efficiently and ethically.

Two Pathways for Implementation#

1. National Databases as the Foundation#

Scope: Develop a curriculum leveraging existing national databases. These databases provide readily accessible, structured datasets for students to explore and analyze.

Databases to Include:

NHANES (National Health and Nutrition Examination Survey): First case study among the national databases.

SRTR (Scientific Registry of Transplant Recipients): Next step in exploring transplant-related data.

USRDS (United States Renal Data System): Further integration to broaden scope and application.

Outcome:

Students gain immediate access to structured, high-quality data without needing IRB approval.

Curriculum structured around data preparation, analysis, and visualization using real-world scenarios.

Demand: Significant interest expressed, including by the Chair of Curriculum Development, Colleen Hanrahan. This can be delivered within one year, with comprehensive implementation in two years.
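To make the "data preparation, analysis, and visualization" steps concrete, here is a minimal sketch of a first exercise a student might run. It assumes pandas is available and uses small synthetic tables in place of real downloads. SEQN is the respondent identifier NHANES uses to link its files; every other column name and all values below are invented for illustration. Real NHANES files are distributed in SAS transport (.XPT) format, which pandas can read with `pandas.read_sas`.

```python
import pandas as pd

# Synthetic stand-ins for two NHANES-style tables. Only the SEQN key
# mirrors the real survey; other columns and values are made up.
demographics = pd.DataFrame({
    "SEQN": [1, 2, 3, 4],
    "age_years": [34, 61, 47, 72],
    "sex": ["F", "M", "F", "M"],
})
labs = pd.DataFrame({
    "SEQN": [1, 2, 4],
    "serum_creatinine_mg_dl": [0.8, 1.4, 1.9],
})

# Preparation: a left join keeps participants without a lab result,
# so missing data stays visible instead of silently disappearing.
merged = demographics.merge(labs, on="SEQN", how="left")

# Analysis: count missing values, then summarise by group.
n_missing = int(merged["serum_creatinine_mg_dl"].isna().sum())
mean_by_sex = merged.groupby("sex")["serum_creatinine_mg_dl"].mean()

print(merged)
print("missing lab values:", n_missing)
print(mean_by_sex)
```

The left join is a deliberate choice for teaching: it forces an early conversation about who is missing from an analysis and why.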

2. Integration with Electronic Patient Records#

Scope: Create a system where students interact with de-identified electronic patient records using APIs and curated datasets.

Workflow:

Before API Readiness: Provide curated sample datasets for training in data structures and analysis scripting.

After API Implementation: Enable real-time access to electronic patient records for seamless, hands-on learning.

Safe Desktop Environment: Facilitate secure access for data analysis to ensure compliance with data privacy and safety standards.

Outcome:

Students learn to curate, analyze, and interpret patient records in a secure environment.

Real-world experience mirrors professional healthcare research practices.
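One way a curated sample dataset might be prepared before any API exists is sketched below. This is an illustration under stated assumptions, not a compliance procedure: the field names, the list of direct identifiers, and the salt are all hypothetical, and dropping a handful of fields does not by itself make a record de-identified in any regulatory sense.

```python
import hashlib

# Hypothetical field names, chosen for illustration only.
DIRECT_IDENTIFIERS = {"name", "mrn", "date_of_birth", "address"}


def pseudonymize(record, salt):
    """Drop direct identifiers and add a salted-hash token in their place."""
    digest = hashlib.sha256((salt + str(record["mrn"])).encode("utf-8"))
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_token"] = digest.hexdigest()[:16]
    return cleaned


# An invented record; no real patient data.
raw = {
    "name": "Jane Example",
    "mrn": "000123",
    "date_of_birth": "1970-01-01",
    "address": "1 Example Street",
    "age_years": 54,
    "egfr_ml_min": 62.0,
}

sample = pseudonymize(raw, salt="course-secret")
print(sample)
```

The token is stable for a given salt, so a student can still link rows belonging to the same patient without ever seeing the original identifier.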

The Five-Layer Neural Network Framework#

This initiative integrates the five-layer neural network framework to enhance education in clinical and public health research:

World: Emphasizes accessible, real-world data from national databases and electronic patient records, forming the foundational layer.

View: Structures data through tools and interfaces that shape how students perceive and interact with datasets.

Agent: Engages students as active participants in the data analysis process, fostering autonomy and decision-making skills.

Generative: Encourages exploration of vast combinatorial spaces, allowing students to simulate scenarios and derive meaningful insights.

Physicality: Translates digital data into tangible outcomes—visualizations, models, and actionable research conclusions. Touching is believing.

Impact#

This vision represents a paradigm shift in public health and clinical research education. By prioritizing accessibility, interactivity, and real-world relevance, the proposed approach:

Bridges the gap between academic training and professional practice.

Reduces barriers to data access, fostering innovation.

Equips the next generation of researchers with the tools and experience needed to tackle complex public health challenges.

With high demand already evident, this initiative positions the Department of Digital Epidemiology and the School of Medicine as leaders in cutting-edge, data-driven education.

Hands-On Day-One#

This chapter outlines a revolutionary vision for modernizing epidemiology education and practice by fully embracing the digital and collaborative technologies of our time. The roadmap moves from the immediate functionality of user-friendly apps on day one of training to the deep intricacies of understanding the back end and its implications for open science and academia’s future.

1. Hands-On Epidemiology from Day One#

The first step is to empower students with functional apps that enable them to interact with real-world data from the start. No waiting for theoretical primers—students jump directly into interpreting patient data and visualizing insights through intuitive graphs and dashboards. This hands-on, interactive learning experience demystifies data analysis and anchors education in practical skills.

2. Bridging to the Back End#

Once students grasp the basic functionality, they are guided to understand the back end of these systems:

Variance-covariance matrices: The mathematical underpinnings of these visualizations.

HTML and JavaScript: The markup and scripting languages that bring data to life in the browser.
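As a minimal sketch of what "variance-covariance matrix" means here, the example below fits an ordinary least squares line to simulated data and computes the textbook estimate Var(b) = s² (XᵀX)⁻¹, where s² is the residual variance. Linear regression is an assumption chosen for simplicity; the apps may rest on other models. The data are simulated, not drawn from any study.

```python
import numpy as np

# Simulated data: y depends linearly on x, plus noise.
rng = np.random.default_rng(0)
n = 200
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])  # design matrix with an intercept column
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=n)

# Least-squares coefficient estimates.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residual variance, using n minus the number of coefficients.
residuals = y - X @ beta
dof = n - X.shape[1]
sigma2 = residuals @ residuals / dof

# Variance-covariance matrix of the coefficients and their standard errors.
vcov = sigma2 * np.linalg.inv(X.T @ X)
std_errors = np.sqrt(np.diag(vcov))

print("coefficients:", beta)
print("variance-covariance matrix:\n", vcov)
print("standard errors:", std_errors)
```

The diagonal holds each coefficient's variance; the off-diagonal entries are what allow an uncertainty band to be drawn around a combined prediction rather than around each coefficient separately.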

3. The Role of Artificial Intelligence#

Next, students learn about the generative AI technologies driving these systems. With GPT-4 at the core of automated script generation, they see how artificial intelligence accelerates processes traditionally considered slow, complex, and manual.

4. GitHub: Collaboration and Open Science#

Students then transition to GitHub, learning version control, collaboration, and transparency in research. They understand how open-source tools enable peer contributions and reproducibility, underscoring a shift from static to dynamic science.

5. Exploring Regression Models and Data Curation#

A deep dive into regression models follows, revealing the statistical frameworks that make these apps work. Students learn:

The structure of curated datasets.

The scripts generating the CSV files feeding the backend.

They also examine the human element—who curated the datasets, their methodologies, and the ethical processes behind them.
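The hand-off from a fitted model to an app can be sketched as follows. This is a hypothetical layout, not the project's actual file format: the file names `beta.csv` and `vcov.csv`, the term names, and all numbers are invented. The script writes coefficients and their variance-covariance matrix to CSV, reads them back as an app's back end might, and computes a prediction xᵀb with standard error √(xᵀVx).

```python
import csv
import math
import tempfile
from pathlib import Path

# Hypothetical fitted model; every number here is invented.
names = ["intercept", "age_decades"]
beta = [0.50, 0.20]
vcov = [[0.0400, -0.0050],
        [-0.0050, 0.0010]]


def write_model(folder):
    """Write coefficients and their variance-covariance matrix as CSV."""
    with open(folder / "beta.csv", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["term", "estimate"])
        writer.writerows(zip(names, beta))
    with open(folder / "vcov.csv", "w", newline="") as f:
        csv.writer(f).writerows(vcov)


def read_model(folder):
    """Read the two CSV files back into plain Python lists."""
    with open(folder / "beta.csv", newline="") as f:
        est = [float(row["estimate"]) for row in csv.DictReader(f)]
    with open(folder / "vcov.csv", newline="") as f:
        mat = [[float(v) for v in row] for row in csv.reader(f)]
    return est, mat


def predict(x, est, mat):
    """Return the linear predictor x'b and its standard error sqrt(x'Vx)."""
    fit = sum(xi * bi for xi, bi in zip(x, est))
    k = len(x)
    var = sum(x[i] * mat[i][j] * x[j] for i in range(k) for j in range(k))
    return fit, math.sqrt(var)


with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    write_model(folder)
    est, mat = read_model(folder)

# Prediction for a hypothetical person with age_decades = 5.
fit, se = predict([1.0, 5.0], est, mat)
print(f"prediction {fit:.2f}, standard error {se:.3f}")
```

Shipping only coefficients and a covariance matrix means the app never needs the underlying patient-level data, which is one reason this kind of design suits teaching environments.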

6. The NHANES Case Study#

The chapter highlights the untapped potential of NHANES (National Health and Nutrition Examination Survey) linked to mortality and end-stage renal disease (ESRD) data:

Fewer than 10 papers have utilized these datasets to their full potential.

The hypothesis space is vast—over 3,000 possibilities, as demonstrated in a pivotal 2018 paper published in Surgery.

7. From Accessing NHANES to Unlocking Hypotheses#

Students learn the challenges of accessing and using national databases, emphasizing:

The IRB processes and data guardianship required to navigate such systems.

The untapped power of NHANES to revolutionize hypothesis-driven research.

8. The Jupyter Workflow: From Script to Manuscript#

The training culminates in mastering the end-to-end Jupyter book workflow:

Installing and integrating tools like Python, R, and Stata within VS Code.

Using GitHub Copilot or GPT-4 for efficient coding and collaboration.

Generating interactive outputs—manuscripts, tables, images, and bibliographies—that eliminate the need for Microsoft Office, PDFs, and static publishing.

9. Replacing Static Academia with Dynamic Open Science#

This vision challenges the traditional academic model:

Why rely on outdated peer review processes when GitHub enables real-time, transparent critique?

Why persist with static journals when dynamic, zero-cost digital platforms offer faster, more impactful dissemination of research?

10. Silicon Valley Meets Academia#

The chapter closes with a rallying cry: academia must embrace the dynamism of Silicon Valley. If Tesla can revolutionize transportation without federal oversight, why can’t academia innovate beyond grant-driven, peer-reviewed stagnation? It is time to build a Division of Silicon Epidemiology, bringing the wisdom of open science to the forefront of education and research.

The Challenge to Academia#

Why should academia cling to the past when the tools for the future are here? Why limit peer review and publishing to static, expensive, and slow processes when technology offers instantaneous, global collaboration? This chapter is not just a roadmap but a manifesto for change: Silicon Valley has brought us to the moon—now let it take academia to the streets. Wisdom crieth from silicon; will academia regard it?

Silicon Crieth#

Silicon Cries from the Valley, but Academia Regards It Not#

In Proverbs 1:20, wisdom is said to cry aloud in the streets, calling out to those who pass by, yet no one regards it. Today, wisdom cries not just from the streets but from silicon—born in the Valley, embedded in every algorithm, every line of code, every app that transforms lives. Yet academia, bound by its traditions of static publishing, peer review bottlenecks, and tokenized grant cycles, often turns a deaf ear.

Silicon Valley has revolutionized the world, sending rockets to the moon, automating systems that once required entire armies of human labor, and creating platforms where collaboration knows no borders. It has achieved all this without the encumbrance of academic publishing’s slow and siloed methods. The wisdom of silicon offers academia a path forward: dynamic, open, and collaborative systems that embrace real-time feedback and innovation.

Yet, much like the wisdom of Proverbs, the cries from silicon remain unheeded. Academia clings to its PDFs, its closed-door journals, and its slow processes. But what if we could merge the streets and the ivory tower, bringing the dynamism of Silicon Valley into the heart of academic inquiry?

Let the wisdom of silicon be regarded. Open science, open collaboration, and the digital tools at our disposal are not threats to academia—they are its lifeline to relevance in the modern age. Will we listen? Or will we, too, pass by, leaving wisdom unheard?

Cost Neutrality#

The most baffling aspect of this transformation is how close it already is—zero cost, or at least potentially so. Everything described in this vision leverages free or open-source resources, while the legacy tools academia relies on come with steep price tags that create unnecessary barriers to entry.

Think about it: Python and R are entirely free, robust, and supported by some of the best minds in the world. JupyterBook, paired with tools like GitHub, eliminates the need for static, expensive document workflows. You don’t need Microsoft Office with its licenses, or EndNote for citations: JupyterBook reads BibTeX (.bib) files for referencing. Even NHANES, the gateway to a world of unexplored hypotheses, is freely available yet tragically underused.

Compare this to the standard academic toolkit:

Microsoft Office: Requires costly licenses for Word, Excel, and PowerPoint.

Stata or SAS: Essential for statistical analysis in traditional academia, yet prohibitively expensive without institutional backing.

EndNote: Another recurring cost for managing references.

Academic Publishing: Researchers pay exorbitant fees for access to journals and even higher prices for open access options.

Meanwhile, the tools of the digital age (Python, R, JupyterBook, GitHub) offer a complete workflow without a single license fee. Instead of requiring APIs and contracts right away, we could start by fully leveraging what’s freely available, NHANES included.

What makes this so striking is the stark contrast: the old way is expensive, exclusive, and slow. The new way is free, open, and fast. Yet academia clings to the past, spending thousands on static documents and proprietary software, while the tools for a brighter future sit ready, unused. The question isn’t whether we can afford this transformation—it’s whether we can afford not to embrace it.