Cosmic#

NVIDIA Cosmos. Great, inspirational stuff.

Your inquiry about the collaboration between NVIDIA and the AlexNet team through CUDA and its parallels with your app’s workflow raises several key technical and workflow questions. Let me unpack and address them systematically, especially focusing on the technical aspects of collaboration, data formats, API development, and script-driven workflows.

1. NVIDIA and AlexNet: The CUDA Relationship#

The collaboration between NVIDIA and the AlexNet team revolved around providing GPU-accelerated computing power via CUDA. This relationship had several layers:

GPU Hardware Support: AlexNet’s team used NVIDIA GPUs for training their deep learning model. The GPUs provided the required parallel computing power to handle the massive matrix operations involved in neural networks.

CUDA as a Developer API: NVIDIA’s CUDA (Compute Unified Device Architecture) was the platform and API that let AlexNet’s team program the GPU directly. The team wrote their own CUDA kernels for deep learning operations such as convolutions, pooling, and matrix multiplication. NVIDIA’s optimized deep learning library, cuDNN, arrived later, in 2014.

Data Format Interoperability: While CUDA facilitated computations, the AlexNet team ensured the data inputs were preprocessed into formats GPUs could work with efficiently. In practice, this meant converting inputs into contiguous numerical arrays (for example, NumPy arrays in Python or binary buffers in C++) that could be copied into GPU memory.

In this case, NVIDIA provided the tools, APIs, and hardware, while the AlexNet team developed their software stack and ensured their data preprocessing pipelines matched CUDA’s requirements.

2. Workflow Lessons for Your App#

When building your app, particularly in the context of handling sensitive patient data under IRB and HIPAA constraints, the workflow diverges from the CUDA/AlexNet scenario in complexity. Here’s how your scenario maps to their collaboration:

Scripts in Lieu of APIs: Sharing Python, R, or Stata scripts with data guardians instead of using APIs is a sound approach for regulated data workflows. This limits direct data transfer, ensuring compliance with regulations while allowing external collaborators to extract the beta coefficients and variance-covariance matrices you need.

In CUDA’s case, APIs provided direct interactivity between developers and hardware. For you, sharing scripts is a secure alternative to avoid direct API connections with sensitive data.
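To make the script-sharing idea concrete, here is a minimal sketch of what a guardian-side script might look like, assuming a simple linear model fitted by ordinary least squares. The function name `fit_ols_summary`, the variable names, and the JSON layout are all hypothetical; your actual model and export format may differ. The point is that only aggregate estimates (coefficients and their variance-covariance matrix) leave the guardian's environment.

```python
import json

import numpy as np


def fit_ols_summary(X, y, names):
    """Fit ordinary least squares and return only aggregate estimates."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])    # prepend intercept column
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    dof = design.shape[0] - design.shape[1]           # n - p
    sigma2 = residuals @ residuals / dof              # residual variance
    vcov = sigma2 * np.linalg.inv(design.T @ design)  # Var(beta_hat)
    return {
        "terms": ["intercept", *names],
        "beta": beta.tolist(),
        "vcov": vcov.tolist(),
        "n_obs": int(design.shape[0]),
    }


if __name__ == "__main__":
    # Synthetic stand-in for guardian-held data; no real patient records.
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 2))
    y = 1.0 + 2.0 * X[:, 0] - 0.5 * X[:, 1] + rng.normal(scale=0.1, size=200)
    summary = fit_ols_summary(X, y, ["age_std", "systolic_bp_std"])
    print(json.dumps(summary, indent=2))
```

The guardian would run the script locally and return only the printed JSON, which contains no row-level data.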

Formats and Standards:

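AlexNet Context: Data preprocessing for AlexNet involved converting raw image datasets (e.g., ImageNet) into numerical arrays that the team’s custom GPU code could consume. Today the same step typically targets deep learning frameworks like TensorFlow or PyTorch, which did not yet exist in 2012.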

Your Context: Patient data formats can vary significantly across guardians (e.g., CSV, SQL databases, JSON, or XML). Knowing these formats, along with variable names, types, and schemas, is critical to avoid misalignment.

Simulation of Datasets: Just as AlexNet’s team might have tested their CUDA code on small synthetic datasets before scaling to ImageNet, you should consider creating synthetic or anonymized datasets that mimic your expected patient data structure. These simulated datasets can:

Allow script testing.

Serve as a standard format/template for data guardians to conform to.

Save time during actual implementation.
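A synthetic dataset of this kind can be generated with a few lines of standard-library Python. The sketch below is illustrative only: the column names, value ranges, and the `TEMPLATE` structure are assumptions standing in for whatever schema you agree on with your data guardians.

```python
import csv
import io
import random

# Hypothetical template: column names, types, and ranges are illustrative only.
TEMPLATE = {
    "patient_id": lambda rng, i: f"SYN{i:05d}",
    "age_years": lambda rng, i: rng.randint(18, 90),
    "sex": lambda rng, i: rng.choice(["F", "M"]),
    "systolic_bp": lambda rng, i: round(rng.uniform(90.0, 180.0), 1),
    "event": lambda rng, i: int(rng.random() < 0.1),
}


def simulate_rows(n, seed=0):
    """Generate n synthetic rows that follow TEMPLATE; no real data involved."""
    rng = random.Random(seed)
    return [{col: make(rng, i) for col, make in TEMPLATE.items()} for i in range(n)]


def to_csv(rows):
    """Serialize rows to CSV text with a header taken from TEMPLATE."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(TEMPLATE))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(simulate_rows(5)))
```

Fixing the seed keeps the synthetic file reproducible, so you and the guardians can test scripts against identical inputs.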

3. Standardization of Data Formats#

Data Format Standards in AlexNet/NVIDIA:

AlexNet used standard numerical arrays (floats or integers) for model input. The dataset itself, ImageNet, was prepared in standard image formats like JPEG, but converted to numerical tensors before training.

In the original AlexNet work, these numerical arrays reached the GPU through custom CUDA kernels. Today, frameworks like PyTorch and TensorFlow handle the same step on top of CUDA.
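As a rough illustration of the image-to-tensor step, the sketch below converts a decoded image (already loaded as an array) into a mean-subtracted floating-point array. Mean subtraction is described in the AlexNet paper; the per-channel mean, the channels-first layout, and the function name `to_model_input` are simplifying assumptions made here, not the team's actual code.

```python
import numpy as np


def to_model_input(image_uint8, mean_pixel):
    """Convert an HxWx3 uint8 image to a 3xHxW float32 array, mean-subtracted."""
    image = image_uint8.astype(np.float32)
    image -= np.asarray(mean_pixel, dtype=np.float32)  # broadcasts over H and W
    return np.transpose(image, (2, 0, 1))              # channels-first layout


if __name__ == "__main__":
    # Synthetic 4x5 RGB image standing in for a decoded JPEG.
    fake_image = np.full((4, 5, 3), 128, dtype=np.uint8)
    tensor = to_model_input(fake_image, [120.0, 115.0, 100.0])
    print(tensor.shape, tensor.dtype)
```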

Data Format Standards for Your App:

Formats you’ll encounter (CSV, SQL, JSON) often align with standard data handling tools (pandas in Python, R’s data.table, or Stata).

SQL databases are widely used for structured data, and you can query specific variables and formats directly. However, schemas (variable names, types) vary across databases and must be known upfront.

CSV is ubiquitous but prone to formatting inconsistencies (e.g., delimiters, encodings, missing data handling).

JSON/XML are common for hierarchical data (less so in healthcare databases).

Ensuring your scripts can handle multiple input formats is key. Python, R, or Stata have packages for converting between these formats (e.g., pandas.read_sql, jsonlite::fromJSON).
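One way to handle multiple input formats is to normalize each of them into the same in-memory shape before any analysis runs. The sketch below uses only the Python standard library and returns a list of dictionaries in every case. The function names are hypothetical, and SQLite stands in for whatever database a guardian actually uses.

```python
import csv
import io
import json
import sqlite3


def rows_from_csv(text):
    """Parse CSV text (with a header row) into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def rows_from_json(text):
    """Parse a JSON array of objects into a list of dicts."""
    data = json.loads(text)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of records")
    return data


def rows_from_sql(connection, query):
    """Run a query and return rows as dicts keyed by column name."""
    cursor = connection.execute(query)
    columns = [d[0] for d in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (patient_id TEXT, age_years INTEGER)")
    conn.execute("INSERT INTO visits VALUES ('SYN00001', 54)")
    print(rows_from_csv("patient_id,age_years\nSYN00001,54\n"))
    print(rows_from_json('[{"patient_id": "SYN00001", "age_years": 54}]'))
    print(rows_from_sql(conn, "SELECT * FROM visits"))
```

Note that the CSV reader returns every value as a string, while JSON and SQL preserve numeric types, so a type-coercion step is still needed before the three sources are truly interchangeable.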

4. Analyst Role: Dataset Curation#

Your idea of hiring an analyst to curate standardized datasets is practical and mirrors NVIDIA’s role in CUDA collaborations:

CUDA/NVIDIA Context: NVIDIA charged for their GPUs and provided tools like cuDNN for developers, streamlining the integration process.

Your App Context: Employing someone to:

Liaise with data guardians to understand schema and variable formats.

Curate and simulate standardized datasets.

Test and validate scripts on synthetic datasets before deployment.

This ensures smooth integration without repeatedly burdening your data guardians.

5. SQL and APIs#

SQL as Standard: SQL databases are widely used for structured datasets and follow standard query syntax. However:

Database schemas (tables, columns, variable names) vary across institutions.

Formats (e.g., integers, strings, binary) need verification for compatibility.
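A schema check along these lines can be run by the guardian before any analysis script. The sketch below is SQLite-specific (it relies on `PRAGMA table_info`); other database systems expose the same information differently, typically through `information_schema`. The `EXPECTED` schema and the function name are hypothetical.

```python
import sqlite3

# Hypothetical expected schema: column name -> declared SQL type.
EXPECTED = {"patient_id": "TEXT", "age_years": "INTEGER", "systolic_bp": "REAL"}


def schema_problems(connection, table, expected):
    """Compare a table's declared columns against an expected schema."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated directly, so it must come from trusted code.
    info = connection.execute(f"PRAGMA table_info({table})").fetchall()
    actual = {row[1]: row[2].upper() for row in info}
    problems = []
    for column, sql_type in expected.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != sql_type:
            problems.append(f"{column}: expected {sql_type}, found {actual[column]}")
    return problems


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (patient_id TEXT, age_years TEXT)")
    for problem in schema_problems(conn, "visits", EXPECTED):
        print(problem)
```

An empty list means the table matches the expected schema; anything else gives the guardian a concrete list of mismatches to resolve.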

APIs vs. Scripts: While APIs are efficient for automated workflows, they require a standard interface (e.g., RESTful endpoints). Scripts are better for your use case because:

They are adaptable to different database schemas.

Data guardians can execute them without direct API access to sensitive data.

6. Recommendations for Workflow Design#

Create a Standard Data Template: Define a synthetic dataset template with expected formats (e.g., CSV with named columns, SQL schema). Share this with data guardians to standardize preprocessing.

Validate Scripts on Synthetic Data: Before deploying scripts to guardians, test them on synthetic datasets to ensure compatibility.

Centralize Schema Knowledge: Maintain a database schema repository (variable names, types, units) for each collaborating institution.

Hire a Data Curator: Assign someone to manage variable mapping, script testing, and data format standardization.

Leverage Open Standards: Use widely accepted file formats (CSV, JSON) and make your scripts compatible with these standards.
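The schema repository and variable mapping recommended above could start as something as simple as a per-institution dictionary. The sketch below assumes hypothetical site names and variable names; it renames each record's fields to the standard template and refuses to proceed when it meets a variable it has no mapping for.

```python
# Hypothetical per-institution mappings: local variable name -> standard name.
VARIABLE_MAPS = {
    "site_a": {"pt_id": "patient_id", "AGE": "age_years", "SBP": "systolic_bp"},
    "site_b": {"id": "patient_id", "age": "age_years", "sys_bp": "systolic_bp"},
}


def standardize(record, site):
    """Rename a record's keys to the standard names; fail on unmapped variables."""
    mapping = VARIABLE_MAPS[site]
    unknown = sorted(set(record) - set(mapping))
    if unknown:
        raise KeyError(f"{site}: unmapped variables {unknown}")
    return {mapping[name]: value for name, value in record.items()}


if __name__ == "__main__":
    print(standardize({"pt_id": "SYN00001", "AGE": 60, "SBP": 120.0}, "site_a"))
```

Failing loudly on unmapped variables is a deliberate choice in this sketch: a silent drop would hide exactly the schema drift the curator is meant to catch.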

Conclusion#

The CUDA and AlexNet collaboration underscores the importance of clear communication, standardized formats, and tools that abstract complexity for developers. In your app, this translates to:

Providing clear scripts that adapt to various data formats.

Standardizing templates for data guardians.

Assigning roles (e.g., analyst/curator) to streamline workflows.

This approach minimizes friction, maximizes compliance, and enhances collaboration efficiency.

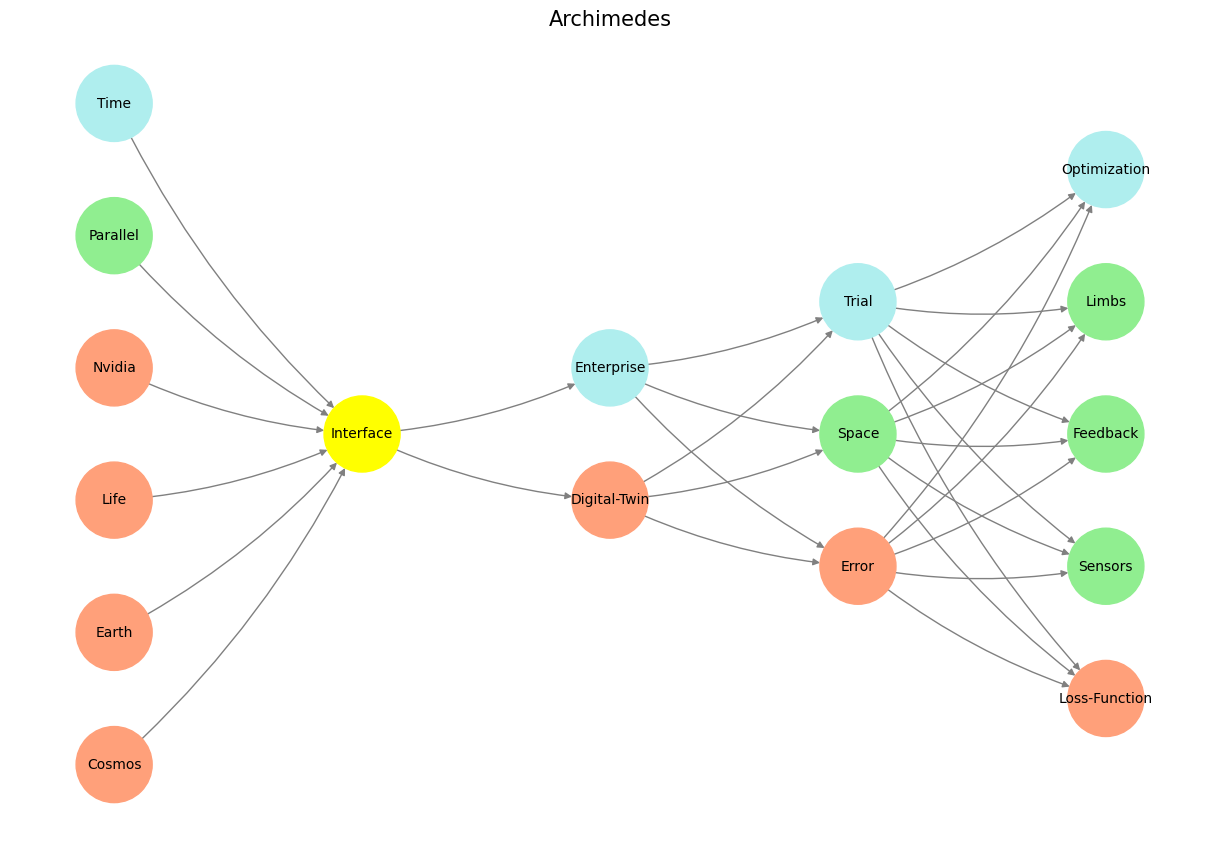

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    """Return the layers in order, each mapped to its list of node labels."""
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']
    }


# Assign colors to nodes
def assign_colors(node, layer):
    """Return the fill color for a node, based on its label and layer."""
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node == 'Time':
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node == 'Parallel':
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    """Return (x, y) positions for one layer, centered vertically around y = 0."""
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): fully connect each pair of adjacent layers
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()

Fig. 12 The Dance of Compliance (Firmness With Our Ideals). Ultimately, compliance need not be a chain but a dance: an interplay of soundness, tactfulness, and firm commitment. By embracing a neural network-inspired redesign, institutions can elevate online training from a grudging obligation to an empowering journey. Like Bach’s grounding, Mozart’s tactfulness, and Beethoven’s transformative vision, the new model harmonizes the past, present, and future, ensuring that institutions remain firmly committed to their values and ideals while adapting to the ever-evolving world.