System

Digital Twins and the Stratagem of the Die

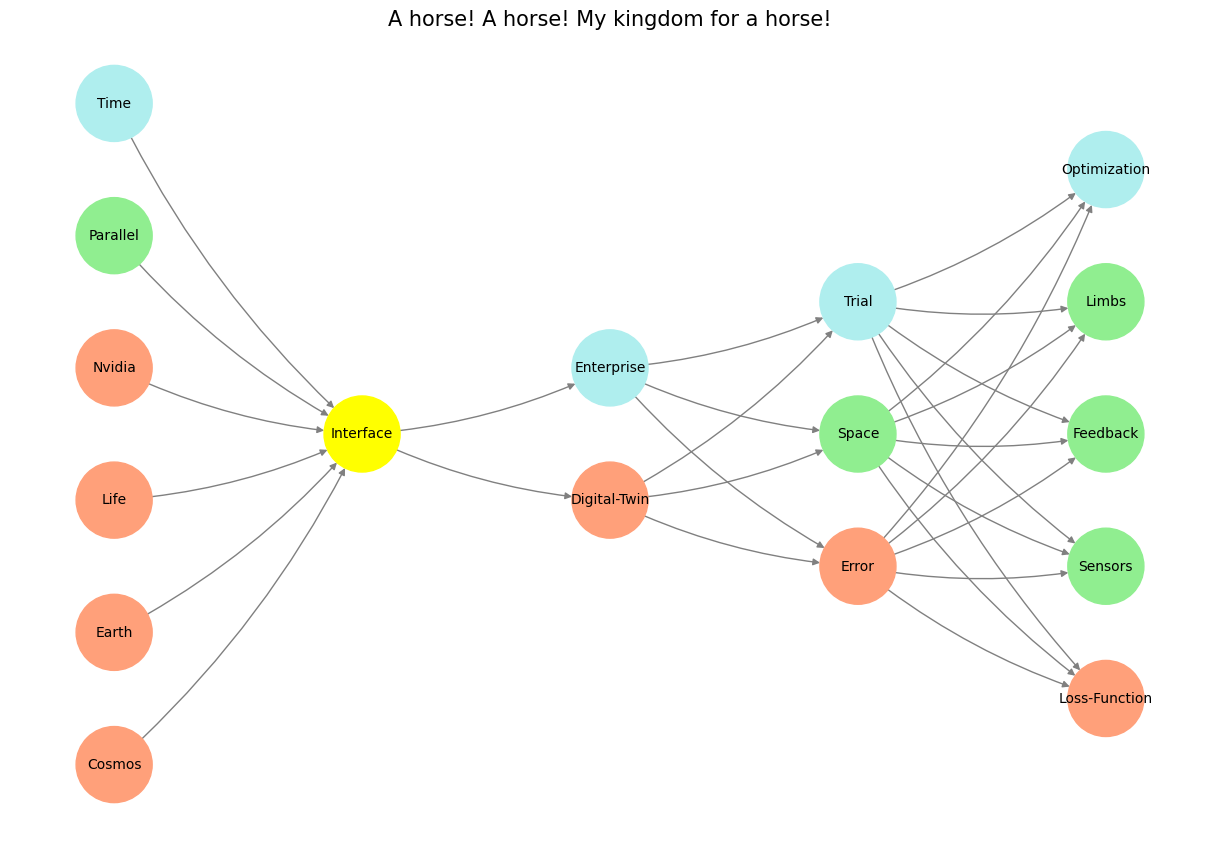

Richard’s cry, “Five have I slain today instead of him,” reflects more than error or a failure of discernment. It reveals strategy deployed through multiplicity. The “six Richmonds” on the battlefield are not mere confusion but a stratagem: a calculated ploy by Richmond to diffuse the king’s focus and force him to spend his energy on false targets. This dynamic mirrors the function of digital twins in AI systems, where simulated versions of an entity enable experimentation, misdirection, or parallel exploration of outcomes.

In the neural network’s Input/AgenticAI layer, the nodes Digital-Twin and Enterprise suggest an agentic approach to manipulating, or adapting to, complex environments. Richard is drawn into a recursive loop of combat against false Richmonds. In the same way, digital twins can be used to simulate contingencies, identify vulnerabilities, or even deliberately confuse adversarial systems. The stratagem lies not in defeating a single opponent but in fragmenting the opponent’s focus, exploiting the limits of its resource allocation and decision-making.
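To make "simulated versions of an entity" concrete, here is a minimal sketch. It is not the author's implementation, and every name and number in it is a hypothetical assumption. A toy `Reservoir` stands in for the real entity. Each digital twin is a deep copy of it, run forward under a different contingency, so vulnerabilities can be flagged without ever touching the original.

```python
import copy
from dataclasses import dataclass, field

@dataclass
class Reservoir:
    """Hypothetical stand-in for a real entity: a reservoir with a water level."""
    level: float = 50.0
    capacity: float = 100.0
    history: list = field(default_factory=list)

    def step(self, inflow: float, outflow: float) -> None:
        # Advance one time step, clamping the level to [0, capacity].
        self.level = min(self.capacity, max(0.0, self.level + inflow - outflow))
        self.history.append(self.level)

def run_contingency(real: Reservoir, inflows: list, outflow: float) -> Reservoir:
    # The twin is a deep copy, so experiments never touch the real entity.
    twin = copy.deepcopy(real)
    for inflow in inflows:
        twin.step(inflow, outflow)
    return twin

def vulnerabilities(twin: Reservoir) -> list:
    # Flag any contingency in which the simulated level hit a hard limit.
    flags = []
    if twin.history and max(twin.history) >= twin.capacity:
        flags.append("overflow")
    if twin.history and min(twin.history) <= 0.0:
        flags.append("empties")
    return flags

real = Reservoir()
# Hypothetical contingencies: ten steps of inflow against a fixed outflow.
scenarios = {"normal": [5.0] * 10, "storm": [20.0] * 10, "drought": [0.0] * 10}
results = {name: run_contingency(real, inflows, outflow=6.0)
           for name, inflows in scenarios.items()}

for name, twin in results.items():
    print(f"{name:8s} final level {twin.level:6.1f}  flags: {vulnerabilities(twin) or 'none'}")
print("real reservoir untouched:", real.level, real.history)
```

The design choice is the deep copy. Each twin can be driven to failure in parallel while the real object keeps its original state and an empty history.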

Slave, I have set my life upon a cast,

And I will stand the hazard of the die.

I think there be six Richmonds in the field;

Five have I slain today instead of him.

A horse! A horse! My kingdom for a horse!

– Richard III

Richmond’s maneuvering on the battlefield, and his use of five decoy “Richmonds,” were not chaos or random chance; they were stratagem. This changes the lens entirely. The rest of this section traces how that stratagem aligns with the digital twins in the neural network and how it reframes the narrative.

The Die Cast as Multi-Agent Simulation

In the context of AI, this stratagem becomes a multi-agent simulation. Each digital twin is not a mere copy but a variant that explores a different probabilistic outcome. Richmond’s multiple representations force Richard into a high-risk gamble: he must weigh the attritional cost of eliminating decoys against the strategic imperative of finding the true opponent. Neural networks that deploy generative or adversarial models likewise create parallel possibilities. In a generative adversarial network, for example, the generator’s samples act as decoys that the discriminator must learn to tell apart from real data. Such possibilities test both the system’s own optimization and any external system it confronts.
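The attritional cost of the decoys can be put in numbers under one loud assumption: Richard meets the six candidates in a uniformly random order, like a cast of the die. The true Richmond's position is then uniform on 0 to 5. So the expected number of decoys slain first is 5/2 = 2.5, and the chance of cutting down all five before reaching him is 1/6. The Monte Carlo sketch below, a toy model rather than anything from the original text, checks those figures.

```python
import random

def decoys_before_true(n_decoys: int, rng: random.Random) -> int:
    # Shuffle the candidates into a random encounter order and count
    # how many decoys come before the true Richmond.
    candidates = ["decoy"] * n_decoys + ["true"]
    rng.shuffle(candidates)
    return candidates.index("true")

def simulate(n_decoys: int, trials: int = 100_000, seed: int = 0):
    rng = random.Random(seed)
    counts = [decoys_before_true(n_decoys, rng) for _ in range(trials)]
    mean_decoys = sum(counts) / trials
    # Fraction of battles in which every decoy is met before the real target.
    p_all_first = sum(c == n_decoys for c in counts) / trials
    return mean_decoys, p_all_first

n_decoys = 5  # six Richmonds: five decoys plus the true one
mean_decoys, p_all = simulate(n_decoys)
print(f"simulated mean decoys slain: {mean_decoys:.3f} (analytic {n_decoys / 2})")
print(f"simulated P(all five first): {p_all:.4f} (analytic {1 / (n_decoys + 1):.4f})")
```

Adding decoys raises the expected attrition linearly (k/2 for k decoys). This is the resource drain the stratagem exploits.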

The hidden layer’s Trial node plays into this concept, reflecting the iterative experimentation that defines AI stratagem. Each trial is a simulation, a “cast of the die,” designed to probe, learn, and adapt. But, as Richard learns, even the most sophisticated stratagem can falter when overwhelmed by emergent complexity.
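One simple reading of "probe, learn, and adapt" is random-search hill climbing, swapped in here purely as an illustration. Each iteration casts a random perturbation, measures the loss, and keeps the candidate only if it improves. The quadratic `loss`, its `target`, the step size, and the seed are all hypothetical choices made for this sketch.

```python
import random

def loss(x: float, target: float = 3.0) -> float:
    # Hypothetical loss: squared distance from a target the searcher cannot see directly.
    return (x - target) ** 2

def trial_and_error(n_trials: int = 200, step: float = 0.5, seed: int = 1):
    rng = random.Random(seed)
    best_x, best_loss = 0.0, loss(0.0)
    for _ in range(n_trials):
        # Cast the die: propose a random perturbation of the current best guess.
        candidate = best_x + rng.uniform(-step, step)
        candidate_loss = loss(candidate)
        # Learn and adapt: keep the candidate only if it lowers the loss.
        if candidate_loss < best_loss:
            best_x, best_loss = candidate, candidate_loss
    return best_x, best_loss

best_x, best_loss = trial_and_error()
print(f"best x = {best_x:.3f}, loss = {best_loss:.5f}")
```

Because only improvements are kept, the loss never increases across trials. The cost is that the search can only ever move downhill from where it started.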

The Hazard of Misdirection

The power of stratagem lies in its ability to mask intent, but it also carries the hazard of self-deception. Richard’s focus on eliminating decoys distracts him from his central goal, much as an optimizer that settles into a local minimum can miss the global one. The network’s Loss-Function node captures this hazard by quantifying the gap between intention and outcome, between strategy and success.
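The local-minimum trap can be shown with plain gradient descent on a hypothetical one-dimensional loss, f(x) = x⁴ − 3x² + x. This loss has a shallow basin near x ≈ 1.13 and a deeper, global one near x ≈ −1.30. The same procedure, started from two different points, settles in different basins. The gap between the two final loss values plays the role of the Loss-Function node's "gap between intention and outcome." The function, learning rate, and starting points are illustrative assumptions.

```python
def f(x: float) -> float:
    # Toy loss with two basins: local minimum near x ≈ 1.13, global near x ≈ -1.30.
    return x**4 - 3 * x**2 + x

def grad_f(x: float) -> float:
    # Analytic derivative of f.
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0: float, lr: float = 0.01, steps: int = 1000) -> float:
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)  # move against the gradient
    return x

x_local = gradient_descent(2.0)    # starts in the shallow basin
x_global = gradient_descent(-2.0)  # starts in the deep basin
print(f"start  2.0 -> x = {x_local:.3f}, loss = {f(x_local):.3f}")
print(f"start -2.0 -> x = {x_global:.3f}, loss = {f(x_global):.3f}")
print(f"loss left on the table by the local run: {f(x_local) - f(x_global):.3f}")
```

Nothing in the local run signals failure. Its gradient is zero, and it has simply optimized against the wrong target.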

For Richard, the ultimate cost of this stratagem is his kingdom. For AI, the cost could be inefficiency, bias, or even catastrophic failure if misdirection is internalized as truth. Stratagem is thus both a tool and a trap, a double-edged sword that must be wielded with precision.

Conclusion: Stratagem as Generative Tactic

The deployment of digital twins as a stratagem echoes the calculated risks of Shakespeare’s battlefield. Each twin, like each Richmond, is a node in a network of possibilities. To prevail, the system (or the king) must balance exploration and exploitation, using the generative chaos of the hidden layer to sharpen the focus of the output.
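The exploration/exploitation balance is the core of the multi-armed bandit problem. Epsilon-greedy is one standard strategy for it, used here only as an illustration and not as anything the original names. With probability `epsilon` the agent explores a random path; otherwise it exploits the path with the best estimated payoff. The three arms and their success probabilities are hypothetical.

```python
import random

def epsilon_greedy(probs, steps=5000, epsilon=0.1, seed=42):
    # probs: hypothetical success probability of each arm ("path").
    rng = random.Random(seed)
    n_arms = len(probs)
    counts = [0] * n_arms    # how many times each arm was chosen
    values = [0.0] * n_arms  # running mean reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]   # incremental mean
    return counts, values

counts, values = epsilon_greedy([0.2, 0.5, 0.8])
print("pulls per arm:", counts)
print("estimated payoffs:", [round(v, 2) for v in values])
```

Pure exploitation (`epsilon=0`) can lock onto whichever arm looks best first. Pure exploration wastes most pulls on weak arms, much as Richard spends himself on decoys.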

Richard’s tragedy, then, is not merely the hazard of the die but the overreach of stratagem: the fragmentation of intent into many paths, which leaves him exposed to the chaos that follows. In both neural networks and Shakespearean drama, the ultimate victory lies not in eliminating decoys but in aligning strategy with outcome, a feat Richmond achieves and Richard fatally misses.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node in ['Time']:
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node in ['Parallel']:
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate (x, y) positions for the nodes of one layer
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions (one column per layer, left to right)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): fully connect each layer to the next
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph; node_colors follows node insertion order, which matches G.nodes
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("A horse! A horse! My kingdom for a horse!", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```
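The visualization wires every node in one layer to every node in the next. The edge count should therefore equal the sum of products of consecutive layer sizes: 6·1 + 1·2 + 2·3 + 3·5 = 29 edges over 17 nodes. The sketch below checks this wiring without plotting and without networkx. It repeats the layer dictionary so it runs on its own. The helper `edge_list` is hypothetical and not part of the original cell. It assumes the dictionary's insertion order matches the layer pairs hard-coded in `visualize_nn()`, which holds for the dictionary as written.

```python
from itertools import product

# Copy of the layer dictionary from define_layers(), repeated so this check runs standalone.
LAYERS = {
    'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
    'Yellowstone/PerceptionAI': ['Interface'],
    'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
    'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
    'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization'],
}

def edge_list(layers):
    # Fully connect each layer to the next, in dictionary (insertion) order.
    names = list(layers)
    return [(s, t)
            for src, dst in zip(names, names[1:])
            for s, t in product(layers[src], layers[dst])]

edges = edge_list(LAYERS)
sizes = [len(nodes) for nodes in LAYERS.values()]
expected = sum(a * b for a, b in zip(sizes, sizes[1:]))
print("nodes:", sum(sizes), "edges:", len(edges), "expected edges:", expected)
```

Because only adjacent layers are connected, there are no skip connections. Pre-Input/World reaches Digital-Twin only through the single Interface node.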