Normative

The relationship between semantics, schizophrenia, and Brodmann Area 22 in the right hemisphere opens up an intricate web of inquiry that ties language, cognition, and the nature of human consciousness together. Brodmann Area 22, whose posterior portion in the dominant hemisphere is commonly identified with Wernicke’s area, is central to decoding linguistic meaning. Its counterpart in the right hemisphere functions quite differently, processing prosody, emotional inflection, and the ineffable aspects of communication that slip beyond literal meaning. This asymmetry between the hemispheres, with the left parsing explicit meaning and the right capturing the intangible, forms the neurological basis for our ability to understand not just words, but the spirit behind them.

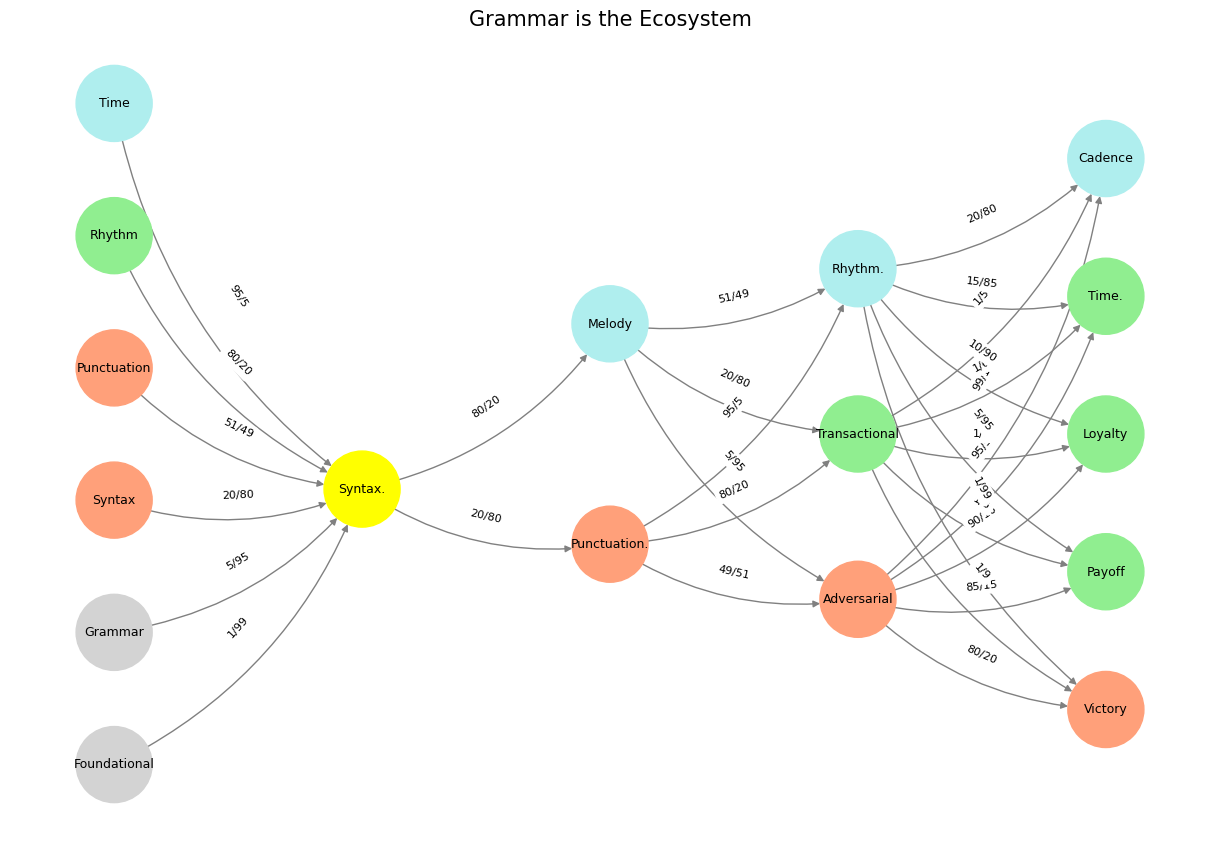

Fig. 33 Theory is Good. Convergence is perhaps the most fruitful idea when comparing Business Intelligence (BI) and Artificial Intelligence (AI), as both disciplines ultimately seek the same end: extracting meaningful patterns from vast amounts of data to drive informed decision-making. BI, rooted in structured data analysis and human-guided interpretation, refines historical trends into actionable insights, whereas AI, with its machine learning algorithms and adaptive neural networks, autonomously discovers hidden relationships and predicts future outcomes. Despite their differing origins (BI arising from statistical rigor and human oversight, AI evolving through probabilistic modeling and self-optimization), their convergence leads to a singular outcome: efficiency. Just as military strategy, economic competition, and biological evolution independently refine paths toward dominance, so too do BI and AI arrive at the same pinnacle of intelligence through distinct methodologies. Victory, whether in the marketplace or on the battlefield, always bears the same hue: optimized decision-making, where noise is silenced and clarity prevails. Language is about conveying meaning (Hinton). And meaning is emotion (yours truly). These feelings are encoded in the 17 nodes of our neural network below, and language and symbols attempt to capture these nodes and emotions, as well as the cadences (the edges connecting them). So Hinton’s dismissiveness toward Chomsky is unnecessary and perhaps harmful.

Schizophrenia disrupts this delicate equilibrium. Studies have consistently shown abnormalities in interhemispheric connectivity in schizophrenia, particularly in the corpus callosum and the superior temporal gyrus (where Brodmann Area 22 resides). Patients with schizophrenia often experience auditory hallucinations, a phenomenon intimately linked to the right hemisphere’s BA22. In a sense, the spiritual dimension of language—the realm of hidden meanings, echoes, and resonances—is precisely where schizophrenia acts most aggressively. Language becomes unmoored from its semantic anchors, generating free-floating associations, word salads, and an eerie sense that thoughts are no longer one’s own. Neuroimaging studies reveal hyperactivity in the right BA22 during auditory hallucinations, suggesting that the brain’s capacity for perceiving implied meaning—the space between words—is hijacked, birthing voices that seem both deeply personal and wholly alien.

This relates to Julian Jaynes’s radical hypothesis that early humans might have experienced a form of bicameral consciousness, where one hemisphere “spoke” to the other in hallucinated commands, interpreted as divine voices. David Horrobin’s The Madness of Adam and Eve: How Schizophrenia Shaped Humanity follows a similar thread, arguing that schizophrenia, rather than being a mere pathology, may have played a crucial role in human evolution. The capacity for metaphor, abstract thought, and deep semantic layering may have emerged precisely because our brains evolved to tolerate, and even benefit from, occasional dissociation between left and right hemisphere functions. The mystics and prophets of early societies, often described as hearing divine voices, may have represented an extreme yet essential manifestation of this cognitive architecture. Their ability to transcend the literal, to listen to what is not there, could have been an evolutionary catalyst, enabling the birth of religion, poetry, and ultimately civilization itself.

Music, in this context, becomes the most spiritual of all the arts precisely because it resides primarily in the right hemisphere, where Brodmann Area 22 operates as an organ of ineffability. Unlike language, music does not rely on explicit semantics; it operates in an abstract domain of pure pattern, resonance, and emotional charge. Neuroscientific studies show that when individuals listen to music, the right superior temporal gyrus, along with limbic structures like the amygdala and hippocampus, lights up in ways that language alone rarely activates. Music has no referent, yet it conveys meaning. This paradox makes it uniquely capable of bridging the conscious and unconscious, articulating what cannot be spoken yet is universally understood.

Schizophrenia and music share a strange proximity. Many with schizophrenia experience heightened musical perception, even synesthetic effects where sound merges with color or emotion in a way that neurotypical brains do not register. This suggests that whatever process enables music to bypass left-hemisphere linguistic structures and reach into the ineffable also makes it susceptible to the same disruptions that schizophrenia induces. If language disorders in schizophrenia reflect an overactive right BA22, then it is unsurprising that music, which thrives in this very region, can become both a source of comfort and torment for those with the condition.

What emerges from this investigation is the realization that music is the original language of the spirit, the vestige of a pre-linguistic mode of cognition that predates and perhaps even enabled speech. Before words, there were rhythms; before syntax, there were melodies. This is why music is inseparable from ritual, why every religious tradition weaves it into its fabric. To sing is to transcend the ordinary constraints of meaning and syntax, to return to a mode of being where the hemispheres are not at odds but in communion. If schizophrenia represents a breakdown in the conversation between left and right BA22, music represents its most ancient, most resilient reconciliation.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers (layer name -> node names)
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],  # Static
        'Voir': ['Syntax.'],
        'Choisis': ['Punctuation.', 'Melody'],
        'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence']
    }


# Assign colors to nodes (nodes not listed here fall back to light gray)
def assign_colors():
    color_map = {
        'yellow': ['Syntax.'],
        'paleturquoise': ['Time', 'Melody', 'Rhythm.', 'Cadence'],
        'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'Loyalty'],
        'lightsalmon': ['Syntax', 'Punctuation', 'Punctuation.', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing); labels are kept as strings
def define_edges():
    return {
        ('Foundational', 'Syntax.'): '1/99',
        ('Grammar', 'Syntax.'): '5/95',
        ('Syntax', 'Syntax.'): '20/80',
        ('Punctuation', 'Syntax.'): '51/49',
        ("Rhythm", 'Syntax.'): '80/20',
        ('Time', 'Syntax.'): '95/5',
        ('Syntax.', 'Punctuation.'): '20/80',
        ('Syntax.', 'Melody'): '80/20',
        ('Punctuation.', 'Adversarial'): '49/51',
        ('Punctuation.', 'Transactional'): '80/20',
        ('Punctuation.', 'Rhythm.'): '95/5',
        ('Melody', 'Adversarial'): '5/95',
        ('Melody', 'Transactional'): '20/80',
        ('Melody', 'Rhythm.'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Time.'): '95/5',
        ('Adversarial', 'Cadence'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Time.'): '1/6',
        ('Transactional', 'Cadence'): '1/5',
        ('Rhythm.', 'Victory'): '1/99',
        ('Rhythm.', 'Payoff'): '5/95',
        ('Rhythm.', 'Loyalty'): '10/90',
        ('Rhythm.', 'Time.'): '15/85',
        ('Rhythm.', 'Cadence'): '20/80'
    }


# Calculate positions for nodes: one column per layer, centered vertically
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights (skip any edge whose endpoints are not nodes)
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Grammar is the Ecosystem", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 34 Space is Apollonian and Time Dionysian. They are the static representation and the dynamic emergent. Ain’t that somethin?
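
As a quick sanity check on the listing above, here is a minimal sketch that recounts the network's nodes (the Fig. 33 caption says 17) and the number of edges a fully connected layer-to-layer wiring would need. The helper names `count_nodes`, `dense_edge_count`, and `parse_weight` are hypothetical and do not appear in the original code. Reading an `'a/b'` edge label as odds a:b is likewise an assumption, since the source never says how those labels should be interpreted.

```python
from fractions import Fraction

# Layer layout copied from define_layers() in the listing above.
LAYERS = {
    'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', 'Rhythm', 'Time'],
    'Voir': ['Syntax.'],
    'Choisis': ['Punctuation.', 'Melody'],
    'Deviens': ['Adversarial', 'Transactional', 'Rhythm.'],
    "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence'],
}


def count_nodes(layers):
    """Return (total, unique) node-name counts across all layers."""
    names = [name for nodes in layers.values() for name in nodes]
    return len(names), len(set(names))


def dense_edge_count(layers):
    """Edges needed if every node links to every node in the next layer."""
    sizes = [len(nodes) for nodes in layers.values()]
    return sum(a * b for a, b in zip(sizes, sizes[1:]))


def parse_weight(label):
    """ASSUMPTION: treat an 'a/b' label as odds a:b and return the share a/(a+b)."""
    a, b = (int(part) for part in label.split('/'))
    return Fraction(a, a + b)


print(count_nodes(LAYERS))        # (17, 17)
print(dense_edge_count(LAYERS))   # 29
print(parse_weight('20/80'))      # 1/5
```

The hardcoded dictionary in `define_edges()` has 29 entries, which matches the dense count, so every adjacent pair of layers is fully connected. Names such as `'Syntax'` and `'Syntax.'` count as distinct nodes because the trailing period is part of the string.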