Orient

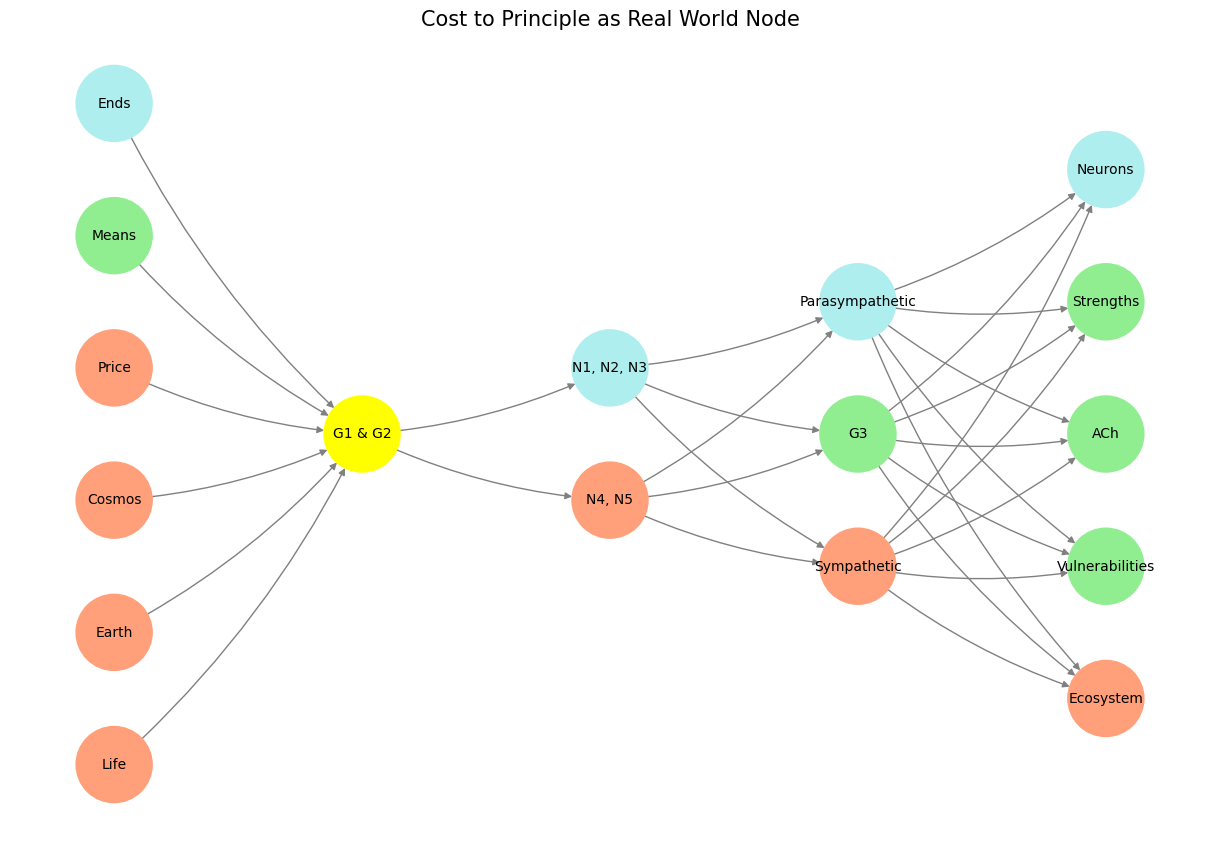

The G3 presynaptic ganglia occupy a pivotal role in the neural network, serving as an intermediary in the dynamic negotiation between Principal (the “Price” node) and Agent (represented by N1-N5 in the Input/AgenticAI layer). Situated within the Hidden/GenerativeAI layer, G3 functions as a critical decision-making node, mediating synaptic signals that flow downstream through descending tracts and shaping reflex arcs from G1 and G2—the cranial nerve and dorsal root ganglia. This position places G3 at the heart of an agency problem, balancing the Principal’s overarching goals with the Agent’s local autonomy.

From an agency theory perspective, G3 arbitrates the inherent tension between achieving systemic efficiency and allowing localized decision-making flexibility. The Principal, represented by immutable rules such as life, cosmos, and the negotiation of “means” and “ends,” sets the strategic directives for the network. Meanwhile, N1-N5, as Agents, operate within the input layer, responding to immediate stimuli and processing localized data. G3 ensures that these Agents do not deviate excessively from the Principal’s overarching goals, modulating their autonomy through synaptic control mechanisms. This dynamic reflects the neural equivalent of contract design, where incentives and constraints are balanced to align local actions with global objectives.
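The contract-design analogy can be made concrete with a toy quadratic trade-off. This is a minimal sketch under stated assumptions, not part of the network code: the function `aligned_action` and the parameters `local_preference`, `principal_target`, and `lam` are hypothetical names introduced here. It assumes an Agent picks the action `a` that minimizes `(a - local_preference)**2 + lam * (a - principal_target)**2`, whose closed-form minimizer is a weighted average of the two targets.

```python
def aligned_action(local_preference: float, principal_target: float, lam: float) -> float:
    """Return the minimizer of (a - local)^2 + lam * (a - target)^2 over a.

    lam is a hypothetical penalty weight standing in for how tightly
    G3 constrains the Agent; it is an assumption, not a value from the text.
    """
    if lam < 0:
        raise ValueError("lam must be non-negative")
    # Setting the derivative 2(a - local) + 2*lam*(a - target) to zero
    # gives a weighted average of the local and global targets.
    return (local_preference + lam * principal_target) / (1.0 + lam)


# lam = 0 leaves the Agent fully autonomous; a larger lam pulls its
# action toward the Principal's target.
for lam in (0.0, 1.0, 9.0):
    action = aligned_action(local_preference=2.0, principal_target=0.0, lam=lam)
    print(f"lam={lam}: action={action:.2f}")
```

In this toy model the single weight `lam` plays the role of the contract: it does not forbid local action, it only prices deviation from the global objective.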

The network visualization reinforces this agency theory dynamic. The Principal (still labeled “Price” in the code) sits in the Pre-Input/WorldRules layer and drives directives downstream. G3, drawn in light green, sits in the Hidden/GenerativeAI layer between the Sympathetic (lightsalmon) and Parasympathetic (paleturquoise) nodes, receiving signals from the Input/AgenticAI layer and passing them on to the outputs. Of the Agents, the “N1, N2, N3” node is drawn in paleturquoise, representing sensitivity to incoming data, while “N4, N5” falls through to the default lightsalmon. The color and placement of these nodes highlight the layered negotiation inherent in the system, where competing signals and demands must be synthesized into coherent actions.

Fig. 28 World Rules vs. Misinformation. The very point of intelligence is to compare the reflexive response to a pattern of information with the response that follows more deliberation. Parsing the case vs. control is critical for inference.

Agency theory’s hallmark issue—how to ensure alignment between a Principal and its Agents—is echoed in G3’s modulation of reflex arcs and decision pathways. Reflex suppression via descending tracts reflects an explicit “contract” by which G3 enforces constraints on Agent behavior, ensuring that local actions (such as dorsal root ganglia reflexes) do not derail global objectives. However, G3 must also allow flexibility, preserving the Agent’s capacity for rapid, localized responses in dynamic environments.
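The suppression-versus-flexibility trade-off can be sketched as a simple gain model. This is an illustrative assumption rather than a physiological model: `reflex_response`, `descending_inhibition`, and `reflex_gain` are hypothetical names, and the multiplicative form is chosen only because it is the simplest way to show a descending signal scaling down a local reflex.

```python
def reflex_response(stimulus: float, descending_inhibition: float,
                    reflex_gain: float = 1.0) -> float:
    """Toy reflex arc whose output is scaled down by a descending signal.

    descending_inhibition is a hypothetical value in [0, 1]:
    0 leaves the reflex untouched, 1 suppresses it completely.
    """
    if not 0.0 <= descending_inhibition <= 1.0:
        raise ValueError("descending_inhibition must lie in [0, 1]")
    return reflex_gain * stimulus * (1.0 - descending_inhibition)


# The same stimulus under no, partial, and full suppression.
for inhibition in (0.0, 0.5, 1.0):
    print(inhibition, reflex_response(stimulus=4.0, descending_inhibition=inhibition))
```

Intermediate values of `descending_inhibition` correspond to the case described above, where G3 constrains the reflex without removing the Agent's capacity for a rapid local response.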

This interplay of control and autonomy is further enriched by the network’s emphasis on ecosystem outputs. Nodes such as ACh (acetylcholine), Strengths, Vulnerabilities, and Ecosystem in the Output/PhysicalAI layer highlight the consequences of these negotiated relationships. The Principal’s directives, filtered through G3, must ultimately optimize these outputs, balancing resilience, adaptability, and systemic vulnerabilities.

The reframing of “Price” as Principal and the incorporation of N1-N5 as Agent elevate the discussion of G3 from mere neurobiological mechanics to a sophisticated analogy for systemic governance. Whether applied to neural systems, AI frameworks, or organizational dynamics, G3 exemplifies the delicate balance between centralized control and decentralized agency. This balance transforms the presynaptic ganglia into a generative hub for aligning goals, synthesizing inputs, and ensuring coherence across a complex, adaptive network.

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/WorldRules': ['Life', 'Earth', 'Cosmos', 'Price', 'Means', 'Ends'],
        'Yellowstone/PerceptionAI': ['G1 & G2'],
        'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'],
        'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'],
        'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'ACh', 'Strengths', 'Neurons']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'G1 & G2':
        return 'yellow'
    if layer == 'Pre-Input/WorldRules' and node in ['Ends']:
        return 'paleturquoise'
    if layer == 'Pre-Input/WorldRules' and node in ['Means']:
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'N1, N2, N3':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Parasympathetic':
            return 'paleturquoise'
        elif node == 'G3':
            return 'lightgreen'
        elif node == 'Sympathetic':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Neurons':
            return 'paleturquoise'
        elif node in ['Strengths', 'ACh', 'Vulnerabilities']:
            return 'lightgreen'
        elif node == 'Ecosystem':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input/WorldRules', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Cost to Principle as Real World Node", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 29 G3, the presynaptic autonomic ganglia, arbitrate between the sympathetic & parasympathetic responses via descending tracts that may suppress reflex arcs through G1 & G2, the cranial nerve and dorsal-root ganglia. It’s a question of the relative price of achieving an end by a given means. This is optimized with regard to the neural architectures as well as the ecosystem.
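G3's position as intermediary can be checked without drawing anything. The sketch below copies the layer layout from `define_layers()` and rebuilds the same fully connected layer-to-layer edges in plain Python; `LAYERS`, `build_edges`, `g3_inputs`, and `g3_outputs` are hypothetical names introduced for illustration, and the snippet assumes, as in the plotting code, that each layer connects only to the one after it.

```python
from itertools import product

# Layer layout copied from define_layers() in the plotting code.
LAYERS = {
    'Pre-Input/WorldRules': ['Life', 'Earth', 'Cosmos', 'Price', 'Means', 'Ends'],
    'Yellowstone/PerceptionAI': ['G1 & G2'],
    'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'],
    'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'],
    'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'ACh', 'Strengths', 'Neurons'],
}


def build_edges(layers):
    """Connect every node in a layer to every node in the next layer."""
    names = list(layers)
    edges = []
    for source_layer, target_layer in zip(names, names[1:]):
        edges.extend(product(layers[source_layer], layers[target_layer]))
    return edges


edges = build_edges(LAYERS)
g3_inputs = [source for source, target in edges if target == 'G3']
g3_outputs = [target for source, target in edges if source == 'G3']

print("nodes:", sum(len(nodes) for nodes in LAYERS.values()))
print("edges:", len(edges))
print("G3 receives from:", g3_inputs)
print("G3 projects to:", g3_outputs)
```

Listing G3's incoming and outgoing edges this way shows that, in the graph as coded, every Agent node feeds G3 and G3 reaches every node in the Output/PhysicalAI layer.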