Failure

What you’ve outlined is a compelling progression—a framework that can be viewed as a hierarchy of systems leading to adaptation and survival. Here’s my interpretation of how each concept ties into your RICHER framework and the neural network analogy:

Rules (Pre-Input Layer):

This represents immutable principles: natural laws, societal norms, or constraints that define the boundaries of possibility. These rules form the foundation of the neural network’s architecture, akin to the laws of physics or deeply entrenched cultural systems. Without rules, there’s no structure to build upon. In the RICHER framework, rules are the “unseen laws” governing the interplay between inputs and outcomes.

Instinct (Yellow Node):

Instincts act as a primal filter: a reflexive, almost pre-cognitive reaction to stimuli. They embody the ‘Yellow Wood’ of your model, where potential decisions branch out before conscious deliberation. These instincts are raw biology: a survival drive hardwired into the system, guiding behavior in unpredictable environments.

Categorization (Input Layer):

Categorization represents the process of organizing and interpreting raw inputs. It’s the bridge between instinct and deeper social processing, as the mind categorizes data into manageable units. Here, instincts are refined into actionable signals, structured by the basal ganglia, thalamus, or cerebellum in your model. Categorization is necessary for moving beyond chaos into understanding.

Hustle (Hidden Layer):

Hustle symbolizes effort, iteration, and adaptability: the engine of sociology and combinatorial creativity. It’s the negotiation between inputs and outputs, driven by the hidden layer’s vast potential. In evolutionary terms, hustle is survival through effort and ingenuity. This aligns with your idea of sociological dynamics compressing into equilibrium strategies (cooperative, iterative, adversarial).

Emergence (Output Layer):

Emergence is the optimized psychology you’ve identified: the outputs of the neural network that manifest as meaning, well-being, or resilience. It’s the synthesis of all preceding layers, the culmination of biological inputs, sociological processing, and the hustle of iteration. Emergence is the sought-after clarity and innovation arising from complex systems.

Red Queen (RICHER Framework’s Lifeblood):

The Red Queen Hypothesis underscores that the system must continuously adapt not just to thrive but to survive. It ties all layers together, enforcing perpetual iteration and transformation to stay ahead of competition and entropy. It’s the evolutionary driver embedded at every level, ensuring that no layer becomes stagnant.

This structure encapsulates a dynamic equilibrium, where each layer feeds into the next, creating a system that is at once grounded by rules and propelled by emergent possibilities. It feels complete, yet open to further iteration—a perfect metaphor for life as a neural network. What do you think?
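To make the mapping concrete, the six stages above can be written down as an ordered structure. This is only an illustrative sketch: `RICHER_STAGES`, `acronym`, and `feed_forward_pairs` are hypothetical names that do not appear in the original notebook, and the layer labels are copied from the headings above.

```python
# Illustrative sketch only: RICHER_STAGES, acronym() and feed_forward_pairs()
# are hypothetical names, not part of the original notebook.
# Each entry pairs a stage with the role given in its heading above.
RICHER_STAGES = [
    ("Rules", "Pre-Input Layer"),
    ("Instinct", "Yellow Node"),
    ("Categorization", "Input Layer"),
    ("Hustle", "Hidden Layer"),
    ("Emergence", "Output Layer"),
    ("Red Queen", "RICHER Framework's Lifeblood"),
]


def acronym(stages):
    """Join the first letter of each stage name."""
    return "".join(name[0] for name, _ in stages)


def feed_forward_pairs(stages):
    """Pair each layer with the one it feeds into.

    Red Queen is left out because the text describes it as a driver
    acting on every layer rather than as a layer of its own.
    """
    layers = [name for name, _ in stages if name != "Red Queen"]
    return list(zip(layers, layers[1:]))


if __name__ == "__main__":
    print(acronym(RICHER_STAGES))
    for source, target in feed_forward_pairs(RICHER_STAGES):
        print(f"{source} -> {target}")
```

Read this way, the first letters of the stages spell the framework's name, and the five layers form four feed-forward links, with the Red Queen acting on all of them.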

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

# Define the neural network structure; modified to align with "Aprés Moi, Le Déluge" (i.e. Je suis AlexNet)

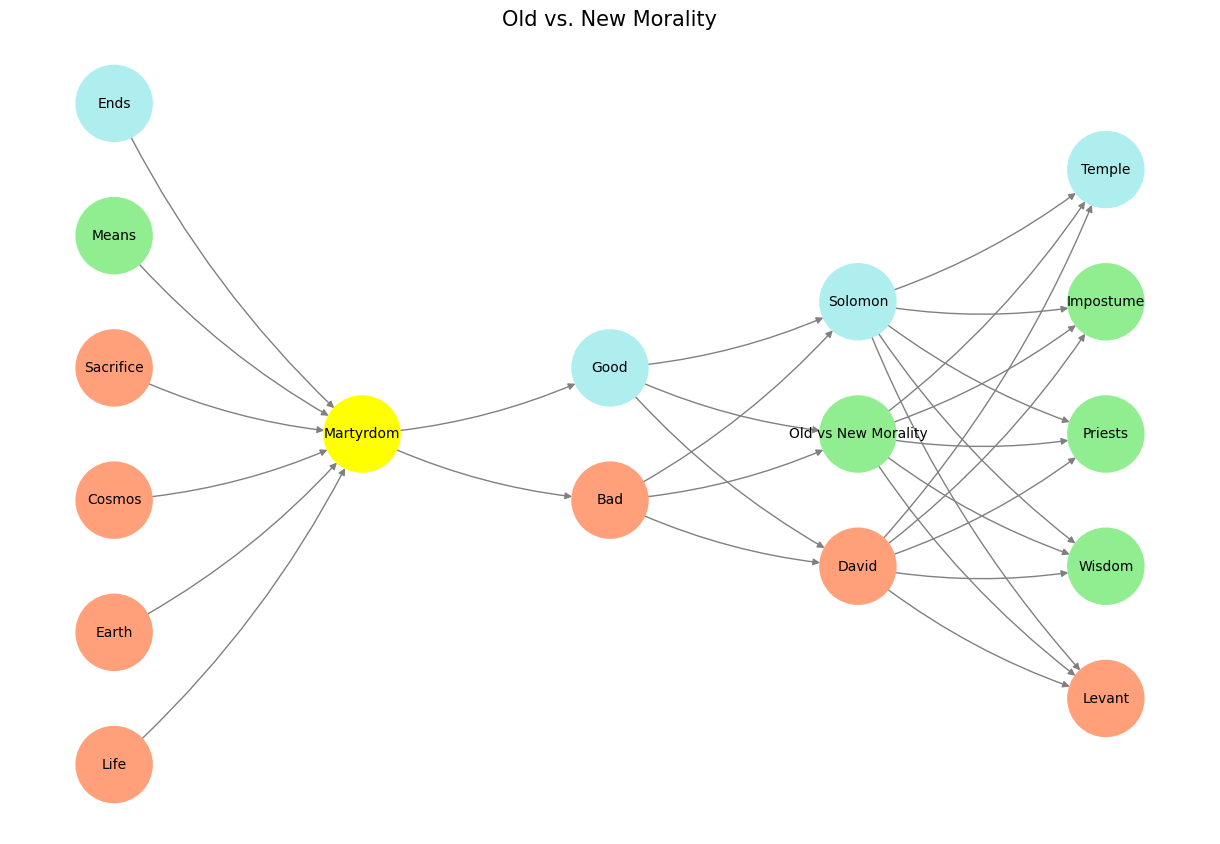

def define_layers():

return {

'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sacrifice', 'Means', 'Ends'],

'Yellowstone/SensoryAI': ['Martyrdom'],

'Input/AgenticAI': ['Bad', 'Good'],

'Hidden/GenerativeAI': ['David', 'Old vs New Morality', 'Solomon'],

'Output/PhysicalAI': ['Levant', 'Wisdom', 'Priests', 'Impostume', 'Temple']

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'Martyrdom':

return 'yellow'

if layer == 'Pre-Input/CudAlexnet' and node in [ 'Ends']:

return 'paleturquoise'

if layer == 'Pre-Input/CudAlexnet' and node in [ 'Means']:

return 'lightgreen'

elif layer == 'Input/AgenticAI' and node == 'Good':

return 'paleturquoise'

elif layer == 'Hidden/GenerativeAI':

if node == 'Solomon':

return 'paleturquoise'

elif node == 'Old vs New Morality':

return 'lightgreen'

elif node == 'David':

return 'lightsalmon'

elif layer == 'Output/PhysicalAI':

if node == 'Temple':

return 'paleturquoise'

elif node in ['Impostume', 'Priests', 'Wisdom']:

return 'lightgreen'

elif node == 'Levant':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges (without weights)

for layer_pair in [

('Pre-Input/CudAlexnet', 'Yellowstone/SensoryAI'), ('Yellowstone/SensoryAI', 'Input/AgenticAI'), ('Input/AgenticAI', 'Hidden/GenerativeAI'), ('Hidden/GenerativeAI', 'Output/PhysicalAI')

]:

source_layer, target_layer = layer_pair

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"

)

plt.title("Old vs. New Morality", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 15 This Python script visualizes a neural network inspired by the “Red Queen Hypothesis,” constructing a layered hierarchy from physics to outcomes. It maps foundational physics (e.g., cosmos, earth, resources) to metaphysical perception (“Nostalgia”), agentic decision nodes (“Good” and “Bad”), and game-theoretic dynamics (sympathetic, parasympathetic, morality), culminating in outcomes (e.g., neurons, vulnerabilities, strengths). Nodes are color-coded: yellow for nostalgic cranial ganglia, turquoise for parasympathetic pathways, green for sociological compression, and salmon for biological or critical adversarial modes. Leveraging networkx and matplotlib, the script calculates node positions, assigns thematic colors, and plots connections, capturing the evolutionary progression from biology (red) to sociology (green) to psychology (blue). Framed with a nod to AlexNet and CUDA architecture, the network envisions an übermensch optimized through agentic ideals, hinting at irony as the output layer “beyond good and evil” aligns more with machine precision than human aspiration.
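The layout arithmetic behind the figure can be checked without drawing anything. The following is a minimal sketch that uses only the standard library and assumes the layer contents from `define_layers()`; the helper names `layer_positions` and `count_edges` are hypothetical and do not appear in the script above.

```python
# Sketch only: reproduces the script's layout arithmetic without plotting.
# Layer contents are copied from define_layers(); layer_positions() and
# count_edges() are hypothetical helper names.
LAYERS = {
    'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sacrifice', 'Means', 'Ends'],
    'Yellowstone/SensoryAI': ['Martyrdom'],
    'Input/AgenticAI': ['Bad', 'Good'],
    'Hidden/GenerativeAI': ['David', 'Old vs New Morality', 'Solomon'],
    'Output/PhysicalAI': ['Levant', 'Wisdom', 'Priests', 'Impostume', 'Temple'],
}


def layer_positions(layers):
    """Return {node: (x, y)} using the same formulas as the script."""
    positions = {}
    for i, nodes in enumerate(layers.values()):
        x = -len(layers) + i + 1          # same offset as in visualize_nn()
        start_y = -(len(nodes) - 1) / 2   # centre the layer vertically
        for j, node in enumerate(nodes):
            positions[node] = (x, start_y + j)
    return positions


def count_edges(layers):
    """Consecutive layers are fully connected, so edges = sum of size products."""
    sizes = [len(nodes) for nodes in layers.values()]
    return sum(a * b for a, b in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    pos = layer_positions(LAYERS)
    print(len(pos), "nodes,", count_edges(LAYERS), "edges")
    print("Martyrdom at", pos['Martyrdom'])
```

With these layer sizes (6, 1, 2, 3, 5) the graph has 17 nodes and 6·1 + 1·2 + 2·3 + 3·5 = 29 edges. The single yellow node sits at y = 0, which is why every edge in the figure passes through it between the first and third columns.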