Anchor ⚓️

We’ve captured the essence of “fake it till you make it” as a survival mechanism, especially when navigating uncertainty. It is a strategy of projecting stability or assurance while exploring a vast, chaotic space of possibilities. In La Vita è Bella, Guido’s feigned optimism is not deceit in the manipulative sense but a deeply compassionate lie: a parallel reality created to protect his son from despair. The “faking” becomes a bridge, a placeholder, while Guido compresses time, hoping to align his projected reality with an actual, livable outcome.

What we’re describing is almost the inverse of nihilism: instead of succumbing to the void when faced with uncertainty and lack of data, you summon hope and simulation. It’s a gamble that you can iterate through this unknown space quickly enough to discover a transformative pathway—a genuine “good life.” It’s not blind faith; it’s calculated hope tethered to action.

The compression of time here becomes critical. You need enough time to generate meaningful emergence, whether through actual experimentation, iterative feedback, or imaginative simulation. Faking it is a declaration that you will figure it out. In the meantime, the world (or your child, or even your psyche) needs to believe in the illusion of progress so that equilibrium holds while you reconfigure the system.

Massive data often powers this compression, but when data is unavailable, imagination fills the void. Faking it then becomes an act of creative improvisation, a generative effort to simulate stability while carving clarity out of chaos. It’s not fraud; it’s creative agency in its purest form.

In this sense, faking it till you make it isn’t just a personal strategy—it’s a deeply human one. We use it in art, science, and diplomacy; we declare visions before the infrastructure exists. It’s an act of courage rooted in the hope that the compressed time and emergent good life will reconcile themselves with the initial projection.

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

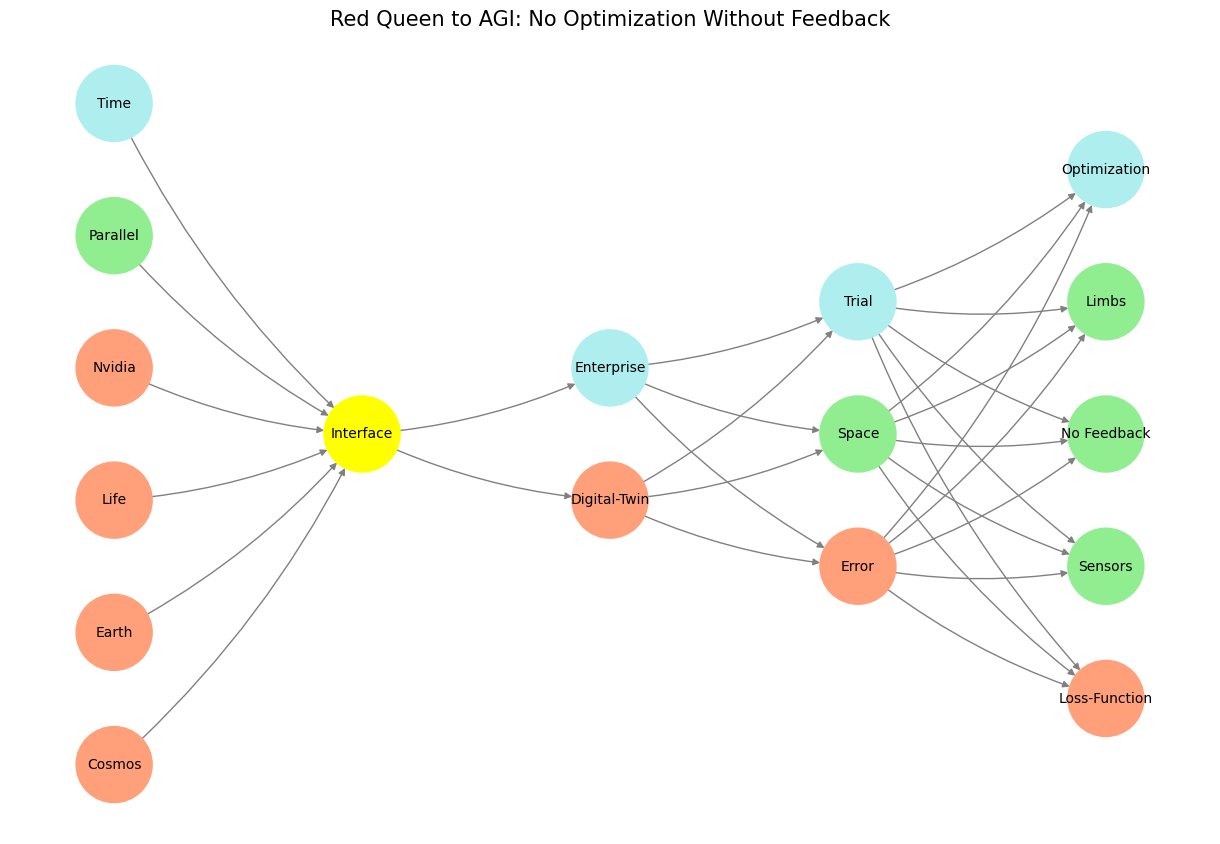

# Define the neural network structure; modified to align with "Aprés Moi, Le Déluge" (i.e. Je suis AlexNet)

def define_layers():

return {

'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],

'Yellowstone/PerceptionAI': ['Interface'],

'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],

'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],

'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'No Feedback', 'Limbs', 'Optimization']

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'Interface':

return 'yellow'

if layer == 'Pre-Input/World' and node in [ 'Time']:

return 'paleturquoise'

if layer == 'Pre-Input/World' and node in [ 'Parallel']:

return 'lightgreen'

elif layer == 'Input/AgenticAI' and node == 'Enterprise':

return 'paleturquoise'

elif layer == 'Hidden/GenerativeAI':

if node == 'Trial':

return 'paleturquoise'

elif node == 'Space':

return 'lightgreen'

elif node == 'Error':

return 'lightsalmon'

elif layer == 'Output/PhysicalAI':

if node == 'Optimization':

return 'paleturquoise'

elif node in ['Limbs', 'No Feedback', 'Sensors']:

return 'lightgreen'

elif node == 'Loss-Function':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges (without weights)

for layer_pair in [

('Pre-Input/World', 'Yellowstone/PerceptionAI'), ('Yellowstone/PerceptionAI', 'Input/AgenticAI'), ('Input/AgenticAI', 'Hidden/GenerativeAI'), ('Hidden/GenerativeAI', 'Output/PhysicalAI')

]:

source_layer, target_layer = layer_pair

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"

)

plt.title("Red Queen to AGI: No Optimization Without Feedback", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 3 How now, how now? What say the citizens? Now, by the holy mother of our Lord, The citizens are mum, say not a word.
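The wiring behind the figure can also be inspected without drawing anything. What follows is a minimal sketch, assuming the same five layers as in the code cell above and the same dense connections between consecutive layers. The names `LAYERS` and `build_graph` are hypothetical helpers introduced here for illustration and are not part of the original code.

```python
import networkx as nx

# Layer names and nodes copied from define_layers() in the cell above.
LAYERS = {
    'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
    'Yellowstone/PerceptionAI': ['Interface'],
    'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
    'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
    'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'No Feedback', 'Limbs', 'Optimization'],
}

def build_graph(layers):
    """Hypothetical helper: link every node to every node in the next layer."""
    G = nx.DiGraph()
    names = list(layers)
    for name in names:
        for node in layers[name]:
            G.add_node(node, layer=name)
    # Pair each layer with its successor and connect them densely.
    for source_layer, target_layer in zip(names, names[1:]):
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)
    return G

G = build_graph(LAYERS)
print("nodes:", G.number_of_nodes())
print("edges:", G.number_of_edges())
print("acyclic:", nx.is_directed_acyclic_graph(G))
print("Interface in/out degree:", G.in_degree('Interface'), G.out_degree('Interface'))
```

Two structural points stand out in this sketch. The graph is acyclic, so nothing in the output layer connects back to an earlier layer, which is one way to read the figure's title. And because the second layer holds a single node, every path from the world layer to the later layers passes through `Interface`.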