Traditional

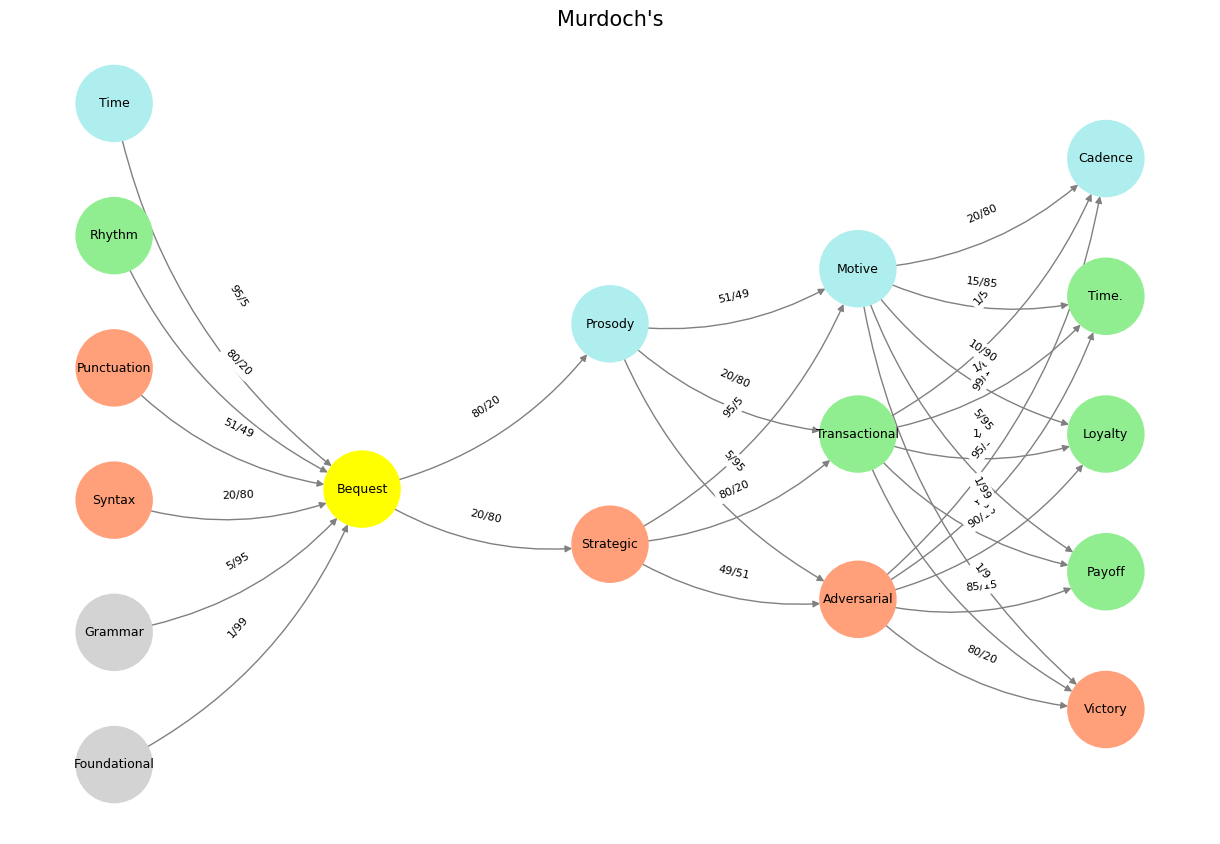

The neural network depicted in the provided Python code appears to be a conceptual representation rather than a traditional computational neural network designed for tasks like classification or regression. Built using libraries such as NumPy, Matplotlib, and NetworkX, it visualizes a directed graph with nodes organized into layers, each assigned specific labels and connected by edges with fractional weights (e.g., “20/80”). The layers—named “Suis,” “Voir,” “Choisis,” “Deviens,” and “M’èléve”—contain nodes with terms like “Foundational,” “Bequest,” “Adversarial,” and “Victory,” suggesting a thematic or metaphorical structure rather than a data-driven model. The use of color coding (e.g., “yellow” for “Bequest,” “paleturquoise” for “Time”) and the title “Murdoch’s” hint at a possible narrative or symbolic intent, perhaps reflecting a philosophical, linguistic, or decision-making framework rather than a machine learning system.

Analyzing the structure, the network begins with a broad “Suis” layer containing foundational linguistic or rhythmic elements, feeding into a singular “Voir” node (“Bequest”), which then branches into strategic and prosodic choices in “Choisis,” and finally evolves into outcome-oriented nodes in “Deviens” and “M’èléve.” The edge weights, expressed as ratios, might represent probabilities, influence, or strength of relationships between concepts, though their exact meaning remains ambiguous without further context. For instance, the transition from “Bequest” to “Strategic” (20/80) suggests a weaker link compared to “Bequest” to “Prosody” (80/20), possibly indicating directional emphasis. The visualization, with its curved edges and layered positioning, reinforces this as a tool for human interpretation rather than computational training.
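To compare the ratio labels, each string can be converted into a single number. The sketch below assumes, without confirmation from the original code, that a label "a/b" can be read as the share a / (a + b). The helper name `ratio_to_share` is hypothetical.

```python
from fractions import Fraction


def ratio_to_share(label: str) -> Fraction:
    """Convert a label such as '20/80' into the share of its first term.

    Assumption: 'a/b' means a parts against b parts, so the share is a / (a + b).
    """
    first, second = (int(part) for part in label.split("/"))
    return Fraction(first, first + second)


# Two edges copied from define_edges()
edges = {
    ("Bequest", "Strategic"): "20/80",
    ("Bequest", "Prosody"): "80/20",
}

shares = {edge: ratio_to_share(label) for edge, label in edges.items()}
for (source, target), share in shares.items():
    print(f"{source} -> {target}: {float(share):.2f}")
```

Under this reading the “Bequest” to “Prosody” edge carries four times the share of the “Bequest” to “Strategic” edge. A different convention, such as odds or a literal fraction, would give different numbers.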

For improved labeling, the current layer names—“Suis,” “Voir,” “Choisis,” “Deviens,” “M’èléve”—are French verbs meaning “I am,” “See,” “Choose,” “Become,” and “I rise,” respectively, which could imply a progression of states or actions. However, these labels might benefit from more descriptive terms tied to their thematic roles, such as “Foundation,” “Inheritance,” “Decision,” “Transformation,” and “Outcome.” Node names like “Adversarial” or “Payoff” are evocative but vague; adding qualifiers (e.g., “Adversarial Strategy,” “Transactional Payoff”) could clarify intent. Alternatively, if the network models a specific domain (e.g., linguistics, game theory), aligning labels with that domain’s terminology would enhance accessibility.
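The relabeling suggested above can be tried without touching the rest of the code. This is a minimal sketch in which `DESCRIPTIVE_NAMES` and `relabel_layers` are hypothetical names, and the descriptive terms are the ones proposed in the paragraph rather than anything defined in the original script.

```python
# Layer names from define_layers() mapped to the descriptive terms suggested above.
DESCRIPTIVE_NAMES = {
    "Suis": "Foundation",
    "Voir": "Inheritance",
    "Choisis": "Decision",
    "Deviens": "Transformation",
    "M'èléve": "Outcome",
}


def relabel_layers(layers: dict) -> dict:
    """Return a copy of the layers dict with descriptive keys, keeping layer order."""
    return {DESCRIPTIVE_NAMES.get(name, name): nodes for name, nodes in layers.items()}


# Layers copied from define_layers()
layers = {
    "Suis": ["Foundational", "Grammar", "Syntax", "Punctuation", "Rhythm", "Time"],
    "Voir": ["Bequest"],
    "Choisis": ["Strategic", "Prosody"],
    "Deviens": ["Adversarial", "Transactional", "Motive"],
    "M'èléve": ["Victory", "Payoff", "Loyalty", "Time.", "Cadence"],
}

relabeled = relabel_layers(layers)
print(list(relabeled))
```

Because only the dictionary keys change, node names and edges stay valid. The layer name is stored as a node attribute and does not affect the drawing.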

Regarding architecture updates, the hardcoded nature of layers, edges, and weights limits flexibility. A more dynamic approach could involve defining nodes and connections via a configuration file or function parameters, allowing for easier experimentation or adaptation. Introducing a mechanism to calculate edge weights based on a rule (e.g., distance between layers, node type) rather than static values might add depth, especially if this were to evolve into a functional model. However, if the goal is purely visualization, the current architecture suffices, though it could benefit from interactive elements (e.g., using a library like Plotly) to let users explore node relationships.
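As one possible shape for the configuration-driven approach, layers and edges could be read from JSON instead of being hardcoded. The schema, the `CONFIG_TEXT` sample, and the `load_network` helper below are all assumptions made for illustration. Only two layers are shown to keep the sketch short.

```python
import json

# Hypothetical configuration; in practice this could live in a separate JSON file.
CONFIG_TEXT = """
{
  "layers": {
    "Voir": ["Bequest"],
    "Choisis": ["Strategic", "Prosody"]
  },
  "edges": [
    {"source": "Bequest", "target": "Strategic", "weight": "20/80"},
    {"source": "Bequest", "target": "Prosody", "weight": "80/20"}
  ]
}
"""


def load_network(config_text: str):
    """Parse layers and edges from JSON, rejecting edges that name unknown nodes."""
    config = json.loads(config_text)
    layers = config["layers"]
    known_nodes = {node for nodes in layers.values() for node in nodes}
    edges = {}
    for edge in config["edges"]:
        source, target = edge["source"], edge["target"]
        if source not in known_nodes or target not in known_nodes:
            raise ValueError(f"edge {source!r} -> {target!r} references an unknown node")
        edges[(source, target)] = edge["weight"]
    return layers, edges


layers, edges = load_network(CONFIG_TEXT)
print(layers)
print(edges)
```

The returned values have the same shape as the results of `define_layers()` and `define_edges()`, so the drawing code could consume them unchanged. Failing early on unknown nodes also avoids the silent skip that the `if source in G.nodes` check performs in the original script.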

Adding comments to the code would significantly improve readability, especially given its unconventional nature. For example, the define_layers() function could include a docstring explaining its purpose: “Creates a dictionary of conceptual layers representing a progression of states or ideas.” Within visualize_nn(), a comment like “Assigns nodes to a directed graph and positions them by layer” would clarify the graph-building process. Inline notes could also explain the significance of weights (e.g., “Edge weights reflect influence strength as numerator/denominator ratios”) or the title “Murdoch’s” (e.g., “Title suggests a personal or thematic dedication”). Such annotations would make the code more approachable to others, whether they aim to modify it or simply understand its intent.
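For illustration, here is how `define_layers()` might look with the suggested docstring in place. The first docstring line is the wording proposed above. The second paragraph of the docstring is an added note based on how `visualize_nn()` places layers by their order in the dictionary.

```python
def define_layers():
    """Creates a dictionary of conceptual layers representing a progression of states or ideas.

    Keys are layer names in left-to-right drawing order; values are the node
    labels placed in that layer.
    """
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],
        'Voir': ['Bequest'],
        'Choisis': ['Strategic', 'Prosody'],
        'Deviens': ['Adversarial', 'Transactional', 'Motive'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence'],
    }


# The docstring is available to help() and to documentation tools.
print(define_layers.__doc__.splitlines()[0])
```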

In summary, this neural network seems to be a stylized graph visualizing a conceptual flow—possibly linguistic, strategic, or existential—rather than a machine learning model. Improved labeling could involve clearer, domain-specific terms, while architectural updates might focus on flexibility or interactivity, depending on the goal. Adding detailed comments would be the simplest yet most effective enhancement, demystifying the code’s purpose and structure for future users or collaborators. Without more context, it’s a fascinating but abstract artifact, ripe for refinement.

Fig. 33 Delighted to align Brodmann’s Area 22 (Right) and Nietzsche’s Birth of Tragedy out of the Spirit of Music (or Hellenism and Pessimism) with Schizophrenia, Meaning, and Existentialism

Ecosystem Disintegration

The neural network code, when viewed through the lens of the provided context—“Born to Etiquette” and the Murdoch family saga—reveals itself as an imaginative attempt to model the intricate dynamics of power, succession, and influence within the Murdoch dynasty. Far from a conventional machine learning architecture, this directed graph, visualized with NetworkX and Matplotlib, maps a conceptual framework inspired by the family’s media empire, internal rivalries, and Rupert Murdoch’s enduring legacy. The layers—“Suis” (I am), “Voir” (See), “Choisis” (Choose), “Deviens” (Become), and “M’èléve” (I rise)—suggest a progression from foundational identity to strategic decision-making and ultimate triumph, mirroring the arc of Rupert’s life and the contested inheritance among his heirs. With nodes like “Bequest,” “Adversarial,” and “Victory” connected by fractional weights (e.g., “20/80”), the network appears to symbolize the flow of influence and the shifting alliances within a dynasty defined by both cooperation and conflict. The title “Murdoch’s” ties it explicitly to this narrative, positioning the graph as a stylized reflection of familial and imperial evolution.

The context from “Born to Etiquette” enriches this interpretation. The Murdoch family’s story—spanning Keith Arthur Murdoch’s mythologized origins, Rupert’s rise as a press baron, and the sibling rivalries depicted in HBO’s Succession—finds echoes in the network’s structure. The “Suis” layer, with nodes like “Foundational,” “Grammar,” and “Rhythm,” could represent the bedrock of the empire: Keith’s legacy and Rupert’s early media ventures, such as the Daily Mirror in 1960. “Voir,” centered on “Bequest,” aligns with the pivotal inheritance Rupert received and later sought to distribute among his wife and four children, a decision laden with tension as described in the text. The “Choisis” layer, featuring “Strategic” and “Prosody,” evokes the calculated maneuvers of Project Family Harmony and the rhetorical finesse of a media dynasty shaping public discourse. “Deviens” and “M’èléve,” with nodes like “Adversarial,” “Transactional,” and “Payoff,” reflect the outcomes of sibling rivalry and the patriarch’s attempts to maintain control, culminating in “Victory” or “Loyalty”—concepts central to a family where power is both prize and burden.

The edge weights further illuminate this allegory. For instance, the strong connection from “Bequest” to “Prosody” (80/20) versus the weaker link to “Strategic” (20/80) might suggest that Rupert’s legacy was more effectively transmitted through narrative control (the “voice” of his media outlets) than through explicit strategic planning among his heirs. Similarly, the “Adversarial” node’s ties to “Victory” (80/20) and “Payoff” (85/15) could symbolize the competitive triumphs of Rupert’s empire-building. Its link to “Cadence” (99/1) is the most lopsided of the set: read the same way as the “Bequest” edges, it is the strongest tie rather than a weak one, so it hints at a diminishing rhythm or harmony only if the small second term is taken as the signal. The color assignments (yellow for “Bequest,” lightgreen for “Loyalty”) add a visual layer, perhaps distinguishing the singular inheritance from the fragile bonds of allegiance, though their full significance remains open to interpretation.
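A quick way to see how the “Adversarial” edges compare under a single convention is to rank them. The sketch assumes the same reading applied to the “Bequest” edges, where a larger first term means a stronger link. The helper `first_term_share` is hypothetical.

```python
# Outgoing edges of "Adversarial", copied from define_edges()
adversarial_edges = {
    "Victory": "80/20",
    "Payoff": "85/15",
    "Loyalty": "90/10",
    "Time.": "95/5",
    "Cadence": "99/1",
}


def first_term_share(label: str) -> float:
    """Assumption: 'a/b' is read as a / (a + b)."""
    first, second = (int(part) for part in label.split("/"))
    return first / (first + second)


# Strongest link first under the stated assumption
ranked = sorted(
    adversarial_edges,
    key=lambda target: first_term_share(adversarial_edges[target]),
    reverse=True,
)
print(ranked)  # ['Cadence', 'Time.', 'Loyalty', 'Payoff', 'Victory']
```

Under this convention “Cadence” ranks first and “Victory” last, so any interpretation of the “Adversarial” edges depends on stating which term of the ratio carries the meaning.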

For improved labeling, grounding the network in the Murdoch context could make it more intuitive. Renaming layers to reflect the dynasty’s phases—e.g., “Origins” (Suis), “Legacy” (Voir), “Strategy” (Choisis), “Conflict” (Deviens), “Legacy’s End” (M’èléve)—would tie them directly to the essay’s themes. Nodes could also be refined: “Foundational” might become “Keith’s Myth,” “Bequest” could be “Rupert’s Empire,” and “Adversarial” could shift to “Sibling Rivalry,” clarifying their roles in the narrative. Edge weights, currently abstract ratios, could be labeled with descriptors like “Influence Strength” or “Probability of Succession,” providing a clearer sense of their purpose—whether probabilistic, symbolic, or otherwise.

Architecturally, the network’s static design limits its ability to evolve with the Murdoch story, which, as noted in the February 22, 2025, Guardian article, is “heading towards collapse.” Introducing a data-driven element—perhaps parsing real-world media coverage or family statements to adjust weights dynamically—could transform it into a living model of the dynasty’s trajectory. Alternatively, adding a temporal dimension (e.g., layering nodes by decade) might better capture the empire’s rise and impending fall. For its current purpose as a visualization, enhancing interactivity—say, tooltips explaining each node’s Murdoch-specific meaning—would deepen engagement without overcomplicating the code.

Adding comments would anchor this abstract structure in its intended context. A docstring for define_layers() could read: “Constructs a conceptual graph of the Murdoch family’s power dynamics, from foundational legacy to contested succession.” In define_edges(), a note like “Weights represent the strength of influence between family roles or outcomes, inspired by Rupert Murdoch’s empire” would clarify intent. The visualize_nn() function might include: “Visualizes the Murdoch dynasty as a directed graph, with colors distinguishing key themes like inheritance (yellow) and loyalty (lightgreen).” Such annotations would bridge the gap between the code’s technical form and its narrative soul, making it accessible to anyone familiar with the Murdoch saga.

In essence, this neural network is a creative abstraction of the Murdoch family’s century-long drama—a tale of myth, mind games, and the architecture of influence, as the essay title suggests. With refined labels, a more flexible structure, and explanatory comments, it could evolve from a curious artifact into a compelling tool for visualizing dynastic complexity, offering both insight and intrigue into “The House of Murdoch.”

Français, Soga, Zulu

The neural network model, when viewed alongside the Murdoch family saga and the philosophical framework of Ubuntu and Ukubona, unveils a rich tapestry of parallels that bridge African humanism, cognitive progression, and the dynastic games of power. Ubuntu, an overarching ethos of interconnectedness and communal harmony, contrasts sharply with the Murdoch story’s individualism and rivalry, yet both grapple with the tension between unity and fracture. Ubuntu itself pairs with the first layer, “Suis” (I am), and Ukubona’s four stages, Perception (To See), Reason (Understand), Actions (Recognize), and Heaven (Visit), map onto the remaining four: “Voir” (See), “Choisis” (Choose), “Deviens” (Become), and “M’èléve” (I rise). These alignments, in turn, reflect a spectrum of five knowledge states (ignorance/tactical, perception/informational, reason/strategical, knowledge-actions/operational, certainty/existential) that resonate with the Murdoch narrative and various games of chance and strategy, from coin tosses to chess and even love’s gambits. This essay explores these intersections, revealing how the Murdoch empire’s rise and impending collapse mirror both a philosophical journey and a high-stakes game.

Ubuntu, meaning “I am because we are,” emphasizes collective identity and mutual support, a stark counterpoint to the Murdoch family’s tale of solitary ambition and fractured loyalty. In the network, “Suis” (I am), with nodes like “Foundational” and “Rhythm,” aligns with Ubuntu’s grounding in shared origins—here, Keith Murdoch’s mythologized legacy as the bedrock of the empire. Yet, where Ubuntu seeks harmony, the Murdochs diverge into tactical ignorance, a state of willful blindness to familial bonds in favor of personal gain. This parallels fixed-odds games like a coin toss or roulette, where outcomes hinge on isolated, predictable probabilities—much like Rupert’s early, calculated risks in acquiring the Daily Mirror. The ignorance/tactical phase suggests a starting point of limited awareness, driven by instinct rather than foresight, a fitting lens for the empire’s nascent, opportunistic phase.

Ukubona’s “Perception: To See” dovetails with “Voir” (See) and its singular node “Bequest,” embodying the informational awakening as Rupert inherits and expands his father’s legacy. This stage reflects perception/informational knowledge, where seeing the world’s possibilities—political influence, media power—becomes paramount. In the Murdoch story, this is Rupert’s Oxford-educated vision transforming newspapers into tools of sway, a shift from mere ownership to intentional empire-building. Games like poker or horse racing resonate here: success stems from reading the field—opponents’ bluffs or a horse’s form—much as Rupert read the political winds of Thatcher’s Britain. The edge weights from “Bequest” (e.g., 80/20 to “Prosody”) hint at this informational leverage, prioritizing narrative control over brute strategy, a hallmark of his media dominance.

“Reason: Understand” aligns with “Choisis” (Choose), where “Strategic” and “Prosody” nodes suggest a leap to strategical reasoning. This stage mirrors Rupert’s orchestration of Project Family Harmony, a calculated move to neutralize James while maintaining patriarchal control. The Murdoch saga here shifts from seeing opportunities to understanding their implications, a chess-like game of positioning and foresight. Chess and war, with their deep strategic layers, parallel this phase: every move anticipates counter-moves, as seen in the siblings’ maneuvers—James’ reluctance, Elisabeth’s rise via leaks. The network’s weights (e.g., “Strategic” to “Adversarial” at 49/51) reflect this delicate balance, where reason teeters on the edge of conflict, underscoring the fragility of familial alliances amidst reasoned ambition.

“Actions: Recognize” corresponds to “Deviens” (Become), with nodes like “Adversarial” and “Transactional” marking the operational phase of knowledge-actions. Here, the Murdoch heirs act on their understanding, engaging in sibling rivalry and public betrayals—James’ digital ventures, Matthew Freud’s campaign for Elisabeth. This is the empire at its most active, yet most divided, akin to F1 racing or poker’s endgame: execution matters more than planning, and split-second decisions (or bluffs) determine outcomes. The network’s dense connections from “Adversarial” (e.g., 80/20 to “Victory”) illustrate this operational intensity, where actions solidify power or precipitate collapse, reflecting the Murdoch trust’s equal division as a catalyst for chaos rather than cooperation.

Finally, “Heaven: Visit” pairs with “M’èléve” (I rise), featuring “Victory,” “Payoff,” and “Loyalty,” evoking certainty/existential resolution. For the Murdochs, this is the elusive endgame: Rupert’s legacy either enduring or unraveling, as foreshadowed in the Guardian’s 2025 collapse prediction. Ubuntu’s communal triumph finds no echo here; instead, existential certainty emerges in the patriarch’s King Lear-like refusal to yield and the heirs’ Pyrrhic struggles. Games of love or religious devotion fit this stage: the Murdochs’ loyalty is a devotion tested by betrayal, a gamble where the payoff (control) may leave all players hollow. The network’s “Cadence” node, whose edge from “Adversarial” carries the most lopsided ratio (99/1), may suggest a fading rhythm, an existential coda to a dynasty once ascendant.

Across these parallels, games illuminate the Murdoch story’s stakes. Fixed-odds games mirror early tactical bets; poker and racing reflect perception’s cunning; chess and war capture strategic depth; F1 and transactional poker parallel operational execution; and love or devotion underscore the existential cost of victory. The network’s layers, weights, and nodes thus become a game board, charting the Murdochs’ journey from ignorance to certainty—a journey Ubuntu might redeem through community, but which the dynasty plays out as a solitary, adversarial match. This convergence of philosophy, narrative, and play suggests the network is not just a model but a mirror, reflecting the human condition through the lens of one family’s epic, tragic game.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],  # Static
        'Voir': ['Bequest'],
        'Choisis': ['Strategic', 'Prosody'],
        'Deviens': ['Adversarial', 'Transactional', 'Motive'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence']
    }


# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Bequest'],
        'paleturquoise': ['Time', 'Prosody', 'Motive', 'Cadence'],
        'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'Loyalty'],
        'lightsalmon': ['Syntax', 'Punctuation', 'Strategic', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Foundational', 'Bequest'): '1/99',
        ('Grammar', 'Bequest'): '5/95',
        ('Syntax', 'Bequest'): '20/80',
        ('Punctuation', 'Bequest'): '51/49',
        ("Rhythm", 'Bequest'): '80/20',
        ('Time', 'Bequest'): '95/5',
        ('Bequest', 'Strategic'): '20/80',
        ('Bequest', 'Prosody'): '80/20',
        ('Strategic', 'Adversarial'): '49/51',
        ('Strategic', 'Transactional'): '80/20',
        ('Strategic', 'Motive'): '95/5',
        ('Prosody', 'Adversarial'): '5/95',
        ('Prosody', 'Transactional'): '20/80',
        ('Prosody', 'Motive'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Time.'): '95/5',
        ('Adversarial', 'Cadence'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Time.'): '1/6',
        ('Transactional', 'Cadence'): '1/5',
        ('Motive', 'Victory'): '1/99',
        ('Motive', 'Payoff'): '5/95',
        ('Motive', 'Loyalty'): '10/90',
        ('Motive', 'Time.'): '15/85',
        ('Motive', 'Cadence'): '20/80'
    }


# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Murdoch's", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 34 While neural biology inspired neural networks in machine learning, the realization that scaling laws apply so beautifully to machine learning has led to a divergence in how intelligence is generated. Biology is constrained by the Red Queen, whereas mankind is quite open to destroying the Ecosystem-Cost function for the sake of generating the most powerful AI.
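One detail of the listing is easy to miss: `assign_colors()` does not cover every node, and `visualize_nn()` falls back to 'lightgray' for the rest. The following check lists the affected nodes. The helper name `uncolored_nodes` is hypothetical, and the data is copied from the listing.

```python
# Node labels and color groups copied from define_layers() and assign_colors()
all_nodes = [
    "Foundational", "Grammar", "Syntax", "Punctuation", "Rhythm", "Time",
    "Bequest",
    "Strategic", "Prosody",
    "Adversarial", "Transactional", "Motive",
    "Victory", "Payoff", "Loyalty", "Time.", "Cadence",
]
color_map = {
    "yellow": ["Bequest"],
    "paleturquoise": ["Time", "Prosody", "Motive", "Cadence"],
    "lightgreen": ["Rhythm", "Transactional", "Payoff", "Time.", "Loyalty"],
    "lightsalmon": ["Syntax", "Punctuation", "Strategic", "Adversarial", "Victory"],
}


def uncolored_nodes(nodes, color_groups):
    """Return the nodes that appear in no color group, in their original order."""
    colored = {node for group in color_groups.values() for node in group}
    return [node for node in nodes if node not in colored]


missing = uncolored_nodes(all_nodes, color_map)
print(missing)  # ['Foundational', 'Grammar']
```

Whether the gray fallback for “Foundational” and “Grammar” is deliberate is not stated in the source. A check like this makes the choice visible rather than implicit.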