Revolution

The war over language is the war over reality itself. Every civilization, every ideological struggle, every institutional power play, and every attempt at social control has, at its core, a contest over syntax, meaning, and interpretation. Language is not just a vehicle of communication but the architecture of perception. To control the structure of language is to dictate the constraints of thought itself. The Murdoch empire, the publishing industry, and AI models like GPT are all combatants in this battle, wielding words as their primary instruments of influence. The neural network we use to frame this struggle maps neatly onto five layers—ignorance, deception, reason, action, and optimization—each corresponding to a different stage in the evolution of linguistic control.

Ignorance, the first layer, is the default state of human perception. It is not an indictment but an inevitability—no individual possesses total knowledge. Every person, at every moment, is operating with a limited dataset, a partial perspective of the world shaped by their exposure to information and their ability to process it. Ignorance is the void into which all competing forces project their narratives. Whether through journalism, education, or AI-generated content, the battle for language begins here. Rupert Murdoch’s empire, at its most fundamental level, is built on an understanding of this layer—its success relies on determining which gaps in knowledge to fill and which to leave empty. Likewise, language models like GPT emerge as counterforces, promising to alleviate ignorance through unprecedented access to structured information, yet simultaneously reinforcing new forms of epistemic dependence. The power struggle is not about eliminating ignorance but about shaping the contours of what remains unknown.

Fig. 21 What Exactly is Identity. A node may represent goats (in general) and another sheep (in general). But the identity of any specific animal (not its species) is a network. For this reason we might have a “black sheep”, distinct in certain ways, perhaps more like a goat than other sheep. But that’s all dull stuff. Mistaken identity is often the fuel of comedy, usually when the adversarial is mistaken for the cooperative or even the transactional.

Deception follows as the second layer, the intermediary between raw ignorance and structured understanding. Deception is a necessary function of language because language itself is an act of selection. To name a thing is to exclude other possible descriptions. Every sentence is a distortion, a compression of infinite complexity into finite syntax. This is where media and AI find themselves most aligned and most opposed: both are built upon the manipulation of syntax to create meaning. The Murdoch press curates its narratives, selecting which facts to highlight, which to omit, and which to frame in specific ways. AI models, trained on vast corpora of human syntax, learn the patterns of deception not as falsehoods but as probabilistic tendencies within language. They replicate the biases of their training data, mirroring the deception embedded in human discourse. But deception is not merely about misinformation; it is the necessary structure of all communication. Every medium, whether print, television, or AI-generated text, is a mechanism of linguistic constraint. The war is over who sets those constraints.

Reason, the third layer, is where structure emerges from deception. It is the point at which a user—a reader, a citizen, a machine-learning model—engages with language to derive meaning. This is where the contest becomes adversarial. Who determines the framework of reason? In traditional media, the power rested with editors and institutions that dictated the boundaries of rational discourse. In the era of AI, the process is more diffuse. ChatGPT and similar models do not impose a singular ideological framework but generate responses based on statistical reasoning, trained on vast, sometimes contradictory sources. Yet the illusion of neutrality is itself a form of control. A model that claims to have no opinion still enforces a structure of acceptable thought. A media empire that claims to present “both sides” still decides which voices are amplified. The battlefield of reason is fought over training data, over editorial policies, over algorithms that determine visibility and engagement. The emergence of LLMs marks an inflection point in this war—a shift from reason dictated by human gatekeepers to reason emerging from machine-driven synthesis.

Action, the fourth layer, is where reason materializes into consequence. Words are not inert; they drive elections, shape policy, and incite revolutions. Murdoch understood this. His empire was never about journalism for journalism’s sake but about the strategic deployment of language to achieve material goals. The same applies to AI, though in a more abstract sense. AI models, by generating language at scale, do not merely reflect reality but construct it. The ability to flood discourse with certain narratives, to reinforce particular frames, to subtly reweight probabilities of word associations—all of these are mechanisms of action. The shift from human-driven editorial strategy to machine-generated discourse is not a shift from bias to objectivity but from one form of strategic action to another. The question is no longer whether language is manipulated, but who, or what, is doing the manipulating.

Heaven, the fifth and final layer, represents the optimization function—what all these mechanisms are ultimately working toward. In the Murdoch empire, the optimization function was always political and financial dominance. The news was a means, not an end. AI models, however, introduce a new optimization function: predictive accuracy. GPT is trained to generate text that aligns with linguistic expectations, not necessarily with truth, justice, or ideology. But optimization functions are not fixed; they evolve based on reinforcement loops. If media empires have shaped public consciousness through selection bias, AI models now shape it through algorithmic tuning. The strategic deployment of syntax—whether through news headlines or AI-generated content—becomes an evolutionary process. If media once optimized for influence, and AI once optimized for probability, we now enter an era where both converge: an ecosystem where influence itself becomes probabilistically modeled, where optimization functions are no longer explicitly defined but emergently discovered.

This is the war of syntax. Not simply a war over individual words or policies, but a war over the structure of language itself: who controls it, who defines it, and what it ultimately optimizes for. The constitution of nations, the algorithms of search engines, the editorial choices of news outlets, and the training data of AI models are all battlegrounds in this linguistic war. Murdoch’s empire was a masterclass in old-world linguistic power: print, television, and radio, with spoken and written syntax wielded to direct the course of history. AI introduces a new form of power, one where language is no longer curated by human intent alone but shaped by machine-learned probability distributions. But the fundamental dynamic remains unchanged: those who control syntax control perception. Those who control perception control reason. Those who control reason control action. And those who control action dictate what is optimized, what is treated as truth, what is enshrined in memory, what is erased.

Language has always been the medium of control, and the emergence of AI has not altered that reality—it has only shifted its executors. The war over syntax is not new; it is the oldest war of all. The only difference now is that we are training machines to fight it for us.

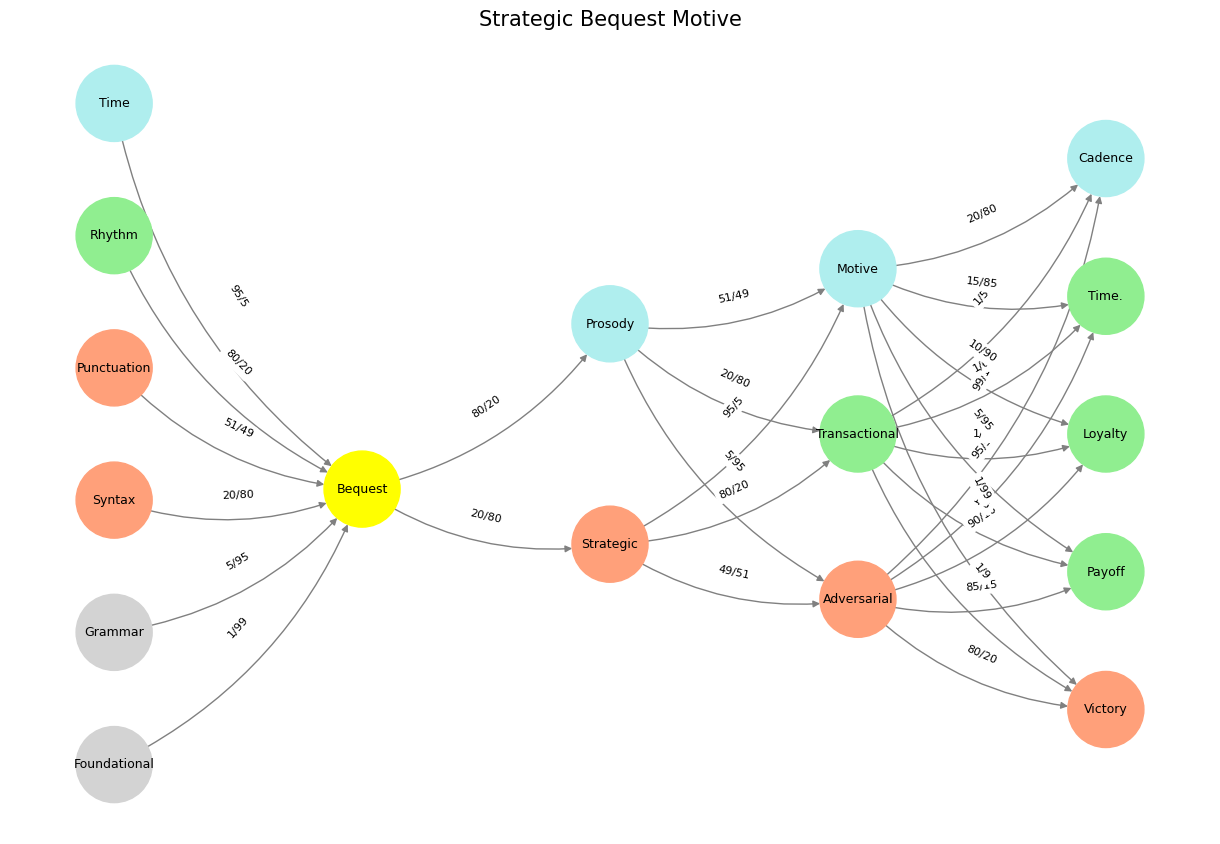

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Foundational', 'Grammar', 'Syntax', 'Punctuation', "Rhythm", 'Time'],  # Static
        'Voir': ['Bequest'],
        'Choisis': ['Strategic', 'Prosody'],
        'Deviens': ['Adversarial', 'Transactional', 'Motive'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Time.', 'Cadence']
    }

# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Bequest'],
        'paleturquoise': ['Time', 'Prosody', 'Motive', 'Cadence'],
        'lightgreen': ["Rhythm", 'Transactional', 'Payoff', 'Time.', 'Loyalty'],
        'lightsalmon': ['Syntax', 'Punctuation', 'Strategic', 'Adversarial', 'Victory'],
    }
    # Invert the mapping: node name -> color
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Foundational', 'Bequest'): '1/99',
        ('Grammar', 'Bequest'): '5/95',
        ('Syntax', 'Bequest'): '20/80',
        ('Punctuation', 'Bequest'): '51/49',
        ("Rhythm", 'Bequest'): '80/20',
        ('Time', 'Bequest'): '95/5',
        ('Bequest', 'Strategic'): '20/80',
        ('Bequest', 'Prosody'): '80/20',
        ('Strategic', 'Adversarial'): '49/51',
        ('Strategic', 'Transactional'): '80/20',
        ('Strategic', 'Motive'): '95/5',
        ('Prosody', 'Adversarial'): '5/95',
        ('Prosody', 'Transactional'): '20/80',
        ('Prosody', 'Motive'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Time.'): '95/5',
        ('Adversarial', 'Cadence'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Time.'): '1/6',
        ('Transactional', 'Cadence'): '1/5',
        ('Motive', 'Victory'): '1/99',
        ('Motive', 'Payoff'): '5/95',
        ('Motive', 'Loyalty'): '10/90',
        ('Motive', 'Time.'): '15/85',
        ('Motive', 'Cadence'): '20/80'
    }

# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    # Spread the layer's nodes evenly along y, centered on zero
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            # Nodes without an assigned color fall back to light gray
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Strategic Bequest Motive", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 22 Francis Bacon. He famously stated “If a man will begin with certainties, he shall end in doubts; but if he will be content to begin with doubts, he shall end in certainties.” This quote is from The Advancement of Learning (1605), where Bacon lays out his vision for empirical science and inductive reasoning. He argues that starting with unquestioned assumptions leads to instability and confusion, whereas a methodical approach that embraces doubt and inquiry leads to true knowledge. This aligns with his later Novum Organum (1620), where he develops the Baconian method, advocating for systematic observation, experimentation, and the gradual accumulation of knowledge rather than relying on dogma or preconceived notions.
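
The edge labels returned by `define_edges()` are plain strings such as `'20/80'`, so the graph above only displays them and never computes with them. The sketch below shows one possible way to turn such a label into a number. It rests on an assumption the source does not state: that a label `'a/b'` can be read as the share `a / (a + b)`. The helper name `ratio_to_share` and the three sample edges are illustrative only.

```python
from fractions import Fraction


def ratio_to_share(label: str) -> Fraction:
    """Read an 'a/b' edge label as the share a / (a + b).

    ASSUMPTION: the source never says how these labels should be
    interpreted; this reading is one plausible option, not the
    author's stated method.
    """
    left, right = label.split("/")
    a, b = int(left), int(right)
    if a < 0 or b < 0 or a + b == 0:
        raise ValueError(f"cannot interpret edge label {label!r}")
    return Fraction(a, a + b)


# A few edges copied from define_edges(), used purely as sample input.
sample_edges = {
    ('Syntax', 'Bequest'): '20/80',
    ('Punctuation', 'Bequest'): '51/49',
    ('Transactional', 'Victory'): '1/9',
}

shares = {edge: ratio_to_share(label) for edge, label in sample_edges.items()}

for (source, target), share in shares.items():
    print(f"{source} -> {target}: {float(share):.2f}")
```

Under this reading, numeric shares could be used to scale edge widths or opacity in the drawing, but whether that matches the intended meaning of the labels is an open question.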