Open#

1. f(t)

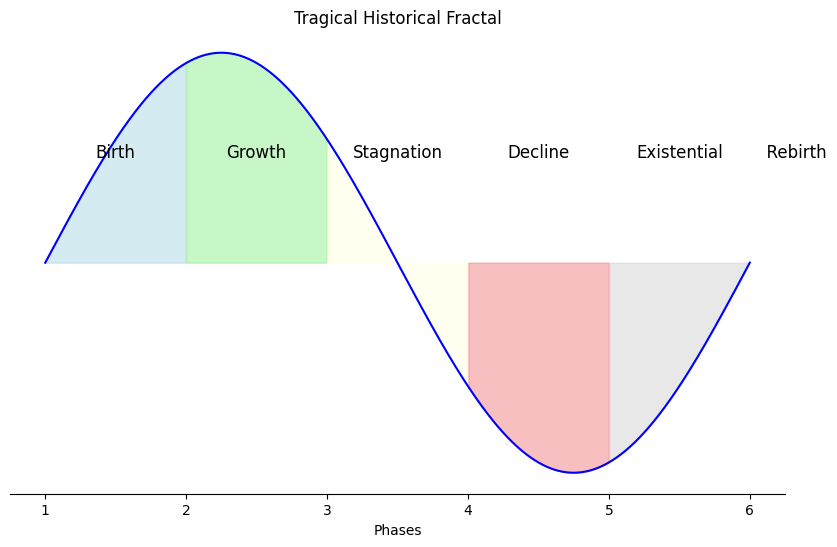

\

2. S(t) -> 4. y:h'(f)=0;t(X'X).X'Y -> 5. b(c) -> 6. SV'

/

3. h(t)

\(\mu\) text#

Pre-training: GPT models are pre-trained on a large corpus of text data. During this phase, the model learns the statistical properties of the language, including grammar, vocabulary, idioms, and even some factual knowledge. This pre-training is self-supervised: no human-labeled “correct” output is supplied; instead, the model learns by predicting the next word in a sentence based on the previous words.

In essence, GPT models are powerful because they can use the context provided by the input data to make informed predictions. The “training context” is all the data and patterns the model has seen during pre-training, and this rich background allows it to generate coherent and contextually appropriate responses. This approach enables the model to handle a wide range of tasks, from language translation to text completion, all while maintaining a contextual awareness that makes its predictions relevant and accurate.

Similarly, in a Transformer model, the “melody” can be thought of as the input tokens (words, for instance). The “chords” are the surrounding words or tokens that the attention mechanism uses to reinterpret the context of each word. Just as the meaning of the note B changes with different chords, the significance of a word can shift depending on its context provided by other words. The attention mechanism dynamically adjusts the “chordal” context, allowing the model to emphasize different aspects or interpretations of the same input.

\(\sigma\) context#

Contextual Understanding: The training phase helps the model understand context by looking at how words and phrases are used together. This is where the attention mechanism comes into play—it allows the model to focus on different parts of the input data, effectively “learning” the context in which words appear.

\(\%\) pretext#

Contextual Predictions: Once trained, the model can generate predictions based on the context provided by the input text. For example, if given a sentence, it can predict the next word or complete the sentence by considering the context provided by the preceding words. The model uses the patterns it learned during training to make these predictions, ensuring they are contextually relevant.
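The idea of predicting the next word from patterns seen in training can be illustrated with a toy bigram counter. This is only a sketch: GPT models use neural networks over much longer contexts, and the three-sentence corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, invented for this example.
corpus = [
    "the patient received a kidney transplant",
    "the patient received a third dose",
    "the patient recovered well",
]
counts = train_bigram(corpus)
print(predict_next(counts, "patient"))  # "received" follows "patient" twice, "recovered" once
```

The prediction depends only on the single preceding word here; the attention mechanism is what lets a Transformer use the whole preceding context instead.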

Dynamic Attention: The attention mechanism is key to this process. It allows the model to weigh the importance of different words or tokens in the input, effectively understanding which parts of the context are most relevant to the prediction. This dynamic adjustment is what gives GPT models their flexibility and nuanced understanding.
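The weighing described above can be sketched as scaled dot-product attention written in plain Python. This is a minimal illustration under stated assumptions: the two-dimensional query, key, and value vectors are invented, and a real model learns these vectors and uses many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Weigh each value by how well its key matches the query."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    weights = softmax(scores)
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Hypothetical vectors for one query token and three context tokens.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, output = attention(query, keys, values)
print(weights)  # the second token, whose key is orthogonal to the query, gets the least weight
print(output)
```

Changing the query changes the weights, which is the sense in which the same input token can be read differently in different contexts.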

1. Observing \ 2. Time = Compute -> 4. Collective Unconscious -> 5. Decoding -> 6. Generation-Imitation-Prediction-Representation / 3. Encoding

Subject: Re: Kind Request: R3 Course Material Reflection

Dear Ilinca,

Thank you for the reminder! I apologize for the delay.

Reflecting on the R3 courses, I found the integration of philosophical discourse and practical applications, such as Open Science, particularly impactful. The emphasis on ethical considerations in science communication and the exploration of different models, including Schramm’s, were enlightening. These elements have broadened my perspective and significantly enhanced my collaborative approach to both research and teaching.

As an Assistant Professor of Surgery, I’ve embraced the principles of Open Science, utilizing platforms like GitHub to make my research accessible and transparent. GitHub serves as a central repository where all research data and findings are stored, promoting scientific integrity and reproducibility. It allows for open-source collaboration, where anyone can view, contribute, or critique the work, aligning perfectly with the Open Science movement’s goals.

In my teaching, I’ve adopted Jupyter Books to create interactive and dynamic content, which is hosted for free on GitHub and deployed as a webpage. This has been instrumental in making complex concepts more accessible to students, providing an open and flexible learning resource. The use of GitHub Discussions has facilitated Schramm’s model of communication, enabling quick feedback and fostering a rich, collaborative learning environment. This approach not only helps reduce errors and misunderstandings but also encourages responsible science and critical thinking.

Please find attached a professional photo of myself. Here are five keywords that summarize the R3 courses for me: Truth, Rigor, Error, Sloppiness, Fraud. Your course pushed me to establish a workflow that reduces the likelihood of deviating from the pursuit of truth, and my workflow invites my collaborators to share the same values.

I hope this contributes to the symposium and materials. Please let me know if there’s anything else you need!

Warm regards,

Abi

Essays#

1 Bio#

I’m Abi, an Assistant Professor of Surgery and a third-year GTPCI PhD student seeking to put some critical thought into the “Ph” of PhD, and I think this class is perfect for the journey 🙂

2 Crisis?#

This week’s session on “Science in crisis – time for reform?” is a tour de force and addresses a vast range of topics. But I’ll focus on the question mark in the title, which to me leaves the question open for discourse, and I choose to disagree with the thesis. Fang et al., in their 2012 paper “Misconduct accounts for the majority of retracted scientific publications”, tell us that PubMed references more than 25 million articles since 1940, of which a mere 2,047 (8 per 100,000) were retracted (1). And the “crisis” in science stems from the observation that this proportion has increased 10-fold since 1975 (i.e., to 80 per 100,000). Is the increase a crisis? Or is the proportion of retractions alarming?

Like the arbitrary p<0.05 we use to decide whether an empirical finding is statistically significant, I believe we need a similar arbitrary cut-off here to tell us whether 80 retractions per 100,000 publications is statistically significant. My intuition is that it is not significantly different from a “null” rate at which human beings are bound to commit errors in their scientific endeavors. The vast majority of scientific papers (i.e., 99,920 of 100,000) are without these worrisome issues and so there is no need to sound the alarm.
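The arithmetic behind these figures can be checked in a few lines of Python. This is only a sketch of the calculation: the counts are the ones quoted above from Fang et al., the 10-fold figure is applied to the rounded rate as in the essay, and the helper name `per_100k` is an assumption of this example.

```python
def per_100k(events, total):
    """Express a proportion as a rate per 100,000."""
    return events / total * 100_000


retracted = 2_047        # retracted articles quoted above
indexed = 25_000_000     # "more than 25 million" PubMed articles

overall_rate = per_100k(retracted, indexed)
tenfold_rate = 10 * round(overall_rate)   # the 80 per 100,000 used in the essay
unaffected = 100_000 - tenfold_rate       # papers without these issues

print(round(overall_rate, 1))  # about 8 per 100,000
print(tenfold_rate, unaffected)
```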

This brings me to the next issue. Retracted articles are classified as resulting from fraud, error, plagiarism, and duplicate publication. But I think that, between error and plagiarism, the “sloppiness” of the researcher should be considered. This sloppiness is not entirely a consequence of random, cosmic error but is actually a symptom of a fundamental human trait. “Our survival mechanism as human beings orders the world into cause-effect-conclusion”, said David Mamet in his book “Three Uses of the Knife”, in which he discusses playwriting (2). He goes on to say “The subject of drama is The Lie. At the end of the drama THE TRUTH – which has been overlooked, disregarded, scorned, and denied – prevails. And that is how we know the Drama is done.” Some scientists may be sloppy because they are, like all humans, interested in ordering the world rather than in rigorously demonstrating a truth.

How different is science from the performing arts? How different from life? Fang et al. blame the incentive system of science as a key driver of fraud, and they propose that increased attention to ethics in the training of scientists will stop this trend. I disagree. If it is inherent in us to order life into cause-effect-conclusion, then scientists will always be tempted to produce such tidy narratives in their work, for all sorts of reasons, as is evident from the observation that misconduct is common at all career stages.

But while I’m pessimistic about the individual scientist’s morality, I’m pleased to note that the collective endeavor of science includes rigorous attempts to reproduce novel findings. Whenever other scientists fail to reproduce a finding, the original work is brought into question. Similarly, it has become fashionable to summarize specific scientific topics via systematic reviews and meta-analyses. This effort is, at bottom, the source of my optimism, since we will witness the averaging-out of the few “rotten fruit” with the many rigorous studies. So, on average, science is not in crisis and it has in-built mechanisms that correct fraud.

References

1. Ferric C Fang, R Grant Steen, Arturo Casadevall. Misconduct accounts for the majority of retracted scientific publications. Proc Natl Acad Sci U S A. 2012 Oct 16;109(42):17028-33. doi: 10.1073/pnas.1212247109. Epub 2012 Oct 1.

2. David Mamet. Three Uses of the Knife: On the Nature and Purpose of Drama. Accessed on November 02, 2022. https://en.wikipedia.org/wiki/Three_Uses_of_the_Knife#cite_note-1

3 Non-Rigorous#

PART A

A systematic review and meta-analysis of 5087 randomized, controlled trials published in general medical journals was conducted by a meticulous defender of science, Carlisle JB, in 2017 (1). Carlisle found data fabrication and other reasons for non-random sampling in several of these studies, including Estruch et al for the PREDIMED Study Investigators. Primary prevention of cardiovascular disease with a Mediterranean diet. NEJM 2013 (2). This latter study is my choice for Part A of this week’s activity.

Table 1 in this very highly cited study showed baseline characteristics of the study and control populations that differed substantially from what would be expected under randomization. A year later, in 2018, the study authors, in collaboration with the NEJM editors, retracted the original 2013 study and published a revised analysis that addressed the sampling errors that had been identified (3). As such, the source of error here is methodological (i.e., the research design was complex; this increased the number of protocol items and processes, and with them the likelihood of human error).

Estruch et al and PREDIMED conducted a review of how participants were assigned to the various intervention groups. This effort revealed irregularities in their randomization procedures. As honest and responsible scientists, they withdrew the original report and published a new one. It is worth noting that, once corrected, the protocol deviations did not result in any significant change in estimates and inferences from the study, consistent with what one would expect from small random error in a very large study. To date, the original, retracted study has been cited close to 6000 times whereas the corrected one has been cited about 2000 times.

I read the original study when it was published in 2013 and it’s only because of this assignment that I’ve learned about the retraction — pure chance!!!

PART B

As described by the authors in their original study, the PREDIMED trial was a parallel-group, multicenter, randomized trial (2). A total of 7447 participants from Europe, Central or South America, and other countries across the world were randomly assigned in a 1:1:1 ratio to one of three dietary intervention groups.

Was the randomization process performed at the individual level (i.e., 7447 independently sampled persons)? No: the study included members of the same households, participants were clustered within 11 study sites (i.e., intra-site correlations in the statistical analysis), and randomization procedures were used inconsistently at some sites (4).

The revised analyses corrected for the statistical correlations within families and clinics, omitted 1588 participants whose trial-group assignments were unknown or suspected to have departed from protocol, and recalculated the estimates and p-values.

Despite these revelations no significant change in the results was observed. As mentioned earlier, this is somewhat consistent with the nature of errors arising randomly as contrasted with those arising systematically — as discussed in part A. That said, the discovery of these random errors highlights a self-correcting aspect already intrinsic to science, namely the systematic review and meta-analysis of already published works.

No recommendation will change the kind of welcome vigilantism demonstrated by Carlisle. One sees that it’s quite remarkable that Carlisle approached the issue from a purely statistical perspective and had an impact at the very highest tier of peer review, the New England Journal of Medicine, and in a field of expertise (nutrition) in which they may not have had any particular interest! One also sees how responsive and responsible the authors Estruch et al were. And so this entire retraction story is quite reassuring in terms of the prospects of science or its current status.

However, I wish to recommend one other consideration: that we restore the old practice of reporting p-values in Table 1 of randomized trials to demonstrate balance across trial arms. It used to be standard practice across journals until p-values became unfashionable in certain high circles.
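As an illustration of what a Table 1 p-value for balance might look like, here is a minimal sketch of a two-sided two-proportion z-test using only the Python standard library. The counts are hypothetical and are not taken from PREDIMED, and the function name `two_proportion_p_value` is an assumption of this example; a real Table 1 would pick a test suited to each variable.

```python
import math


def two_proportion_p_value(x1, n1, x2, n2):
    """Two-sided p-value for a difference in proportions (normal approximation)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical baseline counts of a binary characteristic in two arms of 2500.
p_balanced = two_proportion_p_value(1000, 2500, 1010, 2500)
p_imbalanced = two_proportion_p_value(1000, 2500, 1150, 2500)

print(p_balanced)    # large: consistent with chance imbalance under randomization
print(p_imbalanced)  # very small: a flag that allocation may not have been random
```

Under proper randomization, small p-values in Table 1 should appear only about as often as chance allows, which is why a cluster of them invites a second look at the allocation process.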

Also, I recommend that we restore the practice of using a flow chart to describe the sampling design of study participants. Such a chart as Figure 1 may have highlighted to reviewers and readers the sampling error committed by the authors and this might have been addressed in the peer-review period or in an editorial as early as 2013.

References

1. Carlisle JB. Data fabrication and other reasons for non-random sampling in 5087 randomised, controlled trials in anaesthetic and general medical journals. Anaesthesia. 2017;72(8):944-952.

2. Estruch R et al. and PREDIMED Study Investigators. Primary prevention of cardiovascular disease with a Mediterranean diet. N Engl J Med. 2013 Apr 4;368(14):1279-90

3. Estruch R et al. and PREDIMED Study Investigators. Primary prevention of cardiovascular disease with a Mediterranean diet supplemented with extra-virgin olive oil or nuts. N Engl J Med. 2018 Jun 21;378(25):e34

4. The Nutrition Source. Harvard T.H. Chan School of Public Health. https://www.hsph.harvard.edu/nutritionsource/2018/06/22/predimed-retraction-republication/ Accessed November 9, 2022.

4 Integrity#

I think the philosophy underpinning statistics must be addressed to foster positive change in regard to intellectual honesty and integrity of science.

Two schools of philosophy exist in the world of statistical inference: Frequentist and Bayesian (1).

To those unfamiliar with these schools: I’m 100% certain that your exposure to statistics has been Frequentist. And to those familiar with them, there is no doubt that the chief reason Bayesian statistics are not routinely taught at most levels of education (including most doctoral programs at as prestigious an institution as Johns Hopkins) is that they are complex (2).

But what is universally understood about these two schools of statistical inference? Fundamentally, the frequentist school relies too heavily on any single study for statistical inference whereas the Bayesian school formalizes our prior beliefs, then formalizes a statistical interaction between the strength of those prior beliefs and the latest findings from the latest study, and then the prior beliefs are thereby updated.

Sounds complex? Yeah, that’s why they don’t teach these methods! And therein lies the problem (3). As a community, we have chosen the easy path of Frequentist statistics that relies exclusively on one study, arbitrary p-values, and all the machinations of the authors of that study including their rigor, their proneness to error, their sloppiness, and their integrity. The tough way of Bayesian statistics is always a community effort, codified in the Bayesian prior knowledge, which always tempers the new information from the new study.
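For a simple proportion, the updating described above is less daunting than it sounds. The sketch below uses the Beta-binomial conjugate update, chosen here for illustration because it needs only addition; the prior and the new-study counts are made up, and the function names are assumptions of this example.

```python
def update_beta(alpha, beta, successes, failures):
    """Beta-binomial conjugate update: add the new counts to the prior counts."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical prior: existing literature worth about 20 responders in 100 patients.
prior = (20, 80)
# Hypothetical new study: 9 responders among 10 patients.
new_study = (9, 1)

posterior = update_beta(*prior, *new_study)

print(beta_mean(*prior))              # prior belief about the response rate
print(new_study[0] / sum(new_study))  # what the new study alone would claim
print(beta_mean(*posterior))          # updated belief, tempered by the prior
```

The surprising 90% from the small study moves the estimate only modestly, which is the tempering effect of community knowledge described above.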

A fraudster who understands Bayesian statistics and who also is aware that peer reviewers and the audience at large are all masters of Bayesian statistics will be preempted from committing fraud. This would mean that teaching Bayesian statistics per se would be a preemptive solution to fraud rather than a reactive one, something described by the Director of the Office of Science Quality and Integrity as the ideal solution (4).

On a conservative note, Frequentists have met Bayesians halfway by using meta-analysis to pool inferences from several studies and to reach one unifying conclusion about our best estimate of an effect size and the degree of our uncertainty (or heterogeneity across studies). Perhaps if these frequentist methods were not restricted to advanced courses, then fraudsters may also realize that their individual efforts will be fraught if they differ substantively from the rest of the literature. In other words, a fraudster would be certain to be found out!

To conclude, I am pessimistic about the moral integrity of the individual scientist for reasons outlined in my previous two posts. But I’m optimistic about the collective integrity of science for reasons also outlined in my previous post, which today I formally christen “Bayesian inference.”

References

1. Harrington D, et al. New Guidelines for Statistical Reporting in the Journal. N Engl J Med 381;3. July 18, 2019

2. Brophy J. Bayes meets the NEJM. https://www.brophyj.com/files/med_lecture_bayes.pdf Accessed on November 16, 2022

3. Rosenquist JN. The Stress of Bayesian Medicine: Uncomfortable Uncertainty in the Face of Covid-19. N Engl J Med 384;1. January 7, 2021

4. Thornhill A. What’s all the Fuss About Scientific Integrity? https://www.doi.gov/ppa/seminar_series/video/whats-all-the-fuss-about-scientific-integrity Accessed on November 16, 2022

5 Reproducibility#

I fully agree with the general idea of Open Science. This week’s presentations essentially hypothesize that more Open Science is equal to greater likelihood of reproducibility. While this is an admirable hypothesis, I don’t think it should be exempt from the scientific process.

How about a natural experiment in which scientists voluntarily choose to adhere to the Open Science recommendations? Scientists who do not follow these recommendations may then serve as controls in this non-randomized trial.

One can prospectively or retrospectively assess whether followers of the Open Science recommendations tend to have “better outcomes” such as lower rates of retractions, higher likelihood of reproducibility, or some other metrics that the community may suggest.

Compelling real-world benefits of Open Science would speak for themselves and the pressure to conform to this new trend would emerge among the non-practicing.

That said, my personal experience has already shown me numerous benefits of “Open Science”. In my field of Transplant Surgery, there have been publicly accessible and mandated national databases for all solid-organ donations and transplants since 1987. The large datasets generated from this activity are curated by the Scientific Registry of Transplant Recipients, and this communal resource has been used to generate a larger body of outcomes research in Transplant Surgery than in other specialties of Surgery (1).

References

1. Scientific Registry of Transplant Recipients. https://www.srtr.org. Accessed November 30, 2022

6 Meta-Science#

Last week I suggested that we apply a scientific method of inquiry into the Open Science Recommendations so that we do not take our dear reproducibility activists at their own word.

I had no clue that I was already dabbling in meta science. So it really is possible to actively engage in an endeavor without having a name or definition for it. And so the 93 minutes of the first YouTube video dedicated to the question “What is Metascience?” tell me that perhaps our time and effort here could have been spent on more practical issues.

The crux of the 93-minute video is that metascience is research on research. That for me is enough. The object of interest is scientific research. And the investigators who do research on research are doing meta science. What methods do they employ? What is the epistemology? They should be, in essence, the same as those used in science, including trials, experiments, statistical analyses of findings, inferences, and conclusions.

One discussant has a vision of meta science as being interdisciplinary, with no boundaries across science fields. While I will not discuss this in detail here, I am not at all optimistic about this proposal because, in the real world, funding and financing of science is key to its sustenance. And the proposed cross-disciplinary meta science is likely to remain an orphan since each science is sui generis: it has its own unique set of issues, challenges, and most pressing questions, none of which is meta science.

What research topic would I choose to address if I had unlimited means? I would choose to develop an automated methodology that generates a meta-analysis of each paper submitted for peer-review to tell us how the inferences from the meta-analysis look before and after the new study is incorporated into the scientific literature. I’d then ask the authors, reviewers, and journal editors to write a one-sentence speculation on what makes this study unique to the existing literature.

Anyone familiar with Lancet, JAMA, and several top-tier medical journals knows that they already publish a brief research-in-context panel (What was known before? What does this paper contribute? And what remains unknown?) (1). What I propose is quantitative and reported with a classic forest plot.

My proposition would be embedded within existing research frameworks, modes of publication, and methods, and would only face opposition if it entailed extra effort for researchers. Hence, I envision this to be an automated process. The authors of the original science or the journal editors would only need to enter key items such as study outcome, risk factors, and outcome metrics. This would be original research but also placed in a broader context as research on research (i.e., meta-analysis and commentary).
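A minimal sketch of the “before and after” calculation, assuming a fixed-effect inverse-variance meta-analysis (one common pooling method, chosen here for illustration). The effect estimates and standard errors are hypothetical, e.g. log hazard ratios, and the function names are assumptions of this example.

```python
import math


def pool_fixed_effect(studies):
    """Inverse-variance pooled estimate and its standard error.

    `studies` is a list of (estimate, standard_error) pairs.
    """
    weights = [1 / se ** 2 for _, se in studies]
    pooled = sum(w * est for w, (est, _) in zip(weights, studies)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


def ci95(estimate, se):
    """Approximate 95% confidence interval."""
    return estimate - 1.96 * se, estimate + 1.96 * se


# Hypothetical existing literature and a hypothetical newly submitted study.
existing = [(-0.30, 0.15), (-0.10, 0.20), (-0.25, 0.10)]
new_study = (0.40, 0.25)

before = pool_fixed_effect(existing)
after = pool_fixed_effect(existing + [new_study])

print("before:", before[0], ci95(*before))
print("after: ", after[0], ci95(*after))
```

The two printed rows correspond to the summary rows of a forest plot with and without the submitted study. A submission that contradicts the literature shifts the pooled estimate only in proportion to its precision.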

References

1. Information for Author Item 9. Lancet. https://www.thelancet.com/pb/assets/raw/Lancet/authors/tl-info-for-authors-1660039239213.pdf. Accessed November 30, 2022.

Exercise#

Part I:

I think a fundamental logical fallacy in “Big Sugar’s Secret Ally? Nutritionists” is to mistake cause for effect (1). The basic hypothesis of the article is that the 655% increase in the prevalence of diabetes among Americans over the last 60 years is caused by an increase in consumption of sugar.

However, the true causal relationship is that diabetes causes an increase in blood sugar levels, not the other way around wherein increased blood sugar levels (from dietary sources) increase likelihood of diabetes.

What causes diabetes? More specifically, what causes type-II diabetes (the epidemic kind)? The answer is an increased resistance to the effects of insulin, arising often from a change in body composition with more percent body fat than muscle. Adipose tissue is insulin resistant.

And the cause of increased body fat over the last 60 years is reduced physical activity and a more sedentary lifestyle amongst Americans of most ages.

To say obesity is caused “by a lack of energy balance” is incontestable. The article correctly quotes the NIH in saying “in other words, by our taking in more calories than we expend.” But then the author reveals their bias by focusing on the “taking in more calories” and completely neglecting the “more than we expend.” Hence, the entire article focuses on how foods may influence our body weight through their caloric content.

But what about how increased activity and exercise may moderate our appetite and thus our calorie intake (2)? This question uncovers the bias in the author’s question and article title. That bias blinds us to the cause of dietary intake, namely, the hormones and endorphins that regulate our appetite in the first place.

Modern persons at all stages of life, including childhood, adolescence, young adulthood, middle life, and later life, have drastically reduced outdoor activity. Video games, indoor entertainment through television, sprawling suburbs, and reliance on cars for every kind of trip (from home to grocery store, to school, to work, etc.) have increased exponentially over the last 60 years and are the fundamental problem. Sedentary people eat more than active ones. So dietary intake is an effect arising from physical inactivity.

Of course the consequence of the logical fallacy discussed here is the focus on diet and nutrition instead of physical activity, lifestyle, and environmental engineering that may nudge individuals back to what their ancestors did: more physical activity for daily tasks.

Part II:

My favorite example of a statistical fallacy comes from American politics but may as well apply to clinical studies. Over the last 40 years, the American Senate has had either a Democratic or a Republican majority. But by majority I mean roughly 51% (majority party) vs. 49% (minority party) of the 100 senators, two from each state. Just today, with Georgia’s Senate runoff election complete, the Democrats hold 51 of the 100 Senate seats (3).

Those in the majority interpret this as the electoral mandate of the people who have chosen them over their rivals.

But when you subject this to statistical inference, it is essentially no different from the toss of a coin, a random electoral event bearing absolutely no meaning. When you toss a coin 100 times (to elect the senators), that may count as a sample size of n=100. And the majority of 51% may count as p=0.51. The standard error is given by the square root of p(1-p)/n, which is approximately 0.05. Thus such a slim majority is 51% (95% CI: 41.2%-60.8%).

Here we see that this isn’t statistically significantly different from the null hypothesis of a coin toss (pure chance, luck) of 50%.
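The interval above can be reproduced with a short Python sketch of the normal-approximation (Wald) confidence interval for a proportion. The function name `proportion_ci` is an assumption of this example; the inputs are the p=0.51 and n=100 used in the text.

```python
import math


def proportion_ci(p, n, z=1.96):
    """Standard error and normal-approximation 95% CI for a proportion."""
    se = math.sqrt(p * (1 - p) / n)
    return se, p - z * se, p + z * se


se, lower, upper = proportion_ci(0.51, 100)

print(round(se, 3))                                  # 0.05
print(round(lower * 100, 1), round(upper * 100, 1))  # 41.2 60.8
print(lower < 0.50 < upper)                          # True: 50% lies inside the interval
```

Because the interval contains 50%, the slim majority cannot be distinguished from a fair coin under this framing.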

References

1. Gary Taubes. Big Sugar’s Secret Ally? Nutritionists. The New York Times. Jan. 13, 2017

2. James Dorling, et al. Acute and chronic effects of exercise on appetite, energy intake, appetite-related hormones: the modulating effect of adiposity, sex, and habitual physical activity. Nutrients. 2018 Sep;10(9):1140

3. Georgia’s U.S. Senate Runoff Election Results. The New York Times. https://www.nytimes.com/interactive/2022/12/06/us/elections/results-georgia-us-senate-runoff.html. Accessed December 07, 2022.

7 Serendipity#

Viagra was developed and studied in phase I clinical trials for hypertension and angina pectoris (1). Its therapeutic effects were not substantive, and the drug did not reach phase II or III trials for these indications. But a commonly reported side effect in the phase I trial was the induction of marked penile erections (2).

Pfizer immediately recognized its commercial potential and patented it in 1996. It was then repositioned for erectile dysfunction and was approved for that indication by the FDA within a mere two years (3).

Within 3 months of FDA licensing in 1998 (4), Pfizer had earned $400m from viagra, and over the next 20 years, leading to expiry of its patent in 2020, the annual earnings from the sale of viagra were $1.2b to $2.0b, with a cumulative total of $27.6b as of 2020 (5).

The market for viagra and its competitors is expected to continue growing according to the WHO. Increased burden of lifestyle disease and sedentary lifestyle interact to cause erectile dysfunction. At a prevalence of 15% of the male population, it is projected by WHO to reach 320m persons by 2025 (6). Such is the story of an entirely serendipitous discovery by otherwise astute scientists and clinicians who were meticulous enough to profit from unintended consequences.

References

1. History of sildenafil. Wikipedia. Accessed December 22, 2022. https://en.wikipedia.org/wiki/Sildenafil#History

2. Goldstein I, et al. The Serendipitous story of sildenafil: an unexpected oral therapy for erectile dysfunction. Sexual Medicine Reviews. Volume 7, Issue 1, January 2019, Pages 115-128

3. Ellis P, et al. Pyrazolopyrimidinones for the treatment of impotence. Google Patents. Accessed December 22, 2022. https://patents.google.com/patent/US6469012B1/en

4. Cox D. The race to replace viagra. The Guardian. Accessed https://www.theguardian.com/science/2019/jun/09/race-to-replace-viagra-patents-erectile-dysfunction-drug-medical-research-cialis-eroxon

5. Worldwide revenue of Pfizer’s viagra from 2003 to 2019. Statista. Accessed December 22, 2022. https://www.statista.com/statistics/264827/pfizers-worldwide-viagra-revenue-since-2003/

6. Erectile dysfunction drugs market size, share & trends analysis report by product (Viagra, Cialis, Zydena, Levitra, Stendra), by region (North America, Europe, APAC, Latin America, MEA), and segment forecasts, 2022-2030. Grand View Research. Accessed December 22, 2022. https://www.grandviewresearch.com/industry-analysis/erectile-dysfunction-drugs-market

Final#

Humoral responses in the omicron era following three-dose SARS-CoV-2 vaccine series in kidney transplant recipients: a critical review of this preprint manuscript

McEvoy and colleagues studied antibody responses among 44 kidney transplant recipients who had received a third dose of SARS-CoV-2 vaccine (1). But no clear knowledge gap is outlined by the authors, no succinct hypothesis is proposed, and as such no simple conclusion is drawn that may guide clinical practice, guidelines, or policy. The authors compare serological response among variants (wild-type vs. beta vs. delta vs. omicron); they compare kidney transplant recipients with healthy controls; they assess humoral response using different assays (anti-RBD vs. anti-SmT1, despite the area under the curve of the receiver operating characteristic curve showing equipoise across variants); and this humoral response is assessed at different time points (pre-third dose vs. 1-month post-transplant, and pre-third dose vs. 1 month vs. 3 months). It appears as though there is author indecision regarding key study design and analytic parameters. McEvoy and colleagues do not synthesize their topic into its essential elements.

Indeed, McEvoy and colleagues reference 61 published papers, including references 1-4 on excess deaths from COVID-19 in transplant, references 5-11 on antibody responses following a second dose of vaccine, references 12-19 on booster/third dose following vaccine, references 20-27 on emerging literature on omicron variants, references 28-38 on the impact of booster vaccine on mortality, references 39-45 on the despair about vaccination not being enough even after the fourth dose, references 46-49 on antimetabolites as risk factors for non-response, and strangely references 40-61 on off-topic issues including vaccination guidelines in chronic kidney disease, to mention but one. Fewer than 10 references would suffice given highly cited prior work on response after the second, third, and even fourth dose.

Precisely because of the absence of a well-circumscribed aim, this manuscript sprawls out to no less than 44 pages in the authors’ quest for a “deeper understanding” of their topic. The authors present their results in a series of figures (five in total, each with 4-8 panels) and tables (four); this is another manifestation of author indecision about study design and study hypothesis. To contrast this paper with a worthy exemplar (reference #5), Boyarsky and colleagues present their work in 3 pages; they use only one table and one figure, and cite only six published papers (2). Their conclusion is clear: they find an improvement in anti-spike antibody responses in transplant recipients after dose 2 compared with dose 1, but they state that “a substantial proportion of transplant recipients likely remain at risk for COVID-19 after 2 doses of vaccine. Future studies [such as McEvoy and colleagues] should address interventions to improve vaccine responses including booster doses or immunosuppression modulation.” McEvoy and colleagues, to their credit, heed this recommendation and investigate a 3rd dose (booster), although they do not investigate immune modulation.

Compared with Boyarsky and colleagues, who studied antibody responses among 658 organ transplant recipients (52% kidney transplant recipients) following 2-dose SARS-CoV-2 vaccine, McEvoy and colleagues studied a small and underpowered population. Also, compared with Boyarsky and colleagues, who recruited participants from across the US via social media, McEvoy and colleagues do not define the geographic distribution of their participants or how they were selected into the study. Boyarsky and colleagues tell us that their recruitment was for participants who completed the 2-dose SARS-CoV-2 mRNA vaccine series between December 15, 2020 and March 13, 2021; however, McEvoy and colleagues do not mention the time period of their recruitment. They do casually tell us in the methods that patients were “contacted” in September 2021 when eligibility for third vaccine dosing was confirmed in Ontario, but it remains unstated in what time period the data were ultimately collected.

With an evolving pandemic where time is of the essence, these omissions in the basic description of the study participants in terms of person, place, and time must be addressed. Indeed, with the incessant emergence of new variants from the wild type to beta, delta, and omicron variants, McEvoy and colleagues do not sufficiently motivate our interest in their topic. Why should the reader be concerned about the omicron variant that emerged in 2021 while we are on the eve of 2023? And why do the authors bother with detailed analyses of the older beta and delta variants when omicron was the dominant variant at the time of study? The comparison with wild-type might be justified since it was the predominant type when the original vaccine trials were conducted in 2020.

And why do the authors compare kidney transplant recipients with healthy controls? As reported by Boyarsky and colleagues and other research groups (3), recipients of solid organ transplants are a substantively different population with regard to humoral responses following SARS-CoV-2 vaccines. And so, the merit of a healthy control population as a reference remains dubious.

Use of several non-standard or unnecessary abbreviations places a burden on the reader over the 44 pages of this manuscript. KTR, RBD, SOTR, CNI, WT, ROC, AUC, for instance, should be spelled out to give the reader, often a clinician, a more natural reading experience without the need to reference the meaning of these abbreviations. The authors are encouraged to spell out the whole word in the abstract as well as the body.

Some established demographic and clinical characteristics associated with lower postvaccination antibody response are older age; kidney, lung, pancreas, and multiorgan transplant as contrasted with liver; many years since transplant; inclusion of an antimetabolite in the maintenance immunosuppression regimen; and Pfizer-BioNTech as contrasted with Moderna (2, 3). To advance our understanding of the humoral response beyond the 2 doses described by Boyarsky and colleagues to the 3 doses described herein, it would be helpful if Table 1 of McEvoy and colleagues included these risk factors as well. Instead, McEvoy and colleagues investigated “transplant vintage”, eGFR, donor type, BMI, and previous COVID-19 infection. The authors do not tell us why they are abandoning what we already know to be risk factors of a poor humoral response. And they do not motivate us to appreciate why “transplant vintage”, eGFR, donor type, BMI, and previous COVID-19 infection should be investigated after dose 3.

Finally, the limited statistical power of this study constrains what may be drawn from McEvoy and colleagues. For this and other outlined reasons, I do not believe this manuscript will be of much value to the readers of a clinical journal on transplantation. And I do not believe the authors can address these limitations in any reasonable amount of time since the fundamental study design and sample size limit the reliability of this entire study.

References

1. McEvoy CM, et al. Humoral responses in the omicron era following three-dose SARS-CoV-2 vaccine series in kidney transplant recipients. medRxiv preprint server for health sciences. doi: https://doi.org/10.1101/2022.06.24.22276144

2. Boyarsky BJ, et al. Antibody response to 2-dose SARS-CoV-2 mRNA vaccine series in solid organ transplant recipients. JAMA. 2021;325(21):2204-6

3. Callaghan CJ, et al. Real-world effectiveness of the Pfizer-BioNTech BNT162b2 and Oxford-AstraZeneca ChAdOx1-S vaccines against SARS-CoV-2 in solid organ and islet transplant recipients. Transplantation. 2022;106(3):436-46

“At the end of the drama THE TRUTH, which has been overlooked, disregarded, scorned, and denied, prevails. And that is how we know the Drama is done.” Some scientists may be sloppy because they are, like all humans, interested in ordering the world rather than in rigorously demonstrating a truth.

Chaos - Activation, Text

Order - Utility, Context

Accuracy - Marginal, Pretext

8 Zuck#

Business | The Zuckerberg mankini

True to name: “Meta” stands for “After”, in this case, after the data, code & GPUs: i.e., the weights. Well, the end users are downloading!! But they’re settling for the crumbs that fall off Zuck’s table.

1. Data

\

2. Code -> 4. Art -> 5. Weights -> 6. Enduser-Satisfaction

/

3. GPUs

Meta is accused of “bullying” the open-source community#

It hopes its models will set the standard for open-source artificial intelligence#

Aug 28th 2024 | Los Angeles#

Imagine a beach where for decades people have enjoyed sunbathing in the buff. Suddenly one of the world’s biggest corporations takes it over and invites anyone in, declaring that thongs and mankinis are the new nudity. The naturists object, but sun-worshippers flock in anyway. That, by and large, is the situation in the world’s open-source community, where bare-it-all purists are confronting Meta, the social-media giant controlled by a mankini-clad Mark Zuckerberg.

On August 22nd the Open Source Initiative (OSI), an industry body, issued a draft set of standards defining what counts as open-source artificial intelligence (AI). It said that, to qualify, developers of AI models must make available enough information about the data they are trained on, as well as the source code and the weights of the internal connections within them (nodes, edges, scale: Graphs), to make them copyable by others. Meta, which releases the weights but not the data behind its popular Llama models (and imposes various licensing restrictions), does not meet the definition. Meta, meanwhile, continues to insist its models are open-source, setting the scene for a clash with the community’s purists.

Meta objects to what it sees as the OSI’s binary approach, and appears to believe that the cost and complexity of developing large language models (LLMs) means a spectrum of openness is more appropriate. It argues that only a few models comply with the OSI’s definition, none of which is state of the art.

Mr Zuckerberg’s eagerness to shape what is meant by open-source AI is understandable. Llama sets itself apart from proprietary LLMs produced by the likes of OpenAI and Google on the openness of its architecture, rather as Apple, the iPhone-maker, uses privacy as a selling-point. Since early 2023 Meta’s Llama models have been downloaded more than 300m times. As customers begin to scrutinise the cost of AI more closely, interest in open-source models is likely to grow.

Purists are pushing back against Meta’s efforts to set its own standard on the definition of open-source AI. Stefano Maffulli, head of the OSI, says Mr Zuckerberg “is really bullying the industry to follow his lead”. OLMo, a model created by the Allen Institute for AI, a non-profit based in Seattle, divulges far more than Llama. Its boss, Ali Farhadi, says of Llama models: “We love them, we celebrate them, we cherish them. They are stepping in the right direction. But they are just not open source.”

The definition of open-source AI is doubly important at a time when regulation is in flux. Mr Maffulli alleges that Meta may be “abusing” the term to take advantage of AI regulations that put a lighter burden on open-source models. Take the EU’s AI Act, which became law this month with the aim of imposing safeguards on the most powerful LLMs. It offers “exceptions” for open-source models (the bloc has many open-source developers), albeit with conflicting definitions of what that means, notes Kai Zenner, a policy adviser at the European Parliament who worked on the legislation. Or consider California’s SB 1047, a bill that aims for responsible AI development in Silicon Valley’s home state. In a letter this month, Mozilla, an open-source software group, Hugging Face, a library for LLMs, and EleutherAI, a non-profit AI research outfit, urged Senator Scott Wiener, the bill’s sponsor, to work with the OSI on a precise definition of open-source AI.

Imprecision raises the risk of “open-washing”, says Mark Surman, head of the Mozilla Foundation. In contrast, a watertight definition would give developers confidence that they can use, copy and modify open-source models like Llama without being “at the whim” of Mr Zuckerberg’s goodwill. Which raises the tantalising question: will Zuck ever have the pluck to bare it all? ■