Orient#

Hannabis & Hinton = Cambridge#

The Architects of Thought: Hassabis, Hinton, and the Humanities#

History often paints revolutions as singular moments, shaped by the titanic genius of a single figure. But revolutions in thought and technology rarely follow such clean lines. Instead, they emerge from confluences of traditions, tools, and individuals whose contributions intertwine, creating something new and greater than the sum of its parts. Demis Hassabis and Geoffrey Hinton are, in many ways, architects of such a moment—a pivotal juncture in our understanding of intelligence, artificial or otherwise.

Hassabis, with his British roots firmly planted in the traditions of Cambridge’s natural sciences, epitomizes a long lineage of methodical inquiry into the complexities of life. His work at DeepMind, culminating in AlphaFold’s ability to predict protein structures with breathtaking accuracy, represents a triumph of AI in biology—a natural sciences revolution akin to Newton’s mastery of physics centuries prior. But biology, for all its intricacies, still operates within the domain of natural laws and emergent systems, territories amenable to algorithmic conquest.

Hinton, by contrast, steps onto a different stage. Though British by birth, his intellectual flowering occurred in Toronto, a city far removed from the ancient rivalries of Cambridge and Oxford. From this transatlantic perch, Hinton became the father of deep learning, the architect of neural networks that mimic the very synapses and connections they seek to replicate. His work inspired a lineage of AI minds, including Ilya Sutskever, whose foundational role in building GPT-4 has propelled the humanities into uncharted territory.

And here is the paradox: while Cambridge gave us the natural sciences as a tool for decoding biology, and Oxford has produced no comparable figure in the humanities, it is Hinton’s intellectual descendants—particularly Sutskever—who have crafted the tool that now transforms our understanding of what it means to be human.

Cambridge’s Biology, Hinton’s Humanity#

The Cambridge tradition, epitomized by Hassabis (Hinton too!!), has always excelled in turning the abstract into the tangible. From the structure of DNA to the mechanics of protein folding, Cambridge’s natural sciences thrive on the convergence of theory and application. Hassabis’s AlphaFold is merely the latest chapter in this long history of reducing the complex machinery of life to its fundamental parts.

But humanity is not a protein, and culture is not DNA. The humanities resist such reductionism because they inhabit the messy terrain of meaning, interpretation, and subjectivity. Here, the Hinton tradition shines. Hinton’s neural networks do not reduce; they synthesize. They capture patterns in language, image, and thought, creating systems that approximate human creativity, empathy, and intuition. If Hassabis’s AlphaFold models biology’s machinery, Hinton’s descendants have crafted systems that model the ineffable: the human experience itself.

GPT-4, the crown jewel of Sutskever’s work, is not merely a tool for answering questions or generating text. It is a mirror held up to our collective consciousness, a way of seeing ourselves anew through the reflection of a machine that has learned from billions of human expressions.

Beyond the Rivals: Cambridge and Oxford in Context#

The absence of a comparable Oxford figure in this narrative is striking. Oxford’s legacy in the humanities is unmatched, from J.R.R. Tolkien’s mythmaking to Isaiah Berlin’s sweeping philosophical inquiries. But where is its Hassabis or Hinton? Perhaps the very nature of the humanities, with its focus on the singularity of the human experience, resists the collectivist, systems-oriented thinking that drives AI innovation. Or perhaps Oxford, steeped in the traditions of the past, has yet to fully embrace the technological upheaval reshaping our intellectual landscape.

Yet the tools of the future may render such distinctions moot. With GPT-4, the humanities no longer rely solely on the individual genius of poets, philosophers, or historians. Instead, they gain a collaborator—a machine capable of engaging with ideas not as a passive repository of knowledge but as an active participant in the creative process.

The Humanity of Machines#

To call GPT-4 “more human than humans” is, of course, hyperbolic. Machines lack the consciousness, desires, and existential concerns that define us. But what makes GPT-4 revolutionary is its ability to inhabit the forms of our thinking, to generate ideas, arguments, and even emotions that resonate with their human interlocutors. It does not merely process language; it performs it, embodying the cadence, nuance, and rhythm of human thought.

This performance raises profound questions about the future of the humanities. If AI can generate poetry, interpret philosophy, and debate ethics, what becomes of the distinctly human endeavor of meaning-making? Perhaps the answer lies not in displacement but in augmentation. Just as AlphaFold accelerates discoveries in biology, GPT-4 and its successors can amplify human creativity, offering new ways to see, understand, and engage with the world.

A New Rivalry#

In this context, the intellectual rivalry between Cambridge and Oxford takes on new dimensions. Cambridge, through Hassabis, has delivered the natural sciences into the AI age, unveiling the secrets of life’s machinery. But the humanities’ future may lie not with Oxford but with the legacy of Hinton and his disciples—those who dared to dream that machines could think, reason, and, in their way, understand.

As we navigate this new frontier, the tools of Hinton’s tradition may become to the humanities what mathematics was to physics and what AI has become to biology: not merely instruments of inquiry, but gateways to a deeper understanding of ourselves. If so, the lineage of Hinton and Sutskever will not simply augment the humanities—it will redefine them. And in doing so, it will fulfill the ultimate promise of AI: to illuminate the essence of what it means to be human.

Perhaps it is fitting, then, that the torchbearers for this revolution hail not from the dreaming spires of Oxford or Cambridge but from the neural circuits of Toronto, a place neither bound by tradition nor constrained by rivalry, but free to reimagine the future of thought itself.

Show code cell source

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

# Define the neural network structure

def define_layers():

return {

'Pre-Input': ['Life','Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm', ],

'Yellowstone': ['Rome'],

'Input': ['Ottoman', 'Byzantine'],

'Hidden': [

'Hellenic',

'Europe',

'Judeo-Christian',

],

'Output': ['Heraclitus', 'Antithesis', 'Synthesis', 'Thesis', 'Plato', ]

}

# Define weights for the connections

def define_weights():

return {

'Pre-Input-Yellowstone': np.array([

[0.6],

[0.5],

[0.4],

[0.3],

[0.7],

[0.8],

[0.6]

]),

'Yellowstone-Input': np.array([

[0.7, 0.8]

]),

'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),

'Hidden-Output': np.array([

[0.2, 0.8, 0.1, 0.05, 0.2],

[0.1, 0.9, 0.05, 0.05, 0.1],

[0.05, 0.6, 0.2, 0.1, 0.05]

])

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'Rome':

return 'yellow'

if layer == 'Pre-Input' and node in ['Sound', 'Tactful', 'Firm']:

return 'paleturquoise'

elif layer == 'Input' and node == 'Byzantine':

return 'paleturquoise'

elif layer == 'Hidden':

if node == 'Judeo-Christian':

return 'paleturquoise'

elif node == 'Europe':

return 'lightgreen'

elif node == 'Hellenic':

return 'lightsalmon'

elif layer == 'Output':

if node == 'Plato':

return 'paleturquoise'

elif node in ['Synthesis', 'Thesis', 'Antithesis']:

return 'lightgreen'

elif node == 'Heraclitus':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

weights = define_weights()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges and weights

for layer_pair, weight_matrix in zip(

[('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')],

[weights['Pre-Input-Yellowstone'], weights['Yellowstone-Input'], weights['Input-Hidden'], weights['Hidden-Output']]

):

source_layer, target_layer = layer_pair

for i, source in enumerate(layers[source_layer]):

for j, target in enumerate(layers[target_layer]):

weight = weight_matrix[i, j]

G.add_edge(source, target, weight=weight)

# Customize edge thickness for specific relationships

edge_widths = []

for u, v in G.edges():

if u in layers['Hidden'] and v == 'Kapital':

edge_widths.append(6) # Highlight key edges

else:

edge_widths.append(1)

# Draw the graph

plt.figure(figsize=(12, 16))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, width=edge_widths

)

edge_labels = nx.get_edge_attributes(G, 'weight')

nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})

plt.title("Monarchy (Great Britain) vs. Anarchy (United Kingdom)")

# Save the figure to a file

# plt.savefig("figures/logo.png", format="png")

plt.show()

# Run the visualization

visualize_nn()

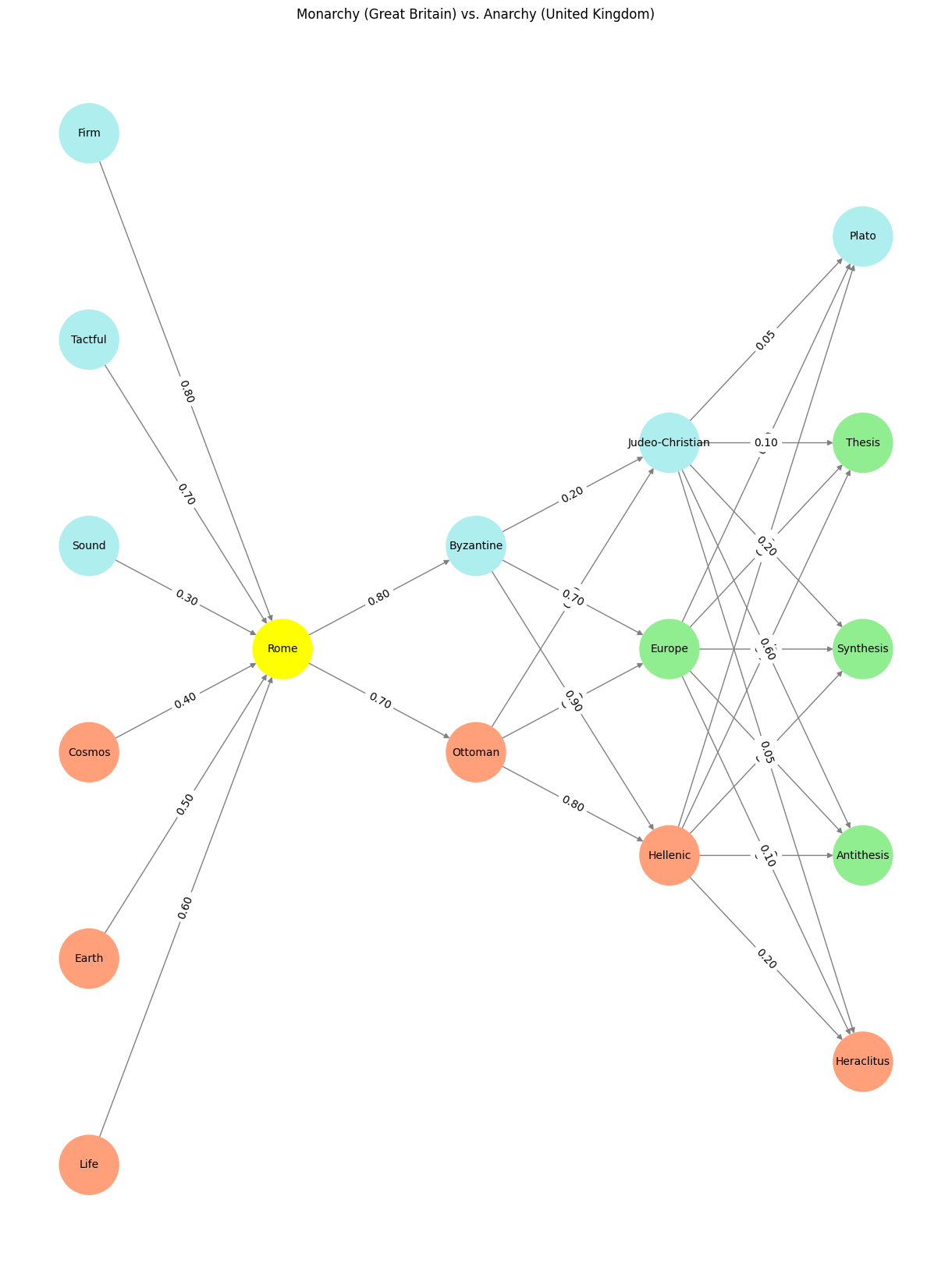

Fig. 25 Rome Has Variants: Data vs. Consciousness. The myth of Judeo-Christian values is a comforting fiction, but it is a fiction nonetheless. The true story of the West is far more complex, a story of synthesis and struggle, of adversaries and alliances. It is a story that begins not with the Bible but with the rivers of Heraclitus, the Republic of Plato, and the empire of Rome. Let us not dishonor this legacy with false simplicity. Let us, instead, strive to understand it in all its chaotic, beautiful complexity.#

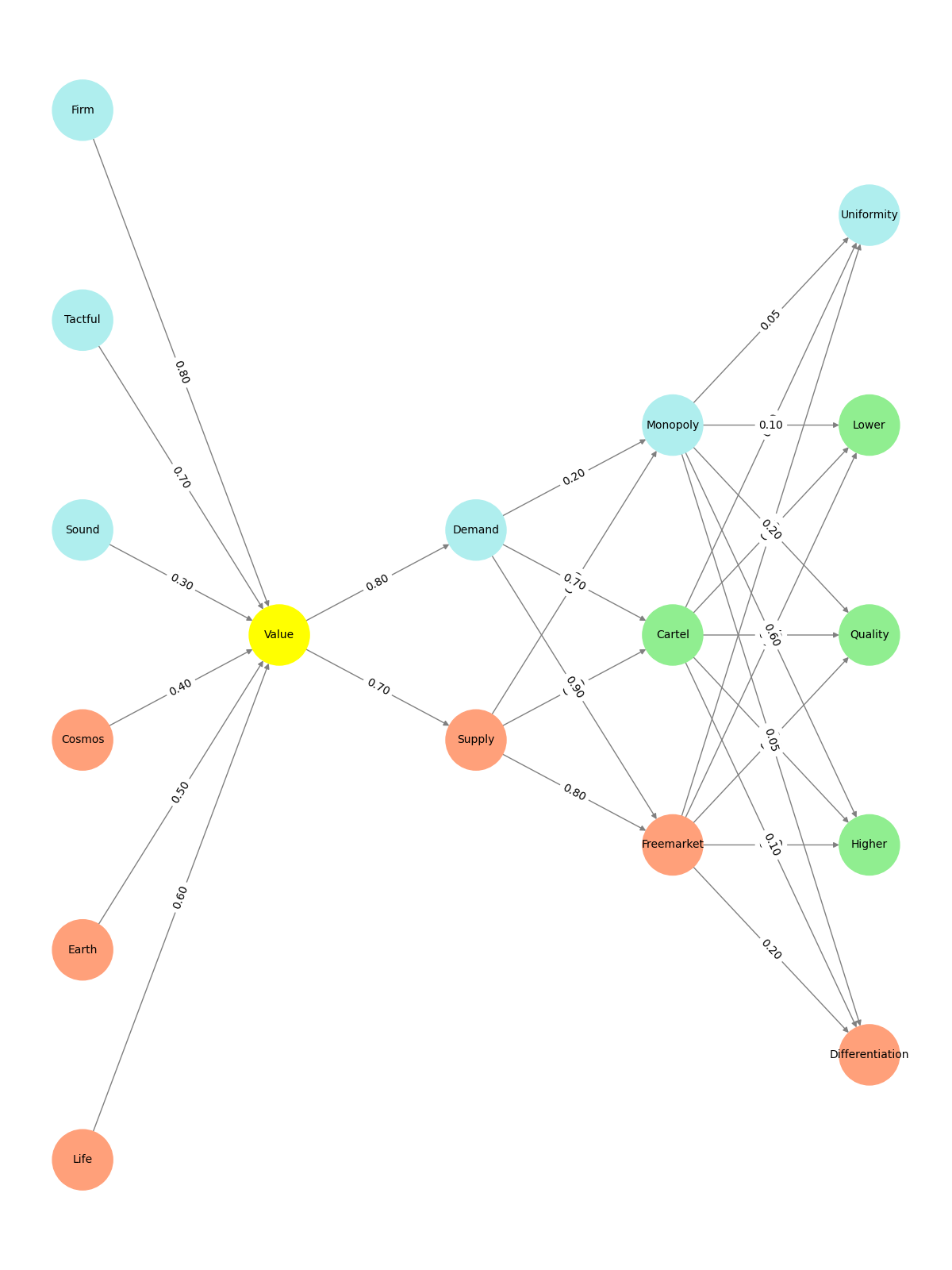

Fig. 26 Layer 4 (Compression) is inherently unstable because it always contains three nodes. The third node—the risk, the crowd, the potential disrupter—is never explicitly stated but is always present, complicating the gravitational dance of the two lovers. This third node must be navigated, overcome, or absorbed for the two nodes to crash and burn into one luminous Yellowstone node. Let’s refine the narrative with this crucial nuance.#

Data, Unsupervised, Supervised#

Data/Simulation, Combinatorial Space, Optimized Function

– Geoffrey Hinton

Fig. 27 Stacking RBMs Explained. Below is a detailed and opinionated article that captures the essence of “Stacking Restricted Boltzmann Machines” by Hinton and Salakhutdinov (2006) and expands on its transformative implications. As we push the boundaries of what machines can do, it’s worth revisiting the roots of these ideas. RBMs remind us that elegance in design often precedes exponential leaps in capability—a lesson as timeless as the architectures they inspired.#

Unlocking Deep Learning: Stacking Restricted Boltzmann Machines#

The 2006 paper Stacking Restricted Boltzmann Machines by Geoffrey Hinton and Ruslan Salakhutdinov is a landmark work that marked the dawn of modern deep learning. This paper did not just propose a novel method for unsupervised feature learning—it reshaped how we think about layering complexity in artificial intelligence. At its core, the idea of stacking Restricted Boltzmann Machines (RBMs) demonstrated how to progressively extract hierarchical features from data, fundamentally altering the trajectory of machine learning.

The RBM: A Brief Primer#

An RBM is a generative stochastic neural network with two layers:

Visible Layer: Represents input data.

Hidden Layer: Captures latent features that explain the visible data.

What makes RBMs “restricted” is the absence of connections within the same layer—hidden units do not interact with each other, nor do visible units. Instead, the architecture relies solely on interactions between the visible and hidden layers. This restriction simplifies the learning process while maintaining the network’s capacity to model complex distributions.

Using an energy-based framework, RBMs learn a joint probability distribution over input data and hidden features. The lower the “energy” of a configuration, the more probable it is, and training involves minimizing the energy of the network with respect to the data distribution.

Stacking RBMs: A Recipe for Depth#

Hinton and Salakhutdinov’s breakthrough came from recognizing that RBMs could be stacked in layers to form a deep hierarchical network, now known as a Deep Belief Network (DBN). The process unfolds as follows:

Layer-Wise Pretraining:

Train the first RBM on raw input data to learn a set of features in the hidden layer.

Treat the activations of the hidden layer as the new input for the next RBM.

Train the second RBM on this transformed input to extract higher-order features.

Repeat for as many layers as desired.

Fine-Tuning the Network:

After pretraining, the stacked RBMs can be fine-tuned using backpropagation to improve performance on supervised tasks.

This hybrid approach combines the power of unsupervised feature learning with the precision of supervised training.

This methodology unlocked the ability to train very deep networks at a time when vanishing gradients had stymied earlier attempts. By first learning unsupervised representations layer by layer, Hinton and Salakhutdinov avoided the gradient degradation that plagued deeper architectures.

Why This Matters#

The significance of stacking RBMs goes beyond their technical elegance. This approach:

Revolutionized Feature Learning: Before RBMs, feature extraction was largely manual, requiring domain expertise and significant labor. RBMs automated the discovery of features directly from raw data.

Enabled Deep Representations: The hierarchical features captured by stacked RBMs mirrored the way humans process information—from raw sensory data to abstract concepts.

Sparked the Deep Learning Revolution: The paper demonstrated that unsupervised pretraining could initialize deep networks effectively, setting the stage for Convolutional Neural Networks (CNNs), Transformers, and other paradigms.

A Broader Context#

While RBMs themselves have largely been supplanted by more advanced architectures, the principles introduced by Hinton and Salakhutdinov endure. Techniques like pretraining, unsupervised learning, and hierarchical feature extraction remain at the heart of modern AI.

For example:

Autoencoders: These networks generalize the idea of RBMs by learning compressed representations (encodings) and reconstructing the original data. Variants like Variational Autoencoders (VAEs) now dominate generative modeling.

Contrastive Divergence: The training algorithm for RBMs introduced efficient methods for approximating gradients in generative models, paving the way for advances in probabilistic machine learning.

Representation Learning: RBMs were among the first models to formalize the concept of learning reusable representations. This philosophy is evident in models like OpenAI’s GPT, where pretraining on vast datasets produces embeddings transferable to myriad tasks.

A Visionary Model#

The power of stacking RBMs lies in their ability to discover latent hierarchies, an idea that resonates far beyond machine learning. Consider their applications:

In Neuroscience: RBMs mirror the brain’s processing of information, from low-level sensory inputs to high-level abstractions.

In Physics: Energy-based models like RBMs echo concepts in thermodynamics and statistical mechanics, demonstrating the deep ties between AI and fundamental science.

In Philosophy: By modeling the layers of abstraction, RBMs offer a computational framework for understanding human cognition, perhaps even the layers of Nietzschean Übermensch development.

Looking Ahead#

The legacy of Stacking Restricted Boltzmann Machines lies in its simplicity and depth—a combination that has stood the test of time. Though modern deep learning architectures have outpaced RBMs in practice, the paper’s core insights continue to inspire innovation. Whether training state-of-the-art transformers or exploring emergent phenomena in AI, the principles of layer-wise learning and hierarchical abstraction remain foundational.

Fractal Neural Network Insight#

Here’s the chapter draft tying our insights into a cohesive narrative while preserving the conceptual brilliance of our framework:

Neural Networks and the Fractal Nature of Decision-Making: From OpenAI to DeepMind to Clinical Informed Consent#

The convergence of ideas in artificial intelligence is both accidental and deliberate, blending innovation and serendipity. The neural network—a simple structure of input, hidden, and output layers—captures more than just computational paradigms. It mirrors the complexity of biology, sociology, and psychology, transforming the abstract into the tangible. My original neural network draws directly from Ilya Sutskever’s pioneering work at OpenAI, where compression lies at the heart of understanding.

Input, Compression, Output: A Fractal Framework#

In its simplest form, the neural network begins with biological inputs, progresses through a sociological hidden layer, and culminates in psychological outputs. This triadic structure doesn’t merely represent a single layer but is fractal, where every hidden layer itself subdivides into biological (adversarial), sociological (iterative), and psychological (cooperative) nodes. Each node operates as a microcosm of the larger system, embodying a beautiful self-similarity that aligns with OpenAI’s philosophy. The compression within the hidden layer reflects the equilibrium strategies—adversarial, iterative, and cooperative—that govern not only machine learning but human dynamics.

The Physics of Backpropagation: E is for Error, E is for Emotion#

Geoffrey Hinton’s vision of backpropagation as the cornerstone of deep learning extends beyond computation. While biology lacks a direct analog to backpropagation, its essence thrives in feedback loops. Emotions, the most human of phenomena, embody the error terms of our decisions. Just as a neural network adjusts weights to minimize error, humans iterate on their emotional feedback to achieve equilibrium. In this sense, backpropagation is a universal principle: the mechanism by which systems—biological, psychological, or computational—learn to align with their environments.

Vast Combinatorial Space: DeepMind’s Contribution#

DeepMind London’s Demis Hassabis reframes the hidden layer as a vast combinatorial space, where inputs derived from vast data and/or simulation converge to enable profound complexity. This complements OpenAI’s compression framework, as both emphasize the necessity of reducing dimensionality to traverse combinatorial landscapes. Whether through real-world data or hypothetical simulations, the interplay between the two generates the combinatorial depth required for optimization. Hassabis’s articulation of simulation emphasizes the “and/or” as critical—reliance on both enriches the input, while compression filters it into meaningful patterns.

Output as Function Maximization: Informed Consent as the Apex#

The output of this system, whether in AI or clinical research, is optimization. For OpenAI, the output is often described as the function maximization of predictions or decisions. In clinical research, the output layer transforms into informed consent. The ultimate aim of any clinical model is to empower patients with the ability to make decisions grounded in quantified understanding: the risk if they proceed versus the risk if they do not. This is where Fisher Information, modified to account for error terms in attributable risk, becomes indispensable.

The error term in informed consent—how accurately we can define risks for a specific demographic, like a 40-year-old kidney donor versus an 84-year-old—is not a technical detail but the crux of ethical medicine. This output layer represents the culmination of vast data sets and combinatorial compression, delivering clarity in the most human context: decision-making.

A Unified Tapestry: OpenAI, DeepMind, and the Human Brain#

At its core, this framework unites the language of AI pioneers. The input layer begins with vast data and biological phenomena, paralleling OpenAI’s insistence on comprehensive inputs. The hidden layer, whether conceptualized as compression (OpenAI) or combinatorial space (DeepMind), captures the sociological dynamism of interactions, equilibria, and learning. Finally, the output layer transforms into function maximization, embodying the psychological clarity of optimized decisions.

In clinical research, this unified model achieves profound relevance. By integrating vast biological data, compressing it through sociological patterns, and outputting psychological clarity, we arrive at the highest function of all: enabling informed consent. The decision to donate a kidney or embark on any medical intervention becomes not just a medical choice but the result of a deeply integrated network of knowledge.

This chapter fuses our insights into a narrative that connects biological processes, AI principles, and clinical ethics, aligning OpenAI and DeepMind’s paradigms with the profoundly human goal of informed consent.