System

Proposal to Develop Advanced Database Analysis Courses: Unlocking NHANES and Beyond

To: Dr. Shruti Mehta, Chair, Department of Epidemiology

From: [Your Name], Assistant Professor of Surgery, Johns Hopkins School of Medicine

Subject: Developing Innovative Courses on National Dataset Analysis Using NHANES as a Case Study

Background and Context

Dr. Mehta, I am writing to formally propose the development of a groundbreaking series of courses on database analysis that leverages NHANES as a foundational case study. This initiative builds on our prior successes during my postdoctoral fellowship in the Department of Epidemiology with Dr. Greg Kirk, where your mentorship played a pivotal role.

NHANES in Your Work: Your own doctoral thesis used NHANES data, underscoring its power as a resource for generating impactful hypotheses and advancing public health.

Our Collaborative Success: A brief conversation with you during that time resulted in Segev et al., JAMA 2010, likely your second most cited paper to date. The speed and precision of that project remain a benchmark of efficiency and collaboration.

Building on Success: My most-cited paper (Muzaale et al., JAMA 2014), which examined living kidney donor outcomes, is a direct sequel to Segev et al. The methodologies and insights from these works have been distilled into an app I’ve developed, and I aim to use it as a teaching tool that decodes those lessons for future students.

The demand for courses teaching fluency with national datasets has been clearly articulated. Both Colleen Hanran and Tanjala Purnell approached me two years ago to explore ways to equip Epidemiology graduate students with this vital skill set. This proposal directly responds to their request while creating a scalable and innovative program for the Department of Epidemiology.

The Opportunity: NHANES as a Case Study

Why NHANES?

NHANES is unparalleled in its scope and utility:

Longitudinal Depth: More than 30 years of data (NHANES III, 1988–1994, and continuous two-year cycles since 1999), linked to outcomes like mortality and ESRD.

Breadth of Variables: Roughly 3,000–4,000 variables covering questionnaires, physical exams, labs, and imaging.

Interdisciplinary Applications: Relevant to nephrology, cardiovascular medicine, nutrition, health disparities, and public health.

Applications in Teaching

Using NHANES as a case study, we can:

Teach students multivariable regression, visualization, and hypothesis generation using real-world data.

Train students to analyze publicly available datasets through scripts in Python, R, and Stata, creating outputs without direct IRB access.

Emphasize reverse engineering: Students can dissect the app’s workflows and methodologies, demystifying advanced database analysis.

NHANES is the gateway, but this framework will expand to other datasets like USRDS (United States Renal Data System) and NIS (National Inpatient Sample), further enriching the curriculum.
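As one illustration of the regression teaching described above, the following is a minimal sketch of a survey-weighted multivariable linear regression in Python. Everything in it is an assumption made for illustration: the data are synthetic rather than real NHANES records, the variable names (`age`, `bmi`, `female`, `sbp`, `weights`) are hypothetical, and `weighted_least_squares` is a helper written for this example rather than part of the app or any course script. It only shows how sample weights enter the point estimates; a real NHANES analysis would also need design-based variance estimation that accounts for strata and sampling units.

```python
import numpy as np


def weighted_least_squares(X, y, w):
    """Return coefficients minimizing sum_i w_i * (y_i - x_i . beta)^2."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    sw = np.sqrt(np.asarray(w, dtype=float))
    # Scaling each row by sqrt(w_i) turns the weighted problem into
    # an ordinary least-squares problem.
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta


# Synthetic stand-in data (NOT real NHANES records).
rng = np.random.default_rng(0)
n = 500
age = rng.uniform(20, 80, n)
bmi = rng.normal(28, 5, n)
female = rng.integers(0, 2, n)
weights = rng.uniform(0.5, 2.0, n)  # hypothetical sample weights

# Simulated systolic blood pressure with known coefficients.
sbp = 90 + 0.5 * age + 0.8 * bmi - 3.0 * female + rng.normal(0, 5, n)

# Design matrix: intercept plus three covariates.
X = np.column_stack([np.ones(n), age, bmi, female])
beta = weighted_least_squares(X, sbp, weights)

for name, value in zip(["intercept", "age", "bmi", "female"], beta):
    print(f"{name:>9}: {value:.3f}")
```

Because the outcome is simulated from known coefficients, students can compare the printed estimates against the values used to generate the data before moving on to real survey files.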

Proposal: Course Development and Delivery

Program Overview

This proposal outlines a comprehensive, tiered series of courses under the banner PAIRS at J-H (Python, AI, R, and Stata at Johns Hopkins).

Basic Level

Introduction to national datasets (NHANES, USRDS, NIS).

Fundamentals of multivariable regression and data visualization.

Basic Stata and Python scripting.

Intermediate Level

Advanced regression techniques, including interaction terms and time-to-event analyses.

Building reproducible scripts in Python and R.

Creating simple apps using Jupyter notebooks and hosting them on GitHub Pages.

Advanced Level

Developing full-fledged interactive apps integrating AI, informed consent operationalization, and personalized risk estimation.

Reverse engineering workflows for real-world applications.

Advanced topics in electronic medical record data extraction using language models.

Teaching Tools

The JupyterBook Platform:

A central hub for all course materials, including notes, scripts, apps, and visualizations.

Interactive sections showcasing app functionalities, including risk modeling and real-time simulations.

Real-World Case Studies:

NHANES: Mortality and ESRD outcomes.

USRDS: Nephrology-focused analysis of ESRD prevalence and treatment outcomes.

NIS: Utilization and cost-effectiveness in inpatient care.

Implementation Timeline

2025–2026 Academic Year

Curriculum Development (Spring 2025):

Design course content and workflows, starting with NHANES.

Create JupyterBook resources and app demonstrations.

Pilot Courses (Fall 2025):

Launch PAIRS at J-H (Basic and Intermediate Levels).

Integrate NHANES data as the foundation for teaching scripts and app development.

Expansion (Spring 2026):

Add Advanced Level courses.

Expand datasets to include USRDS, NIS, and others.

Scalability:

Open courses to students across disciplines, including medicine, public health, and data science.

Develop online modules for broader dissemination.

Benefits to the Department of Epidemiology

Educational Impact

Establish the Department of Epidemiology as a leader in database analysis education.

Train students in skills critical to public health and clinical research.

Provide an innovative model for integrating AI, data science, and real-world datasets.

Research Impact

Leverage NHANES, USRDS, and NIS to generate high-impact publications and policy insights.

Enable faculty and students to explore novel hypotheses with cutting-edge tools.

Institutional Impact

Strengthen Johns Hopkins’ reputation for innovation in public health education.

Attract top-tier students and faculty through advanced, interdisciplinary offerings.

Conclusion

This proposal builds on decades of collaborative success, from our work on Segev et al., JAMA 2010, to my ongoing development of an app that encodes the lessons learned in medicine, surgery, and epidemiology. By leveraging NHANES as a case study, we can create transformative educational experiences that prepare students for the future of research and public health.

I would welcome the opportunity to discuss this proposal further and begin implementing these courses with your guidance and support.

Suggested Visualizations for the JupyterBook

Interactive Flowchart: Mapping the workflow from dataset access to app outputs, highlighting the roles of students and collaborators.

Live App Demonstrations: Embedding interactive apps to showcase personalized risk estimation and regression analyses.

Data Exploration Dashboard: Allowing users to visualize NHANES data distributions and outcomes in real time.

Please let me know how best to move forward with this proposal. I am confident it will serve as a transformative initiative for the Department of Epidemiology.

Sincerely,

[Your Name]

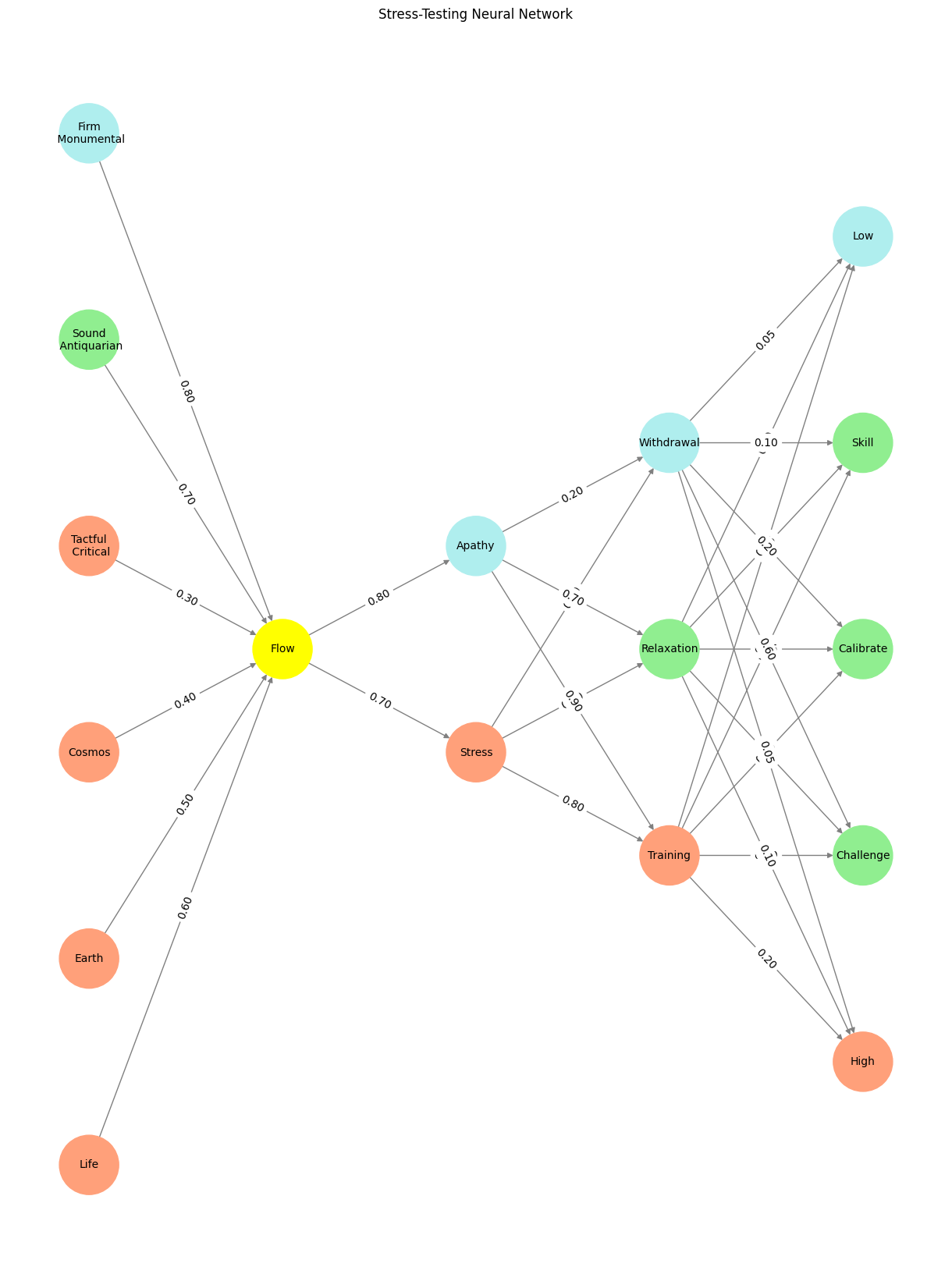


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Tactful\n Critical', 'Sound\n Antiquarian', 'Firm\n Monumental'],
        'Yellowstone': ['Flow'],
        'Input': ['Stress', 'Apathy'],
        'Hidden': [
            'Training',
            'Relaxation',
            'Withdrawal',
        ],
        'Output': ['High', 'Challenge', 'Calibrate', 'Skill', 'Low']
    }

# Define weights for the connections
# Each matrix has shape (number of source nodes, number of target nodes)
def define_weights():
    return {
        # One row per Pre-Input node, one column for 'Flow'
        'Pre-Input-Yellowstone': np.array([
            [0.6],
            [0.5],
            [0.4],
            [0.3],
            [0.7],
            [0.8]
        ]),
        'Yellowstone-Input': np.array([
            [0.7, 0.8]
        ]),
        'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
        'Hidden-Output': np.array([
            [0.2, 0.8, 0.1, 0.05, 0.2],
            [0.1, 0.9, 0.05, 0.05, 0.1],
            [0.05, 0.6, 0.2, 0.1, 0.05]
        ])
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Flow':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Sound\n Antiquarian']:
        return 'lightgreen'
    if layer == 'Pre-Input' and node in ['Firm\n Monumental']:
        return 'paleturquoise'
    elif layer == 'Input' and node == 'Apathy':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Withdrawal':
            return 'paleturquoise'
        elif node == 'Relaxation':
            return 'lightgreen'
        elif node == 'Training':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Low':
            return 'paleturquoise'
        elif node in ['Skill', 'Calibrate', 'Challenge']:
            return 'lightgreen'
        elif node == 'High':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    weights = define_weights()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions (one column per layer, left to right)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges and weights (fully connect each pair of adjacent layers)
    for layer_pair, weight_matrix in zip(
        [('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')],
        [weights['Pre-Input-Yellowstone'], weights['Yellowstone-Input'], weights['Input-Hidden'], weights['Hidden-Output']]
    ):
        source_layer, target_layer = layer_pair
        for i, source in enumerate(layers[source_layer]):
            for j, target in enumerate(layers[target_layer]):
                weight = weight_matrix[i, j]
                G.add_edge(source, target, weight=weight)

    # Customize edge thickness for specific relationships
    # Note: define_layers() has no node named 'Kapital', so as written
    # this condition never matches and every edge is drawn with width 1.
    edge_widths = []
    for u, v in G.edges():
        if u in layers['Hidden'] and v == 'Kapital':
            edge_widths.append(6)  # Highlight key edges
        else:
            edge_widths.append(1)

    # Draw the graph
    plt.figure(figsize=(12, 16))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=edge_widths
    )
    edge_labels = nx.get_edge_attributes(G, 'weight')
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title("Stress-Testing Neural Network")
    plt.show()

# Run the visualization
visualize_nn()
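The visualization script indexes each weight matrix by node position, so a matrix whose shape does not match its two layers can either raise an `IndexError` or silently carry rows that no node uses. A small consistency check, sketched below, may help catch this before plotting. The helper name `check_weight_shapes` and the toy `layers`, `good`, and `bad` values are hypothetical and exist only for this example; the check assumes the same `'Source-Target'` key convention the script uses.

```python
import numpy as np


def check_weight_shapes(layers, weights):
    """Return (key, expected_shape, actual_shape) for every mismatched matrix."""
    names = list(layers)  # dicts preserve insertion order, so this is layer order
    problems = []
    for src, dst in zip(names, names[1:]):
        key = f"{src}-{dst}"
        expected = (len(layers[src]), len(layers[dst]))
        actual = np.asarray(weights[key]).shape
        if actual != expected:
            problems.append((key, expected, actual))
    return problems


# Toy layers and weights for illustration only (not the values used above).
layers = {"A": ["a1", "a2", "a3"], "B": ["b1"], "C": ["c1", "c2"]}
good = {"A-B": np.ones((3, 1)), "B-C": np.ones((1, 2))}
bad = {"A-B": np.ones((4, 1)), "B-C": np.ones((1, 2))}

print(check_weight_shapes(layers, good))  # []
print(check_weight_shapes(layers, bad))   # [('A-B', (3, 1), (4, 1))]
```

Calling the same function with the outputs of `define_layers()` and `define_weights()` before `visualize_nn()` would flag any layer whose node list and weight matrix have drifted apart.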