Catalysts

This essay reframes both theological and scientific paradigms. It ties operational frameworks in artificial intelligence and data science to ancient philosophical and theological constructs, organizes the result into a cohesive structure, and draws out its implications.

Faith, Hope, and Charity: Operationalizing Ethics in Data Science

The enduring triad of faith, hope, and charity, as articulated in Christian theology, finds unexpected resonance in the language of artificial intelligence. These three concepts, often viewed as abstract virtues, can be given concrete operational definitions within a computational framework. Doing so bridges the seemingly disparate domains of theology, philosophy, and modern AI and reveals a universal pattern: the necessity of three interdependent layers for any process, namely input (faith), compression (hope), and output (charity). This tri-layer structure is not arbitrary; it arises as the minimal configuration for any system that seeks to process complexity into meaningful action.
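The three-layer pattern can be made concrete with a minimal forward pass. The sketch below is an illustration, not the author’s model: the layer sizes (6 inputs, 3 hidden units, 5 outputs), the random weights, and the `tanh` activation are all assumptions. The hidden layer is deliberately narrower than the input, which is what “compression” means here.

```python
import numpy as np

def forward(x, w_in_hidden, w_hidden_out):
    """Minimal input -> compression -> output pass (illustrative sketch only).

    x:            input vector (the data, 'faith')
    w_in_hidden:  projects the input into a narrower hidden layer (compression, 'hope')
    w_hidden_out: maps the hidden code to the outputs ('charity')
    """
    hidden = np.tanh(x @ w_in_hidden)  # fewer hidden units than inputs
    output = hidden @ w_hidden_out     # linear read-out
    return hidden, output

# Assumed sizes and random weights, chosen only for illustration
rng = np.random.default_rng(0)
x = rng.normal(size=6)         # 6 input features
w1 = rng.normal(size=(6, 3))   # 6 -> 3: the compression step
w2 = rng.normal(size=(3, 5))   # 3 -> 5: the output step

hidden, output = forward(x, w1, w2)
print(hidden.shape, output.shape)  # (3,) (5,)
```

Any input width larger than the hidden width would make the same point; the bottleneck forces the middle layer to keep only a summary of the data.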

Input Layer: Faith in the Data

Faith corresponds to the input layer, where the breadth and quality of the data determine the system’s potential. Faith, in this context, is not blind belief but confidence in the representativeness and inclusivity of the data. A poorly representative dataset undermines the model’s ability to generalize and leads to inequitable outcomes: a failure of faith.

In personalized medicine, this shows up in how unevenly patient profiles are represented. Data for younger, healthier individuals is often abundant, enabling precise modeling. For older or underrepresented populations, however, data is scarce, so estimates carry higher uncertainty. Faith here means trusting that the dataset encompasses the diversity and richness of human experience or, where it does not, pursuing better data collection and simulation to fill the gaps.
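A short calculation shows why scarce data widens uncertainty. Assuming each record is an independent yes/no outcome, the standard error of an event rate p estimated from n records is sqrt(p(1 - p) / n), so it shrinks only with the square root of the sample size. The event rate and cohort sizes below are hypothetical, chosen only for illustration.

```python
import math

def risk_standard_error(p: float, n: int) -> float:
    """Standard error of an event rate p estimated from n independent 0/1 records."""
    return math.sqrt(p * (1 - p) / n)

# Hypothetical numbers for illustration only: a 10% event rate,
# 10,000 records for a data-rich group and 100 for a data-poor group.
p = 0.10
se_young = risk_standard_error(p, n=10_000)
se_old = risk_standard_error(p, n=100)

# 95% interval half-widths (normal approximation)
print(f"younger: ±{1.96 * se_young:.3f}, older: ±{1.96 * se_old:.3f}")
# younger: ±0.006, older: ±0.059
```

A hundredfold difference in records yields a tenfold difference in uncertainty, which is why the gap persists even when the smaller group is not tiny.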

Output Layer: Charity and Love

The output layer corresponds to charity (the agent’s giving) and love (the recipient’s experience). In AI, the outputs are scored by a loss function, and training minimizes that loss, with backpropagation supplying the gradients, so that the model’s predictions align with reality. In theology, charity represents the active expression of love, while love itself is the perceived outcome.
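The link between outputs, loss, and training can be sketched with a single linear output layer fit by gradient descent on mean squared error. For one layer, the gradient that backpropagation would deliver has the closed form used below. Everything here is a hypothetical illustration on synthetic data: the activations `X`, the weights `true_w`, the learning rate, and the step count are assumptions.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error: the loss the output layer is trained to minimize."""
    return np.mean((pred - target) ** 2)

# Synthetic stand-ins (assumptions): hidden-layer activations and observed outcomes
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 3))
true_w = np.array([0.5, -1.0, 2.0])
y = X @ true_w + rng.normal(scale=0.1, size=200)  # "reality" with a little noise

w = np.zeros(3)  # output-layer weights to learn
lr = 0.1         # assumed learning rate
losses = []
for step in range(100):
    pred = X @ w
    losses.append(mse(pred, y))
    grad = 2 * X.T @ (pred - y) / len(y)  # dLoss/dw for this single linear layer
    w -= lr * grad                        # gradient-descent update

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In a deeper network the same update applies to every layer; backpropagation is the bookkeeping that carries the output error back to each weight.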

Within this framework, informed consent itself can be operationalized. Defining it as a loss function, one that measures the gap between the personalized estimates your app provides and real-world outcomes, yields a quantitative metric for how well the system serves individual patients. For older donors, the larger standard error that comes from sparse data reflects a less informed consent process, which underscores the ethical imperative to close these gaps.
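One way to express “informed consent as a loss function” in code is to score personalized risk estimates against observed outcomes with the Brier score (the mean squared gap between a predicted probability and the 0/1 outcome) and to report its standard error per group. This is a hedged sketch, not the app’s actual method: the Brier score is a swapped-in choice of loss, `consent_gap` is a hypothetical helper, and the group sizes, risk distribution, and estimate noise are all simulated assumptions.

```python
import numpy as np

def consent_gap(pred_risk, outcome):
    """Hypothetical helper: Brier score of risk estimates vs 0/1 outcomes, with its standard error."""
    sq_err = (pred_risk - outcome) ** 2
    return sq_err.mean(), sq_err.std(ddof=1) / np.sqrt(len(sq_err))

rng = np.random.default_rng(1)
groups = {"younger": 5000, "older": 150}  # hypothetical record counts per group
results = {}
for name, n in groups.items():
    true_risk = rng.uniform(0.01, 0.2, size=n)  # simulated underlying risks
    outcome = rng.binomial(1, true_risk)        # simulated observed outcomes
    # Simulated estimates: true risk plus the same noise in both groups
    pred_risk = np.clip(true_risk + rng.normal(0, 0.02, size=n), 0, 1)
    results[name] = consent_gap(pred_risk, outcome)

for name, (gap, se) in results.items():
    print(f"{name}: gap={gap:.4f} ± {1.96 * se:.4f}")
```

Because the estimation noise is identical across groups, the older group’s wider interval here comes purely from having fewer records, mirroring the point about sparse data.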

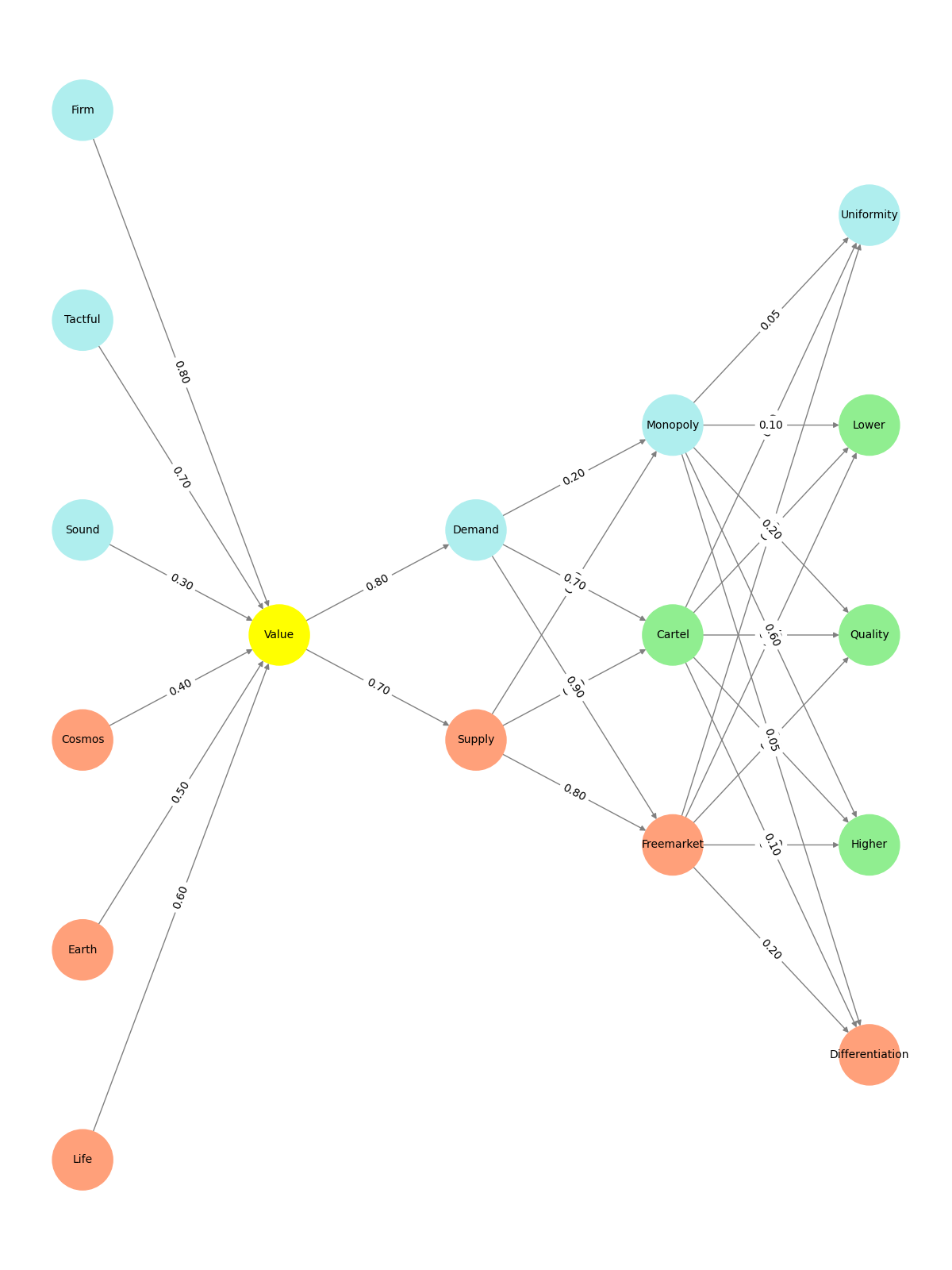

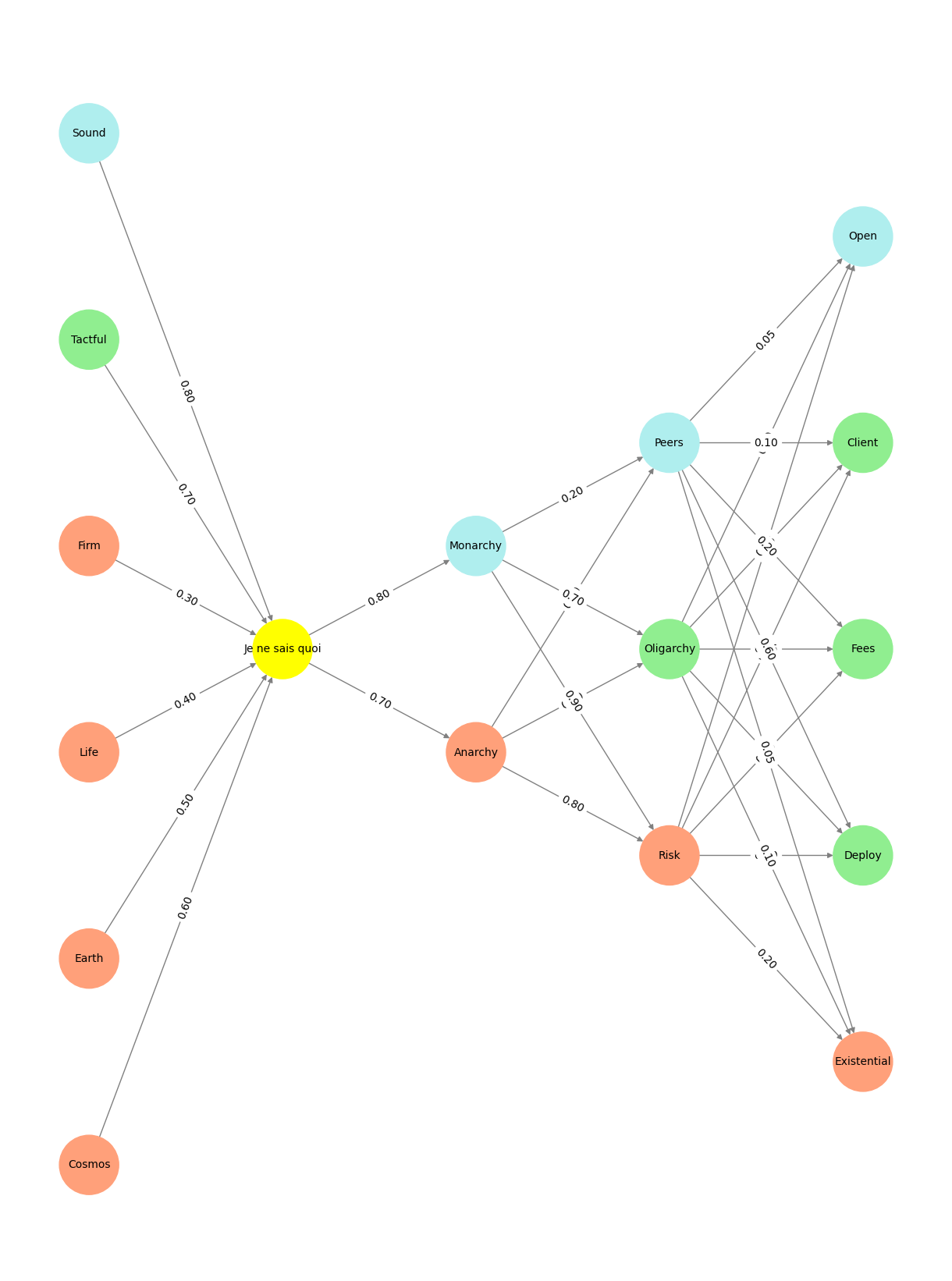


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure: layer name -> ordered list of node labels
def define_layers():
    return {
        'Pre-Input': ['Cosmos', 'Earth', 'Life', 'Firm', 'Tactful', 'Sound'],
        'Yellowstone': ['Je ne sais quoi'],
        'Input': ['Anarchy', 'Monarchy'],
        'Hidden': ['Risk', 'Oligarchy', 'Peers'],
        'Output': ['Existential', 'Deploy', 'Fees', 'Client', 'Open'],
    }

# Define weights for the connections.
# Each matrix has shape (nodes in source layer, nodes in target layer).
def define_weights():
    return {
        # 6 Pre-Input nodes -> 1 Yellowstone node
        'Pre-Input-Yellowstone': np.array([
            [0.6],
            [0.5],
            [0.4],
            [0.3],
            [0.7],
            [0.8],
        ]),
        # 1 Yellowstone node -> 2 Input nodes
        'Yellowstone-Input': np.array([[0.7, 0.8]]),
        # 2 Input nodes -> 3 Hidden nodes
        'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
        # 3 Hidden nodes -> 5 Output nodes
        'Hidden-Output': np.array([
            [0.2, 0.8, 0.1, 0.05, 0.2],
            [0.1, 0.9, 0.05, 0.05, 0.1],
            [0.05, 0.6, 0.2, 0.1, 0.05],
        ]),
    }

# Assign a fill color to each node based on its label and layer
def assign_colors(node, layer):
    if node == 'Je ne sais quoi':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Sound']:
        return 'paleturquoise'
    elif layer == 'Pre-Input' and node in ['Tactful']:
        return 'lightgreen'
    elif layer == 'Input' and node == 'Monarchy':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Peers':
            return 'paleturquoise'
        elif node == 'Oligarchy':
            return 'lightgreen'
        elif node == 'Risk':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Open':
            return 'paleturquoise'
        elif node in ['Client', 'Fees', 'Deploy']:
            return 'lightgreen'
        elif node == 'Existential':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Place a layer's nodes in a vertical column at x = center_x + offset, centered on y = 0
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    weights = define_weights()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions; layers run left to right, ending at x = 0
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add weighted edges, fully connecting each consecutive pair of layers
    for layer_pair, weight_matrix in zip(
        [('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')],
        [weights['Pre-Input-Yellowstone'], weights['Yellowstone-Input'], weights['Input-Hidden'], weights['Hidden-Output']],
    ):
        source_layer, target_layer = layer_pair
        # Each weight matrix must match the sizes of the layers it connects
        assert weight_matrix.shape == (len(layers[source_layer]), len(layers[target_layer]))
        for i, source in enumerate(layers[source_layer]):
            for j, target in enumerate(layers[target_layer]):
                weight = weight_matrix[i, j]
                G.add_edge(source, target, weight=weight)

    # Customize edge thickness for specific relationships.
    # 'Kapital' is not a node in define_layers(), so as written every edge gets width 1.
    edge_widths = []
    for u, v in G.edges():
        if u in layers['Hidden'] and v == 'Kapital':
            edge_widths.append(6)  # Highlight key edges
        else:
            edge_widths.append(1)

    # Draw the graph
    plt.figure(figsize=(12, 16))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=edge_widths,
    )
    edge_labels = nx.get_edge_attributes(G, 'weight')
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title(" ")

    # Save the figure to a file
    # plt.savefig("figures/logo.png", format="png")
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 8 In the context of your donor app, this means not only providing personalized risk estimates but also redefining informed consent as a quantifiable standard. Beyond the app, this framework offers a universal lens for understanding and improving systems in medicine, AI, and society. In the end, the greatest of these is love, but only when rooted in faith and hope.

From Theology to AI: Universalizing the Framework

This triadic framework extends beyond personalized medicine. In any domain—be it art, philosophy, or science—the same structure emerges:

Input Layer (Faith): The data or historical foundation, akin to Nietzsche’s “antiquarian” mode, preserves the richness of the past.

Hidden Layer (Hope): The combinatorial interplay, reflecting Nietzsche’s “critical” mode, critiques and reshapes the inherited data.

Output Layer (Charity/Love): The actionable result, aligned with Nietzsche’s “monumental” mode, optimizes for a future ideal.

The same structure appears even in Harold Bloom’s “school of resentment,” but there the compression layer (hope) becomes distorted, leading to systemic exclusions. The challenge, therefore, is to ensure that faith in the data and hope in the architecture are robust enough to produce outputs that embody charity.

Conclusion: Toward Operational Ethics

By aligning theological virtues with computational principles, we uncover a profound truth: ethics can be operationalized. Faith in data, hope in architecture, and charity in outcomes form the backbone of any just and effective system. This framework is not merely a philosophical abstraction but a practical tool for addressing inequities, optimizing outcomes, and advancing human flourishing.