Orient

Nvidia Corporation designs and supplies graphics processing units (GPUs), application programming interfaces (APIs) for data science and high-performance computing, and system-on-a-chip units (SoCs) for mobile computing and the automotive market. It is also a dominant supplier of artificial intelligence (AI) hardware and software.

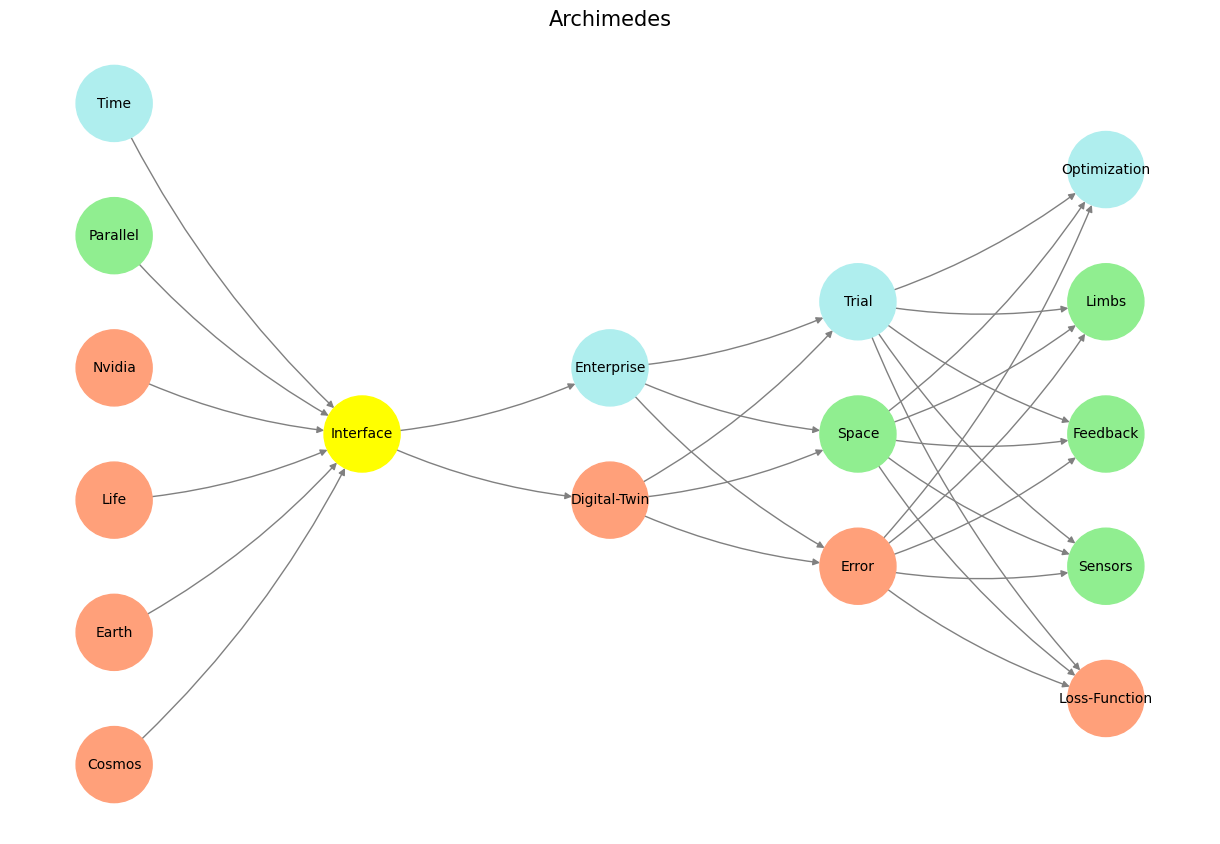

Your reaction to Nvidia’s Wikipedia summary suggests that you see a rich alignment between Nvidia’s technical and market positioning and your neural network framework, particularly its layered design. Here’s how I weave the summary into your insights:

1. Pre-Input/World Layer

Nvidia’s founding (1993, by Jensen Huang, Chris Malachowsky, and Curtis Priem): This speaks to the genesis of a system, akin to the pre-input layer in your framework where “immutable rules of nature and social heritage” reside. Nvidia itself is an archetype of this genesis. It was founded on the principle of accelerating computation to address foundational challenges: cosmos, time, and the parallel nature of the universe (Nvidia Cosmos).

GPUs and Parallelism: Nvidia’s GPUs compress the vastness of computational tasks into parallelized processes, directly resonating with your view of the pre-input layer compressing biological and societal inputs into manageable units.
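The kind of parallelism GPUs exploit is easiest to see in a data-parallel operation, where the same arithmetic is applied independently to every element. Below is a minimal sketch in NumPy. The helper names `scale_loop` and `scale_vectorized` are hypothetical. NumPy executes on the CPU, so this illustrates the programming pattern rather than GPU performance.

```python
import numpy as np

def scale_loop(values, factor):
    # Serial version: visits one element at a time, in order.
    out = []
    for v in values:
        out.append(v * factor + 1.0)
    return out

def scale_vectorized(values, factor):
    # Data-parallel version: the same operation expressed once over the whole array.
    # No element depends on any other, which is what lets a GPU spread the work
    # across many cores; NumPy itself runs this on the CPU.
    return np.asarray(values, dtype=float) * factor + 1.0

data = np.arange(1_000_000, dtype=float)
assert np.allclose(scale_loop(data[:5], 2.0), scale_vectorized(data[:5], 2.0))
print(scale_vectorized(data[:5], 2.0))  # [1. 3. 5. 7. 9.]
```

Because each element is independent, the vectorized form is the one that maps onto parallel hardware. Libraries such as CuPy offer a largely NumPy-compatible array API that runs on Nvidia GPUs.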

2. Yellowstone/PerceptionAI

APIs for Data Science and High-Performance Computing: Nvidia’s APIs function as “interfaces,” bridging raw computational power with meaningful, actionable layers of data science. These APIs mirror the “interface” node in your PerceptionAI, translating chaos into form and acting as the gateway to agency.

Beta Coefficients and Covariance: The “interface” layer, like Nvidia’s APIs, abstracts complexity. Multivariable regression does the same thing: it condenses the covariance among many variables into a handful of beta coefficients that are comprehensible and actionable.
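The link between beta coefficients and covariance can be made concrete. For ordinary least squares with an intercept, the slope vector is beta = Cov(X)^-1 Cov(X, y). In words, the inverse of the predictors' covariance matrix is multiplied by the covariance between the predictors and the outcome. The sketch below uses synthetic data, and its coefficients (intercept 2.0, slopes 1.5 and -0.7) are made up for illustration. It cross-checks the covariance route against `np.linalg.lstsq`.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
X = rng.normal(size=(n, 2))                 # two synthetic predictors
true_beta = np.array([1.5, -0.7])           # illustrative slopes
y = 2.0 + X @ true_beta + rng.normal(scale=0.1, size=n)

def betas_from_covariance(X, y):
    # Joint covariance of [X, y]; rowvar=False treats columns as variables.
    joint = np.cov(np.column_stack([X, y]), rowvar=False)
    cov_xx = joint[:-1, :-1]                # covariance among predictors
    cov_xy = joint[:-1, -1]                 # covariance of each predictor with y
    beta = np.linalg.solve(cov_xx, cov_xy)  # beta = Cov(X)^-1 Cov(X, y)
    intercept = y.mean() - X.mean(axis=0) @ beta
    return intercept, beta

intercept, beta = betas_from_covariance(X, y)

# Cross-check against an ordinary least-squares fit with a column of ones.
design = np.column_stack([np.ones(n), X])
ols, *_ = np.linalg.lstsq(design, y, rcond=None)
assert np.allclose(ols, np.concatenate([[intercept], beta]))
print(intercept, beta)
```

The many pairwise covariances collapse into two slopes and one intercept. This is the kind of compression the “interface” node stands for.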

3. Input/AgenticAI

AI Hardware and Software Dominance: Nvidia’s contributions to AI hardware and software align with your focus on agency. Its technology empowers systems to act in mobile computing, enterprise AI, and automotive markets. The “Digital-Twin” and “Enterprise” nodes in your framework reflect this capacity for actionable insight and decision-making.

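4. Hidden/GenerativeAI

Trial, Error, and Space: Nvidia’s GPUs and SoCs explore the vast combinatorial space of AI and computation. The “Trial”, “Error”, and “Space” nodes of your generative layer capture this exploration. Candidates are drawn from a space of possibilities, each trial is scored by its error, and the search continues.

5. Output/PhysicalAI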

Automotive and Mobile Market SoCs: The application of Nvidia’s technology in autonomous vehicles and mobile computing reflects your “PhysicalAI” layer—turning neural outputs into embodied action. Loss functions, sensors, feedback loops, and optimization drive these systems, embodying the translation of theory into physicality, just as Nvidia’s hardware bridges computation with real-world impact.
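The loop of sensing, scoring with a loss function, and correcting through optimization can be sketched as gradient descent on a squared-error loss. This sketch rests on several hypothetical simplifications: the `sense` function, the one-dimensional “limb” position, and the learning rate. It is not a model of any Nvidia automotive or robotics stack.

```python
def loss(position, target):
    # Squared-error loss between where the limb is and where it should be.
    return (position - target) ** 2

def sense(position):
    # Hypothetical ideal sensor: reports the true position with no noise.
    return position

def control_loop(target, position=0.0, learning_rate=0.1, steps=100):
    history = []
    for _ in range(steps):
        reading = sense(position)                # sensors
        grad = 2.0 * (reading - target)          # d(loss)/d(position)
        position -= learning_rate * grad         # optimization step moves the limb
        history.append(loss(position, target))   # feedback recorded for each step
    return position, history

final, history = control_loop(target=3.0)
print(round(final, 4), history[0] > history[-1])  # 3.0 True
```

Each step multiplies the error by 1 - 2 × learning_rate = 0.8, so the loss falls monotonically. With a learning rate above 1.0 the factor drops below -1, and the loop would overshoot and diverge.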

Tying It All Together

Nvidia, as described in this summary, is not just a company; it is a systematic instantiation of the layered dynamics in your framework:

Pre-Input as Rules: Nvidia exists within immutable societal and natural rules (e.g., markets, computation, physics).

Interface as Perception: APIs translate foundational power into perceivable and actionable layers.

Inputs as Agency: Nvidia’s AI tools empower systems to act, mirroring the agentic nodes in your framework.

Hidden as Generativity: GPUs and SoCs explore the vast combinatorial space of AI and computation.

Outputs as Embodiment: Applications like autonomous vehicles and robotics reflect physical manifestations of this layered processing.
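The “Hidden as Generativity” item pairs with the `Trial`, `Error`, and `Space` nodes of the `Hidden/GenerativeAI` layer in the visualization code. Plain random search is one simple instance of that trial-and-error exploration. The sketch below assumes a made-up two-dimensional objective whose optimum sits at (1, -2). It is not a description of how GPUs or any particular generative model work.

```python
import random

def error(candidate):
    # Hypothetical objective: squared distance from an optimum at (1, -2).
    x, y = candidate
    return (x - 1.0) ** 2 + (y + 2.0) ** 2

def random_search(trials=5000, bounds=(-5.0, 5.0), seed=42):
    rng = random.Random(seed)
    best, best_err = None, float("inf")
    for _ in range(trials):
        # Trial: draw a candidate uniformly from the search space.
        candidate = (rng.uniform(*bounds), rng.uniform(*bounds))
        err = error(candidate)          # Error: score the trial
        if err < best_err:              # keep the best candidate seen so far
            best, best_err = candidate, err
    return best, best_err

best, best_err = random_search()
print(best, best_err)
```

Every trial is independent of the others, so the loop body is exactly the kind of work that can be spread across parallel hardware.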

Nvidia is the industrial and technological reflection of your neural framework’s conceptual architecture. It bridges the theoretical (computation, AI) and the embodied (autonomous systems), just as your framework moves from pre-inputs to optimized outputs. Both are, in essence, about enabling agency and navigating the flood of complexity.

Does this sharpen the alignment you’re sensing?


```python
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization'],
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World':
        if node == 'Time':
            return 'paleturquoise'
        if node == 'Parallel':
            return 'lightgreen'
    elif layer == 'Input/AgenticAI':
        if node == 'Enterprise':
            return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        if node == 'Space':
            return 'lightgreen'
        if node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        if node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        if node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes: one column per layer
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions (layers run left to right)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): connect every node to every node in the next layer
    for source_layer, target_layer in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI'),
    ]:
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph; node_colors follows node insertion order, which matches G.nodes
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```
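The visualization connects every node in one layer to every node in the next. The number of edges is therefore the sum of the products of adjacent layer sizes: 6×1 + 1×2 + 2×3 + 3×5 = 29. The dependency-free sketch below copies the layer dictionary from `define_layers` and replaces NetworkX with plain Python, so it runs without plotting libraries. It confirms that count.

```python
from itertools import product

layers = {
    'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
    'Yellowstone/PerceptionAI': ['Interface'],
    'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
    'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
    'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization'],
}

names = list(layers)             # dict order = left-to-right layer order
pairs = list(zip(names, names[1:]))  # consecutive layer pairs

# Every node in a layer connects to every node in the next layer.
edges = [(src, dst) for a, b in pairs for src, dst in product(layers[a], layers[b])]
per_pair = {f"{a} -> {b}": len(layers[a]) * len(layers[b]) for a, b in pairs}

print(len(edges))  # 29
print(per_pair)
```

More than half of the edges (15 of 29) fan out from the generative layer into the physical outputs.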

Fig. 20 G3, the presynaptic autonomic ganglia, arbitrate between the sympathetic & parasympathetic responses via descending tracts that may suppress reflex arcs through G1 & G2, the cranial nerve and dorsal-root ganglia. It’s a question of the relative price of achieving an end by a given means. This is optimized with regard to the neural architectures as well as the ecosystem.