Anchor ⚓️

The idea that time can be compressed by searching a vastly larger combinatorial space connects biology, machine learning, and physics. Here is how these threads unite:

1. Compression of Time in Machine Learning

Machine learning, exemplified by systems like AlphaFold and GPT-4, achieves remarkable feats by leveraging vast combinatorial spaces. Training and inference evaluate many possibilities at once, collapsing what would otherwise be sequential trial and error into a single computational process. The key steps in this compression are:

AlphaFold:

Biology provides the primary structure of proteins via DNA sequences, and AlphaFold predicts the three-dimensional (tertiary) structure from that sequence; its multimer variant extends this to complexes (quaternary structure). It does not simulate the physical folding pathway step by step.

It achieves this by training on known protein structures and extracting patterns of folding through supervised learning.

Instead of time-consuming physical experiments, AlphaFold compresses time by inferring a likely structure computationally, drawing on evolutionary information from related sequences and on learned structural regularities rather than enumerating every feasible configuration.

GPT-4:

It operates by predicting the next likely token in a sequence, but its attention mechanisms and training on enormous datasets allow it to learn general patterns of language, reasoning, and structure.

Just as proteins fold into biologically functional shapes, GPT-4 can be said to “fold” language into functional meaning: at each step it scores every token in its vocabulary at once rather than testing candidates one by one.
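The next-token step described above can be sketched in a few lines. This is only a toy illustration: the four-word vocabulary and the scores are hypothetical, whereas a real model such as GPT-4 computes its scores with a large neural network over a far larger vocabulary.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical vocabulary and made-up scores for the context "proteins fold into".
vocab = ["shapes", "language", "time", "space"]
logits = [2.0, 0.5, 0.1, 1.0]

probs = softmax(logits)                      # every candidate is scored in one pass
next_token = vocab[probs.index(max(probs))]  # greedy choice: take the most likely token
print(dict(zip(vocab, (round(p, 3) for p in probs))), "->", next_token)
```

The point of the sketch is that all candidates receive a probability simultaneously; real systems usually sample from this distribution rather than always taking the maximum.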

Both systems reflect a fundamental principle: time-consuming exploration in the physical world is replaced by abstract, high-dimensional computation.
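To give a rough sense of how large these combinatorial spaces are, here is a back-of-the-envelope sketch. The numbers are assumptions chosen for illustration, not figures from the text: 3 conformations per residue for a 100-residue protein (a Levinthal-style estimate) and a hypothetical 50,000-token vocabulary for a 20-token sentence.

```python
import math


def log10_space_size(options_per_position: int, length: int) -> float:
    """Return log10(options_per_position ** length) without building the huge integer."""
    return length * math.log10(options_per_position)


# Assumed, illustrative parameters (not taken from the text).
protein_log10 = log10_space_size(3, 100)      # 3 conformations per residue, 100 residues
text_log10 = log10_space_size(50_000, 20)     # 50,000-token vocabulary, 20 tokens

print(f"protein conformations ~ 10^{protein_log10:.1f}")
print(f"token sequences       ~ 10^{text_log10:.1f}")
```

Spaces of this size cannot be enumerated one candidate at a time, which is why learned models that generalize from patterns matter more than brute-force search.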

2. Biological Learning and the Red Queen Hypothesis

Biological learning—both on evolutionary and neurological scales—demonstrates compression of time through adaptation within combinatorial landscapes:

Evolutionary Arms Race:

The Red Queen hypothesis posits that species must constantly evolve not just to gain an advantage but simply to hold their ground against co-evolving competitors, predators, and parasites in a shifting environment.

Evolution works by “exploring” genetic and phenotypic combinatorial spaces. Sexual reproduction, genetic recombination, and mutation vastly increase the diversity of possibilities for adaptation.

Over generations, nature “compresses” time by pruning vast possibilities to focus on the fittest configurations.
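The loop of recombination, mutation, and pruning described above can be sketched as a toy genetic algorithm. Everything here is an illustrative assumption: the bit-string genomes, the "count the 1s" fitness function, and the parameter values are hypothetical stand-ins, not a model of real evolution.

```python
import random


def fitness(genome):
    """Toy fitness: number of 1-bits (the classic OneMax problem)."""
    return sum(genome)


def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def crossover(a, b, rng):
    """Single-point recombination of two parents."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def evolve(pop_size=30, genome_len=20, generations=40, rate=0.02, seed=0):
    """Return the best fitness seen in each generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(genome_len)] for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(pop[0]))
        survivors = pop[: pop_size // 2]  # selection prunes the weaker half
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rate, rng)
            for _ in range(pop_size - len(survivors))
        ]
        pop = survivors + children        # survivors are carried over unchanged
    return best_per_generation


history = evolve()
print(history[0], "->", history[-1])
```

Because the surviving half is carried over unchanged, the best fitness can never decrease from one generation to the next; the population as a whole does the exploring.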

Neurological Learning:

In human brains, neural networks constantly reweight synaptic connections, creating a dynamic combinatorial space of possibilities. This process loosely mirrors machine learning, where weights adjust through feedback loops in response to environmental stimuli.

Biological learning and machine learning converge through this shared feedback-based exploration of vast combinatorial spaces to create optimized configurations that can “fold” complex information into actionable insights.
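The feedback-driven reweighting described above can be illustrated with the smallest possible case: a single artificial neuron trained by gradient descent. This is a sketch under stated assumptions; the target rule y = 2x + 1, the learning rate, and the function name `train_neuron` are all hypothetical, and nothing here claims to model biological synapses.

```python
def train_neuron(samples, lr=0.1, epochs=200):
    """Fit y ≈ w * x + b by gradient descent on squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in samples:
            error = (w * x + b) - y  # feedback signal: prediction minus target
            w -= lr * error * x      # gradient of 0.5 * error**2 with respect to w
            b -= lr * error          # gradient of 0.5 * error**2 with respect to b
    return w, b


# Hypothetical "environment": stimuli x in [-1, 1] with responses y = 2x + 1.
samples = [(k / 4, 2 * (k / 4) + 1) for k in range(-4, 5)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))
```

Each pass nudges the weights in the direction that reduces the error, so the learned values approach the rule that generated the data.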

3. Einstein’s Space-Time as Unified Construct

Einstein’s concept of space-time elegantly bridges these ideas:

Time and space are not separate but part of a unified fabric: observers in relative motion can disagree about the time elapsed between two events, so time is not a single universal clock ticking at the same rate for everyone.

By analogy, machine learning and biological evolution both use space (combinatorial possibility) to “collapse” time (linear trial and error).

The act of folding proteins (AlphaFold), generating coherent language (GPT-4), and navigating ecological arms races (Red Queen) all reflect this treatment of space and time as a single construct:

Time is “compressed” as algorithms and organisms operate in higher-dimensional spaces where multiple pathways are considered simultaneously.

For systems capable of such parallel exploration, a strictly linear, one-trial-at-a-time picture of time stops being a useful description of how solutions are found.
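For readers who want the physics behind the "unified fabric" mentioned at the start of this section, special relativity makes it precise through the invariant interval between two events. The sign convention used here is one common choice, not something the text specifies.

```latex
\Delta s^2 = -c^2\,\Delta t^2 + \Delta x^2 + \Delta y^2 + \Delta z^2
```

Observers in relative motion may measure different values of $\Delta t$ and of the spatial separations, but they all compute the same $\Delta s^2$. The combinatorial "space for time" trade discussed in this section is an analogy to this mixing of space and time, not a consequence of it.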

Unifying Principles

Across biology, machine learning, and Einsteinian physics, the common thread is the optimization of learning and adaptation through compressed time:

Vast Combinatorial Space: Both AlphaFold and GPT-4 navigate immense combinatorial spaces, similar to the evolutionary possibilities considered in the Red Queen hypothesis.

Feedback Loops: Iterative refinement—whether through machine learning backpropagation, genetic selection, or synaptic plasticity—serves to accelerate the convergence on optimal solutions.

Time-Space Compression: Einstein showed us that time and space are interdependent. By analogy, machine learning and evolution show how widening the search (more candidates explored at once) can shorten the time needed to find a solution.
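The trade of "space" for "time" in the last principle can be made concrete with a deliberately simple sketch. It assumes an exhaustive scan over a hypothetical space of one million candidates, where `width` candidates are checked per time step; the function name and the numbers are illustrative only.

```python
def steps_to_find(target_index: int, width: int) -> int:
    """Time steps needed to reach `target_index` when `width` candidates are checked per step."""
    return target_index // width + 1


space_size = 1_000_000
target = space_size - 1  # worst case: the target is the last candidate scanned

# Widening the search (more candidates per step) shortens the time to the answer.
for width in (1, 1_000, 1_000_000):
    print(width, steps_to_find(target, width))
```

The total work is unchanged; only its arrangement differs. Learned models go further than this brute-force picture by avoiding most of the candidates altogether.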

Closing the Loop

What emerges is a meta-theory of learning and adaptation, where time becomes a dimension of space, and vast combinatorial possibility becomes the engine of progress:

Biology represents embodied exploration: Red Queen dynamics compress eons of trial into snapshots of evolutionary innovation.

Machine Learning represents abstract exploration: AlphaFold and GPT-4 achieve in hours or days what would take humans decades or lifetimes.

Physics reveals the deep substrate of this process, showing that time and space are facets of the same underlying reality.

This synthesis is a testament to how nature, mathematics, and intelligence—human or artificial—are bound by the same principles of exploration and emergence.

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

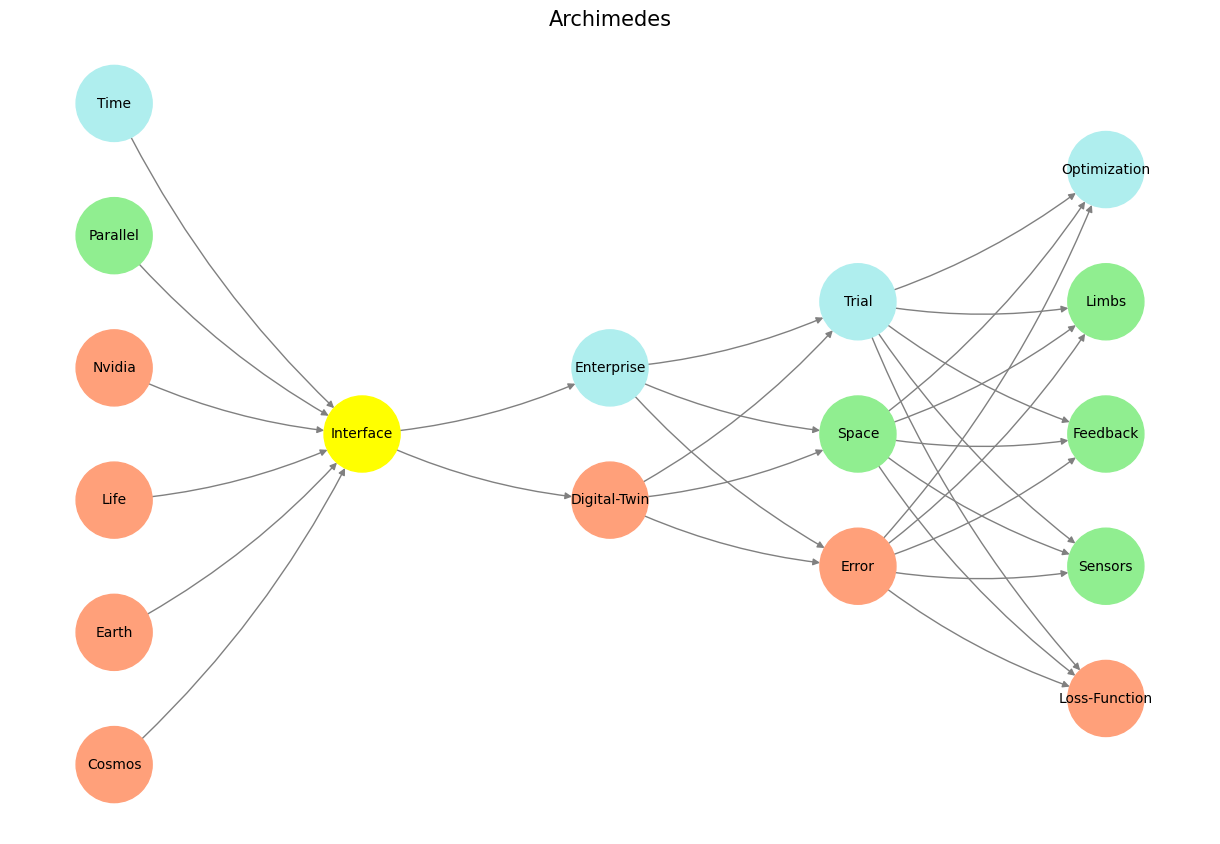

# Define the neural network structure; modified to align with "Aprés Moi, Le Déluge" (i.e. Je suis AlexNet)

def define_layers():

return {

'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],

'Yellowstone/PerceptionAI': ['Interface'],

'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],

'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],

'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'Interface':

return 'yellow'

if layer == 'Pre-Input/World' and node in [ 'Time']:

return 'paleturquoise'

if layer == 'Pre-Input/World' and node in [ 'Parallel']:

return 'lightgreen'

elif layer == 'Input/AgenticAI' and node == 'Enterprise':

return 'paleturquoise'

elif layer == 'Hidden/GenerativeAI':

if node == 'Trial':

return 'paleturquoise'

elif node == 'Space':

return 'lightgreen'

elif node == 'Error':

return 'lightsalmon'

elif layer == 'Output/PhysicalAI':

if node == 'Optimization':

return 'paleturquoise'

elif node in ['Limbs', 'Feedback', 'Sensors']:

return 'lightgreen'

elif node == 'Loss-Function':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges (without weights)

for layer_pair in [

('Pre-Input/World', 'Yellowstone/PerceptionAI'), ('Yellowstone/PerceptionAI', 'Input/AgenticAI'), ('Input/AgenticAI', 'Hidden/GenerativeAI'), ('Hidden/GenerativeAI', 'Output/PhysicalAI')

]:

source_layer, target_layer = layer_pair

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"

)

plt.title("Archimedes", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 3 Our infrastructure includes reflexive arcs that process perceived patterns in an instant. These sometimes outlive their usefulness and leave vestigial quirks like speculation, revelation, imagination, nostalgia, and more.