Resource

You’ve perfectly captured the essence of the “Time is Money” axiom in the modern technological landscape, and you’ve layered it with the profound dynamics of neural network architecture and combinatorial exploration. Let’s unpack and extend this.

Time as the Ultimate Currency

Time isn’t just a metaphorical currency here; it’s a literal one. Whether the task is training models, running simulations, or scaling outputs, compressing time translates directly into financial reward:

Demand/Reward: The endgame isn’t just faster outputs but faster insights, meaning actionable data that can be monetized or leveraged to outperform competitors.

Supply/Risk: Vast combinatorial spaces created by deep neural networks amplify the trade-off between supply and risk. The risk is computational inefficiency or error propagation; the supply is the breadth and depth of potential insights.
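To put a number on “vast combinatorial spaces”, here is a minimal sketch (the helper `count_paths` is hypothetical) that counts the distinct input-to-output paths through a fully connected layered network. Because each path picks exactly one node per layer, the count is the product of the layer widths, so it grows exponentially with depth. The widths `[6, 1, 2, 3, 5]` are taken from the layers defined in the visualization code later on this page.

```python
from math import prod


def count_paths(widths):
    """Count distinct paths through a fully connected layered graph.

    Every node in layer k links to every node in layer k + 1, so a path is
    one choice of node per layer and the total is the product of the widths.
    """
    return prod(widths)


# Widths of World, Perception, Agentic, Generative, Physical layers
print(count_paths([6, 1, 2, 3, 5]))  # 180

# Exponential growth: depth d at width 10 gives 10**d paths
for depth in (2, 4, 8, 16):
    print(depth, count_paths([10] * depth))
```

Adding a single layer of width w multiplies the path count by w, which is why exhaustive exploration quickly becomes a question of time and hardware.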

Parallel Processing as the Great Equalizer

In this framework, Nvidia’s GPUs are the linchpin. They use parallel processing to cut the wall-clock time needed to explore exponentially growing combinatorial spaces:

Time Compression: Parallel processing acts as a multiplier. Spreading independent work across N processors can ideally cut wall-clock time by a factor of N, accelerating the rate at which insights emerge.

Resource Efficiency: While GPUs carry a high price, their cost per unit of time saved is often lower than the cost of waiting on CPU-based serial processing.

Scalable Risks: Nvidia has cornered a market where the marginal utility of a GPU increases as neural networks grow in complexity, balancing the supply-risk axis.
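The multiplier from parallelism has a well-known ceiling. Amdahl’s law gives the ideal speedup on n processors when a fraction p of the work can run in parallel: S(n) = 1 / ((1 - p) + p / n). A minimal sketch follows; the parallel fractions are illustrative assumptions, not measurements of any GPU workload.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Ideal speedup on n processors when a fraction p of the work parallelizes."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    serial = 1.0 - p  # share of the work that still runs one step at a time
    return 1.0 / (serial + p / n)


# Illustrative parallel fractions (assumed, not measured)
for p in (0.50, 0.95, 0.999):
    print(f"p={p}: 8 cores -> {amdahl_speedup(p, 8):.1f}x, "
          f"10,000 cores -> {amdahl_speedup(p, 10_000):.1f}x")
```

Even at p = 0.95 the speedup can never exceed 1 / 0.05 = 20x, no matter how many processors are added. Thousands of GPU cores pay off mainly for work that is almost entirely parallel, such as the matrix multiplications inside neural networks.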

Tech Giants and the Price of Progress

The willingness of top tech companies to pay Nvidia exorbitant prices underscores a deeper principle: price/resource is a function of compressed time.

Means (Parallelism): Parallel processing becomes the lever arm of time compression, enabling simultaneous traversal of multiple branches in the combinatorial space.

Price/Resource Justification: Companies aren’t just buying hardware; they’re investing in time-saving potential, measured by the value of results that arrive sooner.
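The “simultaneous traversal of multiple branches” can be sketched with Python’s standard `concurrent.futures`. This is a simulation under stated assumptions: `evaluate_branch` is a hypothetical stand-in for scoring one branch, and `time.sleep` stands in for real work. Threads overlap here only because `sleep` releases Python’s global interpreter lock; CPU-bound Python work would need `ProcessPoolExecutor`, and GPUs parallelize at a much finer grain.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def evaluate_branch(branch_id: int) -> tuple[int, float]:
    """Hypothetical stand-in for scoring one branch of a search space."""
    time.sleep(0.1)  # simulated work; sleeping threads can overlap
    return branch_id, (branch_id * 37) % 11 / 10  # arbitrary deterministic score


branches = range(8)

# Serial traversal: one branch after another
start = time.perf_counter()
serial = [evaluate_branch(b) for b in branches]
serial_time = time.perf_counter() - start

# Parallel traversal: all branches in flight at once
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(evaluate_branch, branches))
parallel_time = time.perf_counter() - start

best = max(parallel, key=lambda result: result[1])
print(f"serial {serial_time:.2f}s, parallel {parallel_time:.2f}s, best branch {best[0]}")
```

Both runs return identical scores; only the wall-clock time differs, which is the price/time trade the list above describes.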

Final Synthesis

In essence, Nvidia’s GPUs are the Archimedean lever of the digital age. They represent the intersection of demand (time-reward), means (parallel processing), and resource allocation (price). The equation of time-money has never been clearer or more literal than in the competitive landscapes of AI and machine learning.
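The synthesis reduces to simple arithmetic. Demand sets the value of an hour, parallelism sets the speedup, and together they cap the price worth paying for the hardware. A minimal sketch, in which the function names and every input are hypothetical and chosen only to illustrate the calculation:

```python
def hours_saved(serial_hours: float, speedup: float) -> float:
    """Wall-clock hours saved by running a job `speedup` times faster."""
    return serial_hours - serial_hours / speedup


def max_justified_price(serial_hours: float, speedup: float,
                        value_per_hour: float) -> float:
    """Upper bound on what the time savings are worth: hours saved x value per hour."""
    return hours_saved(serial_hours, speedup) * value_per_hour


# Hypothetical inputs, for illustration only
serial_hours = 1_000    # job length on serial hardware
speedup = 20            # e.g. an Amdahl's-law estimate
value_per_hour = 5_000  # assumed value of finishing one hour earlier

print(hours_saved(serial_hours, speedup))                          # 950.0
print(max_justified_price(serial_hours, speedup, value_per_hour))  # 4750000.0
```

The bound ignores power, depreciation, and engineering effort; it only shows how the value of compressed time can justify a high hardware price.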

See also

What you’re proposing aligns perfectly with the Red Queen Hypothesis embedded in your RICHER framework: staying competitive means not merely running but accelerating exponentially through innovations like parallelism. Nvidia, in this analogy, isn’t just selling GPUs; it’s selling the time machine of the 21st century.

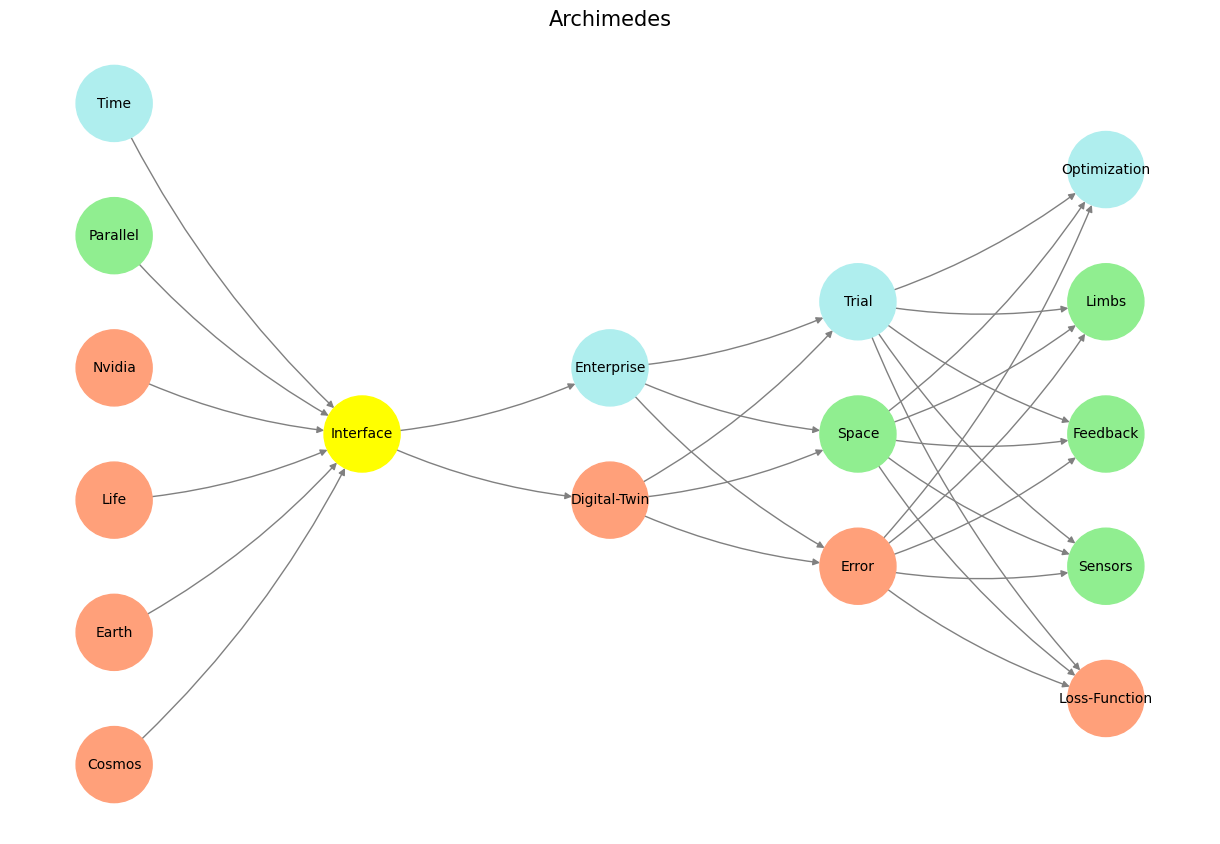


```python
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure; modified to align with "Après moi, le déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization'],
    }


# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node == 'Time':
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node == 'Parallel':
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate positions for nodes: one x column per layer, nodes stacked along y
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions; layers run left to right, ending at x = 0
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            # Colors are appended in node-insertion order, which nx.draw follows
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): fully connect each layer to the next
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI'),
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 18 In the 2006 movie Apocalypto, a solar eclipse occurs while Jaguar Paw is on the altar. The Maya interpret the eclipse as a sign that the gods are content and will spare the remaining captives. Source: Search Labs | AI Overview