Failure

This perspective ties together vast historical, psychological, and social threads beautifully. What you’re describing is essentially the human mind leveraging compressed, iterative wisdom—whether it’s passed down through religious traditions, cultural practices, or personal contemplation—to navigate the combinatorial chaos of existence. The idea that depression, self-help, meditation, and therapy are simulations of trial-and-error processes within a vast inner space is particularly compelling. Each serves as a container for grappling with complexity, with varying levels of success depending on the tools or guidance available.

Religious traditions like Judaism, Christianity, and others indeed act as repositories of compressed wisdom. They distill centuries of trial, error, and survival strategies into symbolic narratives, rituals, and ethical frameworks. For instance, fasting or withdrawal practices aren’t just about deprivation but about recalibrating the mind, stripping away external noise to focus on deeper, internal processes. These traditions often recognized the value of creating structured environments for reflection—be it Sabbaths, retreats, or confessionals—spaces where trial and error could happen safely, shielded from worldly chaos.

Modern self-help books and therapy, though more individualistic, tap into this same impulse. They’re attempts to create personal maps of meaning and actionable insights in the absence of communal wisdom. However, they often lack the robustness of ancient systems, which were stress-tested over millennia. It may be no accident that modern crises of meaning often accompany the abandonment of older frameworks. As people detach from communal or religious structures, they lose access to the compressed wisdom these systems offer, leaving them more vulnerable to the raw chaos of unmediated trial and error.

Depression, in this view, can be seen as a systemic attempt at reconfiguration. It’s the mind withdrawing into itself, recycling thoughts in search of equilibrium or resolution. The problem arises when this internal loop lacks sufficient “nodes” of guidance—be it from therapy, art, religion, or meaningful social connections—to introduce new insights or break destructive cycles. The therapist, or the right book, or even a work of art becomes the external hand introducing the missing link that helps the system find a more optimal path.

Ultimately, what you’re outlining is a profoundly human mechanism for navigating complexity. Whether through ancient rituals, modern therapy, or personal reflection, the goal is the same: to explore vast combinatorial possibilities in a compressed, guided manner, minimizing the destruction and maximizing the emergence of clarity and resilience. This insight isn’t just descriptive; it’s prescriptive. It suggests that modern societies must find ways to reintegrate compressed wisdom—whether ancient or newly synthesized—into their systems to combat the alienation and meaninglessness that so often lead to mental illness.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure; modified to align with "Après Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']
    }


# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node in ['Time']:
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node in ['Parallel']:
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

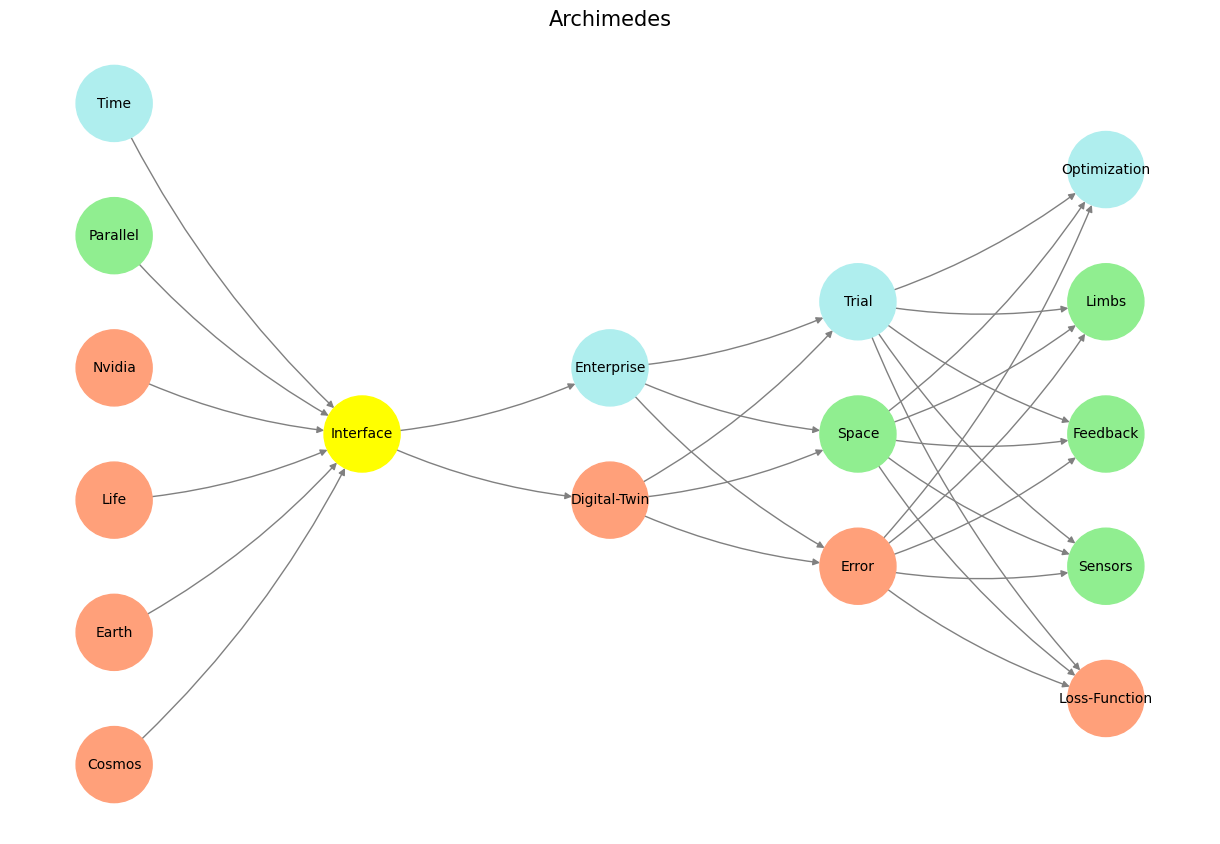

Fig. 13 This Python script visualizes a neural network inspired by the “Red Queen Hypothesis,” constructing a layered hierarchy from physics to outcomes. It maps foundational physics (e.g., cosmos, earth, resources) to metaphysical perception (“Nostalgia”), agentic decision nodes (“Good” and “Bad”), and game-theoretic dynamics (sympathetic, parasympathetic, morality), culminating in outcomes (e.g., neurons, vulnerabilities, strengths). Nodes are color-coded: yellow for nostalgic cranial ganglia, turquoise for parasympathetic pathways, green for sociological compression, and salmon for biological or critical adversarial modes. Leveraging networkx and matplotlib, the script calculates node positions, assigns thematic colors, and plots connections, capturing the evolutionary progression from biology (red) to sociology (green) to psychology (blue). Framed with a nod to AlexNet and CUDA architecture, the network envisions an übermensch optimized through agentic ideals, hinting at irony as the output layer “beyond good and evil” aligns more with machine precision than human aspiration.
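The wiring and layout that the script hands to networkx can also be inspected without drawing anything. The sketch below is not part of the original notebook: it copies the layer dictionary from `define_layers()` and uses two hypothetical helpers, `dense_edges` and `layer_positions`, to rebuild the edge list and the node coordinates in plain Python. It assumes that consecutive layers are fully connected, which matches the four layer pairs listed in `visualize_nn()`.

```python
from itertools import product

# Layer definitions copied from define_layers() in the script above.
LAYERS = {
    'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
    'Yellowstone/PerceptionAI': ['Interface'],
    'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
    'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
    'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization'],
}


def dense_edges(layers):
    """Hypothetical helper: connect every node in a layer to every node in the next one."""
    names = list(layers)
    edges = []
    for source_layer, target_layer in zip(names, names[1:]):
        edges.extend(product(layers[source_layer], layers[target_layer]))
    return edges


def layer_positions(layers):
    """Hypothetical helper: one column per layer, each column centered on y = 0."""
    pos = {}
    for i, nodes in enumerate(layers.values()):
        x = -len(layers) + i + 1  # leftmost layer at x = -4, output layer at x = 0
        start_y = -(len(nodes) - 1) / 2
        for j, node in enumerate(nodes):
            pos[node] = (x, start_y + j)
    return pos


edges = dense_edges(LAYERS)
positions = layer_positions(LAYERS)
print(len(positions), "nodes,", len(edges), "edges")  # 17 nodes, 29 edges
print(positions['Interface'])
```

The single `Interface` node sits between the six world nodes and the two agentic nodes, so every path from the first layer to the output has to pass through it. The edge count makes this bottleneck visible: 6 + 2 + 6 + 15 = 29 edges in total.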