Woo’d 🗡️❤️💰

Academic Medicine Has Lost Its Mind: Why Authorship in Research Needs GitHub

Authorship in academic medicine has become an absurd parody of itself. The sprawling bylines of research papers—sometimes 20, 30, or more names long—are no longer symbols of collaboration. They are tokens of career advancement, handed out for reasons that have little to do with the actual work behind the paper. These lists, which are ostensibly meant to credit intellectual and technical contributions, have instead devolved into a ritualistic exercise in patronage, networking, and promotion. Academic medicine is drowning in its own hypocrisy, and the authorship system has become an open joke.

It doesn’t have to be this way. In fact, it can’t stay this way if we want to preserve the integrity of science. The solution lies in Git, the de facto standard among version control systems, and GitHub, the most widely used platform for hosting Git repositories. If academic medicine is to reclaim its soul, it must abandon its bloated authorship lists and embrace a transparent, auditable system that records who did what, when, and how.

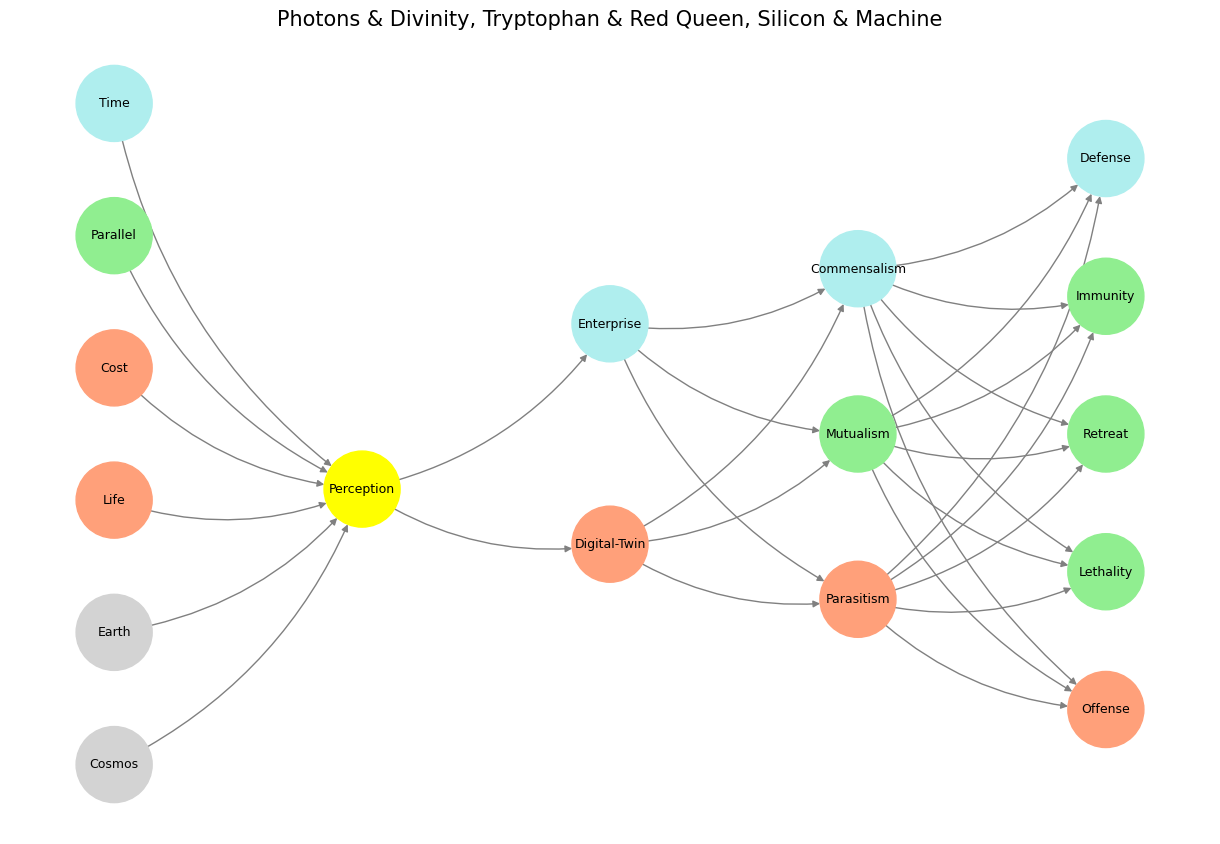

Fig. 8 What Exactly Is It About? Might it be about fixed odds, pattern recognition, leveraged agency, curtailed agency, or spoils for further play? Grants certainly are part of the spoils for further play. And perhaps bits of the other stuff.

The GitHub Solution

GitHub offers a way out. It is the leading platform for hosting version-controlled software projects, but its principles (transparency, accountability, and traceability) are exactly what academic research needs. Imagine if every major research project were housed on GitHub. Each experiment, data set, analysis, and draft could be tracked change by change as the work unfolds. Every edit, every line of code, every figure could be attributed to a specific individual.
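Git already records this kind of line-level attribution, and `git blame` exposes it. The following is a minimal sketch that assumes the manuscript is a plain-text file under version control and that its blame was exported with `git blame --line-porcelain manuscript.md`. Under that assumption, the lines can be tallied per author. The file name, the sample output, and the helper `lines_per_author` are hypothetical. The parser relies only on line-porcelain mode repeating an `author <name>` header for every line and prefixing the line's content with a tab.

```python
from collections import Counter


def lines_per_author(porcelain: str) -> Counter:
    """Count surviving lines per author from `git blame --line-porcelain` output.

    Line-porcelain mode repeats the commit header for every line, including
    a line of the form "author <name>". Headers such as "author-mail" and
    "author-time" start with "author-" and are therefore skipped, and the
    file's own content lines start with a tab, so they are skipped too.
    """
    counts = Counter()
    for line in porcelain.splitlines():
        if line.startswith("author "):
            counts[line[len("author "):]] += 1
    return counts


# Hypothetical, abbreviated porcelain output for a two-line manuscript.
sample = """\
3f2a1c0 1 1 1
author Alice Chen
author-mail <alice@example.org>
author-time 1700000000
author-tz +0000
summary Draft introduction
filename manuscript.md
\tAuthorship has become a parody of itself.
9b7e4d2 2 2 1
author Bob Singh
author-mail <bob@example.org>
author-time 1700003600
author-tz +0000
summary Add methods
filename manuscript.md
\tWe ran a mixed-effects model.
"""

print(lines_per_author(sample))
```

Blame credits whoever last changed each line, not whoever first wrote it, so it complements rather than replaces the full history.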

Git’s version history does something that the current authorship model cannot: it provides a granular, auditable record of contribution. Instead of an arbitrary list of names, we would have detailed logs showing exactly who changed what, and when. Did someone write the first draft? Run the statistical analysis? Collect the data? With Git, we wouldn’t need to guess.
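A per-person contribution summary can be drawn from the same history. The sketch below assumes the log was exported with `git log --pretty=format:@%an --numstat`. That command prints an `@`-prefixed author line for each commit, followed by tab-separated `added`, `deleted`, `path` rows; Git writes `-` in place of the counts for binary files such as figures. The `@` prefix, the sample log, and the function name `contributions` are illustrative choices, and commit and line counts are only a rough proxy for the questions above.

```python
from collections import defaultdict


def contributions(log_text: str) -> dict:
    """Summarise commits and line changes per author.

    Expects the output of: git log --pretty=format:@%an --numstat
    Author lines start with "@"; numstat rows are "added<TAB>deleted<TAB>path",
    where binary files (e.g. figures) report "-" instead of numbers.
    """
    stats = defaultdict(lambda: {"commits": 0, "added": 0, "deleted": 0, "files": set()})
    author = None
    for line in log_text.splitlines():
        if line.startswith("@"):
            author = line[1:]
            stats[author]["commits"] += 1
        elif line.strip() and author is not None:
            added, deleted, path = line.split("\t", 2)
            entry = stats[author]
            entry["files"].add(path)
            if added != "-":  # binary files carry no line counts
                entry["added"] += int(added)
                entry["deleted"] += int(deleted)
    return dict(stats)


# Hypothetical log for three commits by two people.
sample_log = """\
@Alice Chen
120\t4\tmanuscript.md
-\t-\tfigures/fig1.png

@Bob Singh
35\t10\tanalysis/model.R

@Alice Chen
8\t2\tmanuscript.md
"""

for name, s in contributions(sample_log).items():
    print(f"{name}: {s['commits']} commits, +{s['added']}/-{s['deleted']} lines, "
          f"{len(s['files'])} files")
```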

Furthermore, Git supports branching and merging, which captures the non-linear, iterative nature of scientific discovery. Collaborators could work in parallel on separate branches, propose changes through pull requests, and resolve conflicts in the open. The entire history of the project would be preserved, providing a level of accountability that the current system cannot hope to match.
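A toy model of a commit graph shows why merging preserves everyone’s work. In the sketch below, each commit records an author and its parent commits, and a merge commit simply has two parents. Walking back from the final commit through every parent, roughly the way `git log` traverses ancestry, recovers every contributor on every branch. The commit IDs, names, and branch roles are invented, and a real Git commit stores considerably more than this.

```python
from collections import deque

# Toy commit graph: commit id -> (author, list of parent ids).
# "m1" is a merge commit joining a statistics branch and a figures branch.
commits = {
    "c1": ("Alice", []),            # initial protocol draft
    "c2": ("Bob", ["c1"]),          # statistics branch: analysis script
    "c3": ("Carol", ["c1"]),        # figures branch: first figure
    "c4": ("Carol", ["c3"]),        # figures branch: revised figure
    "m1": ("Alice", ["c2", "c4"]),  # merge of both branches
}


def history(head: str) -> list:
    """Return every commit reachable from `head`, breadth-first over parents."""
    seen, order, queue = set(), [], deque([head])
    while queue:
        cid = queue.popleft()
        if cid in seen:
            continue
        seen.add(cid)
        order.append(cid)
        queue.extend(commits[cid][1])
    return order


def contributors(head: str) -> set:
    """Authors of every commit in the history of `head`."""
    return {commits[cid][0] for cid in history(head)}


print(history("m1"))
print(sorted(contributors("m1")))
```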

The Objections

Critics might argue that GitHub can be manipulated. Of course it can. No system is perfect. But GitHub raises the bar for corruption. To manipulate a Git repository after the fact, one would have to backdate timestamps, rewrite commit histories, or fabricate branches. Any such rewrite changes the cryptographic hash of every later commit, so collaborators and servers holding an earlier copy can see that the history was altered. Compared to the opaque deals currently made behind closed doors, GitHub is a fortress of integrity.
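Rewriting leaves traces because Git identifies each commit by a cryptographic hash of its contents, and those contents include the hash of its parent. The sketch below models only that chaining, using SHA-256 from Python’s standard library. It is not Git’s actual object format: Git hashes its own header-plus-content encoding and uses SHA-1 by default. The `commit_id` and `build_chain` helpers and the sample commits are hypothetical.

```python
import hashlib


def commit_id(parent: str, author: str, timestamp: int, message: str) -> str:
    """Hash a simplified commit record that includes its parent's id."""
    record = f"parent {parent}\nauthor {author} {timestamp}\n\n{message}"
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def build_chain(entries):
    """Return the ids of a linear history of (author, timestamp, message) tuples."""
    ids, parent = [], ""
    for author, timestamp, message in entries:
        parent = commit_id(parent, author, timestamp, message)
        ids.append(parent)
    return ids


original = [
    ("Alice", 1700000000, "Collect pilot data"),
    ("Bob", 1700086400, "Run regression"),
    ("Alice", 1700172800, "Write discussion"),
]

# Rewrite history: credit the first commit to someone else, backdated by a day.
tampered = [("Dean", 1699913600, "Collect pilot data")] + original[1:]

before, after = build_chain(original), build_chain(tampered)
for i, (a, b) in enumerate(zip(before, after), start=1):
    print(f"commit {i}: {a[:10]} -> {b[:10]}  changed={a != b}")
```

Only the first record was edited, yet every downstream id changes. Any collaborator holding the old ids can detect the rewrite.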

Others might worry that GitHub is too technical for academics outside the fields of computer science or bioinformatics. This is nonsense. If researchers can navigate the arcane rituals of grant applications, IRB approvals, and peer review, they can learn Git. The learning curve is a small price to pay for the clarity it offers.

A Personal Plea for Reform

I speak from experience. I have seen the dysfunction of academic authorship up close. I have witnessed the horse-trading of byline positions, the addition of names for political reasons, the exclusion of names for personal ones. I have watched as the work of a few is diluted by the tokenism of many. It is an insult to the principles of science, and it must stop.

The current system is broken. It has lost meaning, lost trust, and lost its way. GitHub is not a perfect solution, but it is a transformative one. By adopting version control, academic medicine can move from a system of opaque tokenism to one of transparent contribution. Let’s stop pretending that a 30-person authorship list makes sense. Let’s stop rewarding the politics of science over the practice of it.

It’s time for academic medicine to grow up. If we care about the integrity of research—and we should—we need to abandon the old ways and embrace the tools that can restore trust and meaning. Authorship as we know it is dead. Long live GitHub.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Placeholders (Substitute) for the neural network visualization
#1 Time (Paleturquoise)
#2 Parallel (Lightgreen)
#3 Cost (Lightsalmon)
#4 Life (Lightsalmon)
#5 Earth (Lightsalmon)
#6 Cosmos (Lightsalmon)
#7 Perception (Yellow)
#8 Enterprise (Paleturquoise)
#9 Digital-Twin (Lightsalmon)
#10 Commensalism (Paleturquoise)
#11 Mutualism (Lightgreen)
#12 Parasitism (Lightsalmon)
#13 Defense (Paleturquoise)
#14 Immunity (Lightgreen)
#15 Retreat (Lightgreen)
#16 Lethality (Lightgreen)
#17 Offense (Lightsalmon)

# World: Input/Time (The Rules ...)
# Perception: Hidden/Perception (La Grande Illusion)
# Agency: Hidden/Parallel Spaces (Je ne regrette rien)
# Generativity: Hidden/Games (.. Of the Game)
# Physicality: Output (La Fin)


# Define the neural network structure: layer name -> nodes, from input to output
def define_layers():
    return {
        'World': ['Cosmos', 'Earth', 'Life', 'Cost', 'Parallel', 'Time'],
        'Perception': ['Perception'],
        'Agency': ['Digital-Twin', 'Enterprise'],
        'Generativity': ['Parasitism', 'Mutualism', 'Commensalism'],
        'Physicality': ['Offense', 'Lethality', 'Retreat', 'Immunity', 'Defense'],
    }


# Assign colors to nodes, following the numbered legend above
def assign_colors():
    color_map = {
        'yellow': ['Perception'],
        'paleturquoise': ['Time', 'Enterprise', 'Commensalism', 'Defense'],
        'lightgreen': ['Parallel', 'Mutualism', 'Immunity', 'Retreat', 'Lethality'],
        # Earth and Cosmos are lightsalmon per the legend (#5, #6)
        'lightsalmon': [
            'Cost', 'Life', 'Earth', 'Cosmos', 'Digital-Twin',
            'Parasitism', 'Offense',
        ],
    }
    # Invert the mapping to node -> color
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Calculate evenly spaced vertical positions for one layer, centred on y = 0
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions, one column per layer
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))  # Default color fallback

    # Add edges (fully connect each layer to the next)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Photons & Divinity, Tryptophan & Red Queen, Silicon & Machine", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 9 This is an updated version of the script, with annotations tying the neural network layers, colors, and nodes to specific moments in Vita è Bella, enhancing the connection to the film’s narrative and themes.