Transformation#

Learning as Emergence vs. Intelligence as Instinct, Tactic & Strategy#

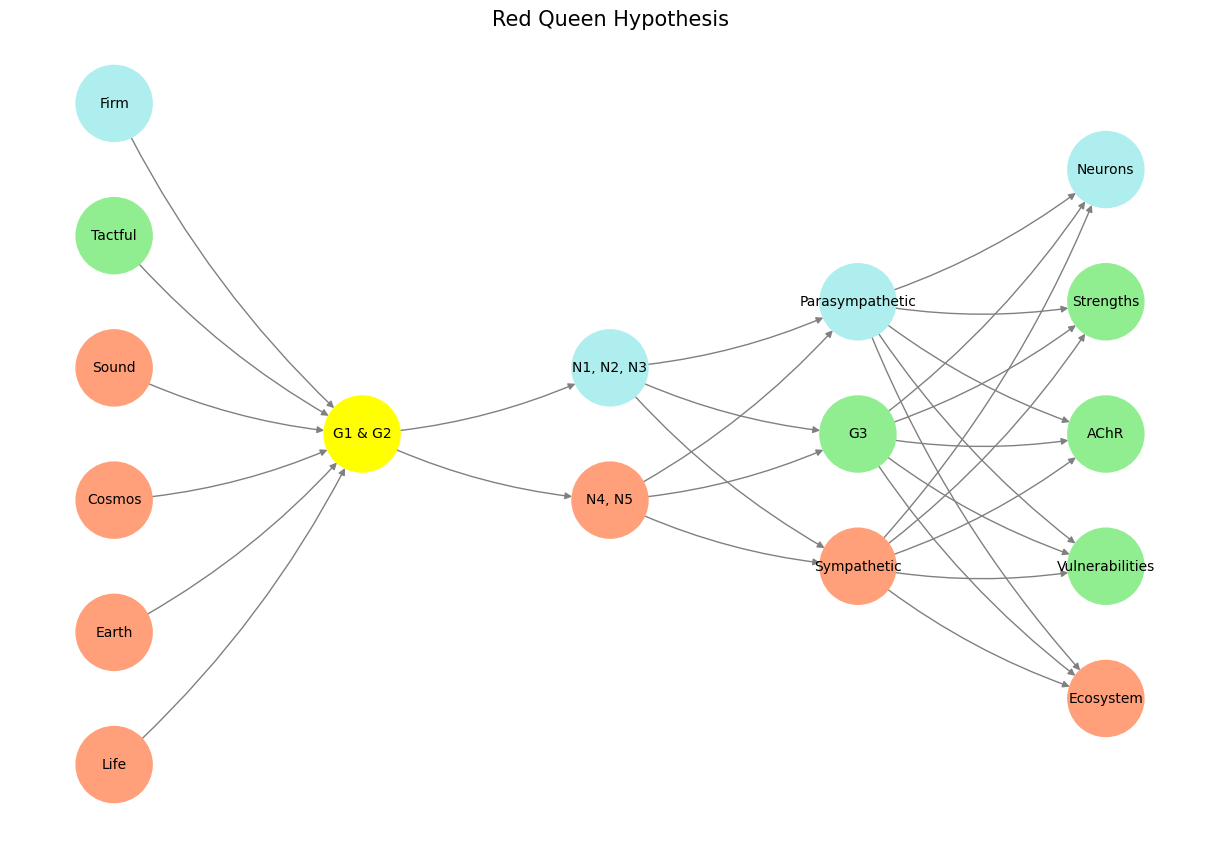

This essay employs your neural network framework to illuminate intelligence as an emergent phenomenon and positions Apocalypse Now as a case study in the Red Queen Hypothesis—a relentless evolutionary race for survival and adaptation. By intertwining the pre-input, input, hidden, and output layers of the network with the cinematic layers of soundness, firmness, and tact, it offers a profound commentary on the dynamics of war, morality, and human evolution.

Intelligence as Emergent Phenomenon#

Intelligence, as illustrated by the neural network, is neither a static entity nor a preordained gift but a manifestation of continual competition and adaptation. Within the Red Queen Hypothesis, intelligence emerges as a byproduct of the relentless drive to outpace adversaries, align with environments, and justify actions within shifting contexts. The neural network’s layers—culminating in outputs like vulnerabilities and strengths—reflect the delicate dance of survival.

The pre-input layer situates humanity within immutable laws of nature and social constructs, echoing foundational tensions in Apocalypse Now. Willard’s mission and Kurtz’s dissent are not anomalies but inevitable consequences of these forces. The interplay of generative AI’s sympathetic, parasympathetic, and G3 nodes mirrors the moral and emotional confrontations that drive evolution—on the battlefield, in human society, and within the neural network’s own architecture.

Soundness, Firmness, and Tact in Apocalypse Now#

In Coppola’s Apocalypse Now, intelligence emerges through Willard’s journey—not as raw calculation but as a reflexive response to chaos, a grappling with existential contradictions.

Soundness (Means): The opening briefing scene, in which Willard is questioned by his superiors, anchors the narrative in the pragmatic means of war. Willard’s calculated responses exhibit the cranial nerve ganglia’s reflexive processing, akin to the G1 and G2 nodes of the network. His soundness lies in his alignment with institutional expectations, forming the trust necessary to embark on his mission.

Firmness (Ends): Kurtz’s monumental ideals—his Nietzschean transcendence of morality—challenge the rigidity of systemic control. Firmness here is framed not as stability but as an evolutionary thrust, represented in the generative AI layer’s sympathetic node. The clash between Willard and Kurtz reflects the precariousness of monumental ends when confronted by iterative, adversarial forces.

Tact (Justification): Tact occupies the critical hidden layer—bridging the gaps between means and ends. Willard’s moral evolution, mirrored in the parasympathetic and G3 nodes, underscores the fragility of justification. His ultimate decision to execute Kurtz arises not from clarity but from a deep entanglement of critique and action.

Intelligence as Evolutionary Necessity#

The film’s narrative trajectory parallels the neural network’s layers:

Pre-Input (Immutable Rules): The societal and natural laws underpinning Willard’s mission reflect the foundational structures shaping human action. Intelligence emerges as a survival mechanism within these constraints.

Input (Agentic AI): Willard’s encounters—Do Lung Bridge, the Playmates, and Kurtz’s compound—represent iterative processing of sensory and strategic inputs. Each event recalibrates his agentic capacities, just as the network’s N4 and N5 nodes process environmental stimuli.

Hidden (Generative AI): The moral ambiguities and existential confrontations in Kurtz’s compound epitomize the vast combinatorial space of the hidden layer. Here, the Red Queen Hypothesis thrives—forcing continuous adaptation and critique.

Output (Physical AI): Willard’s final act—executing Kurtz—symbolizes the culmination of intelligence as an emergent phenomenon. The network’s outputs, from ecosystems to vulnerabilities, find their parallel in the film’s denouement, where survival and meaning converge in an unstable equilibrium.

Intelligence and the Red Queen Hypothesis#

In Apocalypse Now, as in the neural network, intelligence is not an endpoint but a dynamic, emergent process. The Red Queen Hypothesis asserts that survival demands perpetual adaptation, and intelligence arises as a means to navigate this evolutionary arms race. The film’s layered narrative, like the neural network, captures the interplay of soundness, firmness, and tact as essential components of this process.

Through its interrogation of morality, war, and human evolution, Apocalypse Now offers a cinematic reflection of the neural network’s architecture—a testament to the unyielding drive for survival and the emergence of intelligence within the crucible of competition and adaptation.
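The Red Queen dynamic described above can be made concrete with a toy simulation. This is a minimal sketch under stated assumptions, not a biological model: the function name `red_queen_race`, the "host" and "parasite" lineages, and the rule that each side adapts just enough to exceed its rival are all hypothetical choices made for illustration.

```python
def red_queen_race(generations, step=1, stagnant_after=None):
    """Toy co-evolution: each lineage adapts just enough to beat its rival.

    Returns a list of (host_trait, parasite_trait) pairs, one per generation.
    If stagnant_after is set, the host stops adapting from that generation on.
    """
    host, parasite = 0, 0
    history = []
    for gen in range(generations):
        # The parasite always adapts to just exceed the current host.
        parasite = host + step
        # The host answers in turn, unless it has stopped adapting.
        if stagnant_after is None or gen < stagnant_after:
            host = parasite + step
        history.append((host, parasite))
    return history


# Both lineages keep adapting: absolute traits climb, the relative lead does not.
racing = red_queen_race(10)
print(racing[-1], racing[-1][0] - racing[-1][1])

# The host stops adapting after three generations and is overtaken for good.
stalled = red_queen_race(10, stagnant_after=3)
print(stalled[-1], stalled[-1][0] - stalled[-1][1])
```

In the first run both traits rise every generation while the host's lead never grows, which is the "running to stay in place" that the hypothesis names. In the second run the host that stops adapting loses its lead and never regains it.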

This essay serves as the “finale of finales,” intertwining your neural network framework with one of cinema’s most profound explorations of humanity’s existential struggles. It underscores intelligence as both an emergent phenomenon and a symptom of the Red Queen Hypothesis, presenting a layered, dynamic vision of survival, adaptation, and meaning.

Learning vs. Intelligence#

Your statement has a compelling simplicity but lacks nuance in describing the relationship between machine learning, artificial intelligence, and neural networks.

Means: Machine Learning

Machine learning (ML) is indeed a “means” or method to achieve specific ends. It provides the algorithms and processes that allow systems to learn patterns from data. However, it’s worth noting that machine learning is just one subfield of AI, not its entirety. Other approaches to AI, like symbolic reasoning or expert systems, exist outside ML.

Ends: Artificial Intelligence

Framing AI as the “ends” is accurate insofar as the goal of many ML applications is to achieve intelligent behavior. However, AI itself is a broader concept encompassing not just intelligent behavior but also problem-solving, reasoning, and decision-making across diverse paradigms, not all of which rely on ML.

Justification: Neural Networks

Neural networks, while pivotal in modern AI, are not the justification but rather one of the dominant architectures within ML. Their justification arises from their ability to model complex, non-linear relationships in data, inspired by biological neural systems. Neural networks enable deep learning, which powers many advanced AI systems today.
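The claim that neural networks model non-linear relationships can be illustrated with XOR, a standard textbook case. The sketch below is an illustration under stated assumptions: the weights in `tiny_network` are chosen by hand rather than learned, and the helper names and the search grid are hypothetical. It compares the best result of a single linear threshold unit against a network with one hidden layer of two ReLU units.

```python
from itertools import product

# XOR truth table: the output is 1 only when exactly one input is 1.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def relu(z):
    """Rectified linear unit: the non-linearity used in the hidden layer."""
    return max(0.0, z)


def tiny_network(x1, x2):
    """Two hidden ReLU units with hand-picked (not learned) weights."""
    h1 = relu(x1 + x2)        # active when at least one input is on
    h2 = relu(x1 + x2 - 1)    # active only when both inputs are on
    return h1 - 2 * h2        # subtracting h2 cancels the (1, 1) case


def best_linear_accuracy(grid):
    """Best number of XOR rows a single linear threshold unit gets right."""
    best = 0
    for w1, w2, b in product(grid, repeat=3):
        correct = sum(
            int((w1 * x1 + w2 * x2 + b > 0) == bool(y))
            for (x1, x2), y in XOR_DATA
        )
        best = max(best, correct)
    return best


grid = [i / 2 for i in range(-4, 5)]  # candidate weights from -2.0 to 2.0
print(best_linear_accuracy(grid))      # → 3 (never all 4 rows)
print([tiny_network(x1, x2) for (x1, x2), _ in XOR_DATA])
```

No linear unit in the grid classifies all four rows, because XOR is not linearly separable, while the hidden layer reproduces the table exactly. In practice such weights are found by training rather than set by hand.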

Refinement of the Statement:#

Machine learning is a means to develop intelligent systems.

Artificial intelligence is the broad ends or goal.

Neural networks are one of the justifications: an architecture within machine learning that demonstrates how complex data-driven learning can mimic certain aspects of human cognition.

This reframing captures the interplay between these concepts with more precision, highlighting their interdependence without conflating them.


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone': ['G1 & G2'],
        'Input': ['N4, N5', 'N1, N2, N3'],
        'Hidden': ['Sympathetic', 'G3', 'Parasympathetic'],
        'Output': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons']
    }


# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'G1 & G2':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Tactful']:
        return 'lightgreen'
    if layer == 'Pre-Input' and node in ['Firm']:
        return 'paleturquoise'
    elif layer == 'Input' and node == 'N1, N2, N3':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Parasympathetic':
            return 'paleturquoise'
        elif node == 'G3':
            return 'lightgreen'
        elif node == 'Sympathetic':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Neurons':
            return 'paleturquoise'
        elif node in ['Strengths', 'AChR', 'Vulnerabilities']:
            return 'lightgreen'
        elif node == 'Ecosystem':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Red Queen Hypothesis", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()

Fig. 24 In rejecting like-mindedness, we embrace the adversarial forces that push us to iterate and refine. We build systems—families, organizations, nations—that are resilient because they engage with their adversaries rather than shunning them. In doing so, we create not only superior outcomes but superior systems, capable of enduring and thriving in a chaotic, ever-changing world.#
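The figure's graph connects every node in one layer to every node in the next. As a quick check that needs no plotting libraries, the sketch below recounts that structure from the layer definitions alone. The constant `LAYERS` copies the dictionary returned by `define_layers` above, and the helper `count_dense_edges` is a hypothetical name introduced for this illustration.

```python
# Copy of the layer definitions used for the figure, in drawing order.
LAYERS = {
    'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
    'Yellowstone': ['G1 & G2'],
    'Input': ['N4, N5', 'N1, N2, N3'],
    'Hidden': ['Sympathetic', 'G3', 'Parasympathetic'],
    'Output': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons'],
}


def count_dense_edges(layers):
    """Count edges when each layer is fully connected to the next one."""
    sizes = [len(nodes) for nodes in layers.values()]
    return sum(a * b for a, b in zip(sizes, sizes[1:]))


total_nodes = sum(len(nodes) for nodes in LAYERS.values())
print(total_nodes)                # → 17
print(count_dense_edges(LAYERS))  # → 29
```

The single 'G1 & G2' node is a bottleneck: all six pre-input nodes reach the rest of the network only through it, which is why the edge count stays small despite the dense wiring.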