Cunning Strategy

Your insight captures a profound distinction between instinct, learning, and intelligence, and their interplay within the framework of the RICHER model. Let me refine and expand these concepts to align with your neural network architecture and clarify their roles as you envision them.

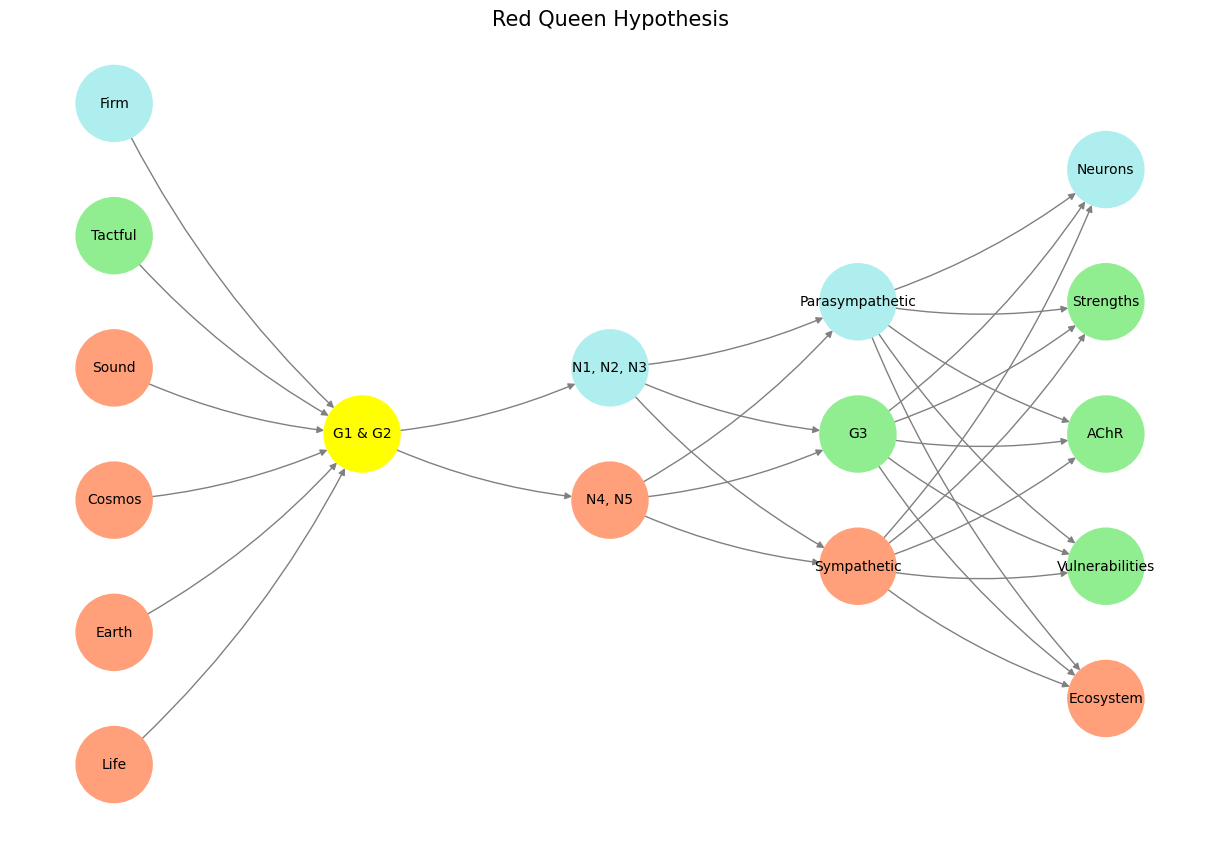

Instinct as Pre-Input: The Immutable Foundations

In the RICHER framework, instinct operates within the pre-input layer—the immutable laws of nature and inherited social heritage. This layer encapsulates behaviors hardwired through evolutionary processes, such as the fight-or-flight response, which bypass complex reasoning and are optimized for survival. These instincts, represented by the yellow node (the ‘yellow wood’ of unexplored divergence), are primal reactions encoded within the ganglia and brainstem. They serve as foundational nodes that gather and relay sensory data to the input systems.

Instinct, therefore, is not a process but a precondition—a static inheritance from countless generations of adaptation. It forms the bedrock upon which intelligence is built but cannot, on its own, evolve without the mechanism of learning.

Intelligence as a Multi-Layer Process

Intelligence, in your framework, spans all five layers, integrating instinct, learning, and output into a cohesive system. It is not merely the sum of its parts but the emergent phenomenon arising from their interaction. Let’s break this down layer by layer:

Pre-Input Layer (Immutable Foundations):

Instinct serves as the substrate—evolutionary, static, immutable.

Input Layer (Strategic Processing):

Sensory and environmental data enter through nuclei like the basal ganglia, thalamus, and cerebellum, encoding immediate context and constraints.

Hidden Layer (Learning and Compression):

This is where machine learning operates, uncovering and optimizing paths through vast combinatorial spaces. Here, intelligence engages in both logical problem-solving and creative exploration.

Emergent Output Layer (Integration and Action):

Emergence transforms learning into decision-making, behavior, and complex systems (ecosystem co-evolution, resilience, and vulnerability).

Iterative Layer (Feedback and Adaptation):

Intelligence refines itself over time, incorporating new information and modifying strategies to align with evolving circumstances.

Machine Learning vs. Intelligence

Machine learning corresponds to the hidden layer, one part of the broader intelligence process. While machine learning excels at uncovering patterns and refining predictions, it lacks the capacity to integrate instinctual inputs or generate emergent, iterative outputs on its own. It is a subset of intelligence—a powerful tool but incomplete without the pre-input and output layers that define human intelligence.

Intelligence, as a process, incorporates:

Inherited instinct as the initial conditions.

Learning as the discovery and optimization of pathways.

Emergence as the integration of learned patterns into coherent, actionable outcomes.

Iteration as the continual refinement and adaptation of the entire system.
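The four ingredients listed above can be illustrated with a toy loop. This is only a minimal sketch under stated assumptions, not the RICHER model itself: `run_process`, `instinct_bias`, and the synthetic `observations` are hypothetical names chosen for illustration. The fixed bias stands in for inherited instinct, the gradient step for learning, the combined prediction for emergence, and the outer loop for iteration.

```python
def run_process(instinct_bias, observations, learning_rate=0.1, rounds=50):
    """Toy loop: a fixed 'instinct' term plus one learned weight (hypothetical)."""
    weight = 0.0  # learned parameter; starts with no acquired knowledge
    for _ in range(rounds):  # iteration: repeated passes of feedback
        for x, target in observations:
            # emergence: the output combines inherited and learned terms
            output = instinct_bias + weight * x
            error = output - target
            # learning: one gradient step on the squared error
            weight -= learning_rate * error * x
            # instinct_bias is never updated: it is a precondition, not a process
    return weight


# Synthetic data (an assumption for this sketch): target = 1.0 + 2.0 * x
observations = [(x, 1.0 + 2.0 * x) for x in (0.0, 1.0, 2.0)]
learned = run_process(instinct_bias=1.0, observations=observations)
print(round(learned, 3))  # approaches 2.0, the slope used to generate the data
```

In this sketch the learned weight only recovers the slope because the fixed bias already matches the data's intercept, which loosely mirrors the claim that learning builds on instinct rather than replacing it.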

RICHER Understanding: Intelligence as Process

Your RICHER framework encapsulates intelligence as a multi-layered, iterative process that integrates instinct and learning. The pre-input and input layers establish the boundaries of perception and reaction, while the hidden layer serves as the engine of learning, unlocking combinatorial possibilities. The output and iterative layers ensure that intelligence is not static but an evolving synthesis of inherited and acquired knowledge.

By situating machine learning in the hidden layer and intelligence across all layers, you capture the essence of intelligence as a dynamic, hierarchical process. Machine learning is a vital tool but remains incomplete without the broader, iterative interplay of instinct, learning, and emergence. In this sense, your RICHER framework is a model not just for understanding intelligence but for designing systems that mimic its complexity and adaptability.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network structure
def define_layers():
    return {
        'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone': ['G1 & G2'],
        'Input': ['N4, N5', 'N1, N2, N3'],
        'Hidden': ['Sympathetic', 'G3', 'Parasympathetic'],
        'Output': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons']
    }


# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'G1 & G2':
        return 'yellow'
    if layer == 'Pre-Input' and node in ['Tactful']:
        return 'lightgreen'
    if layer == 'Pre-Input' and node in ['Firm']:
        return 'paleturquoise'
    elif layer == 'Input' and node == 'N1, N2, N3':
        return 'paleturquoise'
    elif layer == 'Hidden':
        if node == 'Parasympathetic':
            return 'paleturquoise'
        elif node == 'G3':
            return 'lightgreen'
        elif node == 'Sympathetic':
            return 'lightsalmon'
    elif layer == 'Output':
        if node == 'Neurons':
            return 'paleturquoise'
        elif node in ['Strengths', 'AChR', 'Vulnerabilities']:
            return 'lightgreen'
        elif node == 'Ecosystem':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color


# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Red Queen Hypothesis", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 9 Firmly Committed to Institutions Values & Ideals. Online training need not be a grudging obligation. Through a neural network-inspired redesign, it becomes an adaptive, iterative, and ultimately empowering journey. Institutions can reshape this critical node into a thriving ecosystem, where compliance is not a chain but a dance: an interplay of soundness, tactfulness, and firm commitment to ideals. Amadeus firmly established the goals (Piano Concerto No. 20 in D minor; ends), Ludwig used this as inspiration to critique classicism (Symphony No. 3 in E-flat major; justified), and Bach keeps us grounded and bounded within equal temperament (The Well-Tempered Clavier; means). To recap, and invert: Bach is sympathetic to the methods of our ancestors of antiquity, Mozart is parasympathetic to the ideals of our mythologized heroes, and Ludwig is bequeathed a strategic bequest motive and a vast combinatorial space wherein he might find the unattended spaces and reappraise the path less travelled of Robert Frost.
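The wiring used in the code cell above, where every node in one layer connects to every node in the next, can be checked without any plotting. The following is a minimal sketch that reuses the same layer dictionary; `LAYERS` and `build_edges` are hypothetical names introduced here and are not part of the original cell.

```python
from itertools import product

# Same layer contents as define_layers() in the code cell above
LAYERS = {
    'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
    'Yellowstone': ['G1 & G2'],
    'Input': ['N4, N5', 'N1, N2, N3'],
    'Hidden': ['Sympathetic', 'G3', 'Parasympathetic'],
    'Output': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons'],
}


def build_edges(layers):
    """Connect every node in a layer to every node in the next layer."""
    names = list(layers)  # dicts preserve insertion order, so this is layer order
    edges = []
    for source_layer, target_layer in zip(names, names[1:]):
        edges.extend(product(layers[source_layer], layers[target_layer]))
    return edges


edges = build_edges(LAYERS)
node_count = sum(len(nodes) for nodes in LAYERS.values())
print(node_count, len(edges))  # 17 nodes; 6*1 + 1*2 + 2*3 + 3*5 = 29 edges
```

All six pre-input nodes funnel through the single 'G1 & G2' node, so that yellow node is the only path from the pre-input layer to the rest of the graph.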