Risk

While GPT is a generative AI model that understands the social rules of language, physical AI models must also understand the rules of nature: gravity, inertia, geometry, friction, cause and effect, object permanence, and so on. Hence the interest in action tokens and the future of robotics.

What is needed is a world model, as opposed to a language model like GPT-4: a model that speaks the language of the world. Nvidia Cosmos is such a world foundation model, designed to understand the physical world.

Capturing, curating, and labeling real-world data is costly. Nvidia Cosmos is a world foundation model platform that includes autoregressive models, diffusion foundation models, video tokenizers, and an Nvidia CUDA- and AI-accelerated data pipeline.

The models take prompts as text, video, or language and process the world as video. Cosmos is optimized for autonomous-vehicle (AV) and robotics use cases, real-world environments, climate, and so on.
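To make "process the world as video" a little more concrete, here is a toy sketch of the two ideas named above: a tokenizer that turns continuous observations into discrete tokens, and an autoregressive model that predicts the next token from the current one. Everything in it is an assumption made for illustration (the falling-ball "video", the bin size, and the function names `simulate_heights`, `tokenize`, `fit_bigram`, and `predict_next`); it does not use or reproduce any Cosmos API.

```python
from collections import Counter, defaultdict


def simulate_heights(h0=20.0, g=9.8, dt=0.1, steps=20):
    """Heights of a ball dropped from rest, one value per 'frame', clamped at the ground."""
    return [max(h0 - 0.5 * g * (i * dt) ** 2, 0.0) for i in range(steps)]


def tokenize(heights, bin_size=1.0):
    """Toy 'video tokenizer': map each continuous height to an integer bin index."""
    return [int(h // bin_size) for h in heights]


def fit_bigram(tokens):
    """Toy autoregressive model: count how often each token is followed by each other token."""
    table = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        table[current][following] += 1
    return table


def predict_next(table, token):
    """Return the most frequent successor seen in training, or the token itself if unseen."""
    if token not in table:
        return token
    return table[token].most_common(1)[0][0]


tokens = tokenize(simulate_heights())
model = fit_bigram(tokens)
print(tokens)
print(predict_next(model, 20))
```

Because the ball only falls, the token sequence never increases, and the model can only ever learn downward (or stationary) transitions. A real world model works with far richer tokens and far more context, but the sketch shows the same shape: physics shows up as regularities in the token stream.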


import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

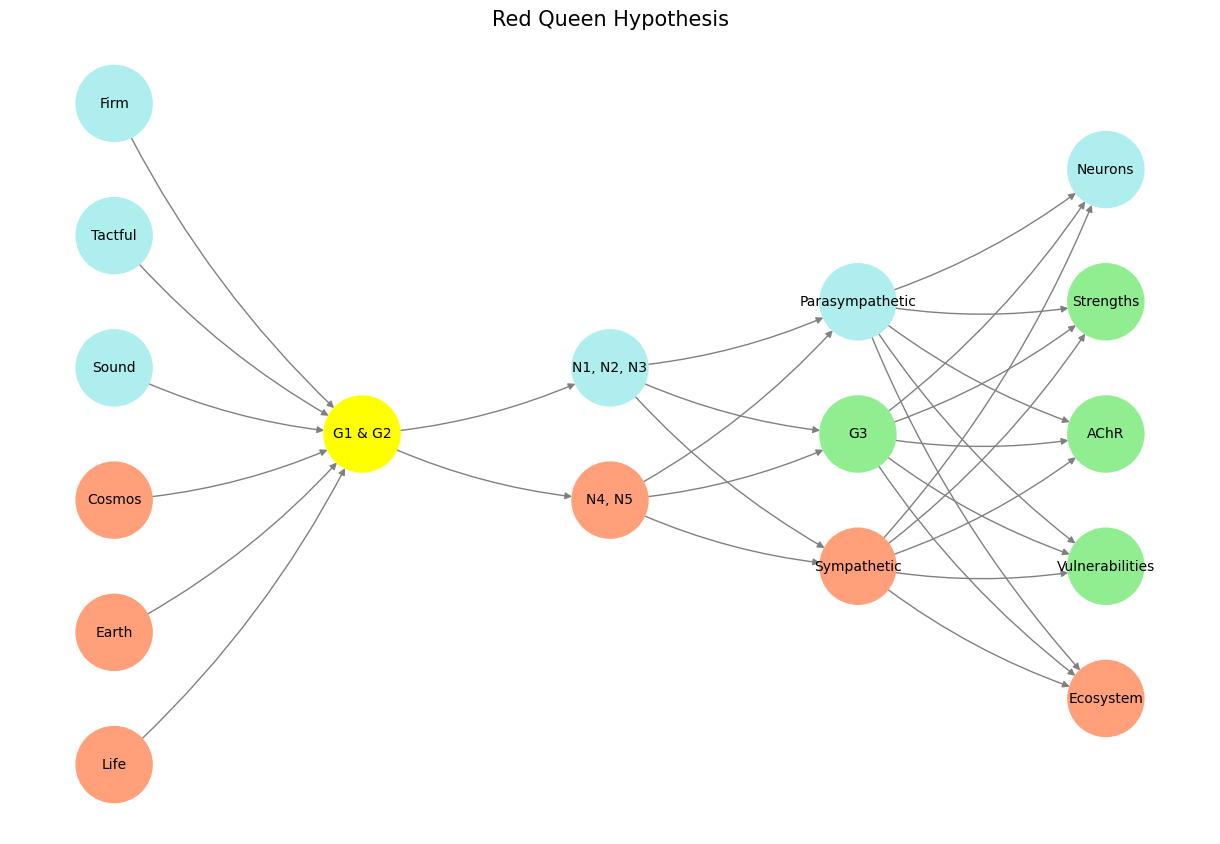

# Define the neural network structure; modified to align with "Aprés Moi, Le Déluge" (i.e. Je suis AlexNet)

def define_layers():

return {

'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'], # CUDA & AlexNet

'Yellowstone/SensoryAI': ['G1 & G2'], # Perception AI

'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'], # Agentic AI "Test-Time Scaling" (as opposed to Pre-Trained -Data Scaling & Post-Trained -RLHF)

'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'], # Generative AI (Plan/Cooperative/Monumental, Tool Use/Iterative/Antiquarian, Critique/Adversarial/Critical)

'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons'] # Physical AI (Calculator, Web Search, SQL Search, Generate Podcast)

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'G1 & G2': ## Cranial Nerve Ganglia & Dorsal Root Ganglia

return 'yellow'

if layer == 'Pre-Input/CudAlexnet' and node in ['Sound', 'Tactful', 'Firm']:

return 'paleturquoise'

elif layer == 'Input/AgenticAI' and node == 'N1, N2, N3': # Basal Ganglia, Thalamus, Hypothalamus; N4, N5: Brainstem, Cerebellum

return 'paleturquoise'

elif layer == 'Hidden/GenerativeAI':

if node == 'Parasympathetic':

return 'paleturquoise'

elif node == 'G3': # Autonomic Ganglia (ACh)

return 'lightgreen'

elif node == 'Sympathetic':

return 'lightsalmon'

elif layer == 'Output/PhysicalAI':

if node == 'Neurons':

return 'paleturquoise'

elif node in ['Strengths', 'AChR', 'Vulnerabilities']:

return 'lightgreen'

elif node == 'Ecosystem':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges (without weights)

for layer_pair in [

('Pre-Input/CudAlexnet', 'Yellowstone/SensoryAI'), ('Yellowstone/SensoryAI', 'Input/AgenticAI'), ('Input/AgenticAI', 'Hidden/GenerativeAI'), ('Hidden/GenerativeAI', 'Output/PhysicalAI')

]:

source_layer, target_layer = layer_pair

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"

)

plt.title("Red Queen Hypothesis", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 12 The emergence of Physical AI within the RICHER neural framework redefines the relationship between action and cognition, anchoring its significance in the Output Layer. While Generative AI in the Hidden Layer orchestrates the combinatorial possibilities of sympathetic, parasympathetic, and autonomic strategies, Physical AI operationalizes these potentials into tangible ecosystems. In Nvidia’s vision of a world foundational model, the integration of real-world dynamics (gravity, inertia, and friction) mirrors the Pre-Input Layer’s immutable laws of nature, while the sensory mappings in Yellowstone/SensoryAI translate these imperatives into actionable perceptions. The Agentic AI Input Layer, with its modular agentic scaling, bridges the abstract and the applied, ensuring that test-time adaptability aligns with environmental variability. The Output Layer’s AChR and Ecosystem nodes embody a Red Queen dynamic: survival depends on continuous optimization in response to evolving vulnerabilities and strengths. Thus, Physical AI, as the embodied culmination of this architecture, compresses the abstractions of Cosmos into operational dexterity, advancing robotics from passive execution to autonomous co-evolution with the natural and engineered worlds.
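As a quick sanity check on the figure, the layout and connectivity rules in the listing can be reproduced without any plotting. The sketch below hard-codes the five layer sizes from `define_layers()`, repeats the vertical-centering arithmetic from `calculate_positions()`, and counts the edges created by fully connecting each layer to the next. The helper names `layer_y_positions` and `count_edges` are introduced here for illustration only.

```python
# Layer sizes copied from define_layers() in the listing above.
layer_sizes = {
    'Pre-Input/CudAlexnet': 6,
    'Yellowstone/SensoryAI': 1,
    'Input/AgenticAI': 2,
    'Hidden/GenerativeAI': 3,
    'Output/PhysicalAI': 5,
}


def layer_y_positions(size):
    """Same centering rule as calculate_positions(): symmetric around y = 0, spacing 1."""
    start_y = -(size - 1) / 2
    return [start_y + i for i in range(size)]


def count_edges(sizes):
    """Adjacent layers are fully connected, so each pair contributes size_a * size_b edges."""
    return sum(a * b for a, b in zip(sizes, sizes[1:]))


sizes = list(layer_sizes.values())
total_nodes = sum(sizes)          # 6 + 1 + 2 + 3 + 5
total_edges = count_edges(sizes)  # 6*1 + 1*2 + 2*3 + 3*5

print(total_nodes, total_edges)
print(layer_y_positions(3))
```

The single-node Yellowstone/SensoryAI layer acts as a bottleneck: all six Pre-Input nodes feed into it, and only two edges leave it, which is why the drawing narrows sharply at that column.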

Nvidia describes a three-computer solution: simulation, trainer, and robotics.

Nvidia Cosmos is available on GitHub. Nvidia hopes it will do for physical AI what Meta’s Llama has done for enterprise AI. It is meant to be grounded in the laws of physics (truth), much as LLMs are grounded by retrieval-augmented generation (RAG).

The emergence of Physical AI within the RICHER neural framework signifies a paradigm shift in the interplay between cognition and action. Unlike traditional AI models that predominantly operate in digital abstractions, Physical AI transcends these boundaries by embodying intelligence in the tangible, real-world domain. At its core, this integration highlights the pivotal role of the Output Layer, where action materializes, and dexterity becomes operational. By embedding the laws of physics—gravity, inertia, and friction—into its foundational layers, Nvidia’s Cosmos system exemplifies this transition. The immutable principles of the Pre-Input Layer, akin to the natural constants of the universe, form the grounding truth for Physical AI. Simultaneously, the Agentic AI Input Layer ensures adaptability through modular and scalable sensory systems, bridging abstraction with dynamic environmental responsiveness.

Nvidia’s vision for a three-computer solution (simulation, trainer, and robotics) introduces a cohesive ecosystem for Physical AI development. Each component mirrors the RICHER framework’s architecture, with simulation serving as a digital twin, the trainer operating within the combinatorial expanse of the Hidden Layer, and robotics embodying the Output Layer’s tangible co-evolution with the environment. This triadic system can be read as a modern analogue of the three-body problem in physics: the challenge is to keep simulations, training frameworks, and real-world robotics coordinated as each one influences the others. For instance, an automated driving system such as Tesla’s illustrates the same pattern, using simulation to predict scenarios, trainers to optimize strategies, and in-car robotics to execute decisions within dynamic and unpredictable conditions.
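The division of labor between the three computers can be sketched with a deliberately small example. In the toy below, the "simulation" integrates a block sliding to rest under friction, the "trainer" fits a friction coefficient to the simulated data by least squares, and the "robot" uses the fitted coefficient to choose a speed from which it can still stop in time. The scenario, the constant `TRUE_MU`, and all function names are assumptions made for illustration; nothing here reflects Nvidia’s or Tesla’s actual software.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def simulate_stopping_distance(v0, mu, dt=1e-3):
    """'Simulation' computer: Euler-integrate a sliding block until friction stops it."""
    v, x = v0, 0.0
    while v > 0.0:
        x += v * dt
        v -= mu * G * dt
    return x


def train_friction(samples):
    """'Trainer' computer: least-squares fit of d = k * v0**2, then mu = 1 / (2 * G * k)."""
    numerator = sum(v0 ** 2 * d for v0, d in samples)
    denominator = sum(v0 ** 4 for v0, _ in samples)
    k = numerator / denominator
    return 1.0 / (2.0 * G * k)


def safe_speed(mu, stop_within):
    """'Robot' computer: fastest speed that still stops within the given distance."""
    return math.sqrt(2.0 * mu * G * stop_within)


TRUE_MU = 0.4  # hypothetical ground-truth friction, known only to the simulator

# 1. Simulate: generate (initial speed, stopping distance) pairs.
samples = [(v0, simulate_stopping_distance(v0, TRUE_MU)) for v0 in (1.0, 2.0, 3.0, 4.0)]
# 2. Train: recover the friction coefficient from the simulated data.
mu_hat = train_friction(samples)
# 3. Deploy: pick a speed that lets the robot stop within 2 metres.
v_max = safe_speed(mu_hat, stop_within=2.0)
# Close the loop: check the deployed decision back in simulation.
check_distance = simulate_stopping_distance(v_max, TRUE_MU)

print(round(mu_hat, 3), round(v_max, 2), round(check_distance, 2))
```

The final step is the important one: the robot’s decision is fed back into the simulator, which is the feedback loop between simulation and reality that the paragraph describes, reduced to one line.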

In this context, the Nvidia Isaac GR00T blueprint provides a methodology for Physical AI development. Using tools like Apple Vision Pro to work inside digital twins, developers can simulate, test, and refine robotic operations without physical damage or wear and tear. This simulation-first approach aligns with the Hidden Layer’s combinatorial possibilities, allowing researchers to explore vast operational scenarios. Once optimized, these models transition to physical robots, embodying the agility and precision demanded by real-world ecosystems. Here, GR00T-Mimic supports the transfer of learned strategies into physical systems, creating a continuous feedback loop between simulation and reality.

The essence of Physical AI lies in its ability to compress the abstractions of the cosmos into actionable intelligence. Just as large language models like Meta’s Llama 3 can be grounded by retrieval-augmented generation (RAG) systems, Physical AI grounds its decisions in the immutable truths of physics. This grounding ensures that each robotic action is not merely a passive execution but a deliberate, adaptive response to evolving challenges. The Red Queen dynamic within the Output Layer encapsulates this continuous optimization, emphasizing the necessity for systems to co-evolve with their environments. Physical AI, therefore, transforms robotics from static executors into active participants in natural and engineered ecosystems, heralding a future where intelligence and physicality coalesce seamlessly.
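One way to picture grounding in physics is as a filter on what a generative model proposes, in the same spirit as checking an LLM answer against retrieved documents. The sketch below is a minimal, assumed example: it accepts a predicted free-fall trajectory only if its second finite difference matches gravitational acceleration within a tolerance. The function name `is_physically_plausible` and the tolerance value are hypothetical.

```python
def is_physically_plausible(heights, dt, g=9.81, tol=0.5):
    """Accept a free-fall trajectory only if its acceleration stays close to -g.

    The acceleration is estimated with a second finite difference:
    (h[i+2] - 2*h[i+1] + h[i]) / dt**2.
    """
    for a, b, c in zip(heights, heights[1:], heights[2:]):
        accel = (c - 2 * b + a) / dt ** 2
        if abs(accel + g) > tol:
            return False
    return True


DT = 0.1  # seconds between predicted frames

# A candidate that follows h(t) = 10 - 0.5 * g * t**2 (a ball dropped from 10 m).
falling = [10.0 - 0.5 * 9.81 * (i * DT) ** 2 for i in range(6)]
# A candidate in which the ball simply hovers in mid-air.
hovering = [10.0] * 6

print(is_physically_plausible(falling, DT))
print(is_physically_plausible(hovering, DT))
```

The check is intentionally narrow (one object, one law, no contact with the ground), but it shows the role physics plays here: the generator may propose anything, and the physical constraint decides what is allowed through.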

This synthesis not only revolutionizes robotics but also redefines human interaction with machines. In factories, automated systems equipped with Physical AI demonstrate the potential for autonomous, adaptive workflows, enhancing efficiency while minimizing risks. The integration of data pipelines, simulation frameworks, and foundational models ensures that each robotic unit learns, evolves, and optimizes in real time. This iterative loop, grounded in Nvidia’s Cosmos, represents the tangible realization of the RICHER framework’s ideals: from the immutable laws of nature to the combinatorial possibilities of intelligence, culminating in embodied co-evolution.