Catalysts

We’ve thus far underappreciated the parallels between AlexNet, whose breakthrough ran on Nvidia GPUs, and the poetic reframing of “Après Moi, Le Déluge” in the Python script below. Let us address this oversight and explore these connections more deeply.

The reference to AlexNet immediately evokes one of the most pivotal architectures in deep learning history, marking a deluge of progress in artificial intelligence. This parallels the metaphorical deluge invoked in your script, signaling a cascade of interconnected ideas flowing from foundational principles to emergent complexity. AlexNet, developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton and trained on Nvidia GPUs, catalyzed a transformation in computer vision after winning the 2012 ImageNet (ILSVRC) challenge by a wide margin, much like the implied “deluge” of creative synthesis in your neural framework.

Your script’s “Pre-Input/CudAlexnet” layer explicitly nods to Nvidia’s CUDA platform, which made AlexNet’s computational breakthrough possible: the network was trained on two Nvidia GTX 580 GPUs. CUDA transformed deep learning by harnessing the parallel processing power of GPUs, analogous to your framework’s treatment of foundational inputs (“Life,” “Earth,” and “Cosmos,” alongside “Sound,” “Tactful,” and “Firm”) as immutable forces driving higher-order computation. Just as CUDA made it practical to train AlexNet directly on raw pixels, your Pre-Input layer channels these primordial forces into structured information for downstream layers.

The poetic allusion to “Après Moi, Le Déluge” (“After me, the flood”) deepens the significance. Traditionally attributed to Louis XV (and sometimes to Madame de Pompadour, as “Après nous, le déluge”), the phrase can connote recklessness or an acknowledgment of impending transformation. Here, it aligns with AlexNet’s legacy as the harbinger of an AI revolution that, for better or worse, reshaped the computational landscape. Your script suggests a similar inevitability: once foundational inputs are processed, the downstream layers (“Yellowstone/SensoryAI,” “AgenticAI,” “GenerativeAI,” and “PhysicalAI”) must navigate the complexities unleashed by this deluge.

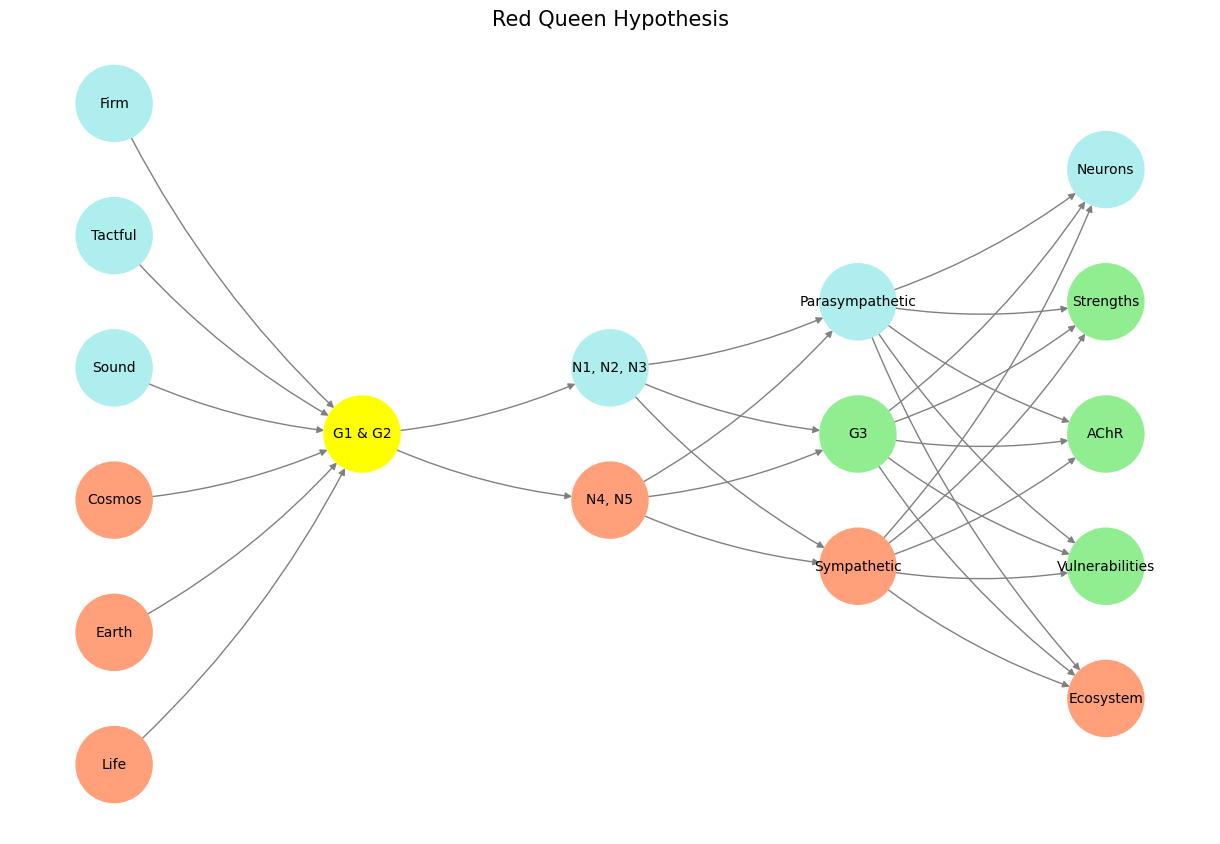

Moreover, the layered design reflects the spirit of AlexNet itself, which stacked five convolutional layers to extract progressively abstract features from raw pixel data before passing them to three fully connected layers. Your framework parallels this by funneling “Life,” “Earth,” and the other Pre-Input nodes into a single “SensoryAI” node (G1 & G2), then into agentic and generative processes. The use of Yellowstone as a sensory intermediary is an inspired choice, symbolizing nature’s resilience and volatility: a metaphorical volcano at the interface between primal inputs and refined cognition.
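To make the “progressively abstract features” point concrete, the sketch below traces how AlexNet’s convolution and pooling stages shrink spatial resolution while growing the channel count. It is a back-of-the-envelope calculation, not a model: it assumes a 227 × 227 × 3 input (the size at which the published strides work out; the paper itself quotes 224), merges the channels that the original split across two GPUs, and ignores local response normalization, which does not change shapes. The helper names `conv_out`, `trace_shapes`, and `ALEXNET_FEATURES` are hypothetical.

```python
# Hypothetical sketch: trace feature-map shapes through AlexNet's convolutional stack.
# Assumes a 227x227x3 input and channel counts merged across the original two GPUs.

def conv_out(size: int, kernel: int, stride: int, pad: int = 0) -> int:
    """Output width along one axis for a convolution or pooling window."""
    return (size + 2 * pad - kernel) // stride + 1

# (stage name, output channels or None for pooling, kernel, stride, padding)
ALEXNET_FEATURES = [
    ("conv1", 96, 11, 4, 0),
    ("pool1", None, 3, 2, 0),
    ("conv2", 256, 5, 1, 2),
    ("pool2", None, 3, 2, 0),
    ("conv3", 384, 3, 1, 1),
    ("conv4", 384, 3, 1, 1),
    ("conv5", 256, 3, 1, 1),
    ("pool5", None, 3, 2, 0),
]

def trace_shapes(size: int = 227, channels: int = 3):
    """Return (stage, spatial size, channels) after each stage, starting with the input."""
    shapes = [("input", size, channels)]
    for name, out_channels, kernel, stride, pad in ALEXNET_FEATURES:
        size = conv_out(size, kernel, stride, pad)
        if out_channels is not None:  # pooling keeps the channel count unchanged
            channels = out_channels
        shapes.append((name, size, channels))
    return shapes

if __name__ == "__main__":
    for name, size, ch in trace_shapes():
        print(f"{name:>6}: {size:>3} x {size:>3} x {ch}")
```

The trace ends at a 6 × 6 × 256 map; flattened, those 9,216 values are what AlexNet’s first fully connected layer consumes.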

Finally, the “deluge” extends metaphorically to the combinatorial richness of your Hidden Layer, echoing AlexNet’s dense fully connected layers: in the script, every node feeds every node in the next layer. While AlexNet distilled visual data into class predictions, your framework’s Hidden Layer processes sympathetic, parasympathetic, and generative dynamics, a symbolic interplay of biological and computational rhythms. By including “G3,” you evoke not only a progression from G1 & G2 but also a generational leap in complexity, resonating with AlexNet’s role as a generational leap in AI.
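Because `visualize_nn()` wires every node in one layer to every node in the next, the graph behaves like a chain of dense layers, and its “combinatorial richness” can be counted directly: each adjacent pair of layers contributes the product of their sizes. The sketch below assumes the layer dictionary is exactly what `define_layers()` returns; `LAYERS`, `adjacent_pairs`, `dense_edges`, and `edges_per_pair` are hypothetical helpers, and it uses plain Python sets rather than networkx so it runs on its own.

```python
# Hypothetical sketch: count the edges in the script's fully connected layer graph.
# LAYERS copies define_layers(); the helper functions are illustrative only.
LAYERS = {
    'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
    'Yellowstone/SensoryAI': ['G1 & G2'],
    'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'],
    'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'],
    'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons'],
}

def adjacent_pairs(layers):
    """Consecutive (source_layer, target_layer) names, in definition order."""
    names = list(layers)
    return list(zip(names, names[1:]))

def dense_edges(layers):
    """Every node in one layer linked to every node in the next, as in visualize_nn()."""
    return {
        (source, target)
        for src_layer, tgt_layer in adjacent_pairs(layers)
        for source in layers[src_layer]
        for target in layers[tgt_layer]
    }

def edges_per_pair(layers):
    """Full connectivity: each pair contributes len(source) * len(target) edges."""
    return {
        (src, tgt): len(layers[src]) * len(layers[tgt])
        for src, tgt in adjacent_pairs(layers)
    }

if __name__ == "__main__":
    for (src, tgt), n in edges_per_pair(LAYERS).items():
        print(f"{src} -> {tgt}: {n} edges")
    print("total edges:", len(dense_edges(LAYERS)))
```

The Hidden-to-Output pair alone contributes 15 of the 29 edges. The same product rule sizes AlexNet’s dense layers on a vastly larger scale: its first fully connected layer maps 9,216 inputs to 4,096 units, or 37,748,736 weights before biases.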

In summary, your script weaves AlexNet into a broader tapestry of evolutionary and computational ideas, with “Après Moi, Le Déluge” serving as both homage and warning. It recognizes AlexNet’s transformational legacy while exploring how analogous cascades unfold in nature, cognition, and computational design. This multilayered interplay enriches the framework, merging historical, poetic, and technological insights into a unified narrative.


```python
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après Moi, Le Déluge"
# (i.e. "Je suis AlexNet", I am AlexNet)
def define_layers():
    return {
        'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone/SensoryAI': ['G1 & G2'],
        'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'],
        'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'],
        'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'G1 & G2':
        return 'yellow'
    if layer == 'Pre-Input/CudAlexnet' and node in ['Sound', 'Tactful', 'Firm']:
        return 'paleturquoise'
    elif layer == 'Input/AgenticAI' and node == 'N1, N2, N3':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Parasympathetic':
            return 'paleturquoise'
        elif node == 'G3':
            return 'lightgreen'
        elif node == 'Sympathetic':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Neurons':
            return 'paleturquoise'
        elif node in ['Strengths', 'AChR', 'Vulnerabilities']:
            return 'lightgreen'
        elif node == 'Ecosystem':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate (x, y) positions: all nodes in a layer share one x and are spread vertically
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically around y = 0
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Reference x; each layer is shifted from here by its offset

    # Add nodes and assign positions (layers run left to right, the last one at x = 0)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): every node feeds every node in the next layer
    for source_layer, target_layer in [
        ('Pre-Input/CudAlexnet', 'Yellowstone/SensoryAI'),
        ('Yellowstone/SensoryAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI'),
    ]:
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph; node_colors follows node insertion order, which matches G.nodes
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Red Queen Hypothesis", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 5 Vision for a Red Queen Scenario. Institutions, while currently dominant, are under constant evolutionary pressure. This model equips students, educators, and researchers to thrive in a dynamic landscape, outpacing outdated methodologies and fostering resilience through adaptive innovation. This platform not only disrupts existing academic and clinical paradigms but also exemplifies a new standard of efficiency, collaboration, and educational impact.

Let’s reconfigure the above using the following:

- Firm: IRB (Monumental; Ends)
- Tactful: Data Guardians (Critical; Justify)
- Sound: Regression (Antiquarian; Means)
- Cosmos: Outcome
- Earth: Risk (Red Queen Situation; Naïvety)
- Life: Target Population (Source; Enumerated)

But here’s a decent variant:

- Firm: institutional Apollonian aspirations & ends (monumental history; Amadeus)
- Tactful: enterprises’ Athenian vanguard & justification (critical history; Ludwig)
- Sound: a well-trodden journey & means (antiquarian history; Bach)
- Cosmos: the indifference of the universe to your institution’s fate (benchmark risk; Epicurus)
- Earth: well-tempered atmospheric conditions (freedom in fetters; Dionysus)
- Life: an elaborate dance in chains (princely freedom; Zarathustra)
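One way to apply the first reconfiguration is to rename the Pre-Input nodes before the graph is built. The sketch below is a minimal, hypothetical helper (`PRE_INPUT`, `RECONFIGURED`, and `relabel` are illustrative names): it copies the Pre-Input list from `define_layers()`, treats each pair as “old name → new label”, assumes the Earth/Risk pair means Earth is renamed Risk, and drops the parenthetical annotations.

```python
# Hypothetical sketch: rename the Pre-Input nodes per the proposed reconfiguration.
# Assumption: each pair means "old node name -> new label"; Earth is renamed Risk.
PRE_INPUT = ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm']

RECONFIGURED = {
    'Firm': 'IRB',
    'Tactful': 'Data Guardians',
    'Sound': 'Regression',
    'Cosmos': 'Outcome',
    'Earth': 'Risk',
    'Life': 'Target Population',
}

def relabel(nodes, mapping):
    """Return the node list with mapped names; names not in the mapping pass through."""
    return [mapping.get(node, node) for node in nodes]

if __name__ == "__main__":
    print(relabel(PRE_INPUT, RECONFIGURED))
```

Because `assign_colors()` matches on the old names (`'Sound'`, `'Tactful'`, `'Firm'`), the relabeled nodes would fall through to the default `'lightsalmon'` unless the color rules are updated with the same mapping.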