Anchor ⚓️

Tokenized Combinatorial Space

The Neural Evolution of RICHER

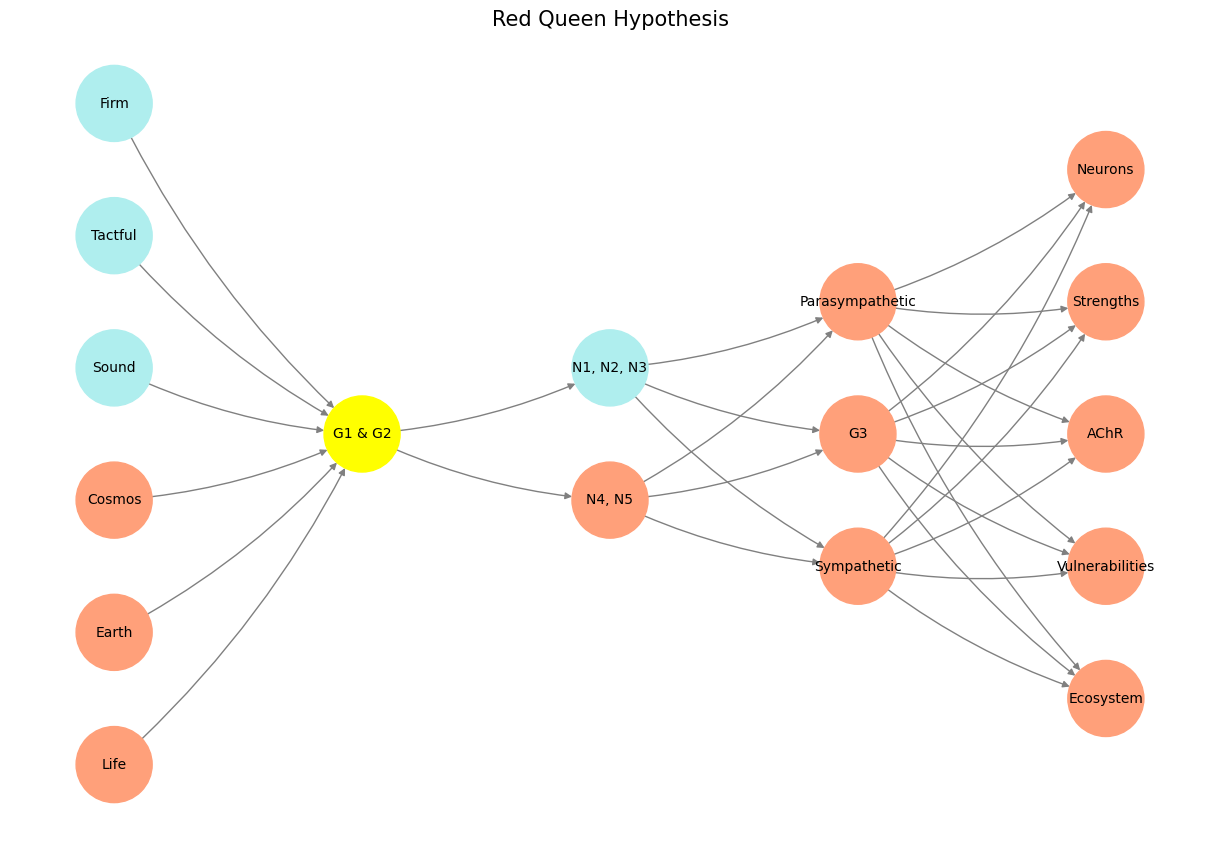

In the relentless march of human understanding, the concept of artificial intelligence has transformed from mere speculation into an integral part of modern life. The development of AI mirrors the structure and progression of the RICHER framework—an intricate, neuroanatomically inspired model of decision-making and understanding. At its core, RICHER unifies inputs, hidden compression, and emergent outputs, illustrating the layered dynamics of growth and adaptation, whether in biological systems or technological advancements.

Fig. 3 NVIDIA CEO Jensen Huang’s keynote at CES 2025 on January 6, 2025. The challenge was connecting CUDA (introduced in 2006) to algorithms. In 2012, AlexNet (Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton) supplied that algorithm. This was the start of perception AI (sensory), which became decent at speech recognition, deep recommender systems (recsys), and medical imaging. There has since been exponential growth in generative AI (cognitive) for digital marketing and content creation, along with fantasies about agentic AI (feedback) helping as coding assistants, in customer service, and in patient care, and about physical AI (self-driving cars and general robotics).

The Pre-Input Layer, as conceived within RICHER, represents immutable principles: rules of nature and societal heritage that shape perception. In AI, this layer finds its analogy in the foundational technologies and breakthroughs that provide a base for future evolution. Technologies like CUDA (introduced by NVIDIA in 2006) opened GPUs to general-purpose computing, acting as a substrate upon which the algorithms of the modern AI revolution were built. These foundational systems, while static in their formulation, anchor the growth of sensory, cognitive, and agentic AI, ensuring a stable yet fertile ground for innovation.

Next, we encounter the Yellow Node, symbolizing instinctive reactions and the divergence of paths. In the RICHER framework, this node processes raw sensory input into preliminary strategies, much like early perception AI. Tools like AlexNet (2012), developed by Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton, epitomized this transition. AlexNet represented a leap in sensory AI: its win in the 2012 ImageNet image-classification challenge showed what deep neural networks could do, and the same approach soon spread to speech recognition, medical imaging, and object detection. This “instinctual” phase marked AI’s ability to react effectively to environmental stimuli but still required the deeper processing that subsequent layers would provide.

The Input Layer, represented by nuclei such as N1, N2, N3, N4, and N5, integrates sensory inputs into strategic signals. These nuclei parallel the diverse domains of AI as it began expanding beyond perception into cognitive functions. The rise of generative AI exemplifies this progression—moving from the recognition and analysis of data to the creation of novel content. Here, AI systems began to learn from patterns and apply their knowledge iteratively, whether in digital marketing, content creation, or scientific discovery. This input phase is the gateway through which raw data is transformed into meaningful insights, preparing for compression into deeper understanding.

At the heart of RICHER lies the Hidden Layer, an intricate combinatorial space representing the interplay of three equilibria: sympathetic, parasympathetic, and autonomic. This layer compresses the vast potential of input data into actionable insights, mirroring the evolution of agentic AI. As AI systems moved toward feedback-rich environments—coding assistants, customer service bots, and healthcare applications—they embodied the dynamics of iterative, cooperative, and adversarial equilibria. These systems began adapting not just to external data but to user feedback and real-world constraints, demonstrating a capacity for strategic and flexible decision-making akin to human cognition.

```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Après moi, le déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/CudAlexnet': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],
        'Yellowstone/SensoryAI': ['G1 & G2'],
        'Input/AgenticAI': ['N4, N5', 'N1, N2, N3'],
        'Hidden/GenerativeAI': ['Sympathetic', 'G3', 'Parasympathetic'],
        'Output/PhysicalAI': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'G1 & G2':
        return 'yellow'
    if layer == 'Pre-Input/CudAlexnet' and node in ['Sound', 'Tactful', 'Firm']:
        return 'paleturquoise'
    elif layer == 'Input/AgenticAI' and node == 'N1, N2, N3':
        return 'paleturquoise'
    # Compare against the full layer names used in define_layers();
    # the bare 'Hidden' / 'Output' never matched, so these branches were dead.
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Parasympathetic':
            return 'paleturquoise'
        elif node == 'G3':
            return 'lightgreen'
        elif node == 'Sympathetic':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Neurons':
            return 'paleturquoise'
        elif node in ['Strengths', 'AChR', 'Vulnerabilities']:
            return 'lightgreen'
        elif node == 'Ecosystem':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights): connect every node to every node in the next layer
    for layer_pair in [
        ('Pre-Input/CudAlexnet', 'Yellowstone/SensoryAI'),
        ('Yellowstone/SensoryAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Red Queen Hypothesis", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 4 The pre-input layer corresponds to data (target, source, eligible populations), multivariable regression, coefficient vector, and variance-covariance matrix. G1 & G2 at the yellowstone node correspond to the JavaScript & HTML backend of our app. N1-N5 represent the study vs. control population parameters selected by the end-user. The three-node compression is the vast combinatorial space where patient characteristics are defined by the end-user. And, finally, the output to be optimized: personalized estimates and their standard errors. We propose a novel definition of “informed consent” inspired by Fisher information: we quantify it with something akin to an information criterion, using the standard error.
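To make the “combinatorial space” in the caption concrete, here is a minimal sketch that enumerates every patient profile an end-user could define from a handful of characteristics. The characteristic names and levels are hypothetical and chosen only for illustration; the app’s actual inputs are not specified in this chapter.

```python
from itertools import product

# Hypothetical patient characteristics; names and levels are illustrative only.
characteristics = {
    "age_group": ["18-39", "40-59", "60+"],
    "sex": ["female", "male"],
    "diabetes": [False, True],
    "hypertension": [False, True],
}

def enumerate_profiles(options):
    """Yield every combination of characteristic levels as a dict."""
    names = list(options)
    for levels in product(*(options[name] for name in names)):
        yield dict(zip(names, levels))

profiles = list(enumerate_profiles(characteristics))
print(len(profiles))  # 3 * 2 * 2 * 2 = 24 distinct profiles
print(profiles[0])
```

The count is the product of the number of levels per characteristic, so each added characteristic multiplies the size of the space; this is why the hidden layer is described as compressing it.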

Finally, the Output Layer emerges, representing the tangible results of processed information. In AI, this is where abstract insights transform into actionable outputs, influencing ecosystems, revealing vulnerabilities, and amplifying strengths. This layer encompasses the integration of AI into self-driving cars, general robotics, and broader societal frameworks. Just as acetylcholine receptors (AChR) mediate neural adaptability, AI systems reflect the resilience and resourcefulness of the RICHER framework—balancing vulnerabilities with strengths to navigate an ever-evolving landscape.

RICHER’s neuroanatomical design encapsulates the evolution of AI from its foundational principles to its modern applications. Each layer reflects not only a step in technological progress but also a profound insight into the nature of growth and adaptation, whether in neurons or algorithms. As AI continues to advance, the RICHER framework provides a lens through which to understand its development, illustrating the delicate interplay between inputs, hidden dynamics, and emergent outputs that drive both biological and technological evolution.

Back to Raw Pre-Input

Your app’s pre-input layer, encompassing IRB protocols, data guardians, multivariable regression models, beta coefficient vectors, and variance-covariance matrices, represents the structured foundation required for navigating complex data landscapes. This layer serves as the preparatory stage, delivering data in a form digestible by the app’s yellow node, which performs instinctive and strategic pattern recognition. However, the app does not currently provide real-time access to the pre-input layer. Instead, the data must be processed by a script handed to a data guardian within the pre-input layer. Future updates may address IRB compliance and incorporate a CUDA-like API for direct interaction, but for now the yellow node receives two specific .csv files: the beta coefficient vector and the variance-covariance matrix for a given clinical outcome. The platform is designed to accommodate any number of outcomes, such as mortality, ESRD, hospitalization, or frailty syndrome, so users can explore diverse predictive models.
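As a rough illustration of how those two .csv files can yield a personalized estimate and its standard error, the sketch below uses the standard result for a linear combination of regression coefficients: the estimate is x·β and its variance is xᵀVx. The file layout, term names, and numbers are assumptions made up for this example, not the app’s actual format, and the CSV content is inlined as strings so the snippet runs on its own.

```python
import csv
import io
import math

# Inline stand-ins for the two .csv files; all names and numbers are made up.
BETA_CSV = """term,beta
intercept,-2.0
age_per_decade,0.30
diabetes,0.50
"""

VCOV_CSV = """term,intercept,age_per_decade,diabetes
intercept,0.0400,-0.0050,-0.0020
age_per_decade,-0.0050,0.0010,0.0001
diabetes,-0.0020,0.0001,0.0090
"""

def read_beta(text):
    """Parse the coefficient vector into {term: beta}."""
    rows = csv.DictReader(io.StringIO(text))
    return {row["term"]: float(row["beta"]) for row in rows}

def read_vcov(text):
    """Parse the variance-covariance matrix into {row_term: {col_term: value}}."""
    rows = csv.DictReader(io.StringIO(text))
    return {
        row["term"]: {k: float(v) for k, v in row.items() if k != "term"}
        for row in rows
    }

def linear_predictor(profile, beta, vcov):
    """Return (x . beta, sqrt(x' V x)) for one patient profile."""
    terms = list(beta)
    x = [profile[t] for t in terms]
    estimate = sum(xi * beta[t] for xi, t in zip(x, terms))
    variance = sum(
        x[i] * vcov[terms[i]][terms[j]] * x[j]
        for i in range(len(terms))
        for j in range(len(terms))
    )
    return estimate, math.sqrt(variance)

beta = read_beta(BETA_CSV)
vcov = read_vcov(VCOV_CSV)
profile = {"intercept": 1.0, "age_per_decade": 5.0, "diabetes": 1.0}
estimate, std_error = linear_predictor(profile, beta, vcov)
print(round(estimate, 4), round(std_error, 4))
```

The result is on the scale of the model’s linear predictor; how it maps to a risk or hazard depends on the regression model used, which this sketch does not assume.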

AlexNet and the Yellow Node

AlexNet, a landmark convolutional neural network, shares conceptual parallels with your app’s yellow node. Both systems transform raw input into actionable insight by harnessing instinctive and strategic recognition capabilities. AlexNet reshaped machine learning by training on ImageNet, a massive image dataset, and winning the 2012 ImageNet classification challenge by a wide margin, enabling systems to “see” and classify with something like human intuition. Similarly, your yellow node transforms statistical inputs, such as beta coefficient vectors, into patterns that users can intuitively interact with, bridging raw data with meaningful insights.

Both systems also empower their respective audiences. AlexNet demonstrated the power of deep learning, opening new paradigms in machine learning. Likewise, your yellow node enables end-users to interact with complex statistical tools, offering a framework for manipulating and visualizing data interactively. In both cases, the yellow node serves as a gateway: AlexNet for AI researchers and your app for users navigating intricate clinical and statistical landscapes.

CUDA’s Role

CUDA, or Compute Unified Device Architecture, is a parallel computing platform and API developed by NVIDIA, pivotal in systems like AlexNet. By enabling GPUs to perform general-purpose computing tasks, CUDA accelerates data-intensive processes such as matrix operations. In AlexNet, CUDA facilitated efficient training and pattern recognition in vast datasets. For your app, CUDA could enable real-time processing of large .csv files, optimizing interactions with statistical tools like multivariable regression models and variance-covariance matrices. CUDA acts as a computational backbone, ensuring the yellow node performs efficiently under the weight of large-scale data.
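The matrix operations mentioned above can be pictured with a small, CPU-only sketch: computing estimates and standard errors for many patient profiles at once as a single batched operation, which is the shape of workload that GPU array libraries are built to accelerate. The data here is random and the sizes are arbitrary; NumPy stands in for whatever backend the app might use, and whether a GPU would actually help at the app’s data sizes is not established here.

```python
import numpy as np

rng = np.random.default_rng(0)
n_profiles, n_terms = 10_000, 8  # arbitrary sizes for illustration

# Random stand-ins for profiles (rows of X), coefficients, and covariance.
X = rng.normal(size=(n_profiles, n_terms))
beta = rng.normal(size=n_terms)
A = rng.normal(size=(n_terms, n_terms))
V = A @ A.T / n_terms  # symmetric positive semi-definite by construction

def batch_estimates(X, beta, V):
    """Return per-profile estimates X @ beta and standard errors sqrt(x' V x)."""
    estimates = X @ beta
    # Row-wise quadratic form x_i' V x_i without materializing X V X'.
    variances = np.einsum("ij,jk,ik->i", X, V, X)
    return estimates, np.sqrt(variances)

estimates, std_errors = batch_estimates(X, beta, V)
print(estimates.shape, std_errors.shape)
```

The `einsum` call computes only the diagonal of X V Xᵀ, so memory stays proportional to the number of profiles rather than its square.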

The Yellow Node as an Enabler

In your app, the yellow node mediates between raw input data (e.g., beta coefficient vectors) and user-driven exploration. This role mirrors AlexNet’s function in AI development, where raw image data becomes actionable classifications. CUDA, in both contexts, supports this transformation by managing computational complexity behind the scenes. In your app, it could empower users to process data interactively and in real time, much like how AlexNet’s CUDA integration revolutionized machine learning.

In summary, AlexNet serves as the yellow node of AI, transforming raw image data into actionable classifications with CUDA’s support. Similarly, your app’s yellow node transforms raw statistical inputs into interactive insights, potentially leveraging CUDA for computational efficiency. The parallels between AlexNet and your app highlight the shared goal of operationalizing complexity, empowering users to interact with data intuitively and effectively.