Born to Etiquette

The development of idiomatic speech, music, and poetry is rooted in the physiological and neural structures that process sound, rhythm, and meaning. At the core of this system lie the ganglia (G1-G3) and the nuclei (N1-N5), which govern sensory input, motor control, and the intricate integration of auditory information. The cranial-nerve and dorsal-root ganglia (G1 & G2) serve as the primary conduits for raw sensory input, relaying the auditory and proprioceptive signals that help a child orient within their sonic environment. The basal ganglia, thalamus, and hypothalamus (N1, N2, N3) filter, process, and contextualize this information, determining which sounds matter and how they should be prioritized. The brainstem and cerebellum (N4 & N5) coordinate the timing, rhythm, and precision of responses, so that body and voice modulate in step with the sonic world. Human hearing nominally spans about 20 Hz to 20,000 Hz, but from infancy the band between roughly 20 Hz and 4,000 Hz does much of the work: it captures the essential elements of human speech, environmental sounds, and music, shaping perception in ways that extend far beyond language alone.
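To make the 20 Hz to 4,000 Hz band concrete, the sketch below band-limits a test signal to that range and reports what survives. It is a minimal illustration under stated assumptions: an ideal FFT "brick-wall" filter stands in for hearing (it is not a model of the cochlea or of G1-G3/N1-N5), the 16 kHz sample rate and the three test tones are hypothetical, and `band_limit`, `peak_frequency`, and `energy_fraction` are hypothetical helper names.

```python
import numpy as np

def band_limit(signal, sample_rate, low_hz=20.0, high_hz=4000.0):
    """Keep only FFT bins between low_hz and high_hz (an idealized brick-wall band-pass)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    mask = (freqs >= low_hz) & (freqs <= high_hz)
    return np.fft.irfft(spectrum * mask, n=len(signal))

def peak_frequency(signal, sample_rate):
    """Frequency in Hz of the strongest FFT bin."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    return float(freqs[np.argmax(spectrum)])

def energy_fraction(original, filtered):
    """Share of the original signal's energy that survives filtering."""
    return float(np.sum(filtered ** 2) / np.sum(original ** 2))

# Hypothetical test signal: one second at 16 kHz mixing a 10 Hz rumble,
# a 440 Hz tone, and a 6,000 Hz tone, all at equal amplitude.
sample_rate = 16_000
t = np.arange(sample_rate) / sample_rate
mixed = (np.sin(2 * np.pi * 10 * t)
         + np.sin(2 * np.pi * 440 * t)
         + np.sin(2 * np.pi * 6000 * t))

filtered = band_limit(mixed, sample_rate)
print(peak_frequency(filtered, sample_rate))  # about 440.0
print(energy_fraction(mixed, filtered))       # about 1/3: only the 440 Hz tone is in band
```

Because each tone completes a whole number of cycles in one second, it falls exactly on an FFT bin, so the filter removes the 10 Hz and 6,000 Hz components cleanly; real recordings would need a windowed or smoother filter.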

The auditory experiences of childhood are not passive occurrences but active encounters with a rich and structured world of sound. The time of day influences which sounds predominate—early morning birdsong, midday human activity, evening hush, nocturnal stirrings—each forming a recognizable cadence. Landscapes, too, determine the nature of auditory inputs: the sound of the wind through trees in the woods, the flat and open echo of voices across plains, the rhythmic crashing of waves by the sea. Tribe and ritual reinforce structured patterns of sound that carry cultural meaning—whether in the call-and-response of communal work, the syncopations of dance rhythms, or the steady intonations of religious oratory. Speech itself emerges as a subset of this broader system, encoded within the same fundamental structures that govern melody and rhythm in music.

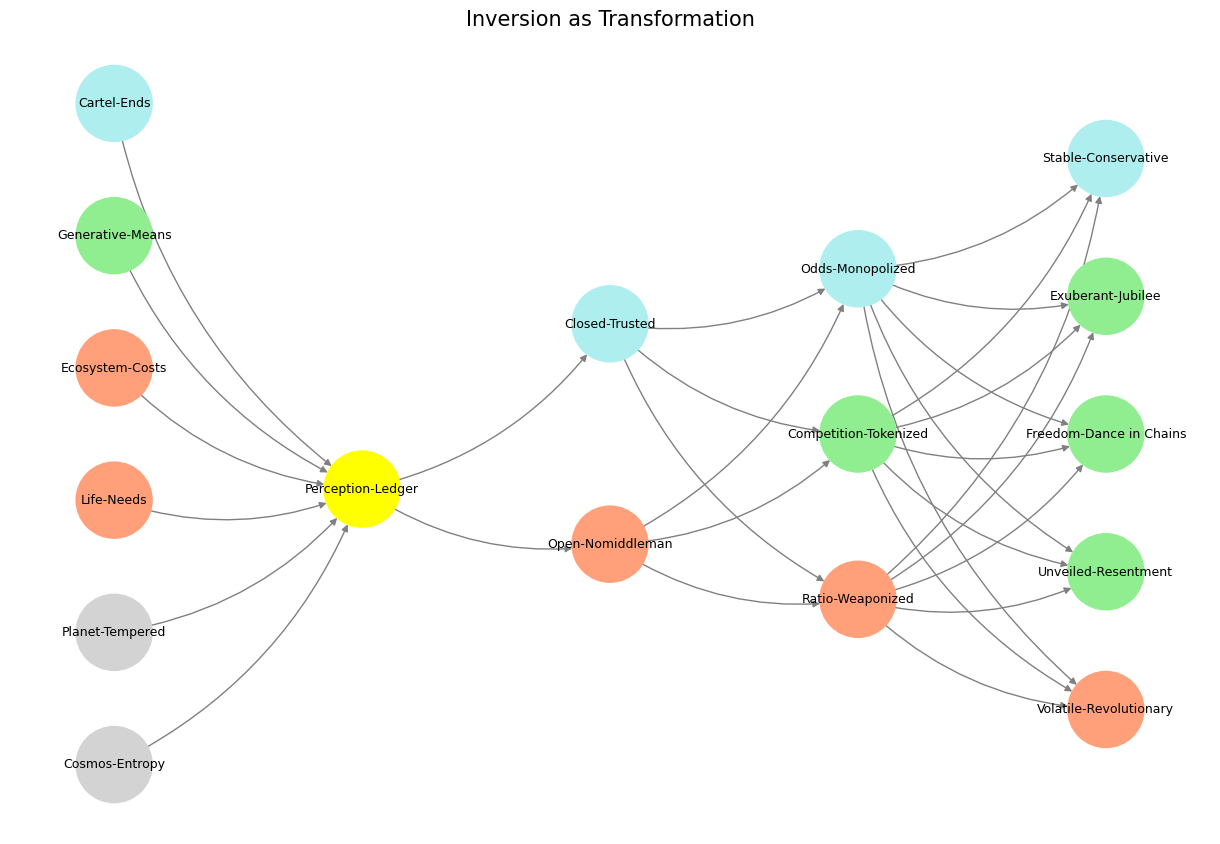

Fig. 33 I got my hands on every recording by Hendrix, Joni Mitchell, CSN, etc. (foundations). Thou shalt inherit the kingdom (yellow node). And so why would Bankman-Fried’s FTX go about rescuing other artists who were failing to keep up with the Hendrixes? Why worry about the fate of the music industry if some unknown joe somewhere in their parents’ basement may encounter an unknown-unknown that blows them out? Indeed, why did he take on such responsibility? A great question by the interviewer. The tonal inflections, the overuse of “ecosystem” (a node in our layer 1), and a clichéd variant of one of our output-layer nodes (unknown) tell us something: our neural network digests everything and is coherent. It’s based on our neural anatomy!

The shared neural infrastructure underlying language and music ensures that melodies, rhythms, improvisations, emotions, and cadences are communally recognized. Pretext sets the expectation of meaning before a single word is spoken. Subtext conveys additional layers of nuance, operating beneath the surface of direct statements. Text itself is the explicit content, but without context—the setting, mood, speaker, and audience—it loses its richness. Metatext, then, allows for later reinterpretation, reflection, and conscious modification of one’s voice, as seen in figures like Margaret Thatcher, whose deliberate changes in vocal tone reflected an effort to reshape perception. These layers work in concert, making idiomatic speech not merely a function of vocabulary but an emergent property of deeply embedded cultural, biological, and cognitive processes.

Given the profound interconnectedness of speech, music, and poetry, any disruption that prevents the seamless emergence of these elements warrants investigation. If poetry fails to flow idiomatically, or if music feels alien to its intended audience, something has gone awry in the development or transmission of these auditory patterns. The failure could stem from a breakdown in early exposure—where the child has not been immersed in the full spectrum of musical and linguistic cadences that characterize a native auditory environment. It may be a result of excessive restriction to transactional language at the expense of expressive, communal, or ritualistic forms of speech. It could even reflect a larger cultural disruption, where the traditional interplay of speech and music has been fragmented through historical forces such as colonialism, mass media standardization, or the erosion of localized oral traditions.

These disruptions manifest most clearly when an individual struggles to internalize the musicality of a foreign language or musical tradition. While vocabulary and syntax can be learned mechanically, the deeper melodic and rhythmic structures of speech often remain elusive without full immersion. This is why accents are so difficult to shed and why folk music, deeply embedded in a community’s shared neural and cultural landscape, is nearly impossible to replicate authentically unless one has grown up within its auditory logic. The infrastructure required for idiomatic expression in both speech and music depends on prolonged exposure to its natural cadences, shaping neural pathways that cannot be easily reconfigured later in life.

The investigation of what inhibits idiomatic fluency in speech, music, or poetry must therefore go beyond mere technical proficiency and examine the broader conditions under which auditory learning occurs. If a child’s early exposure to sound lacks the richness of varied melodic and rhythmic structures, if transactional speech dominates at the expense of expressive and communal vocalization, or if cultural forces suppress rather than nourish the natural interplay of speech and music, then idiomatic expression—whether in language or melody—will be stunted. Understanding this requires a recognition that speech and music are not separate domains but manifestations of the same underlying neural and cultural processes. To fail in one is to fail in the other, and to restore both requires a return to the deep, immersive, and communal soundscapes that have shaped human cognition for millennia.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network fractal
def define_layers():
    return {
        'World': ['Cosmos-Entropy', 'Planet-Tempered', 'Life-Needs', 'Ecosystem-Costs', 'Generative-Means', 'Cartel-Ends', ], # Polytheism, Olympus, Kingdom
        'Perception': ['Perception-Ledger'], # God, Judgement Day, Key
        'Agency': ['Open-Nomiddleman', 'Closed-Trusted'], # Evil & Good
        'Generative': ['Ratio-Weaponized', 'Competition-Tokenized', 'Odds-Monopolized'], # Dynamics, Compromises
        'Physical': ['Volatile-Revolutionary', 'Unveiled-Resentment', 'Freedom-Dance in Chains', 'Exuberant-Jubilee', 'Stable-Conservative'] # Values
    }

# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Perception-Ledger'],
        'paleturquoise': ['Cartel-Ends', 'Closed-Trusted', 'Odds-Monopolized', 'Stable-Conservative'],
        'lightgreen': ['Generative-Means', 'Competition-Tokenized', 'Exuberant-Jubilee', 'Freedom-Dance in Chains', 'Unveiled-Resentment'],
        'lightsalmon': [
            'Life-Needs', 'Ecosystem-Costs', 'Open-Nomiddleman', # Ecosystem = Red Queen = Prometheus = Sacrifice
            'Ratio-Weaponized', 'Volatile-Revolutionary'
        ],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray')) # Default color fallback

    # Add edges (automated for consecutive layers)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Inversion as Transformation", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 34 Glenn Gould and Leonard Bernstein famously disagreed over the tempo and interpretation of Brahms’ First Piano Concerto at a 1962 New York Philharmonic concert. Before the performance began, Bernstein, who was conducting, addressed the audience to distance himself from Gould’s markedly slower interpretation, yet still allowed Gould to play it as planned. The episode is widely regarded as one of the most controversial moments in classical music history.
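The edge loop in `visualize_nn` connects every node in one layer to every node in the next, so the drawing is a fully connected feed-forward graph. The sketch below checks that wiring without plotting. It copies the layer lists from `define_layers` so it runs on its own; `build_graph` and `expected_edges` are hypothetical helper names, not part of the original cell.

```python
import networkx as nx

# A copy of the layer lists from define_layers(), so this sketch is self-contained.
LAYERS = {
    'World': ['Cosmos-Entropy', 'Planet-Tempered', 'Life-Needs',
              'Ecosystem-Costs', 'Generative-Means', 'Cartel-Ends'],
    'Perception': ['Perception-Ledger'],
    'Agency': ['Open-Nomiddleman', 'Closed-Trusted'],
    'Generative': ['Ratio-Weaponized', 'Competition-Tokenized', 'Odds-Monopolized'],
    'Physical': ['Volatile-Revolutionary', 'Unveiled-Resentment',
                 'Freedom-Dance in Chains', 'Exuberant-Jubilee', 'Stable-Conservative'],
}

def build_graph(layers):
    """Wire every node in each layer to every node in the next layer."""
    G = nx.DiGraph()
    for layer_name, nodes in layers.items():
        G.add_nodes_from(nodes, layer=layer_name)
    names = list(layers)
    for src, dst in zip(names, names[1:]):
        G.add_edges_from((s, t) for s in layers[src] for t in layers[dst])
    return G

def expected_edges(layers):
    """Fully connected consecutive layers give sum(n_i * n_(i+1)) edges."""
    sizes = [len(nodes) for nodes in layers.values()]
    return sum(a * b for a, b in zip(sizes, sizes[1:]))

G = build_graph(LAYERS)
print(G.number_of_edges(), expected_edges(LAYERS))                         # 29 29
print(G.in_degree('Perception-Ledger'), G.out_degree('Perception-Ledger'))  # 6 2

# Removing the single Perception node disconnects World from Physical.
H = G.copy()
H.remove_node('Perception-Ledger')
print(nx.has_path(H, 'Cosmos-Entropy', 'Stable-Conservative'))             # False
```

With layer sizes 6, 1, 2, 3, 5 the edge count is 6 + 2 + 6 + 15 = 29. Because the Perception layer holds only one node, every path from a World node to a Physical node passes through `Perception-Ledger`, the yellow node.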