Anchor ⚓️#

The Cosmos in a Terminal#

Heraclitus famously declared, “You cannot step into the same river twice,” a poignant reminder that flux governs the cosmos. The currents of existence are in constant motion, impervious to human attempts to impose stability. Yet within this chaotic flow, there is a remarkable paradox: automation, particularly in the form of shell scripts executed on a terminal, offers a kind of cosmic anchor. It doesn’t halt the flux, but it provides a structured lens through which we can distinguish between the inherent uncertainty of the universe and actionable errors within our systems.

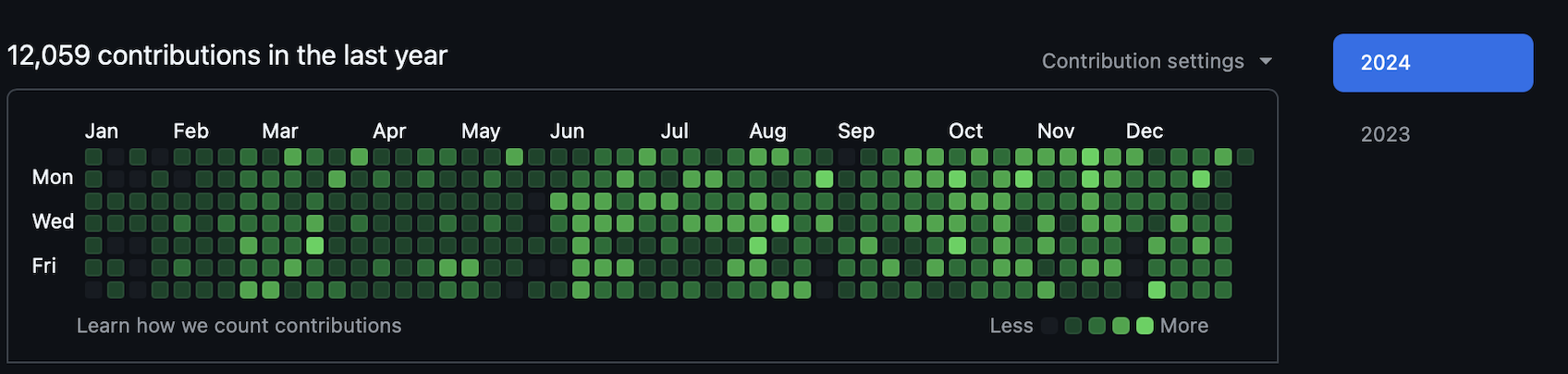

I have learned this lesson through the crucible of practice. As December 2024 draws to a close, I have completed over 12,000 deployments on GitHub this year. For the statistically inclined, that is roughly 1.4 deployments for every hour of the year (12,000 divided by the 8,784 hours in a leap year): unstoppable, unrelenting, and profoundly automated. If that sounds insane, it’s because it is. And yet, this madness holds the seed of genius. These deployments were achieved not through brute force but through the disciplined elegance of automated scripts.

The Insight Born of Pain#

Fig. 3 The 10,000-hour rule is the popular idea that mastering a complex skill or body of material requires roughly 10,000 hours of practice.

Why are automated scripts so important? Because they are an antidote to the chaos. Without them, every deployment becomes a manual negotiation with the cosmos, a Sisyphean struggle to discern whether an issue lies within your system or in the broader, inscrutable realm of cosmic risk. This is the crux: when you rely on automation, you know, deeply, intuitively, axiomatically, that a failure is unlikely to be a bug in your process. More often it is an artifact of cosmic risk; only occasionally is it a legitimate issue requiring debugging.

This insight is not theoretical; it is painfully earned. For two years, I wrestled with the ambiguity that arises when processes fail sporadically. Before automation, every failure felt personal, like a rebuke from the terminal itself. I would waste hours debugging code that was perfectly functional, caught in an endless cycle of questioning myself, my methods, and my tools. The moment I embraced automation, the fog began to lift. When an automated script fails, you run it again. And again. And again. And when it works—as it often does without intervention—you have your answer: the cosmos was simply being the cosmos.
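The "run it again" habit described above can be sketched in a few lines of Python. This is only an illustration, not the scripts actually used for these deployments: the function name `run_with_retries`, its parameters, and the idea of wrapping a script such as `./deploy.sh` are all assumptions made for the example.

```python
import subprocess
import sys
import time


def run_with_retries(command, max_attempts=3, delay_seconds=0.0):
    """Run `command` until it exits with status 0 or attempts run out.

    Returns a tuple (succeeded, attempts_used).
    """
    for attempt in range(1, max_attempts + 1):
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode == 0:
            # Success: any earlier failures were transient.
            return True, attempt
        if attempt < max_attempts:
            # Pause before trying again (hypothetical default: no pause).
            time.sleep(delay_seconds)
    # Every attempt failed: this is the signal to read the logs and debug.
    return False, max_attempts


# Usage: a real call might pass a hypothetical script such as ["./deploy.sh"].
# Here the current Python interpreter stands in so the example runs anywhere.
ok, attempts = run_with_retries([sys.executable, "-c", "pass"])
print(ok, attempts)  # prints: True 1
```

If the command succeeds on a later attempt, nothing in the process changed between runs, which is the essay's point: the earlier failure was noise rather than a bug.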

Automation as a Control Population#

Automation offers more than convenience; it provides a philosophical framework. In clinical research, a control population allows us to isolate the effects of an intervention from the noise of biological variability. Automated scripts function similarly in the world of software development. They establish a baseline, a repeatable process that works 99.9% of the time. When it fails, you are not consumed by doubt. You have a control against which to measure the failure.

Occasionally, debugging is necessary. Automation does not absolve you of responsibility; it clarifies the boundaries of your responsibility. When an automated script fails repeatedly and the error logs point to a specific issue, you know it is time to act. But until that threshold is reached, you are free to trust the process. This freedom is invaluable. It saves time, energy, and—most importantly—mental bandwidth, allowing you to focus on higher-order problems rather than getting lost in the weeds of debugging phantom issues.
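One way to make "that threshold" concrete is a back-of-the-envelope calculation. The sketch below assumes that transient failures are independent of one another and takes the 99.9% baseline above at face value (a 0.1% transient failure rate). The function names and the `tolerance` cutoff are hypothetical choices for illustration.

```python
def chance_of_consecutive_failures(transient_failure_rate, consecutive_failures):
    """Probability that this many runs in a row fail purely by chance,
    assuming each run fails independently at the given rate."""
    return transient_failure_rate ** consecutive_failures


def should_debug(consecutive_failures, transient_failure_rate=0.001, tolerance=1e-6):
    """Suggest debugging once chance alone is an implausible explanation.

    `tolerance` is a hypothetical cutoff: below it, "the cosmos being the
    cosmos" is no longer a credible account of the failures.
    """
    chance = chance_of_consecutive_failures(transient_failure_rate, consecutive_failures)
    return chance < tolerance


print(should_debug(1))  # prints: False
print(should_debug(3))  # prints: True
```

Under these assumptions a single failure is well within the noise, while three in a row would happen by chance about once in a billion runs, so the problem is almost certainly in the system.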

Cosmic Risk and the Art of Deployment#

In 2024, this principle has driven me to an almost ludicrous level of productivity. Over 12,000 deployments. This is not a statistic meant to impress through sheer volume—although I won’t deny that it should impress. It is a testament to the power of automation as a tool for navigating uncertainty. Each deployment represents a moment of clarity within the flux, a step forward taken with confidence that my systems are robust enough to handle the cosmic risk inherent in every execution.

This rhythm of automation is not just a technical achievement; it is a philosophy. It acknowledges the truth of Heraclitus while carving out a space for human agency within the river’s flow. By standardizing processes, automation allows us to engage with uncertainty on our terms, transforming the chaos of the cosmos into a manageable backdrop against which meaningful work can be done.

Be Impressed, but Learn#

As I write this on a Sunday evening, December 29th, at precisely 7:25 p.m., I reflect on the journey that brought me here. It is one of persistence, frustration, and eventual mastery. If there is one lesson to take from this chapter, it is this: automation is not just a tool—it is a way of thinking. It is the means by which we impose order on chaos without succumbing to it, the mechanism through which we align our work with the rhythms of the cosmos.

So, yes, be impressed. But more importantly, learn. Write your scripts. Automate your processes. Deploy, fail, and deploy again. In this dance with the universe, you will find not only efficiency but also clarity, a rare gift in a world governed by flux.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure
layers = {
    'Input': ['Resourcefulness', 'Resources'],
    'Hidden': [
        'Identity (Self, Family, Community, Tribe)',
        'Tokenization/Commodification',
        'Adversary Networks (Biological)',
    ],
    'Output': ['Joy', 'Freude', 'Kapital', 'Schaden', 'Ecosystem']
}

# Weight matrices for the connections between adjacent layers
weights = {
    'Input-Hidden': np.array([[0.8, 0.4, 0.1], [0.9, 0.7, 0.2]]),
    'Hidden-Output': np.array([
        [0.2, 0.8, 0.1, 0.05, 0.2],
        [0.1, 0.9, 0.05, 0.05, 0.1],
        [0.05, 0.6, 0.2, 0.1, 0.05]
    ])
}


# Visualizing the Neural Network
def visualize_nn(layers, weights):
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add input layer nodes
    for i, node in enumerate(layers['Input']):
        G.add_node(node, layer=0)
        pos[node] = (0, -i)
        node_colors.append('lightgray')

    # Add hidden layer nodes
    for i, node in enumerate(layers['Hidden']):
        G.add_node(node, layer=1)
        pos[node] = (1, -i)
        if node == 'Identity (Self, Family, Community, Tribe)':
            node_colors.append('paleturquoise')
        elif node == 'Tokenization/Commodification':
            node_colors.append('lightgreen')
        elif node == 'Adversary Networks (Biological)':
            node_colors.append('lightsalmon')

    # Add output layer nodes
    for i, node in enumerate(layers['Output']):
        G.add_node(node, layer=2)
        pos[node] = (2, -i)
        if node == 'Joy':
            node_colors.append('paleturquoise')
        elif node in ['Freude', 'Kapital', 'Schaden']:
            node_colors.append('lightgreen')
        elif node == 'Ecosystem':
            node_colors.append('lightsalmon')

    # Add edges based on weights
    for i, in_node in enumerate(layers['Input']):
        for j, hid_node in enumerate(layers['Hidden']):
            G.add_edge(in_node, hid_node, weight=weights['Input-Hidden'][i, j])

    for i, hid_node in enumerate(layers['Hidden']):
        for j, out_node in enumerate(layers['Output']):
            # Every hidden node's edge into 'Kapital' is drawn thicker
            width = 6 if out_node == 'Kapital' else 1
            G.add_edge(hid_node, out_node, weight=weights['Hidden-Output'][i, j], width=width)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edge_labels = nx.get_edge_attributes(G, 'weight')
    # Input-to-hidden edges carry no 'width' attribute, so default to 1
    widths = [G[u][v].get('width', 1) for u, v in G.edges()]
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, width=widths
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels={k: f'{v:.2f}' for k, v in edge_labels.items()})
    plt.title("Visualizing Capital Gains Maximization")
    plt.show()


visualize_nn(layers, weights)
```

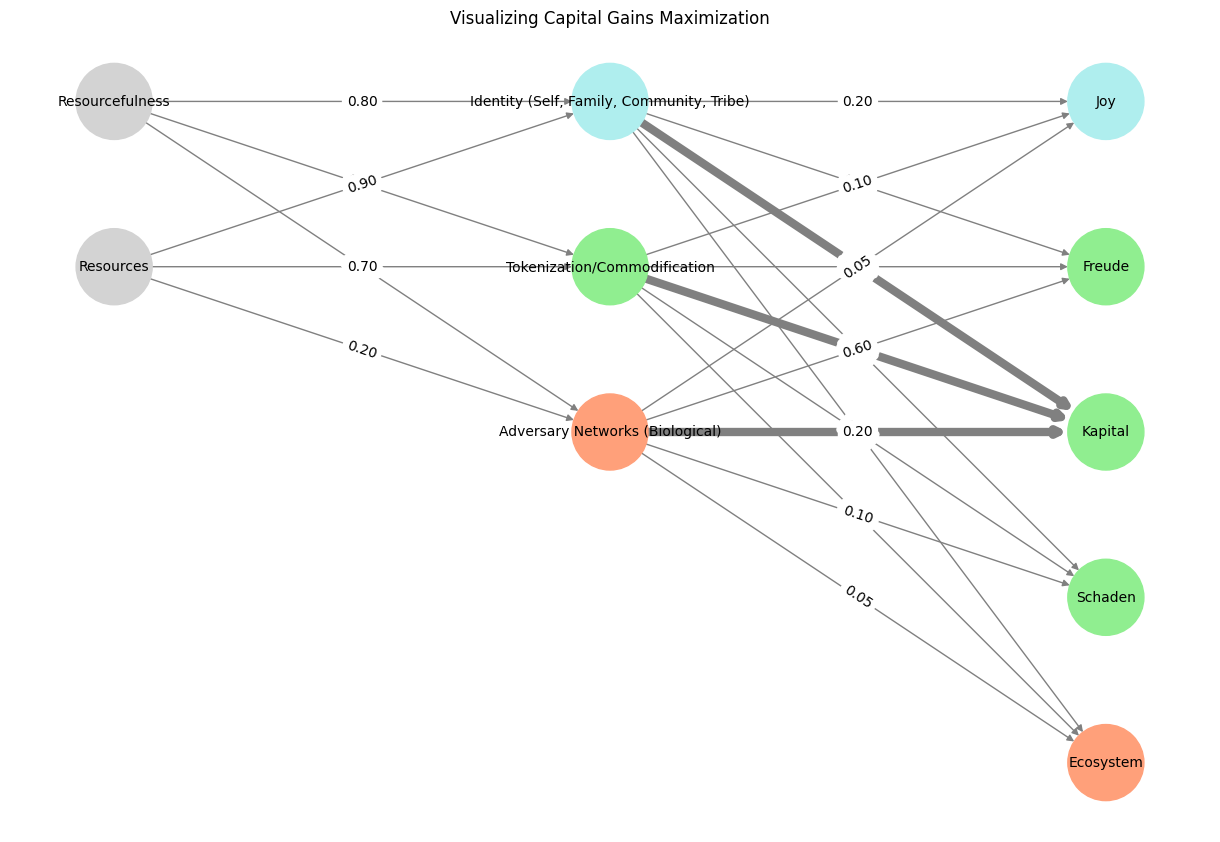

Fig. 4 Process Thinking. Our inputs consist of cosmic and strategic interactions, compressed through our system and its equilibria to yield welfare outputs. Automated shell scripts preempt wasteful backpropagation and reweighting when bad outcomes emerge from occasional cosmic flux. This mirrors a crucial principle from clinical research: a control population is needed to attribute adverse outcomes to specific system failures rather than to stochastic noise. Without proper controls, it is impossible to tell whether an adverse outcome arises from a fault in the system itself or from external, uncontrollable factors, and that distinction guides decisions about whether the system requires reappraisal, improvement, or reweighting. By continuously monitoring and adjusting, we prevent cascading inefficiencies and maintain an equilibrium that maximizes welfare outputs while minimizing waste. At the heart of this approach lies iterative learning: refining the system through feedback loops informed by empirical evidence. Clinical research’s reliance on controls provides a rigorous methodology for identifying genuine causal relationships, a framework that is equally essential in process thinking. Through this lens, automated scripts act as surrogate controls in dynamic systems, flagging inefficiencies or deviations and enabling proactive intervention. Ultimately, synthesizing cosmic interactions and strategic maneuvers into a coherent process underscores the importance of adaptive systems. Informed by controlled evaluations and automated adjustments, such systems can navigate the inherent unpredictability of cosmic flux while achieving sustainable welfare outcomes.