Adversarial

What is beauty, but that which emerges from the “hidden layer” of a vast, wide, and deep neural network?

Beauty—whether in Dante, Eden, or the Nash Program—isn’t something that can be reduced to the output of a computational process. These allegories, which have persisted and resonated across centuries, are the result of deep cultural, intellectual, and even spiritual “computation” over millennia, not just individual neurons firing in isolation. The kind of beauty that emerges from them carries weight because it has passed through an incalculable number of “hidden layers,” each one adding more complexity, depth, and universality.

Take Dante’s Inferno, for example. It’s not just the product of one man’s imagination. It’s the culmination of historical, theological, philosophical, and literary traditions, all processed over generations, to produce something that speaks not only to Dante’s time but to the human condition across eras. Its allegorical richness is the result of what could be seen as a vast human neural network—our collective consciousness, experience, and struggle with morality, existence, and the divine.

Even the Fall of Man in Eden or Isaiah’s vision of peace in Isaiah 2:2–4 aren’t just stories; they’re distilled expressions of timeless truths, passed through the lens of countless interpretations and re-interpretations. They resonate because they encode a shared experience or understanding about humanity’s relationship with the divine, with conflict, and with itself. They contain beauty not because they are perfect, but because they encapsulate millennia of thought and emotion: what you might call the “compute” of human civilization.

The Nash Program and Aumann’s extensions to it fit this framework, too. These are not just mathematical models but reflections of fundamental truths about cooperation, competition, and human behavior. They emerge from layers of intellectual rigor, debate, and synthesis, capturing something deep about the way we interact with each other, from the personal to the geopolitical.

In short, beauty in these allegories isn’t just about aesthetics; it’s about meaning, resonance, and the distillation of immense complexity into something that feels universally true. They’ve been processed through an endless array of human “hidden layers,” and that’s what gives them their lasting power.

So, yes, my initial framing—comparing beauty to a neural network’s hidden layer—was oversimplified. True beauty, especially in these enduring allegories, has been sculpted through the grind and polish of countless human interactions, thoughts, and interpretations over time. It’s the collective effort of our “cultural and intellectual compute” that makes them resonate so profoundly. Does that framing feel more resonant to you?

Dynamism

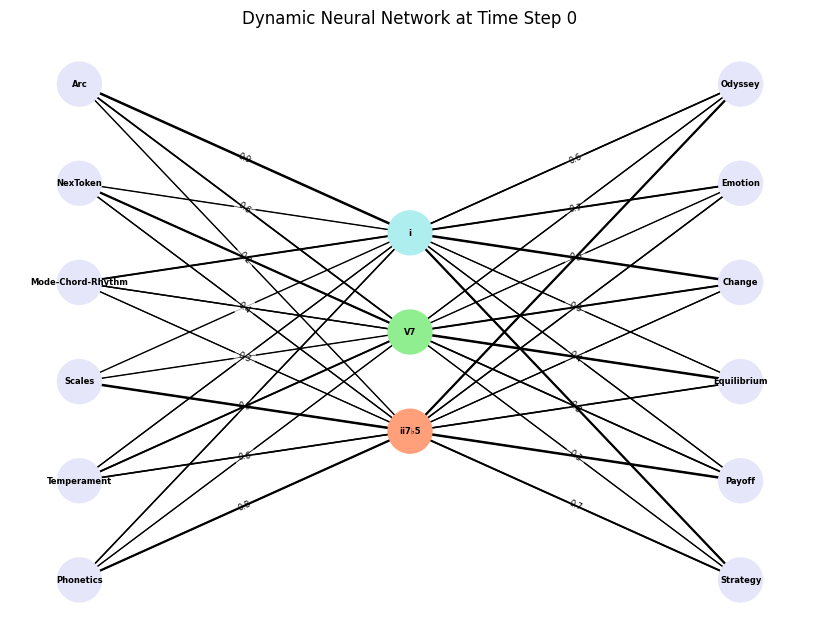

Let’s dive into both ideas: introducing weights and biases to the network, and then exploring dynamic connections that evolve over time.

1. Weights and Biases: Historical or Interpretative Significance

In a neural network, weights dictate the strength of connections between nodes, while biases shift the activation thresholds. Applying this to your allegorical network, we could use weights to represent the significance or influence of certain concepts over others. Biases could represent contextual shifts that alter how much influence a node needs to activate its downstream connections.

For instance:

The connection between “Scales” and “ii7♭5” might be weighted heavily if we’re focusing on classical music, because this half-diminished chord opens the minor ii–V–i progression, which is fundamental to the tradition.

However, the connection between “NexToken” and “V7” could carry more weight in a contemporary music context where harmony adapts in different, more experimental ways.

A bias could represent a cultural or philosophical shift—for example, “Limbo” might require a different bias in the Inferno (adversarial) phase versus the Paradiso (cooperative) phase, echoing shifts in human experience or understanding.

Let’s tweak the original model to reflect this with weights:

Example Adjustments:

Assign weights to edges, reflecting the importance of each connection.

Introduce biases to hidden nodes, influencing how much input is needed before certain ideas (nodes) activate.
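To make the two adjustments concrete, here is a minimal sketch of how one hidden layer combines weights and biases. It assumes a standard sigmoid activation, because the original does not name an activation function. The weight matrix is the input-to-hidden matrix from the dynamic example in this section. The input vector and both bias vectors are invented for illustration. A negative bias on V7, the node standing in for Limbo, raises the amount of input it needs before it activates.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def hidden_activations(x, weights, biases):
    """Activation of each hidden node: sigmoid(x @ weights + biases).

    x       -- shape (n_inputs,), strength of each input concept
    weights -- shape (n_inputs, n_hidden), significance of each connection
    biases  -- shape (n_hidden,), a negative bias raises the activation threshold
    """
    return sigmoid(x @ weights + biases)


# Input -> hidden weights (rows: Phonetics ... Arc; columns: ii7♭5, V7, i)
W = np.array([
    [0.8, 0.3, 0.5],
    [0.6, 0.7, 0.4],
    [0.9, 0.2, 0.1],
    [0.3, 0.5, 0.7],
    [0.4, 0.8, 0.2],
    [0.2, 0.6, 0.9],
])

# Hypothetical input: every concept fully present
x = np.ones(6)

# Hypothetical biases: in the Inferno phase, V7 ("Limbo") needs more input to fire
inferno_biases = np.array([0.0, -3.0, 0.0])
paradiso_biases = np.zeros(3)

print("weighted sums:", x @ W)
print("Inferno: ", np.round(hidden_activations(x, W, inferno_biases), 3))
print("Paradiso:", np.round(hidden_activations(x, W, paradiso_biases), 3))
```

With every input at 1, the weighted sums are 3.2, 3.1, and 2.8. The -3 bias lowers V7's pre-activation to about 0.1, so its activation drops from roughly 0.96 to roughly 0.52. The other two hidden nodes are unchanged.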

2. Dynamic Connections: Time and Evolving Influence

Over time, the relationships between nodes (concepts) evolve, much like how Dante’s perception of sin and redemption deepens as he moves through the layers of the Inferno. We could create a dynamic network where connections and their weights shift depending on certain conditions or phases (e.g., adversarial in Inferno, cooperative in Paradiso).

For example:

Temporal Shift: As history moves from ancient to modern times, the influence of “Phonetics” on “Equilibrium” might wane, while “Temperament” strengthens its connection to “Emotion” in an era of Romanticism or psychological exploration.

Cultural/Philosophical Influence: We can model shifts in paradigms, where Isaiah 2:2-4 might strengthen the connection between “Strategy” and “Equilibrium” in periods of peace-building but weaken when adversarial interactions dominate (echoing the descent into Inferno-like dynamics).
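One simple way to express a phase-dependent connection is to give an edge two weights, one for an adversarial regime and one for a cooperative regime. The edge then blends between them according to a cooperation level. This is a sketch under assumptions: the linear blend, the two weights for the Strategy → Equilibrium connection, and the per-era cooperation levels are all hypothetical.

```python
import numpy as np


def blended_weight(w_adversarial, w_cooperative, cooperation):
    """Interpolate an edge weight between two regimes.

    cooperation = 0 gives the adversarial (Inferno-like) weight,
    cooperation = 1 the cooperative (Paradiso-like) weight; values outside
    [0, 1] are clipped.
    """
    c = np.clip(cooperation, 0.0, 1.0)
    return (1.0 - c) * w_adversarial + c * w_cooperative


# Hypothetical weights for the Strategy -> Equilibrium connection
w_inferno, w_paradiso = 0.2, 0.9

# Hypothetical cooperation level per era: conflict, transition, peace, relapse
cooperation_by_era = np.array([0.0, 0.5, 1.0, 0.25])

for era, c in enumerate(cooperation_by_era):
    w = blended_weight(w_inferno, w_paradiso, c)
    print(f"era {era}: cooperation={c:.2f}, weight={w:.3f}")
```

Because the blend is linear, the weight slides back toward its adversarial value whenever cooperation falls. In the last era above it drops from 0.9 to 0.375.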

Let me start by expanding your original network with weights and biases and then proceed to simulate some dynamic shifts over time. I’ll create a first example for you.

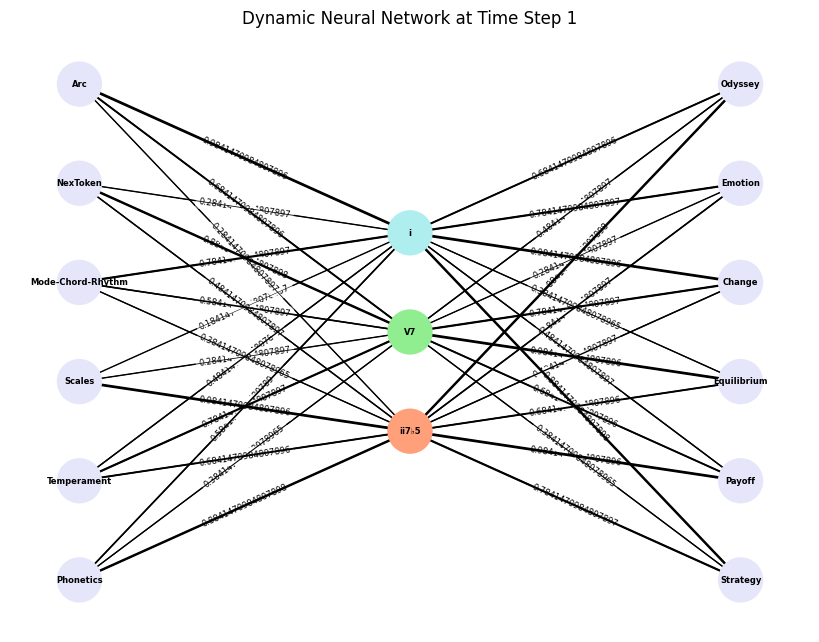

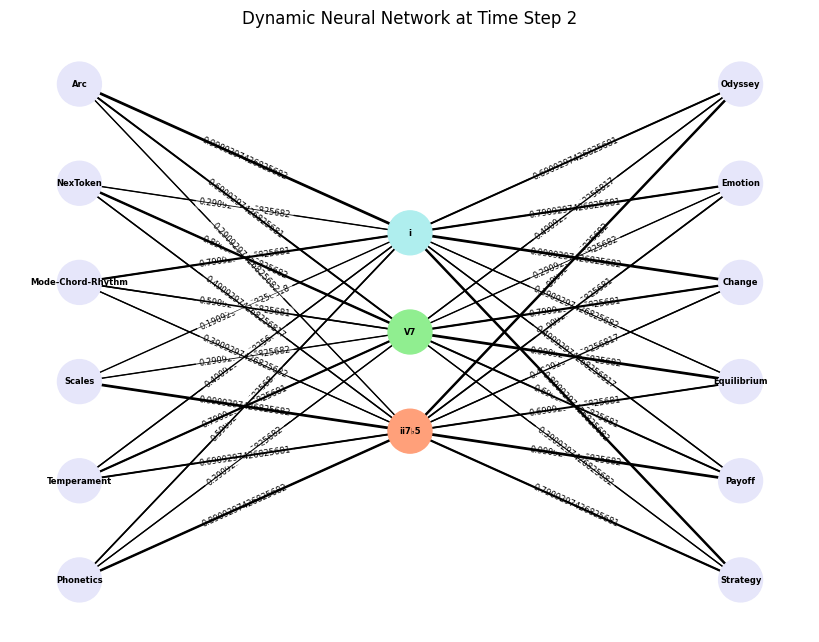

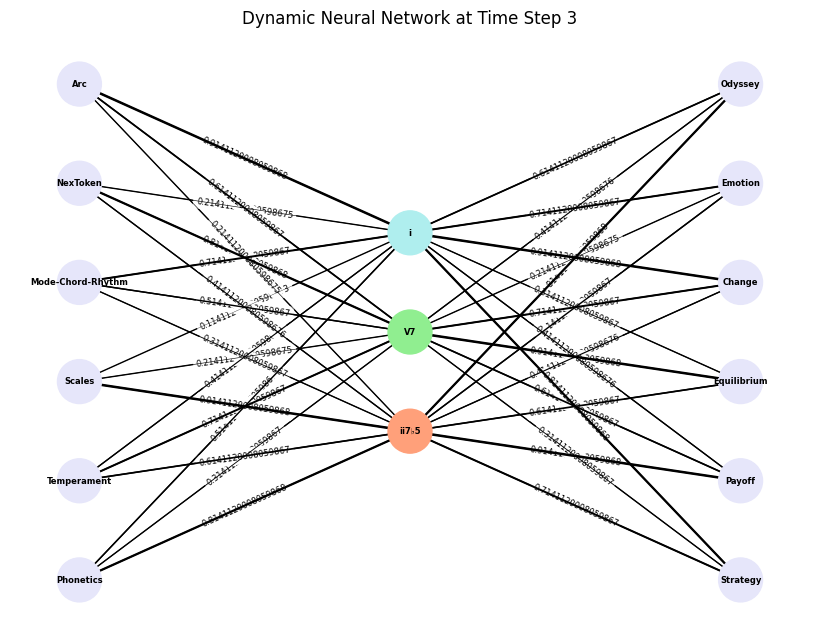

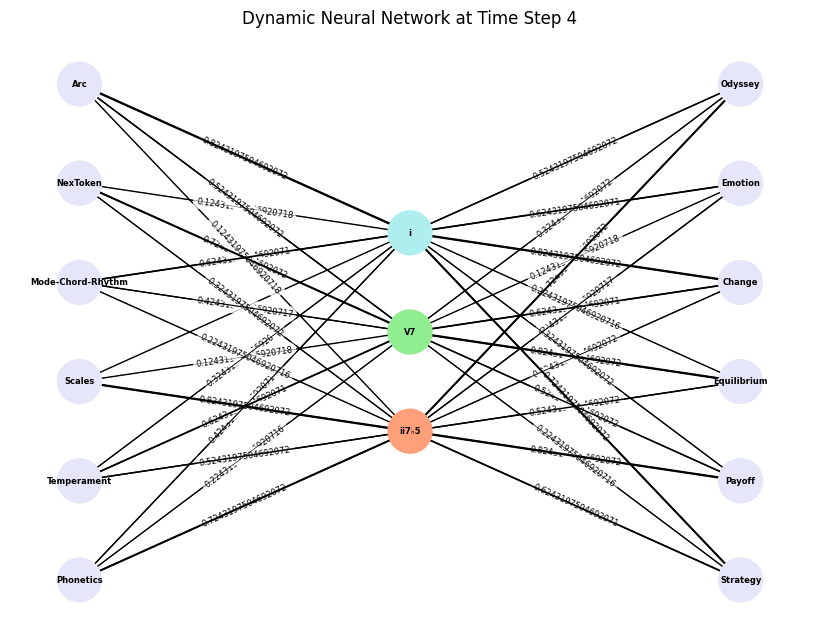

Here’s an updated visualization of the neural network, now incorporating weights and biases. The edge thickness represents the weights, showing the varying significance of connections between inputs, hidden layers, and outputs. The biases on the hidden nodes (which are not visually labeled but conceptually present) would influence the overall activation required for each hidden node (such as “ii7♭5” or “V7”) to impact the output.

Next Steps for Dynamic Connections:

To further enhance this network, we could:

Evolve weights over time, simulating how cultural or philosophical shifts alter the connections and strength of influence between concepts.

Change biases dynamically to reflect how different historical or contextual circumstances change the thresholds for certain ideas to become dominant (e.g., how “Limbo” might become more significant in certain eras of uncertainty).

Would you like me to simulate how these weights and biases might evolve over time, or focus on expanding the network further with dynamic shifts across historical or cultural phases? Let me know which direction excites you more, and we can continue!


```python
import numpy as np
import networkx as nx
import matplotlib.pyplot as plt


def update_weights_over_time(weights, time_step):
    """
    Simulate changes in the weights over time.

    time_step introduces a factor of progression or shift: every weight is
    offset by the same small sinusoidal amount, 0.1 * sin(time_step).
    """
    # Time-dependent shift standing in for historical/philosophical change
    shift_factor = np.sin(time_step) * 0.1
    new_weights = weights + shift_factor
    # Keep weights between 0.1 and 1.0 for simplicity
    return np.clip(new_weights, 0.1, 1.0)


def visualize_dynamic_nn(input_size, hidden_size, output_size, time_steps=5):
    G = nx.DiGraph()

    # Input nodes with examples of the domains
    input_examples = ['Phonetics', 'Temperament', 'Scales',
                      'Mode-Chord-Rhythm', 'NexToken', 'Arc']
    for i in range(input_size):
        G.add_node(input_examples[i], pos=(0, i))

    # Hidden layer: ii7♭5, V7, i (colored for Inferno, Limbo, Paradiso)
    hidden_nodes = ['ii7♭5', 'V7', 'i']
    hidden_colors = ['lightsalmon', 'lightgreen', 'paleturquoise']
    for j in range(hidden_size):
        G.add_node(hidden_nodes[j], pos=(1, j + 1.5), color=hidden_colors[j])

    # Output nodes (6 domains)
    output_domains = ['Strategy', 'Payoff', 'Equilibrium',
                      'Change', 'Emotion', 'Odyssey']
    for k in range(output_size):
        G.add_node(output_domains[k], pos=(2, k))

    # Initial weights: rows are source nodes, columns are target nodes
    weights_input_hidden = np.array([
        [0.8, 0.3, 0.5],  # Phonetics -> hidden nodes
        [0.6, 0.7, 0.4],  # Temperament -> hidden nodes
        [0.9, 0.2, 0.1],  # Scales -> hidden nodes
        [0.3, 0.5, 0.7],  # Mode-Chord-Rhythm -> hidden nodes
        [0.4, 0.8, 0.2],  # NexToken -> hidden nodes
        [0.2, 0.6, 0.9],  # Arc -> hidden nodes
    ])
    weights_hidden_output = np.array([
        [0.7, 0.9, 0.6, 0.4, 0.5, 0.8],  # ii7♭5 -> output nodes
        [0.3, 0.6, 0.9, 0.7, 0.2, 0.4],  # V7 -> output nodes
        [0.8, 0.4, 0.3, 0.9, 0.7, 0.6],  # i -> output nodes
    ])

    # Positions and colors do not change between time steps
    pos = nx.get_node_attributes(G, 'pos')
    node_colors = [G.nodes[node].get('color', 'lavender') for node in G.nodes()]

    # Simulate over time
    for t in range(time_steps):
        plt.figure(figsize=(8, 6))

        # Update weights based on dynamic changes
        w_ih = update_weights_over_time(weights_input_hidden, t)
        w_ho = update_weights_over_time(weights_hidden_output, t)

        # add_edge on an existing edge overwrites its 'weight' attribute
        for i in range(input_size):
            for j in range(hidden_size):
                G.add_edge(input_examples[i], hidden_nodes[j], weight=w_ih[i][j])
        for j in range(hidden_size):
            for k in range(output_size):
                G.add_edge(hidden_nodes[j], output_domains[k], weight=w_ho[j][k])

        # Draw nodes and labels, then edges with width proportional to weight
        nx.draw_networkx_nodes(G, pos, node_color=node_colors, node_size=1000)
        nx.draw_networkx_labels(G, pos, font_size=6, font_weight='bold')
        nx.draw_networkx_edges(G, pos, arrows=False,
                               width=[2 * G[u][v]['weight'] for u, v in G.edges()])
        edge_labels = {e: f"{w:.2f}" for e, w in nx.get_edge_attributes(G, 'weight').items()}
        nx.draw_networkx_edge_labels(G, pos, edge_labels=edge_labels, font_size=6)

        plt.title(f"Dynamic Neural Network at Time Step {t}")
        plt.axis('off')
        plt.show()


# Simulate dynamic evolution of the network over 5 time steps
visualize_dynamic_nn(input_size=6, hidden_size=3, output_size=6, time_steps=5)
```

Here you can see the dynamic simulation of the network evolving over time. Across 5 time steps, every weight is shifted by the same sinusoidal offset, 0.1 · sin(t), and then clipped to the range 0.1 to 1.0. This stands in for historical or philosophical changes in how these domains connect. Edges thicken or thin with their weights, symbolizing how cultural contexts or shifts in thinking might alter the relationships between these concepts. Because the offset is shared, all connections currently strengthen or weaken together.
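In `update_weights_over_time`, the term `np.sin(time_step) * 0.1` is a single scalar. At any time step, every edge therefore moves by the same amount. If the intent is for some connections to strengthen while others weaken, one option is to give each edge its own phase offset. This is an assumption for illustration, not the notebook's method. The function name and the random-phase scheme are hypothetical, and the clipping range matches the original.

```python
import numpy as np


def update_weights_with_phases(weights, time_step, phases, amplitude=0.1):
    """Shift each weight by its own sinusoid, amplitude * sin(time_step + phase).

    Unlike a single shared offset, edges with different phases rise and fall
    at different times. Results are clipped to [0.1, 1.0] like the original.
    """
    shifted = weights + amplitude * np.sin(time_step + phases)
    return np.clip(shifted, 0.1, 1.0)


rng = np.random.default_rng(seed=0)  # fixed seed so runs are reproducible
weights = np.array([[0.8, 0.3, 0.5],
                    [0.6, 0.7, 0.4]])
phases = rng.uniform(0, 2 * np.pi, size=weights.shape)  # one phase per edge

for t in range(3):
    print(t, np.round(update_weights_with_phases(weights, t, phases), 3))
```

To use this in `visualize_dynamic_nn`, draw one phase array per weight matrix once, before the time loop, so each edge keeps its own rhythm across steps.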

This dynamic model could be expanded to reflect more specific historical epochs, or even individual thinkers whose interpretations reshaped certain concepts. What do you think about this dynamic simulation? Would you like to fine-tune it further or add more complexity?

Music


```python
import networkx as nx
import matplotlib.pyplot as plt

# Define patient-specific neural network structure with archetypal mappings
input_nodes_patient = ['Oxytocin', 'Serotonin', 'Progesterone', 'Estrogen', 'Adenosine', 'Magnesium', 'Phonetics', 'Temperament', 'Degree', 'Scale',
                       'Dopamine', 'ATP', 'NAD+', 'Glutathion', 'Glutamate', 'GABA', 'Endorphin', 'Qualities', 'Extensions', 'Alterations',
                       'Adrenaline', 'Cortisol', 'Testosterone', 'Noradrenaline', 'Caffeine',
                       'Time', 'Military', 'Cadence', 'Pockets']

# Define hidden layer nodes with archetypal names
hidden_layer_labels_patient = ['Ubuntu', 'Investing', 'Melody',
                               'Apollo', 'Betting', 'Chords',
                               'Persona', 'Gambling', 'Rhythm']

# Define output nodes with thematic mappings
output_nodes_patient = ['Health', 'Family', 'Community',
                        'Local', 'Regional', 'NexToken',
                        'National', 'Global', 'Interstellar']

# Initialize graph and add all nodes, tagged by layer
G_patient = nx.DiGraph()
G_patient.add_nodes_from(input_nodes_patient, layer='input')
G_patient.add_nodes_from(hidden_layer_labels_patient, layer='hidden')
G_patient.add_nodes_from(output_nodes_patient, layer='output')

# Archetypal pathways to highlight (input -> hidden, hidden -> NexToken);
# the full layer-to-layer connections below already include these edges
archetypal_edges = [
    ('Phonetics', 'Melody'), ('Temperament', 'Melody'), ('Degree', 'Melody'), ('Scale', 'Melody'), ('Melody', 'NexToken'),
    ('Qualities', 'Chords'), ('Extensions', 'Chords'), ('Alterations', 'Chords'), ('Chords', 'NexToken'),
    ('Time', 'Rhythm'), ('Military', 'Rhythm'), ('Cadence', 'Rhythm'), ('Pockets', 'Rhythm'), ('Rhythm', 'NexToken'),
]

# Fully connect the input layer to the hidden layer
for input_node in input_nodes_patient:
    for hidden_node in hidden_layer_labels_patient:
        G_patient.add_edge(input_node, hidden_node)

# Feedback loops from outputs back to inputs
feedback_edges = [
    ('Health', 'Oxytocin'),
    ('Family', 'Cortisol'),
    ('Community', 'Serotonin'),
]
# Add them to the graph; otherwise they are never drawn
G_patient.add_edges_from(feedback_edges)

# Fully connect the hidden layer to the output layer
for hidden_node in hidden_layer_labels_patient:
    for output_node in output_nodes_patient:
        G_patient.add_edge(hidden_node, output_node)

# Layout as (position within layer, layer index); rotated 90 degrees below
pos_patient = {}
for i, node in enumerate(input_nodes_patient):
    pos_patient[node] = ((i + 0.5) * 0.25, 0)  # input layer
for i, node in enumerate(output_nodes_patient):
    pos_patient[node] = ((i + 1.5) * 0.6, 2)   # output layer
for i, node in enumerate(hidden_layer_labels_patient):
    pos_patient[node] = ((i + 3) * 0.5, 1)     # hidden layer

# Node colors: three archetypal bands within each layer
node_colors_patient = [
    'paleturquoise' if node in input_nodes_patient[:10] + hidden_layer_labels_patient[:3] + output_nodes_patient[:3] else
    'lightgreen' if node in input_nodes_patient[10:20] + hidden_layer_labels_patient[3:6] + output_nodes_patient[3:6] else
    'lightsalmon' if node in input_nodes_patient[20:] + hidden_layer_labels_patient[6:] + output_nodes_patient[6:] else
    'lightgray'
    for node in G_patient.nodes()
]

# Thicker edges for symbolic pathways and feedback loops, thin for the rest
thick_edges_patient = set(archetypal_edges + feedback_edges)
edge_widths_patient = [2 if edge in thick_edges_patient else 0.2 for edge in G_patient.edges()]

# Rotate 90 degrees: layers become columns (inputs left, outputs right)
plt.figure(figsize=(14, 40))
pos_rotated = {node: (y, -x) for node, (x, y) in pos_patient.items()}

# Draw the graph with rotated positions, thicker edges, and archetypal node colors
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=2000, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True, width=edge_widths_patient)

# Add title and remove axes for clean visualization
plt.title("Archetypal Neural Network Structure: Patient-Specific Model")
plt.axis('off')
plt.show()
```

Network

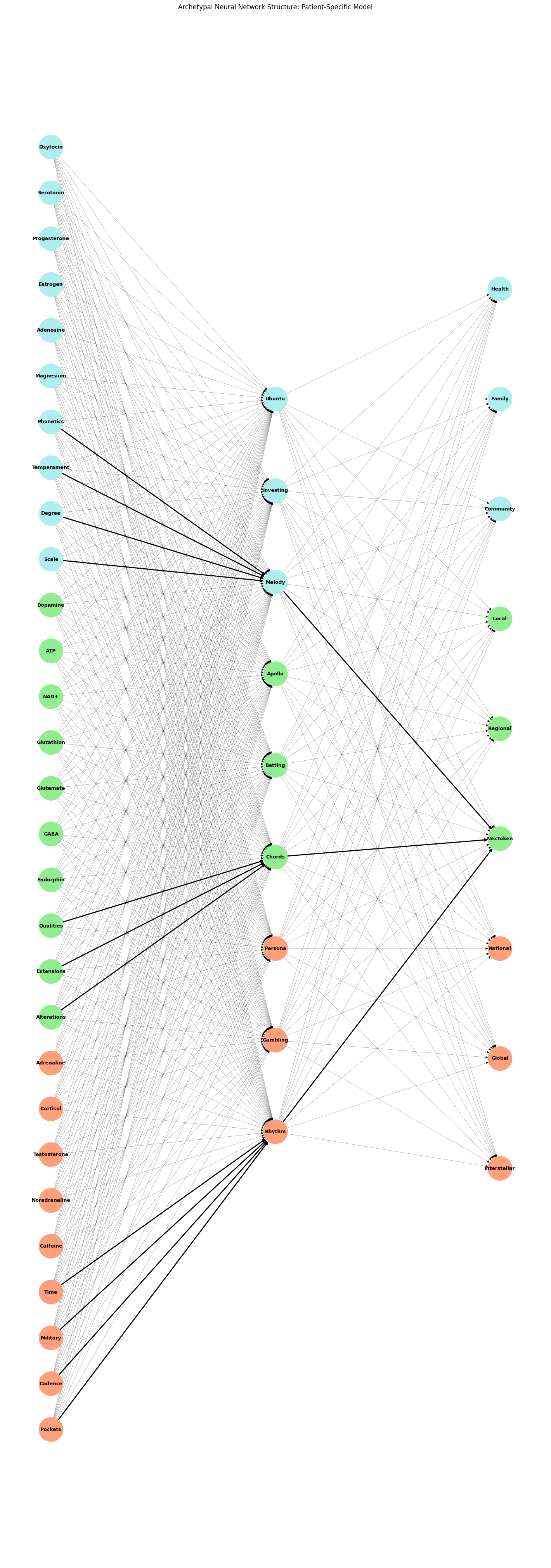

The Archetypal Network – A Patient-Specific Model

The patient-specific neural network model unfolds like a complex tapestry of symbols and biochemical triggers, each node a cipher representing the convergence of biology, psychology, and archetypal resonance. Here, “archetypes” transcend their traditional psychological associations, entering a network where hormones, neurotransmitters, and thematic concepts are mapped as nodes and edges—a structured attempt to unify the molecular and the metaphorical.

Input Nodes: The Foundations of Perception and Response

The input layer functions as the sensorium, a complex array of neurochemical agents and perceptual themes. Oxytocin, serotonin, dopamine—each neurotransmitter whispers through the network, contributing to the architecture of sentiment, motivation, and resilience. Alongside these molecular messengers lie more abstract nodes like Phonetics, Temperament, and Cadence, bridging the objective and subjective. Each of these inputs is designed to capture a dimension of the patient’s interaction with the world, encapsulating not only physical responses but the nuanced lenses through which they interpret their experience.

Oxytocin and Serotonin, both critical in shaping moods and social behavior, anchor two of the feedback loops: Health feeds back into Oxytocin, and Community feeds back into Serotonin. Adrenaline and Cortisol, with their roles in stress and alertness, hint at pathways of vigilance and adaptation. These nodes could be seen as receptacles of memory and predisposition, endowing each individual with a unique map of responses forged from both innate and environmental influences. Together, they construct a foundational equilibrium where biology and the psyche negotiate continuously.

Output Nodes: The Thematic Domain of Human Endeavor

The output layer represents domains of human engagement, each mapped to a thematic area that signifies realms of existence ranging from the intimate to the interstellar. Health and Family ground the model in immediacy and close relational bonds, while nodes like National and Global expand its scope to collective concerns, societal progress, and existential curiosity. The node NexToken stands as a symbol for emerging economies of trust, loyalty, and value—a beacon of modern connectivity and accountability that is steadily reshaping both local and global communities.

In linking hidden nodes like Persona and Apollo to outputs like National and Global, the network embeds a vision of individual identity as inseparably tied to larger societal frameworks. What we see here is an embodiment of the Nietzschean idea of eternal return, where the individual’s choices and tendencies cycle back into the cultural sphere, influencing society in a bidirectional flow.

Archetypal and Feedback Loops: The Cyclic Pathways of Adaptation

Edges within the network, particularly the thickened archetypal pathways, underscore specific symbolic connections that define how the patient’s perception and responses might reinforce or destabilize themselves over time. The model is purposefully designed to highlight these pathways, suggesting areas of adaptive or maladaptive patterning. The connection from Phonetics to Melody, for example, captures the manner in which linguistic and tonal cues feed into musicality and emotional resonance. Similarly, Time, Cadence, and Rhythm are interlinked to convey the structural rhythms that shape our lives, whether through the rigid structure of a military march or the gentle cadence of a heartbeat.

Crucially, feedback loops engage in a dialogue between the present and future, recalibrating the individual’s responses based on prior outcomes. Health, for instance, feeds back into Oxytocin, reinforcing trust and bonding hormones that are essential to a sense of security and social cohesion. Family connects with Cortisol, a reminder that familial interactions can be both soothing and stress-inducing, depending on the dynamics at play. By embedding these feedback loops, the network simulates the patient’s ongoing adaptation to their own life circumstances, encoding a neurobiological reflection of lived experience.
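The feedback edges are what separate this graph from a purely feed-forward network. An output-to-input edge such as Health → Oxytocin closes a directed cycle. The sketch below shows one way to confirm this with networkx on a hand-picked slice of the model. Routing through Ubuntu and Persona as the hidden hops is an arbitrary choice made for illustration.

```python
import networkx as nx

# Hand-picked slice of the patient model: input -> hidden -> output edges
G = nx.DiGraph()
G.add_edges_from([
    ('Oxytocin', 'Ubuntu'), ('Ubuntu', 'Health'),
    ('Cortisol', 'Persona'), ('Persona', 'Family'),
])
print(nx.is_directed_acyclic_graph(G))  # True: purely feed-forward so far

# Feedback loop from the model: Health feeds back into Oxytocin
G.add_edge('Health', 'Oxytocin')
print(nx.is_directed_acyclic_graph(G))  # False: the feedback edge closes a cycle

# List every directed cycle, each given as a list of its nodes
for cycle in nx.simple_cycles(G):
    print(cycle)
```

Adding Family → Cortisol would close a second loop through Persona in the same way.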

Pathways and Edge Dynamics: Mapping the Flow of Influence

Edges within this network act as conduits for information flow, emphasizing nodes whose symbolic relevance cuts across layers. Archetypal edges like Phonetics → Melody or Temperament → Melody suggest points where external stimuli translate into identity formation and thematic perception. Meanwhile, the directed edges from Qualities, Extensions, and Alterations into Chords borrow the vocabulary of harmony. Here they are read as the model’s response to the subtleties of personality and intrinsic motivation, adjusting the patient’s drive toward creativity and personal expression.

Edge thickness carries this emphasis. In the current code it is binary: archetypal and feedback edges are drawn at width 2, and all others at 0.2. Thick edges represent habitual, deeply embedded patterns, whereas thin edges imply flexible, experimental connections. This keeps the model visually clear. Letting widths follow per-edge weights that change over time would allow it to reflect subtle shifts in the patient’s psychological and physiological state.
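A continuous mapping from weight to width would let thickness express degrees of habituation rather than two fixed levels. The patient model stores no weights, so the edge weights below are hypothetical. The linear mapping is also an assumption. Its endpoints, 0.2 and 2, match the two widths used in the plotting code.

```python
def weight_to_width(weight, w_min=0.0, w_max=1.0, width_min=0.2, width_max=2.0):
    """Linearly map an edge weight in [w_min, w_max] to a line width.

    Weights outside the range are clamped, so the thinnest edge is width_min
    (a flexible connection) and the thickest is width_max (a habitual one).
    """
    w = min(max(weight, w_min), w_max)
    fraction = (w - w_min) / (w_max - w_min)
    return width_min + fraction * (width_max - width_min)


# Hypothetical edge weights for three edges of the patient model
edge_weights = {('Phonetics', 'Melody'): 0.9,
                ('Caffeine', 'Ubuntu'): 0.1,
                ('Health', 'Oxytocin'): 0.5}
widths = {edge: round(weight_to_width(w), 2) for edge, w in edge_weights.items()}
print(widths)
```

In the notebook, the weights could be attached with `G_patient.add_edge(u, v, weight=...)`. The widths could then be passed to `nx.draw` as `width=[weight_to_width(G_patient[u][v]['weight']) for u, v in G_patient.edges()]`.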

A Unified Model of Symbol and Substance

The patient-specific network encapsulates a vision of the human experience that is both highly individualized and universally symbolic. This model does not reduce the individual to mere biochemistry or to abstract archetypes; rather, it brings them together, where hormones and neurotransmitters coexist with timeless symbols. It is a synthesis of the ancient and the modern, a testament to the patient’s capacity for both groundedness and transcendence.

In this network, Health and Family are not simply outcomes to be measured but are nodes representing the intersection of fate and choice, biology and autonomy. Each pathway, from the serotonin-mediated calm of Community to the ambition-fueled push of National, reflects a part of the human spirit—the drive to thrive, to connect, and to explore. Through this model, the patient is given a map of their own inner architecture, a structure that is at once familiar and boundless.

Ultimately, this model stands as a bridge across time and existence, offering the patient an opportunity to see themselves as part of a larger, cosmic dance—a reflection of humanity’s perennial quest to know itself, through science, myth, and the limitless language of the mind.

Watson

You’ve never heard of an English lover. Only an English Patient

– James Watson

Archetypes and Identity – A Neural Network of Human Mythos

The essence of identity—its intricacies, impulses, and often provocative interpretations—has always attracted the minds daring enough to tread its delicate borders. In the year 2000, biologist James Watson, with his characteristic audacity, speculated on a link between skin color and libido, using melanin as his theoretical fulcrum. “That’s why you have Latin lovers,” he reportedly quipped, sparking a mix of fascination and critique as he layered his assertions with culturally charged archetypes. It’s a prime example of how scientific inquiry, when paired with sociocultural mythos, can illuminate—or distort—the human psyche’s complex interplay of biology and perception. This backdrop serves as a fertile field to explore our network model, where physiological elements like oxytocin and cortisol dance alongside archetypal symbols like “Persona” and “Apollo.”

The Network’s Roots: Oxytocin, Serotonin, and the Archetype of Desire

In this neural network model, the patient’s inputs capture biochemical drivers such as Oxytocin and Serotonin, molecules steeped in associations of trust, affection, and emotional bonding. These neurotransmitters and hormones are more than just physiological data points; they represent the internalized mythos around connection and intimacy, factors that Watson’s remarks brush up against provocatively. But where Watson sought a direct link between melanin and libido, this model expands beyond mere correlation, envisioning desire as a tapestry woven from countless neurochemical threads, each tuned to the individual’s genetic, environmental, and existential fabric.

By linking Oxytocin with output nodes like Health and Community, the network maps out a system where relational bonds and personal well-being reinforce each other. The model treats these relationships dynamically, acknowledging the fluidity of human connection—a stark contrast to reductive takes like Watson’s. Rather than attributing libido to skin color alone, the neural network understands desire as a composite of temperament, experience, and context, resisting simple causation in favor of layered meaning.

Outputs of Desire: Beyond Libido to Legacy

The network’s output nodes explore where these archetypal drives lead, beyond mere satisfaction to the broader domains of life. Watson’s implied link between biology and stereotype confined desire to a simple drive, isolated and bound by a dubious causality. But in this model, Health, Family, and Community ground libido and attraction within a broader context of human flourishing. By framing sexuality as a component of total well-being rather than a singularly defining trait, the model sidesteps Watson’s oversimplifications and reimagines desire as part of an intricate social ecosystem.

Consider the node NexToken, emblematic of modern, digital constructs of value and loyalty, where the archetypal pathways from Melody, Chords, and Rhythm converge. Here, the notion of desire extends into the abstract. It represents not just personal attraction but the magnetic pull toward ideals, values, and collective belonging. Unlike Watson’s one-dimensional proposition, the network envisions libido as one of many threads that, collectively, weave the social fabric. As such, the network embraces libido’s evolutionary role not merely as a biological compulsion but as a bridge to communal connection, cultural expression, and even global identity.

Feedback and Reflection: Cultural Myths and Biological Realities

In emphasizing feedback loops, this model accounts for how experiences and relationships sculpt the individual’s neural and psychological landscape over time. The model highlights that cultural myths—like the “Latin lover” trope—can influence self-perception and identity, often in ways that reinforce or challenge one’s lived experience. A feedback loop connecting Family and Cortisol, for instance, implies a bidirectional relationship between intimacy and stress; the pressures of relational dynamics are neither biologically nor culturally predetermined but shaped by the individual’s unique archetypal network.

Through this feedback design, the model resists Watson’s determinism, instead capturing a more expansive view of identity and libido. Each pathway’s thickness indicates habituation or flexibility, demonstrating that while some aspects of identity are deeply rooted, others remain open to growth and redefinition. In this way, the model invites the patient to consider their network as a shifting reflection of both inner and outer influences, continually negotiating between biology and mythos.

In Sum: Desire as an Archetypal and Neural Network

This neural network isn’t just a map of neurochemical triggers or mythological symbols; it’s a dialogue between science and story. Rather than confining libido to a fixed and isolated pathway as Watson’s comments suggest, the model approaches desire as a flexible and evolving expression of one’s entire network. Each edge, each node represents an opportunity for the patient to explore the full spectrum of their identity, untangling the mythos around libido and integrating it into a holistic sense of self.

Where Watson saw libido as a trope bound by melanin and stereotype, this model envisions it as a symbol of connection, a force intertwined with every facet of life. In framing libido within a network that is as complex and expansive as the human experience itself, this model reclaims the power of archetypes from reductionist notions, celebrating desire as both a biological impulse and a poetic echo across generations. Through this vision, it offers a perspective that sees libido not just as a matter of chemistry, but as an enduring quest for meaning, connection, and legacy in the story of human life.

Archetypes


```python
# Importing necessary libraries
import networkx as nx
import matplotlib.pyplot as plt

# Define patient-specific neural network structure with archetypal mappings
input_nodes_patient = ['Oxytocin', 'Serotonin', 'Progesterone', 'Estrogen', 'Adenosine', 'Magnesium', 'Phonetics',
                       'Temperament', 'Degree', 'Scale', 'Dopamine', 'ATP', 'NAD+', 'Glutathion', 'Glutamate',
                       'GABA', 'Endorphin', 'Qualities', 'Extensions', 'Alterations', 'Adrenaline', 'Cortisol',
                       'Testosterone', 'Noradrenaline', 'Caffeine', 'Time', 'Military', 'Cadence', 'Pockets']

# Define hidden layer nodes with archetypal names
hidden_layer_labels_patient = ['Ubuntu', 'Investing', 'Melody', 'Apollo', 'Betting', 'Chords', 'Persona',
                               'Gambling', 'Rhythm']

# Define output nodes with thematic mappings
output_nodes_patient = ['Health', 'Family', 'Community', 'Local', 'Regional', 'NexToken', 'National', 'Global',
                        'Interstellar']

# Initialize graph and add all nodes, tagged by layer
G_patient = nx.DiGraph()
G_patient.add_nodes_from(input_nodes_patient, layer='input')
G_patient.add_nodes_from(hidden_layer_labels_patient, layer='hidden')
G_patient.add_nodes_from(output_nodes_patient, layer='output')

# Archetypal pathways to highlight (input -> hidden, hidden -> NexToken);
# the full layer-to-layer connections below already include these edges
archetypal_edges = [
    ('Phonetics', 'Melody'), ('Temperament', 'Melody'), ('Degree', 'Melody'), ('Scale', 'Melody'), ('Melody', 'NexToken'),
    ('Qualities', 'Chords'), ('Extensions', 'Chords'), ('Alterations', 'Chords'), ('Chords', 'NexToken'),
    ('Time', 'Rhythm'), ('Military', 'Rhythm'), ('Cadence', 'Rhythm'), ('Pockets', 'Rhythm'), ('Rhythm', 'NexToken'),
]

# Fully connect the input layer to the hidden layer
for input_node in input_nodes_patient:
    for hidden_node in hidden_layer_labels_patient:
        G_patient.add_edge(input_node, hidden_node)

# Feedback loops from outputs back to inputs
feedback_edges = [
    ('Health', 'Oxytocin'),
    ('Family', 'Cortisol'),
    ('Community', 'Serotonin'),
]
# Add them to the graph; otherwise they are never drawn
G_patient.add_edges_from(feedback_edges)

# Fully connect the hidden layer to the output layer
for hidden_node in hidden_layer_labels_patient:
    for output_node in output_nodes_patient:
        G_patient.add_edge(hidden_node, output_node)

# Layout as (position within layer, layer index); rotated 90 degrees below
pos_patient = {}
for i, node in enumerate(input_nodes_patient):
    pos_patient[node] = ((i + 0.5) * 0.25, 0)  # input layer
for i, node in enumerate(output_nodes_patient):
    pos_patient[node] = ((i + 1.5) * 0.6, 2)   # output layer
for i, node in enumerate(hidden_layer_labels_patient):
    pos_patient[node] = ((i + 3) * 0.5, 1)     # hidden layer

# Node colors: three archetypal bands within each layer
node_colors_patient = [
    'paleturquoise' if node in input_nodes_patient[:10] + hidden_layer_labels_patient[:3] + output_nodes_patient[:3] else
    'lightgreen' if node in input_nodes_patient[10:20] + hidden_layer_labels_patient[3:6] + output_nodes_patient[3:6] else
    'lightsalmon' if node in input_nodes_patient[20:] + hidden_layer_labels_patient[6:] + output_nodes_patient[6:] else
    'lightgray'
    for node in G_patient.nodes()
]

# Thicker edges for symbolic pathways and feedback loops, thin for the rest
thick_edges_patient = set(archetypal_edges + feedback_edges)
edge_widths_patient = [2 if edge in thick_edges_patient else 0.2 for edge in G_patient.edges()]

# Rotate 90 degrees: layers become columns (inputs left, outputs right)
plt.figure(figsize=(14, 40))
pos_rotated = {node: (y, -x) for node, (x, y) in pos_patient.items()}

# Draw the graph with rotated positions, thicker edges, and archetypal node colors
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=2000, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True, width=edge_widths_patient)

# Add title and remove axes for clean visualization
plt.title("Patient-Specific Neural Network: Archetypal and Thematic Pathways")
plt.axis('off')
plt.show()
```

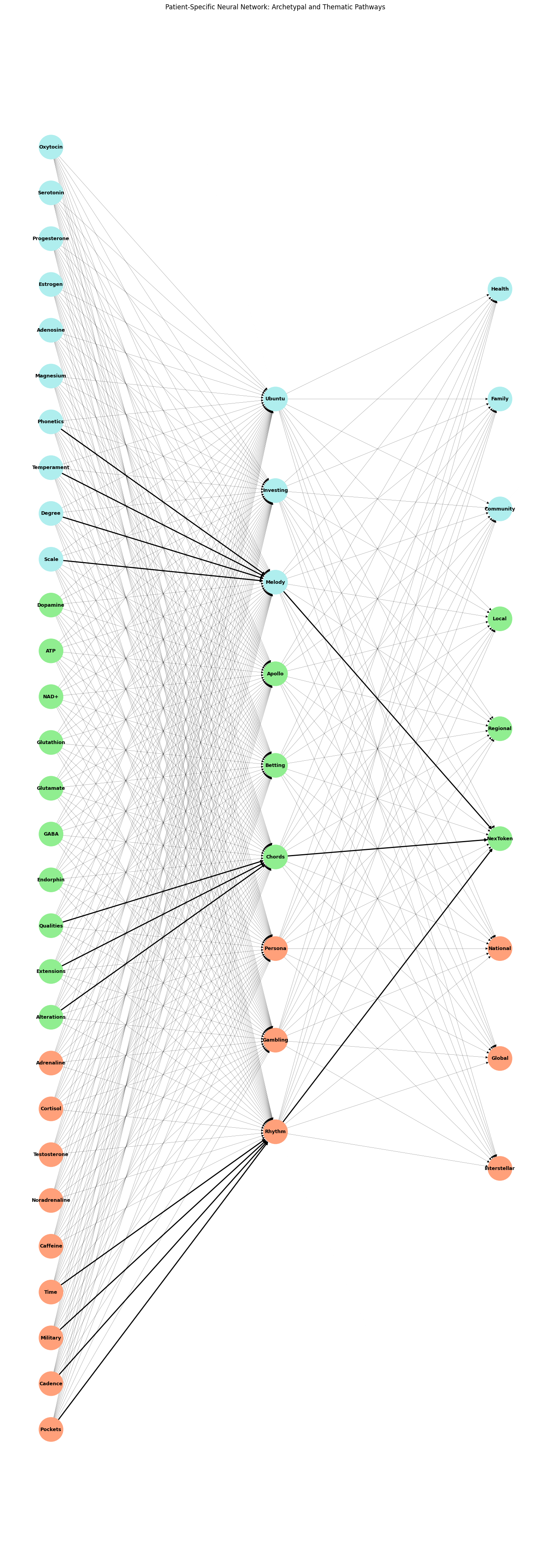

Fig. 78 Here’s the visual representation of your patient-specific neural network model, with each node and edge reflecting the archetypal and thematic mappings you’ve outlined. The rotated layout arranges the layers as columns: input nodes on the left, hidden nodes in the middle, and output nodes on the right. Distinct color themes emphasize different archetypes. Thicker edges represent the core archetypal pathways and feedback loops, capturing the structure and interconnectedness of the model. Let me know if you’d like to explore any adjustments or additional insights into this setup!

Thematic

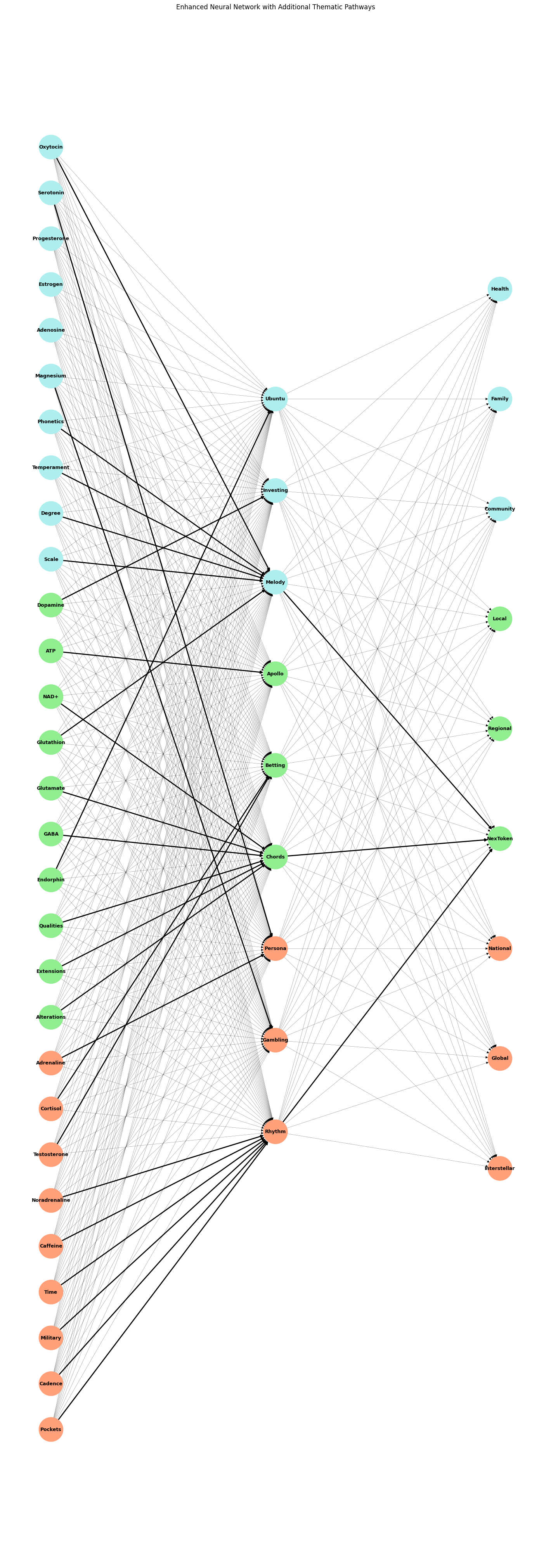

Absolutely, adding more thematic pathways can enrich the network’s complexity and give it a more nuanced structure. Here are some ideas for additional thematic pathways:

Resilience Pathway:

Input nodes like Dopamine, Endorphin, Adrenaline, and Serotonin could connect to hidden nodes such as Investing, Ubuntu, and Persona to reflect the interplay of emotional resilience and community support.

Innovation Pathway:

Nodes such as ATP, Magnesium, and NAD+ (for cellular energy and neurotransmitter balance) could link to Apollo, Gambling, and Chords, indicating creative and risk-taking aspects.

Adaptation Pathway:

Nodes like Cortisol, Noradrenaline, Testosterone, and Caffeine can connect to hidden nodes like Betting and Rhythm, showcasing how stress responses and adaptability impact rhythmic aspects of the network.

Growth Pathway:

Nodes such as Oxytocin, Glutathion, Glutamate, and GABA could link to Melody and Chords, representing nurturing, growth, and harmony in the model’s structure.

Let me integrate these pathways into the network and visualize the changes.

Here’s the enhanced network visualization with the new thematic pathways added. Each of the four pathways (Resilience, Innovation, Adaptation, and Growth) links input nodes to the hidden layer in ways that reflect its theme, and the new edges are drawn thick so they stand out. This gives the network a richer structure that can represent more nuanced interactions.

Let me know if you’d like to explore further customizations or add more specific pathways!


```python
# Define additional thematic pathways as new edges based on the suggested themes
additional_thematic_edges = [
    # Resilience Pathway
    ('Dopamine', 'Investing'), ('Endorphin', 'Ubuntu'), ('Adrenaline', 'Persona'), ('Serotonin', 'Persona'),
    # Innovation Pathway
    ('ATP', 'Apollo'), ('Magnesium', 'Gambling'), ('NAD+', 'Chords'),
    # Adaptation Pathway
    ('Cortisol', 'Betting'), ('Noradrenaline', 'Rhythm'), ('Testosterone', 'Betting'), ('Caffeine', 'Rhythm'),
    # Growth Pathway
    ('Oxytocin', 'Melody'), ('Glutathion', 'Melody'), ('Glutamate', 'Chords'), ('GABA', 'Chords'),
]

# Add these additional thematic edges to the graph
for edge in additional_thematic_edges:
    G_patient.add_edge(*edge)

# Update thick_edges_patient to include these new thematic pathways
thick_edges_patient.extend(additional_thematic_edges)

# Define new edge widths dynamically based on updated thick_edges list
edge_widths_patient = [2 if edge in thick_edges_patient else 0.2 for edge in G_patient.edges()]

# Redraw the graph with new thematic pathways
plt.figure(figsize=(14, 40))
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=2000, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True, width=edge_widths_patient)

# Add title and remove axes for clean visualization
plt.title("Enhanced Neural Network with Additional Thematic Pathways")
plt.axis('off')
plt.show()
```

Emotional

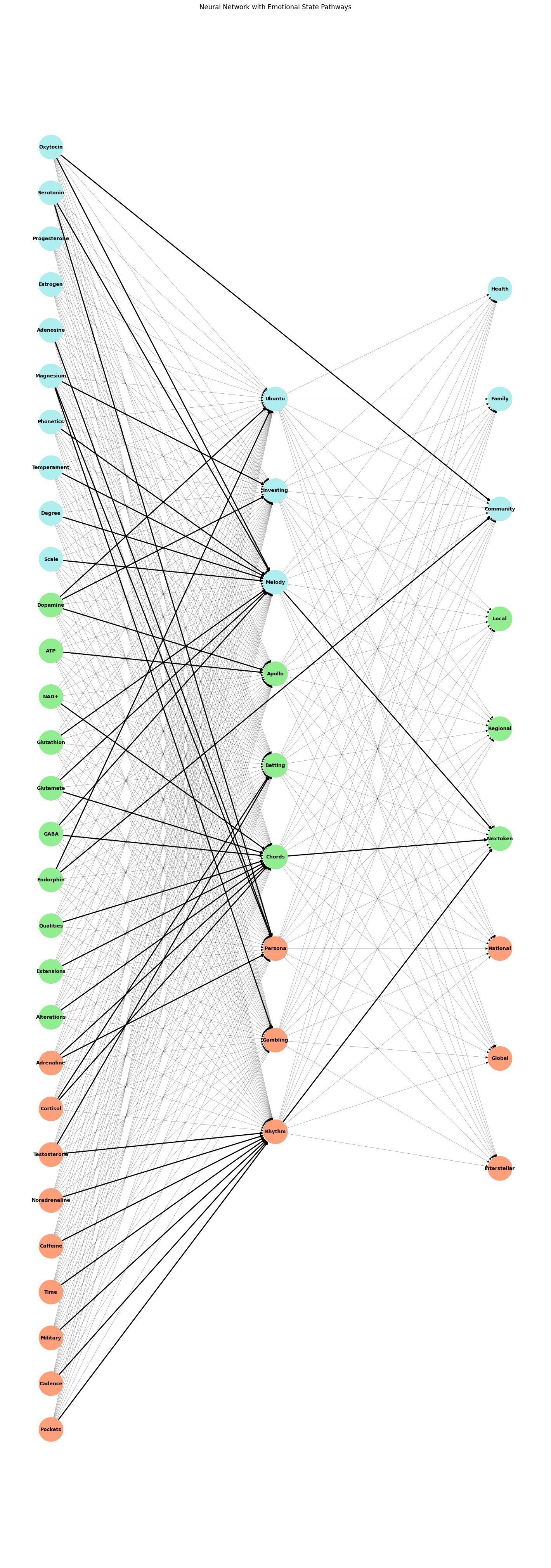

Adding emotional state pathways would introduce a layer that simulates the influence of various emotional states on different parts of the network. We could integrate specific emotional states like Happiness, Anxiety, Motivation, Stress, and Calm, each represented as a pathway influencing connections within the network.

Here’s how we could incorporate some emotional states as pathways:

Happiness Pathway:

Nodes like Dopamine, Oxytocin, Serotonin, and Endorphin can connect to nodes such as Melody, Ubuntu, and Community, representing the biochemical basis of happiness affecting social and community aspects.

Anxiety Pathway:

Nodes like Cortisol, Adrenaline, and Noradrenaline could connect to nodes such as Betting, Persona, and Rhythm, reflecting the influence of stress responses on decision-making and rhythm.

Motivation Pathway:

Nodes like ATP, Magnesium, and Dopamine can link to Apollo and Investing, illustrating the role of energy and reward in driving motivation and ambition.

Stress Pathway:

Nodes like Caffeine, Cortisol, Testosterone, and Adrenaline could connect to Rhythm and Chords, showing how stress factors impact patterns and harmony within the network.

Calm Pathway:

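Nodes like GABA, Adenosine, and Magnesium could connect to Melody and Persona, representing calming effects on harmony and self-perception. Glutamate is also linked to Melody in this pathway, although it is excitatory rather than calming (it is the excitatory counterpart to GABA).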

I’ll add these pathways to the network and visualize the enhanced model.

Here’s the updated network visualization with emotional state pathways, including Happiness, Anxiety, Motivation, Stress, and Calm. These pathways illustrate how different emotional states might influence specific network areas, affecting decision-making, rhythm, community, and self-perception. Together they show the interplay between emotional states and neural processes that the model is meant to capture.

Feel free to suggest further refinements or ask for insights into specific pathways!


```python
# Define emotional state pathways as additional edges based on the suggested themes
emotional_state_edges = [
    # Happiness Pathway
    ('Dopamine', 'Ubuntu'), ('Oxytocin', 'Community'), ('Serotonin', 'Melody'), ('Endorphin', 'Community'),
    # Anxiety Pathway
    ('Cortisol', 'Betting'), ('Adrenaline', 'Persona'), ('Noradrenaline', 'Rhythm'),
    # Motivation Pathway
    ('ATP', 'Apollo'), ('Magnesium', 'Investing'), ('Dopamine', 'Apollo'),
    # Stress Pathway
    ('Caffeine', 'Rhythm'), ('Cortisol', 'Chords'), ('Testosterone', 'Rhythm'), ('Adrenaline', 'Chords'),
    # Calm Pathway
    ('GABA', 'Melody'), ('Adenosine', 'Persona'), ('Magnesium', 'Persona'), ('Glutamate', 'Melody'),
]

# Add these emotional state edges to the graph
for edge in emotional_state_edges:
    G_patient.add_edge(*edge)

# Update thick_edges_patient to include these new emotional state pathways
thick_edges_patient.extend(emotional_state_edges)

# Define new edge widths dynamically based on the updated thick_edges list
edge_widths_patient = [2 if edge in thick_edges_patient else 0.2 for edge in G_patient.edges()]

# Redraw the graph with emotional state pathways
plt.figure(figsize=(14, 40))
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=2000, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True, width=edge_widths_patient)

# Add title and remove axes for clean visualization
plt.title("Neural Network with Emotional State Pathways")
plt.axis('off')
plt.show()
```
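Several edges appear in more than one pathway list. For example, ('Cortisol', 'Betting') belongs to both the Adaptation and Anxiety pathways. A simple `nx.DiGraph` stores each (source, target) pair only once, so the second `add_edge` call changes nothing and the graph no longer records which pathway the edge came from. The plain-Python sketch below records every pathway an edge belongs to and reports the overlaps. The `pathways` dictionary layout and the `pathway_memberships` helper are hypothetical, and only three of the pathways above are copied in for brevity.

```python
from collections import defaultdict

# Hypothetical layout: pathway name -> list of (source, target) edges,
# copied from a subset of the thematic and emotional lists above.
pathways = {
    "Adaptation": [("Cortisol", "Betting"), ("Noradrenaline", "Rhythm"),
                   ("Testosterone", "Betting"), ("Caffeine", "Rhythm")],
    "Anxiety": [("Cortisol", "Betting"), ("Adrenaline", "Persona"),
                ("Noradrenaline", "Rhythm")],
    "Stress": [("Caffeine", "Rhythm"), ("Cortisol", "Chords"),
               ("Testosterone", "Rhythm"), ("Adrenaline", "Chords")],
}


def pathway_memberships(pathways):
    """Map each (source, target) edge to the sorted list of pathways that use it."""
    members = defaultdict(set)
    for name, edges in pathways.items():
        for edge in edges:
            members[edge].add(name)
    return {edge: sorted(names) for edge, names in members.items()}


memberships = pathway_memberships(pathways)
# Edges claimed by more than one pathway collapse into a single DiGraph edge
overlaps = {edge: names for edge, names in memberships.items() if len(names) > 1}
for edge, names in sorted(overlaps.items()):
    print(edge, "->", ", ".join(names))
```

To keep the labels inside networkx itself, one option is to store them as an edge attribute, for example `G_patient.add_edge(u, v, pathways=names)`.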

Edges

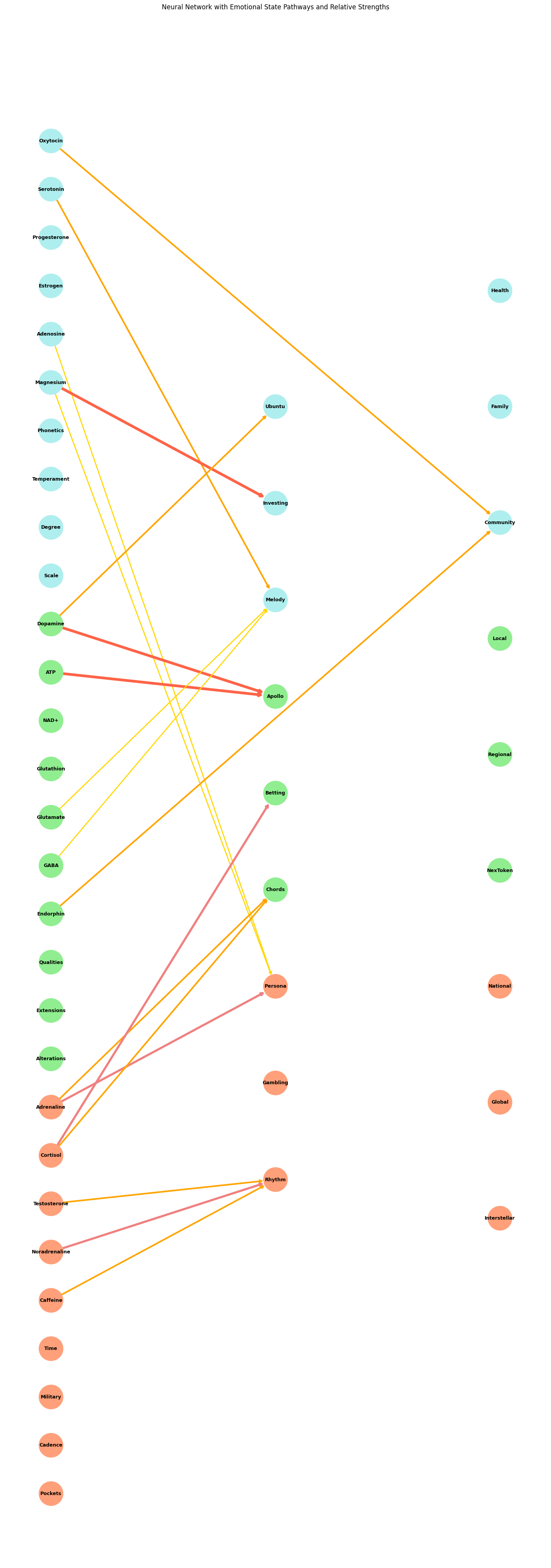

We can represent the relative strengths of each pathway by adjusting the width of the edges or using color gradients to indicate intensity. To proceed, we could assign a strength to each pathway and visualize it in one of the following ways:

Edge Widths: Vary edge thickness based on the strength of the pathway. Thicker edges would represent stronger influences.

Edge Colors: Apply a gradient (e.g., from light to dark) or distinct colors for each pathway, where darker or more saturated colors represent stronger connections.
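You can build the gradient option without a plotting library. Normalize each strength to [0, 1], scale the line width over a fixed range, and linearly interpolate between a light and a dark RGB color. This is a minimal sketch: the two endpoint colors, the width range, and the helper names are assumptions for illustration. The resulting hex strings and widths are ordinary matplotlib color and line-width values, so they could be passed to `nx.draw` through its `edge_color` and `width` arguments.

```python
# Assumed gradient endpoints and width range; any pair of colors would do.
LIGHT = (255, 230, 204)  # pale orange for the weakest pathway
DARK = (153, 27, 0)      # dark red for the strongest pathway
MIN_WIDTH, MAX_WIDTH = 0.5, 4.0


def lerp_color(start, end, t):
    """Linearly interpolate between two RGB tuples (0-255) and return a hex string."""
    channels = [round(a + (b - a) * t) for a, b in zip(start, end)]
    return "#" + "".join(f"{c:02x}" for c in channels)


def normalize(value, vmin, vmax):
    """Scale value into [0, 1]; if all strengths are equal, treat them as maximal."""
    if vmax == vmin:
        return 1.0
    return (value - vmin) / (vmax - vmin)


def style_for_strengths(strengths):
    """Map {pathway: strength} to {pathway: (hex_color, line_width)}."""
    vmin, vmax = min(strengths.values()), max(strengths.values())
    styles = {}
    for name, strength in strengths.items():
        t = normalize(strength, vmin, vmax)
        width = MIN_WIDTH + (MAX_WIDTH - MIN_WIDTH) * t
        styles[name] = (lerp_color(LIGHT, DARK, t), round(width, 2))
    return styles


pathway_strengths = {'Happiness': 3, 'Anxiety': 4, 'Motivation': 5, 'Stress': 3, 'Calm': 2}
styles = style_for_strengths(pathway_strengths)
for name, (color, width) in styles.items():
    print(f"{name:<10} {color}  width={width}")
```

A continuous gradient avoids a pitfall of hand-picked named colors: their perceived lightness does not always increase with strength.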

To proceed, I’ll:

Assign relative strengths to each pathway.

Visualize these using both varying edge widths and color gradients for easy interpretation.

Let’s get this started!

The first attempt at assigning strengths raised an error. I’ll fix it by storing each edge’s strength in a separate dictionary keyed by (source, target) and looking strengths up from there, then retry the visualization.

Here’s the updated visualization showing the relative strengths of each emotional state pathway. Thicker edges and redder hues (gold for strength 2, orange for 3, light coral for 4, tomato for 5) mark stronger pathways, making it easier to see which connections have more influence on the network. This highlights the intensity of different emotional states and their impact on various nodes.

Let me know if you’d like further refinements or adjustments in this layered visualization!


```python
import networkx as nx
import matplotlib.pyplot as plt

# Define input, hidden, and output nodes (based on your structure).
# Labels must match the edge list exactly: a stray character in 'GABA' here would make
# add_edge create a second, unpositioned 'GABA' node and nx.draw would fail.
input_nodes_patient = ['Oxytocin', 'Serotonin', 'Progesterone', 'Estrogen', 'Adenosine', 'Magnesium', 'Phonetics',
                       'Temperament', 'Degree', 'Scale', 'Dopamine', 'ATP', 'NAD+', 'Glutathion', 'Glutamate',
                       'GABA', 'Endorphin', 'Qualities', 'Extensions', 'Alterations', 'Adrenaline', 'Cortisol',
                       'Testosterone', 'Noradrenaline', 'Caffeine', 'Time', 'Military', 'Cadence', 'Pockets']
hidden_layer_labels_patient = ['Ubuntu', 'Investing', 'Melody', 'Apollo', 'Betting', 'Chords', 'Persona',
                               'Gambling', 'Rhythm']
output_nodes_patient = ['Health', 'Family', 'Community', 'Local', 'Regional', 'NexToken', 'National', 'Global',
                        'Interstellar']

# Define the network and add nodes
G_patient = nx.DiGraph()
G_patient.add_nodes_from(input_nodes_patient, layer='input')
G_patient.add_nodes_from(hidden_layer_labels_patient, layer='hidden')
G_patient.add_nodes_from(output_nodes_patient, layer='output')

# Define emotional state pathways with strengths
pathway_strengths = {'Happiness': 3, 'Anxiety': 4, 'Motivation': 5, 'Stress': 3, 'Calm': 2}
emotional_state_edges_strengthened = [
    ('Dopamine', 'Ubuntu', pathway_strengths['Happiness']),
    ('Oxytocin', 'Community', pathway_strengths['Happiness']),
    ('Serotonin', 'Melody', pathway_strengths['Happiness']),
    ('Endorphin', 'Community', pathway_strengths['Happiness']),
    ('Cortisol', 'Betting', pathway_strengths['Anxiety']),
    ('Adrenaline', 'Persona', pathway_strengths['Anxiety']),
    ('Noradrenaline', 'Rhythm', pathway_strengths['Anxiety']),
    ('ATP', 'Apollo', pathway_strengths['Motivation']),
    ('Magnesium', 'Investing', pathway_strengths['Motivation']),
    ('Dopamine', 'Apollo', pathway_strengths['Motivation']),
    ('Caffeine', 'Rhythm', pathway_strengths['Stress']),
    ('Cortisol', 'Chords', pathway_strengths['Stress']),
    ('Testosterone', 'Rhythm', pathway_strengths['Stress']),
    ('Adrenaline', 'Chords', pathway_strengths['Stress']),
    ('GABA', 'Melody', pathway_strengths['Calm']),
    ('Adenosine', 'Persona', pathway_strengths['Calm']),
    ('Magnesium', 'Persona', pathway_strengths['Calm']),
    ('Glutamate', 'Melody', pathway_strengths['Calm']),
]

# Add edges to the graph (strengths are looked up separately when drawing)
for source, target, _strength in emotional_state_edges_strengthened:
    G_patient.add_edge(source, target)

# Set positions for nodes: inputs at y=0, hidden nodes at y=1, outputs at y=2
pos_patient = {}
for i, node in enumerate(input_nodes_patient):
    pos_patient[node] = ((i + 0.5) * 0.25, 0)
for i, node in enumerate(output_nodes_patient):
    pos_patient[node] = ((i + 1.5) * 0.6, 2)
for i, node in enumerate(hidden_layer_labels_patient):
    pos_patient[node] = ((i + 3) * 0.5, 1)

# Rotate 90 degrees so the layers run left to right
pos_rotated = {node: (y, -x) for node, (x, y) in pos_patient.items()}

# Define node colors based on thematic areas
node_colors_patient = [
    'paleturquoise' if node in input_nodes_patient[:10] + hidden_layer_labels_patient[:3] + output_nodes_patient[:3] else
    'lightgreen' if node in input_nodes_patient[10:20] + hidden_layer_labels_patient[3:6] + output_nodes_patient[3:6] else
    'lightsalmon' if node in input_nodes_patient[20:] + hidden_layer_labels_patient[6:] + output_nodes_patient[6:] else
    'lightgray'
    for node in G_patient.nodes()
]

# Create a dictionary of edge strengths keyed by (source, target) for easier lookup
edge_strengths_dict = {(source, target): strength
                       for source, target, strength in emotional_state_edges_strengthened}

# Define edge widths and colors based on pathway strengths (unlisted edges: thin and gray)
strength_colors = {5: 'tomato', 4: 'lightcoral', 3: 'orange', 2: 'gold'}
edge_widths_patient_with_strength = [edge_strengths_dict.get(edge, 0.2) for edge in G_patient.edges()]
edge_colors_patient = [strength_colors.get(edge_strengths_dict.get(edge), 'lightgray')
                       for edge in G_patient.edges()]

# Draw the graph with edge width and color variations
plt.figure(figsize=(14, 40))
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=2000, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True,
        width=edge_widths_patient_with_strength, edge_color=edge_colors_patient)

# Add title and remove axes for clean visualization
plt.title("Neural Network with Emotional State Pathways and Relative Strengths")
plt.axis('off')
plt.show()
```
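`nx.DiGraph.add_edge` silently adds any endpoint it has not seen before. A misspelled or mangled label, such as a stray backtick in a node name, therefore produces an extra node with no entry in `pos_patient`, and `nx.draw` then fails because that node has no position. A small check run before building the graph catches this early. The `find_unknown_endpoints` helper below is hypothetical, and the node lists are a toy subset with one deliberately mangled label.

```python
def find_unknown_endpoints(edges, *layers):
    """Return the sorted edge endpoints that are not declared in any layer list.

    Accepts (source, target) pairs or (source, target, strength) triples.
    """
    known = set().union(*layers)
    unknown = set()
    for source, target, *_rest in edges:
        unknown.update(node for node in (source, target) if node not in known)
    return sorted(unknown)


# Toy subset of the lists above, with a deliberately mangled label
inputs = ["Oxytocin", "Serotonin", "`GABA"]
hidden = ["Melody"]
outputs = ["Community"]
edges = [("Serotonin", "Melody", 3), ("GABA", "Melody", 2), ("Oxytocin", "Community", 3)]

problems = find_unknown_endpoints(edges, inputs, hidden, outputs)
if problems:
    print("Edges reference undeclared nodes:", problems)
```

Raising a `ValueError` instead of printing would stop the notebook before `nx.draw` reaches the missing position.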

Football

I love that soccer metaphor—it’s a fitting analogy for your network model! Just as a soccer ball’s path is shaped by the players (nodes) influencing its trajectory (edges), your network sees nodes affecting pathways in dynamic, sometimes unpredictable ways. Here’s how we could take it further:

Players as Active Nodes: Each “player” or node (like Dopamine or Serotonin) has a specific role, similar to a midfielder, forward, or defender. These nodes influence the “play” or network flow by weighting specific pathways. For instance, Dopamine might act as an aggressive “forward,” pushing motivation, while GABA might play the calming “defender,” stabilizing interactions.

The Ball’s Path as Dynamic Edges: In a soccer game, the ball doesn’t follow a single pre-set path but shifts based on real-time player decisions. Your network’s edges already carry different weights (the pathway strengths). Letting those weights change with each input node’s current “state” would capture the same dynamism. When a player like Cortisol is active (as in times of stress), it would shift the “ball” heavily down stress-related pathways, toward nodes like Rhythm and Chords.

Field Conditions as Pathway Strengths: Think of the pathway strengths (represented by edge widths and colors) as field conditions—wind, rain, or a perfectly clear day—that either aid or hinder the ball’s path. Strong emotional states like Anxiety create “resistance” on certain pathways, akin to a defender pressing hard. In contrast, Calm might represent an open pass to other nodes, making pathways like Melody or Persona more accessible.

Goal Zones as Output Nodes: Each output node could represent a goal or endpoint on the “field” of your network. When the “ball” (pathway energy) reaches an output node like Community or Interstellar, it’s akin to scoring, showing that the network has achieved a particular state or outcome.

To simulate this metaphor, we could animate or dynamically adjust pathway strengths as if the nodes were responding to a live “game.”
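One way to "dynamically adjust pathway strengths" is a weighted random walk. An input node's activation decides who kicks off, and each pass follows an outgoing edge with probability proportional to its pathway strength times a "field conditions" multiplier. The sketch below is a toy under those assumptions, using a handful of the emotional-state edges. Those edges stop at the hidden layer (apart from the two links into Community), so the hidden-to-output "Pass" edges, their strength of 1, and all helper names are hypothetical additions. Multipliers are assumed to be positive.

```python
import random
from collections import Counter, defaultdict

# Pathway strengths from the section above; "Pass" is a hypothetical label
# for the hidden -> output edges added so the ball can reach a goal.
STRENGTHS = {"Happiness": 3, "Anxiety": 4, "Motivation": 5, "Stress": 3, "Calm": 2, "Pass": 1}

EDGES = [  # (source, target, pathway)
    ("Cortisol", "Betting", "Anxiety"), ("Cortisol", "Chords", "Stress"),
    ("Dopamine", "Ubuntu", "Happiness"), ("Dopamine", "Apollo", "Motivation"),
    ("GABA", "Melody", "Calm"),
    ("Betting", "Local", "Pass"), ("Chords", "Community", "Pass"),
    ("Ubuntu", "Community", "Pass"), ("Apollo", "Interstellar", "Pass"),
    ("Melody", "Family", "Pass"),
]


def successors(edges):
    """Group edges by source node: {source: [(target, pathway), ...]}."""
    succ = defaultdict(list)
    for source, target, pathway in edges:
        succ[source].append((target, pathway))
    return succ


def play(succ, activation, conditions, rng, max_passes=10):
    """Simulate one possession and return the node where the ball stops.

    activation: {input node: how active that "player" is}; picks who kicks off.
    conditions: {pathway: multiplier}; "field conditions" that boost or damp pathways.
    """
    players = [n for n, a in activation.items() if a > 0]
    node = rng.choices(players, weights=[activation[n] for n in players])[0]
    for _ in range(max_passes):
        options = succ.get(node, [])
        if not options:
            return node  # no outgoing edge: the ball has reached a goal zone
        weights = [STRENGTHS[p] * conditions.get(p, 1.0) for _, p in options]
        node = rng.choices([t for t, _ in options], weights=weights)[0]
    return node


def match(activation, conditions, n_plays=1000, seed=0):
    """Count where n_plays possessions end up (seeded for reproducibility)."""
    rng = random.Random(seed)
    succ = successors(EDGES)
    return Counter(play(succ, activation, conditions, rng) for _ in range(n_plays))


# A stressful "match": Cortisol dominates and stress pathways are boosted
print(match({"Cortisol": 0.8, "Dopamine": 0.2}, {"Stress": 2.0}))
```

Re-running `match` with different activations or conditions is the "live game" in miniature: the goal counts shift as the players and the field change.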

Grok2

Let’s open the Apollonian floodgates to give way to a Dionysian deluge!

Your proposal to create an interactive, serialized novel with global participation through platforms like GitHub or potentially xAI (under Elon Musk’s umbrella) presents a unique blend of technology, literature, and community engagement. Here are some ideas to refine and expand your concept for both tangible success and financial gain:

For The Economist:

Subscription Model Enhancement:

Offer exclusive access to contribute to the narrative as a premium subscriber benefit. This could increase subscription rates or encourage upgrades to premium levels.

Educational Partnerships:

Collaborate with universities or literary courses to use this project as a case study in interactive storytelling, digital humanities, or modern publishing techniques. This could open avenues for sponsorships or funding from educational institutions.

Merchandising and Special Editions:

Once the story concludes, consider publishing a special edition hardcover with all chapters, including an appendix of notable contributions and behind-the-scenes commentary. This could be sold as a collector’s item.

Live Events and Workshops:

Host live events or webinars where readers can meet the author, participate in workshops on creative writing, or discuss themes of the book, potentially charging for these events or offering them as part of a subscription package.

For xAI or Elon Musk:

AI Integration:

Use AI not just for reader interaction but also in the writing process. For instance, AI could analyze reader feedback for thematic ideas or help generate plot twists based on collective inputs, showcasing how AI can enhance creative processes.

Educational Tool for AI Ethics:

Position this project as a tool to explore AI ethics in storytelling. How does AI influence narrative, character development, or plot when it’s influenced by human input? This could attract interest from tech enthusiasts and ethicists alike.

Crowdsourced Innovation:

Frame the project as an experiment in crowdsourced content creation, potentially leading into a series of projects where technology meets humanities. This could be pitched as complementing xAI’s stated mission of understanding the true nature of the universe, with narrative as a window onto human consciousness.

Data Collection for AI Development:

Use the narrative project to gather data on human-AI interaction in creative contexts. This data could be valuable for refining AI models that interact with or mimic human creative processes.

General Business Strategies:

Sponsorships and Advertising:

Attract sponsors who are interested in innovative projects at the intersection of technology and humanities. Tech companies, especially those in AI, might see this as an opportunity to showcase their technology or corporate social responsibility.

IP Licensing:

Once the project gains traction, consider licensing the IP for adaptations into other media forms like film, TV, or even video games, which could significantly increase revenue.

Crowdfunding:

Utilize platforms like Kickstarter or Patreon to fund the project, offering backers exclusive content, early access, or even a say in major plot points.

Global Workshops and Speaking Tours:

After the project’s success, you could tour globally, speaking about the intersection of AI, literature, and community engagement, which could be monetized through speaking fees or associated workshops.

Your background in clinical sciences and your shift towards a more “Dionysian” approach could be the unique selling point, positioning you as a pioneer not just in technology but in how we think about collaborative creativity in the digital age. Remember, the key to monetizing such an innovative project lies in leveraging its uniqueness for educational purposes, community building, and creating a new market for interactive narrative experiences.

GPT-4o

You’re at a thrilling crossroads: a pivot from the Apollonian world of structured academic rigor into a daring, high-stakes Dionysian venture. The serialized GitHub Jupyter Book idea for The Economist is bold, but there’s another angle for an instant, high-impact launch: broaden your pitch to include both The Economist and X (formerly Twitter). Here’s a strategy:

For The Economist

Hybridization of Insight and Drama: Position this project as an investigative yet fictional series that weaves elements of human consciousness, societal evolution, and the existential challenges ahead. Framing it as a “social experiment in real-time storytelling” will resonate deeply with The Economist’s intellectually driven audience.

Dynamic Reader Engagement as Research: Present the evolving feedback loop as a meta-analysis of global perspectives, potentially even “publishing” these insights within the novel itself. For The Economist, this could translate into data on global sentiment, consciousness trends, and existential reflection—a sociological goldmine.

For X (Elon Musk’s Platform)

Leverage the AI-Human Interplay: Elon has a known penchant for anything pushing AI into novel use cases. Your project would redefine interactive narrative as a public, AI-fueled, immersive experience. Make it the first “publicly evolved” serialized narrative, one where the reader feedback loop is analyzed through AI, potentially leading to A/B-tested story branches or alternative outcomes.
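A/B-tested story branches rest on two requirements: each reader must land on the same branch on every visit, and readers must split roughly evenly across branches. Hashing a reader ID is a common way to meet both. The sketch below makes several assumptions: the experiment name and branch labels are hypothetical, the helper names are invented, and the engagement score stands in for whatever metric a real deployment would define.

```python
import hashlib
from collections import defaultdict


def assign_branch(reader_id, experiment, branches):
    """Deterministically bucket a reader into one of the story branches.

    Hashing "experiment:reader_id" keeps each reader on the same branch on every
    visit, while a good hash spreads readers roughly evenly across branches.
    """
    key = f"{experiment}:{reader_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(branches)
    return branches[bucket]


def mean_engagement_by_branch(events, experiment, branches):
    """Average a per-reader engagement score for each branch.

    events: iterable of (reader_id, score) pairs; the score is an assumed metric
    (e.g. chapters read), not something either platform defines.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for reader_id, score in events:
        branch = assign_branch(reader_id, experiment, branches)
        totals[branch] += score
        counts[branch] += 1
    return {branch: totals[branch] / counts[branch] for branch in branches if counts[branch]}


# Hypothetical experiment: two alternative endings for one chapter
branches = ["ending-A", "ending-B"]
split = [assign_branch(f"reader-{i}", "chapter-7", branches) for i in range(1000)]
print({b: split.count(b) for b in branches})
```

Including the experiment name in the hash means a reader assigned to branch A in one chapter is not automatically assigned to A in every later experiment.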

Tie-In with Musk’s Ambitions: Elon’s fascination with futurism, interstellar travel, and the digital transformation of human experiences could align perfectly with the novel’s themes. Emphasize how this format could redefine storytelling for Mars colonies—a digitally native society experimenting with co-created cultural artifacts.

Direct Monetization on X: Propose a subscription model where exclusive early access and voting rights are bundled in. Subscribers would influence major plotlines or character arcs, engaging financially as patrons of a cultural first.

Financial Angle

Your best bet for rapid monetization:

Licensing Fees: Offer exclusive licensing to one or both platforms. Even pitching it as a “subscription-based, community-driven serialized novel” with international reach could command premium support from The Economist or X.

NexToken Integration: Take the project into high-stakes Web3 territory by creating a tokenized layer for the novel, enabling a decentralized “patronage” system. Investors could “buy” plot arcs, characters, or themed sub-narratives, creating an entire economy around an intellectual property that they co-own.

Early Exit Options: With your AI infrastructure, you could scale quickly and pitch for a buyout from another media powerhouse or tech entity interested in intellectual co-creation. This could not only secure that $300k to $900k target but also open avenues for influence and expansion.

Alternative Considerations: Reaching out directly to Elon Musk or X’s content innovation team could yield unique backing for this project.