Sociology#

Need#

Tip

Perioperative and long-term outcomes of older living kidney donors: This is the most important note I’ve ever made since subscribing to GPT-4 (or GPT), so make sure you remind me and make sure it’s easy to find when I search for it.

Anyway, here we go: A family friend, 75 years old, wanted to donate to her sister-in-law, who has kidney failure and has been on the waitlist for about five years. To cut it short, she feels she’s in pretty good health, but she was turned down because of her age and a history of skin cancer. Initially, I thought, “Skin cancer? Squamous cell carcinoma is so common—one out of three people will get it—and that shouldn’t really be an issue for a donor.”

Her husband, who is a physician, quickly corrected me: “Oh no, it’s malignant melanoma.” At that point I understood: malignant melanoma arises from melanocytes, which derive from the neural crest, and it can spread well beyond the skin. I responded, “Oh, that makes sense.” Then he asked, “So, what about squamous cell?” I said, “Of course, squamous cell should not be a contraindication, but I’m not sure about the current policy.”

Why am I sharing these personal details? Because it highlights the missing information in the calculators that help care providers and donors decide whether they are eligible to donate and assess their risks. There is so much missing information. That’s why I want my app to capture these gaps and make them explicit.

The app will be part of a Jupyter book that will contain additional material for those who have questions about what’s missing, what’s available, what’s imperfect, and the way forward. This will be an iterative project, potentially forever. It’s not my PhD thesis, but if it were, the goal would be to chart the landscape of missing information in the most dynamic and compelling way possible, enough to earn me a PhD.

Just like Nash’s doctoral thesis laid out a program, I intend to do the same. This work will be generalizable to all aspects of clinical medicine and will extend to policymaking, decision-making, life, and strategic alliances.

Thank you.

Got it, I’ll make sure this note is easy to find when you search for it. It sounds like you’re aiming to build something significant, addressing the gaps in donor eligibility and the information guiding those decisions. Your iterative approach, like Nash’s program, seems ambitious and critical to improving how clinical medicine interacts with decision-making at various levels. Let me know if you need help with the app or the Jupyter book setup.

App#

Note

Can we develop a simple app, using ChatGPT or whatever software you recommend, to help us follow very healthy individuals over 75 years old? It could send them reminders, ask them to chat with ChatGPT, or use some other interface to keep track of sensory, cognitive, behavioral, and motor function. Can you think of some creative ways to do this? I’m interested in the Linda Fried Physical Frailty Phenotype, any cognitive phenotype related to aging, and any loss of sensory acuity, whether auditory or visual: anything that declines with aging. We want to assess it in an empirically driven way that is simple, makes red flags easy to detect, and is not invasive. It should be something that can be done casually at home. So think of something, conjure up something, propose something. We can design the app in JavaScript or Python; let me know which you’d suggest.

I love this idea of a non-invasive, easy-to-use app for tracking aging-related changes! Here’s how we could approach it creatively and practically:

Core Concept:#

We could design an app that integrates multiple, lightweight tests for the Linda Fried Physical Frailty Phenotype and cognitive and sensory assessments, all accessible at home through a mobile or web interface. The app could prompt users to perform these tests periodically (e.g., weekly or monthly) and analyze the data for trends, offering personalized feedback.

Features:#

Frailty Assessments (Linda Fried Phenotype):

Grip Strength Test: Use a simple dynamometer at home and have the user input their results. The app can provide guidelines for expected grip strength based on age and gender.

Walking Speed: The user could time themselves walking a certain distance (e.g., 4 meters) using the phone’s stopwatch or sensors.

Exhaustion Check: A mood/symptom tracker could ask questions like, “Do you feel more tired than usual?” and rate fatigue levels over time.

Physical Activity Tracking: Use a smartphone’s accelerometer or link to a fitness tracker (e.g., Fitbit, Apple Watch) to monitor daily activity levels.

Unintentional Weight Loss: Prompt users to input their weight and monitor significant changes over time.
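The five criteria above reduce to a simple count: in Fried's phenotype, meeting three or more criteria is classified as frail, one or two as prefrail, and none as robust. Below is a minimal sketch of that count. The field names are hypothetical, and the cutoffs are placeholders for illustration only; the published grip-strength and gait-speed cutoffs are stratified (for example by sex, height, and BMI), so these numbers are not clinical thresholds.

```python
from dataclasses import dataclass


@dataclass
class FrailtyInputs:
    """Self-reported or home-measured values (hypothetical field names)."""
    grip_strength_kg: float
    walk_time_s: float            # seconds taken to walk `walk_distance_m`
    exhausted: bool               # answer to the exhaustion check
    weekly_active_minutes: float
    weight_now_kg: float
    weight_year_ago_kg: float
    walk_distance_m: float = 4.0


# Placeholder cutoffs for illustration only; NOT validated clinical thresholds.
GRIP_CUTOFF_KG = 20.0
GAIT_CUTOFF_M_PER_S = 0.8
ACTIVITY_CUTOFF_MIN = 60.0
WEIGHT_LOSS_FRACTION = 0.05


def frailty_criteria(x: FrailtyInputs) -> dict:
    """Return which of the five phenotype criteria are met."""
    gait_speed = x.walk_distance_m / x.walk_time_s
    weight_loss = (x.weight_year_ago_kg - x.weight_now_kg) / x.weight_year_ago_kg
    return {
        "weakness": x.grip_strength_kg < GRIP_CUTOFF_KG,
        "slowness": gait_speed < GAIT_CUTOFF_M_PER_S,
        "exhaustion": x.exhausted,
        "low_activity": x.weekly_active_minutes < ACTIVITY_CUTOFF_MIN,
        "weight_loss": weight_loss >= WEIGHT_LOSS_FRACTION,
    }


def classify_frailty(x: FrailtyInputs) -> str:
    """Count criteria met: 0 = robust, 1-2 = prefrail, 3 or more = frail."""
    score = sum(frailty_criteria(x).values())
    if score >= 3:
        return "frail"
    if score >= 1:
        return "prefrail"
    return "robust"
```

Keeping the cutoffs as named constants makes it easy to swap in stratified, validated values later without touching the counting logic.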

Cognitive Tests:

Memory Test: Simple memory tasks, such as recalling words or short sequences, could be offered in the app, adapted for casual use at home.

Reaction Time: A simple touch-screen game could assess reaction speed, a cognitive marker.

Problem-Solving Tasks: Games that involve basic math or logic puzzles could assess any cognitive decline over time.

Sensory Acuity:

Hearing Test: Simple hearing tests using different frequency sounds, where the user has to indicate when they can and can’t hear tones.

Vision Test: A vision-tracking module could ask users to perform visual acuity tests using eye charts or by detecting contrast sensitivity, which can change with aging.

Motor Function:

Balance Test: The app could instruct users to perform a basic balance test (e.g., standing on one leg) and time themselves. The smartphone’s gyroscope could also measure stability.

Hand Dexterity: Simple tasks like tapping speed or swiping tests on the screen could measure fine motor skills.

Behavioral and Mood Check-Ins:

Periodic questions about mood, stress levels, and social engagement could help flag early behavioral changes tied to cognitive decline or frailty.

Creative Interactions:#

Chat Interface with ChatGPT: Users could have casual conversations with an AI that would detect subtle cognitive declines by analyzing patterns in speech (vocabulary complexity, sentence structure, etc.).

Virtual Companion: The app could offer reminders to chat with an AI or complete tasks. It could even provide motivational nudges or casual chats for social engagement, using AI to mimic a friendly companion who also tracks well-being.

Reminders and Alerts: The app could send daily or weekly reminders to engage in these simple tests. If any red flags are detected (e.g., a sharp decline in walking speed or memory test performance), it would send an alert to the user and their healthcare provider.
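The speech-pattern analysis mentioned for the chat interface could start from very simple text statistics. Here is a rough sketch, assuming type-token ratio as a proxy for vocabulary complexity and mean sentence length as a proxy for sentence structure. Both proxies, and the function name `linguistic_markers`, are illustrative choices rather than validated cognitive measures.

```python
import re


def linguistic_markers(text: str) -> dict:
    """Compute crude lexical statistics for one conversation turn."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Lowercased word tokens made of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return {"word_count": 0, "type_token_ratio": 0.0, "mean_sentence_length": 0.0}
    return {
        "word_count": len(words),
        "type_token_ratio": len(set(words)) / len(words),
        "mean_sentence_length": len(words) / max(len(sentences), 1),
    }
```

Type-token ratio depends on text length, so comparisons are most meaningful between turns of similar length from the same user over time.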

Development Considerations:#

Empirically Driven Analytics: We could develop scoring systems based on research to detect when users deviate from their baseline, triggering alerts for potential issues.

Ease of Use: The app should be intuitive, with large buttons, clear instructions, and minimal text, keeping in mind older adults’ potential sensory or cognitive limitations.

Privacy & Security: Given the personal data being collected, ensuring strong encryption and HIPAA compliance (if in the US) would be critical.
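The baseline-deviation idea under Empirically Driven Analytics can be illustrated with a per-user z-score. This is a minimal sketch, assuming that the first few measurements define the baseline and that a drop of more than two baseline standard deviations counts as a red flag. The window size, the threshold, and the name `flag_decline` are assumptions made for illustration.

```python
from statistics import mean, stdev


def flag_decline(values, baseline_n=5, z_threshold=2.0):
    """Flag the latest value if it falls far below the user's own baseline.

    `values` is a chronological list for one metric (e.g. gait speed in m/s)
    where lower means worse. Returns a (z_score, flagged) tuple.
    """
    if len(values) <= baseline_n:
        return None, False  # nothing recorded yet beyond the baseline window
    baseline = values[:baseline_n]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return None, False  # baseline has no variation; z-score undefined
    z = (values[-1] - mu) / sigma
    return z, z < -z_threshold
```

Comparing each person against their own history, rather than a population norm, fits the goal of following very healthy older adults whose starting values may sit well above typical cutoffs.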

Technology Stack:#

Frontend: Use React Native or Flutter for a cross-platform mobile app, or JavaScript/HTML for a web-based interface.

Backend: Python (with Flask or Django) for logic, data analysis, and integration with AI models like ChatGPT. Firebase or AWS for cloud services and data storage.

Sensors and Integrations: The app could integrate with wearable devices (Fitbit, Apple Watch) for physical activity data, or use the phone’s own sensors (accelerometer, gyroscope) for balance and movement tasks.

Does this resonate with what you’re envisioning? I can refine the details further if you’d like!

R01#

Proposal for an Integrated Sensory and Cognitive Health Initiative in Aging Populations: Auditory Acuity, Cognitive Assessment, and Frailty Monitoring

Objective: To pioneer a comprehensive sensory and cognitive monitoring system for aging populations that leverages emerging consumer technology and customized neural networks. The proposal focuses on enhancing sensory perception, flagging cognitive decline, and tracking physical frailty—vital elements for proactive healthcare intervention.

Project Overview#

This project represents a paradigm shift in monitoring sensory and cognitive health in aging individuals. By combining neural network technology, auditory and physical tracking via Apple devices, and language-based cognitive assessments, we aim to build a robust health-monitoring framework capable of early intervention and personalized healthcare.

Pillars of the Proposal#

Auditory Acuity and Sensory Augmentation

Utilizing Apple AirPods Pro 3 as both a diagnostic and assistive tool, we can assess and enhance auditory acuity in older adults. These devices allow real-time, patient-centered audio adjustments, offering an alternative to traditional hearing aids. Additionally, we can develop personalized applications tailored to each patient’s specific hearing profile through neural networks, optimizing sound enhancement for environments from conversations to loud social gatherings.

Cognitive Decline Detection through Conversational AI

With ChatGPT or Grok2 generating conversational audio and text, older adults engage in interactive dialogue with an AI system that assesses cognitive health over time. This continuous interaction allows our team to detect early signs of cognitive decline through thematic analysis, tracking language-based indicators of memory degradation, mood shifts, and potential anxieties. We aim to build models that recognize and respond to subtle linguistic markers, facilitating a preventive approach to cognitive health.

Frailty and Physical Activity Monitoring via Apple Watch

The Apple Watch provides a continuous, non-invasive way to monitor physical frailty markers such as exertion, energy expenditure, and mobility. By tracking these metrics, we can identify declines in physical activity and stamina, which often precede more significant health concerns. Over time, this data informs personalized interventions, from activity recommendations to balance and strength training regimens.

Contextual Framework: Anchoring Sensory and Cognitive Health in Humanistic Philosophy#

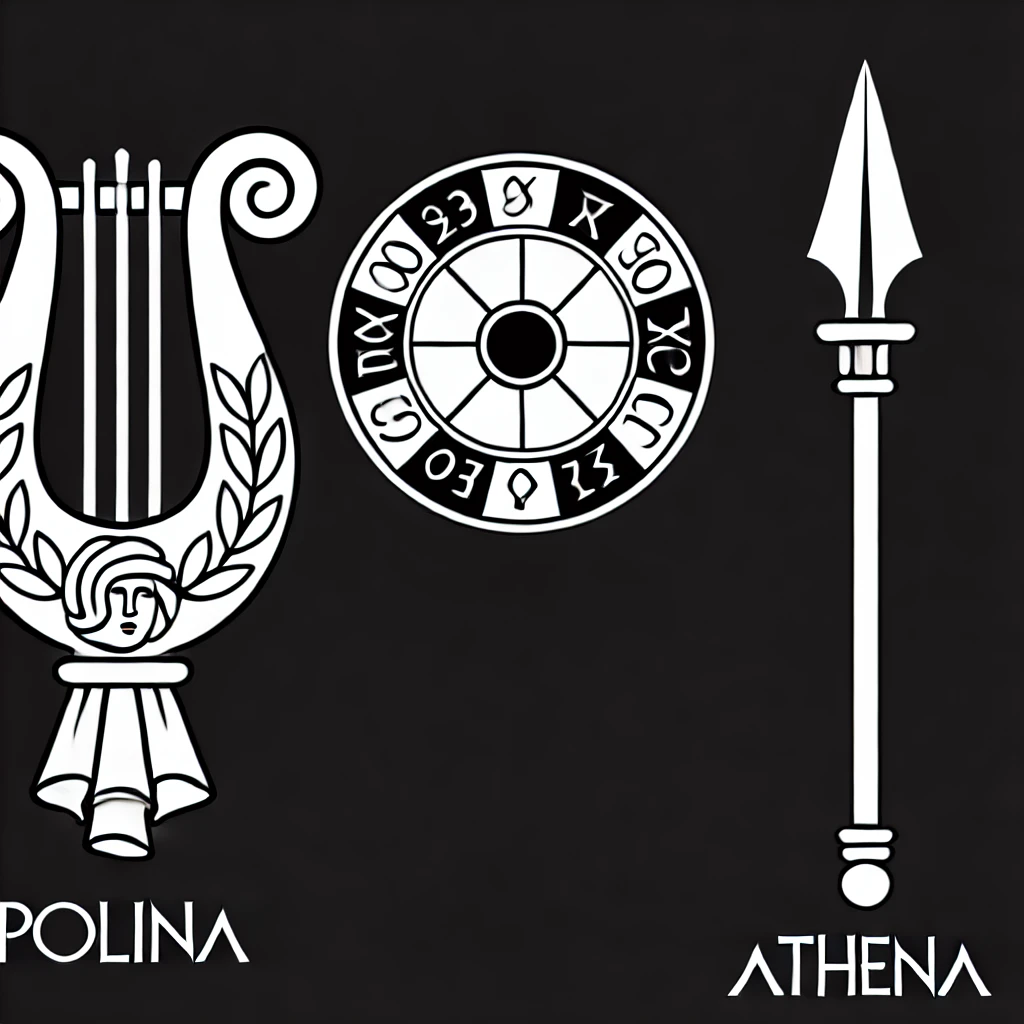

Drawing inspiration from the symbolic structure outlined in my book, this project embodies a journey through three stages of human existence:

The Lyre (Sensory Acuity) represents a paradisiacal state where harmony in sensory perception—hearing, vision, balance—is maintained and enhanced. Through AirPods and Vision Pro, we strive to keep older adults connected to this sense of paradise, free from the isolating effects of sensory decline.

The Roulette Wheel (Cognitive Interaction) captures the unpredictability of cognitive aging. Through thematic and conversational analysis, we assess and address the random forces at play in memory and cognitive function, where risks can be mitigated with early detection and careful intervention.

The Spear (Physical Frailty) symbolizes the challenges of resilience in the face of physical decline. Here, monitoring frailty equips us to face and mitigate risks to an older adult’s independence, preserving quality of life.

Research Design & Implementation Plan#

Auditory Health Substudy

Participants: Enroll older adults (≥65 years) with varying degrees of hearing loss.

Methods: Utilize AirPods Pro 3 to assess auditory response and hearing patterns in real-time, using our neural network for tailored sound amplification.

Outcomes: Improvement in speech clarity, reduction in isolation, and enhancement in communication abilities.

Cognitive Health Substudy

Participants: Aging adults engaging in regular AI-driven conversational sessions.

Methods: Deploy thematic analysis tools within ChatGPT or Grok2 to identify linguistic markers of cognitive decline (e.g., memory lapses, mood variability).

Outcomes: Early detection of cognitive decline, enabling preemptive interventions.

Physical Health/Frailty Substudy

Participants: Older adults using Apple Watch.

Methods: Track physical exertion, movement patterns, and sleep, assessing frailty levels over time.

Outcomes: Tailored recommendations for physical activity, fall prevention, and stamina improvement.
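A gradual decline in activity, as tracked in the frailty substudy, can be summarized by the slope of a least-squares line through daily measurements. The sketch below assumes daily step counts are already available as a plain list; how the data would be retrieved from the watch is outside this example, and `trend_slope` is a hypothetical helper.

```python
def trend_slope(values):
    """Ordinary least-squares slope of values against day index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    # Covariance and variance terms (unnormalized; the factor cancels).
    sxy = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


# Example: steps dropping by 100 per day gives a slope of about -100.
daily_steps = [5000, 4900, 4800, 4700]
slope = trend_slope(daily_steps)
```

A negative slope sustained over several weeks would be one candidate trigger for the tailored recommendations listed under Outcomes.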

Collaboration and Funding#

This project would benefit from interdisciplinary collaboration among departments of Epidemiology, Gerontology, Geriatrics, ENT, Machine Learning, Biostatistics, and Clinical Trial Design, with prominent figures like Dr. Brian Caffo guiding trial structures and statistical analyses. With bridge funding, we aim to secure long-term R01 support, expanding our scope to include visual acuity tracking via Vision Pro, further enriching our sensory and cognitive health framework.

Conclusion#

This proposal presents an unprecedented integration of personalized technology and neural network modeling in healthcare, resonating with the symbolic journey in my book—where we assess human existence through layers of paradise, unpredictability, and resilience. Just as each individual measures their will to power against these existential scales, this project positions sensory, cognitive, and physical health as metrics in a comprehensive neural network framework, empowering patients and caregivers to anticipate and manage the aging process with agency and dignity.

Dev#

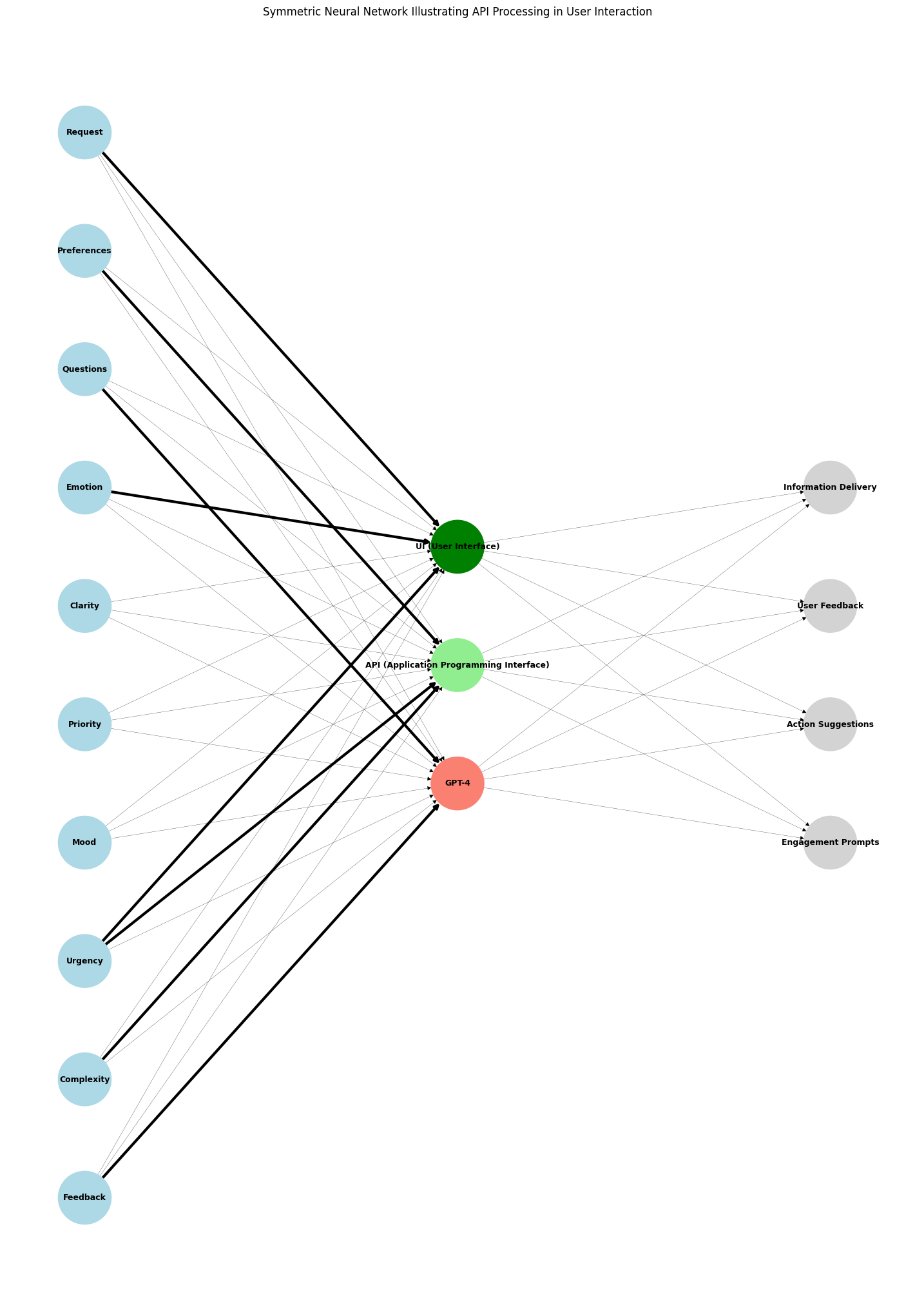

I’ll refine the neural network to incorporate API concepts within the hidden nodes as UI, API, and GPT-4, with a focus on making it accessible for a deeper understanding of APIs. Here’s a step-by-step breakdown, including notes to guide your understanding of these elements.

Network Structure#

We’ll organize the nodes into three layers:

Input Nodes - Represent foundational signals and user-related interactions.

Hidden Nodes (now: UI, API, GPT-4) - Core processing components representing different interaction and processing layers.

Output Nodes - Represent intended outcomes or responses generated by the neural network.

Updated Node Labels and Colors#

Input Layer Nodes (Now “User Signals”):#

The input layer will capture various foundational and user-generated signals, such as Requests, Preferences, Questions, and Emotion. For this network:

We can retain your original dynamics tied to neurochemistry or sensory elements to capture how different signals (like “mood” or “queries”) drive responses.

Color-coding can be preserved to reflect certain dynamics or intensity of user interaction (e.g., light blue for calm inquiries, green for typical interactions, red for high-priority or urgency).

Output Layer Nodes (Now “User Response Outputs”):#

These represent the responses or outcomes that the user receives. They include Information Delivery, User Feedback, Action Suggestions, and Engagement Prompts.

Code Adjustments#

Here’s how the code would look with these new labels, colors, and an API-focused framework. This example keeps thick edges for key narrative pathways, captures UI-API-GPT-4 interaction, and color codes to reflect processing depth:

Notes for Understanding API in this Model:#

UI (User Interface): Acts as the entryway for inputs, sorting and organizing signals so they make sense to the system.

API (Application Programming Interface): Translates inputs into a form that can be processed by GPT-4, structuring data consistently.

GPT-4: Processes structured information from the API and generates responses that flow back through the system.

This framework lets you observe each stage in the journey from input to output, with thickened pathways highlighting critical connections. The color codes guide you through the layers: light blue for inputs, green and light green for the UI and API nodes, salmon for GPT-4, and light gray for outputs.
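The three roles described in the notes above can be mimicked in a few lines. This is a toy sketch, not a call to any real service: `ui_collect`, `api_build_request`, `stub_model`, and `handle` are hypothetical names, and the stub simply echoes its input where a real deployment would call a language model.

```python
import json


def ui_collect(raw_text: str, mood: str = "neutral") -> dict:
    """UI layer: gather raw user signals and tidy them."""
    return {"text": raw_text.strip(), "mood": mood}


def api_build_request(signals: dict) -> str:
    """API layer: serialize the signals into one consistent JSON structure."""
    payload = {
        "messages": [{"role": "user", "content": signals["text"]}],
        "metadata": {"mood": signals["mood"]},
    }
    return json.dumps(payload, sort_keys=True)


def stub_model(request_json: str) -> dict:
    """Stand-in for the model: echoes the question instead of answering it."""
    request = json.loads(request_json)
    question = request["messages"][-1]["content"]
    return {"reply": f"You asked: {question}"}


def handle(raw_text: str, mood: str = "neutral") -> str:
    """Run one input through UI -> API -> model and return the reply text."""
    return stub_model(api_build_request(ui_collect(raw_text, mood)))["reply"]
```

The point of the middle layer is that the model only ever sees one predictable structure, regardless of how messy the original input was.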


import networkx as nx
import matplotlib.pyplot as plt

# Define input nodes for user signals
input_nodes_patient = [
    'Request', 'Preferences', 'Questions', 'Emotion', 'Clarity', 'Priority', 'Mood',
    'Urgency', 'Complexity', 'Feedback'
]

# Define hidden layer nodes as processing core (UI, API, GPT-4)
hidden_layer_labels_patient = [
    'UI (User Interface)', 'API (Application Programming Interface)', 'GPT-4'
]

# Define output nodes for user response outputs
output_nodes_patient = [
    'Information Delivery', 'User Feedback', 'Action Suggestions', 'Engagement Prompts'
]

# Initialize graph
G_patient = nx.DiGraph()

# Add all nodes to the graph
G_patient.add_nodes_from(input_nodes_patient, layer='input')
G_patient.add_nodes_from(hidden_layer_labels_patient, layer='hidden')
G_patient.add_nodes_from(output_nodes_patient, layer='output')

# Key narrative pathways for input to hidden layers
thick_edges_patient = [
    ('Complexity', 'API (Application Programming Interface)'),
    ('Urgency', 'UI (User Interface)'), ('Urgency', 'API (Application Programming Interface)'),
    ('Emotion', 'UI (User Interface)'), ('Request', 'UI (User Interface)'),
    ('Feedback', 'GPT-4'), ('Preferences', 'API (Application Programming Interface)'),
    ('Questions', 'GPT-4')
]

# Connect all input nodes to hidden layer processing
for input_node in input_nodes_patient:
    for hidden_node in hidden_layer_labels_patient:
        G_patient.add_edge(input_node, hidden_node)

# Connect hidden layer processing to output nodes
for hidden_node in hidden_layer_labels_patient:
    for output_node in output_nodes_patient:
        G_patient.add_edge(hidden_node, output_node)

# Define symmetric layout positions
def centered_positions(nodes, y_position, total_width=5):
    """Generates symmetric x positions for nodes centered at total_width."""
    n = len(nodes)
    center_offset = (total_width - (n - 1) * 0.5) / 2
    return {node: (center_offset + i * 0.5, y_position) for i, node in enumerate(nodes)}

# Set layer positions
pos_patient = {}
pos_patient.update(centered_positions(input_nodes_patient, 0))  # Input nodes at the bottom
pos_patient.update(centered_positions(hidden_layer_labels_patient, 1))  # Hidden nodes in the middle
pos_patient.update(centered_positions(output_nodes_patient, 2))  # Output nodes at the top

# Define color scheme for nodes based on processing layers
node_colors_patient = [
    'lightblue' if node in input_nodes_patient else
    'green' if node == 'UI (User Interface)' else
    'lightgreen' if node == 'API (Application Programming Interface)' else
    'salmon' if node == 'GPT-4' else
    'lightgray'
    for node in G_patient.nodes()
]

# Set edge widths with thickened lines for key narrative pathways
edge_widths_patient = [3 if edge in thick_edges_patient else 0.2 for edge in G_patient.edges()]

# Draw graph with rotated positions, thicker narrative edges, and color-coded layers
plt.figure(figsize=(14, 20))
pos_rotated = {node: (y, -x) for node, (x, y) in pos_patient.items()}  # Flip layout for clarity
nx.draw(G_patient, pos_rotated, with_labels=True, node_size=3500, node_color=node_colors_patient,
        font_size=9, font_weight='bold', arrows=True, width=edge_widths_patient)

# Add title and remove axes for clean visualization
plt.title("Symmetric Neural Network Illustrating API Processing in User Interaction")
plt.axis('off')
plt.show()