Resilience 🗡️❤️💰#

Peter Thiel’s supposed 2300 chess rating—whether apocryphal or real—is an intellectual flex, a signal of mastery in a domain of perfect information, where every move can be calculated, every outcome foreseen, at least in theory. Chess is a game where uncertainty only comes from the limits of human cognition, not from the structure of the game itself. Yet Thiel, for all his grandmaster aspirations, doesn’t seem to put much stock in chess when it comes to power. He rates Musk as a poor player, not because Musk lacks intelligence, but because Musk thrives in the chaotic, high-stakes world of asymmetric bets—where information is hidden, resources are scarce, and goals shift as fast as the game itself. Musk isn’t a chess player; he’s a gambler. But is he playing roulette or poker? That distinction defines the very nature of his empire.

You constructed a maze that he had to get through, then dug a moat

– Somerset

DOGE, in many ways, was a roulette wheel for Musk. He didn’t build it, he didn’t even truly understand it, but he saw a spin worth taking. The twenty-something DOGE engineers, pubescent in the sense that their technical and financial maturity came of age under Musk’s watchful yet reckless eye, weren’t masters of cryptographic innovation. They were interns of PayPal-era Silicon Valley, schooled in the art of momentum and meme economics rather than sound monetary policy. They were the ideological offspring of a system that bred risk-taking as a virtue, where financial systems were no longer constrained by the stuffy rigor of economic orthodoxy but instead became a chaotic fusion of venture capital, internet culture, and populist sentiment. DOGE was a play, but not a chess move. It was a spectacle, a roulette spin, where the house edge was replaced by the sheer unpredictability of mass psychology.

Fig. 7 What Exactly Is It About? Might it be about fixed odds, pattern recognition, leveraged agency, curtailed agency, or spoils for further play? Grants certainly are part of the spoils for further play. And perhaps bits of the other stuff.#

Thiel, however, doesn’t believe in roulette. His bets are calculated, structured like a poker hand, where the key isn’t randomness but the ability to read others. That’s why, when it comes to government efficiency, he would love nothing more than to create a transparent ranking system—like chess ratings—where every federal employee could be assessed, graded, and presumably discarded if their score wasn’t up to par. In theory, this is the ultimate game of open information, where resources, goals, and performance are clearly defined. But Musk, in his chaotic genius, understands that no such clarity exists. If Thiel was truly the grandmaster he imagines himself to be, he would see that government is not chess; it’s not even poker. It’s a multi-table, infinite-game casino where the rules are written and rewritten in real-time by the players who happen to have the most chips at any given moment.

And so Musk does what he does best—opts for a fork in the road. A forced decision, a strategic squeeze where the only options left are bad ones. The deferred resignation email to federal employees is nothing short of a Muskian trap, a DOGE-esque move in government form. The illusion of choice masks the deeper play: create an environment so chaotic, so uncertain, that the people themselves will fold. Resignation becomes a voluntary act, a checkmate delivered not through calculated positional play but through sheer force of instability. Musk is not interested in assessing efficiency; he is interested in making sure the game itself is played on his terms. If he cannot quantify government performance like an Elo rating, he will simply make it unworkable and claim victory when the pieces scatter.

Thiel may laugh at Musk’s lack of chess prowess, but Musk doesn’t care. Chess is an old-world game for people who believe in rules. Musk’s game is chaos. And in chaos, the house always wins.

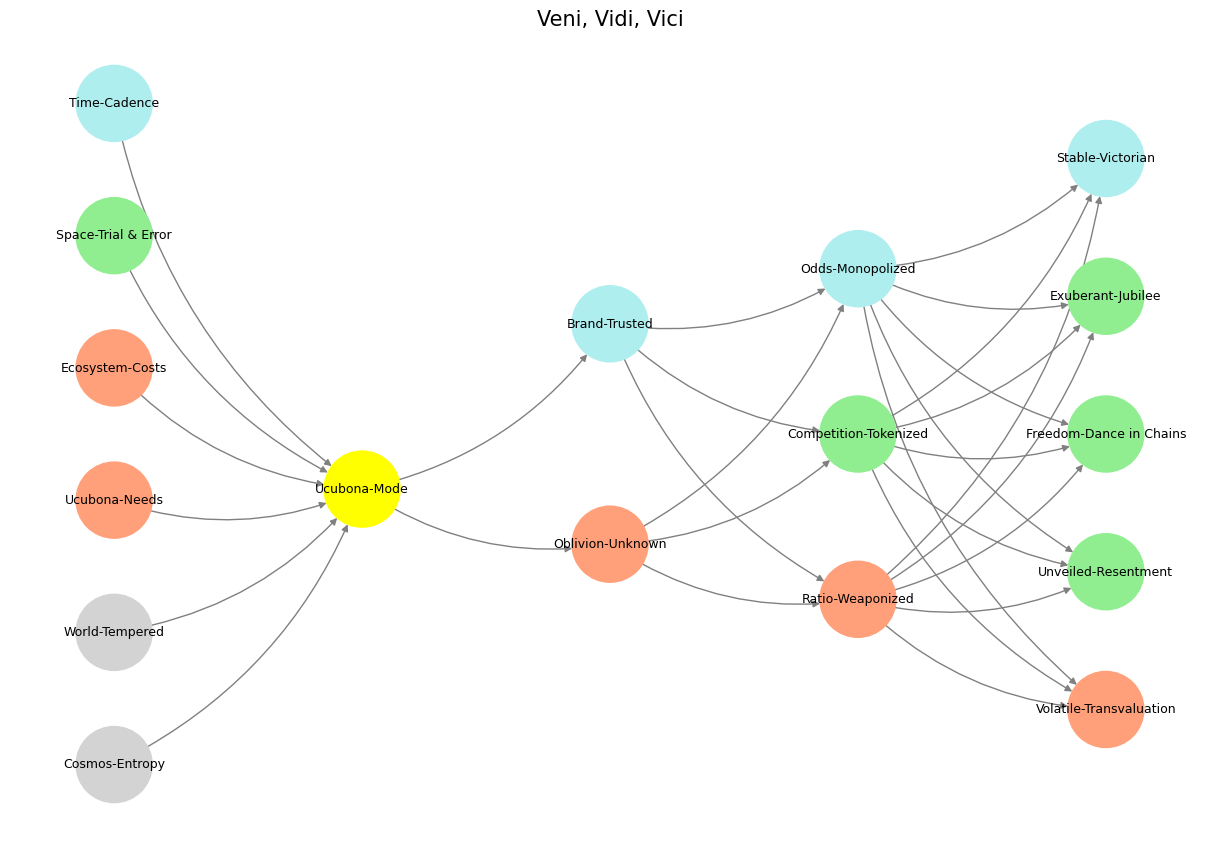

Show code cell source

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

# Define the neural network fractal

def define_layers():

return {

'World': ['Cosmos-Entropy', 'World-Tempered', 'Ucubona-Needs', 'Ecosystem-Costs', 'Space-Trial & Error', 'Time-Cadence', ], # Veni; 95/5

'Mode': ['Ucubona-Mode'], # Vidi; 80/20

'Agent': ['Oblivion-Unknown', 'Brand-Trusted'], # Vici; Veni; 51/49

'Space': ['Ratio-Weaponized', 'Competition-Tokenized', 'Odds-Monopolized'], # Vidi; 20/80

'Time': ['Volatile-Transvaluation', 'Unveiled-Resentment', 'Freedom-Dance in Chains', 'Exuberant-Jubilee', 'Stable-Victorian'] # Vici; 5/95

}

# Assign colors to nodes

def assign_colors():

color_map = {

'yellow': ['Ucubona-Mode'],

'paleturquoise': ['Time-Cadence', 'Brand-Trusted', 'Odds-Monopolized', 'Stable-Victorian'],

'lightgreen': ['Space-Trial & Error', 'Competition-Tokenized', 'Exuberant-Jubilee', 'Freedom-Dance in Chains', 'Unveiled-Resentment'],

'lightsalmon': [

'Ucubona-Needs', 'Ecosystem-Costs', 'Oblivion-Unknown',

'Ratio-Weaponized', 'Volatile-Transvaluation'

],

}

return {node: color for color, nodes in color_map.items() for node in nodes}

# Calculate positions for nodes

def calculate_positions(layer, x_offset):

y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))

return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

colors = assign_colors()

G = nx.DiGraph()

pos = {}

node_colors = []

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

positions = calculate_positions(nodes, x_offset=i * 2)

for node, position in zip(nodes, positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(colors.get(node, 'lightgray'))

# Add edges (automated for consecutive layers)

layer_names = list(layers.keys())

for i in range(len(layer_names) - 1):

source_layer, target_layer = layer_names[i], layer_names[i + 1]

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"

)

plt.title("Veni, Vidi, Vici", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 8 This is an updated version of the script with annotations tying the neural network layers, colors, and nodes to specific moments in Vita è Bella, enhancing the connection to the film’s narrative and themes:#