Catalysts

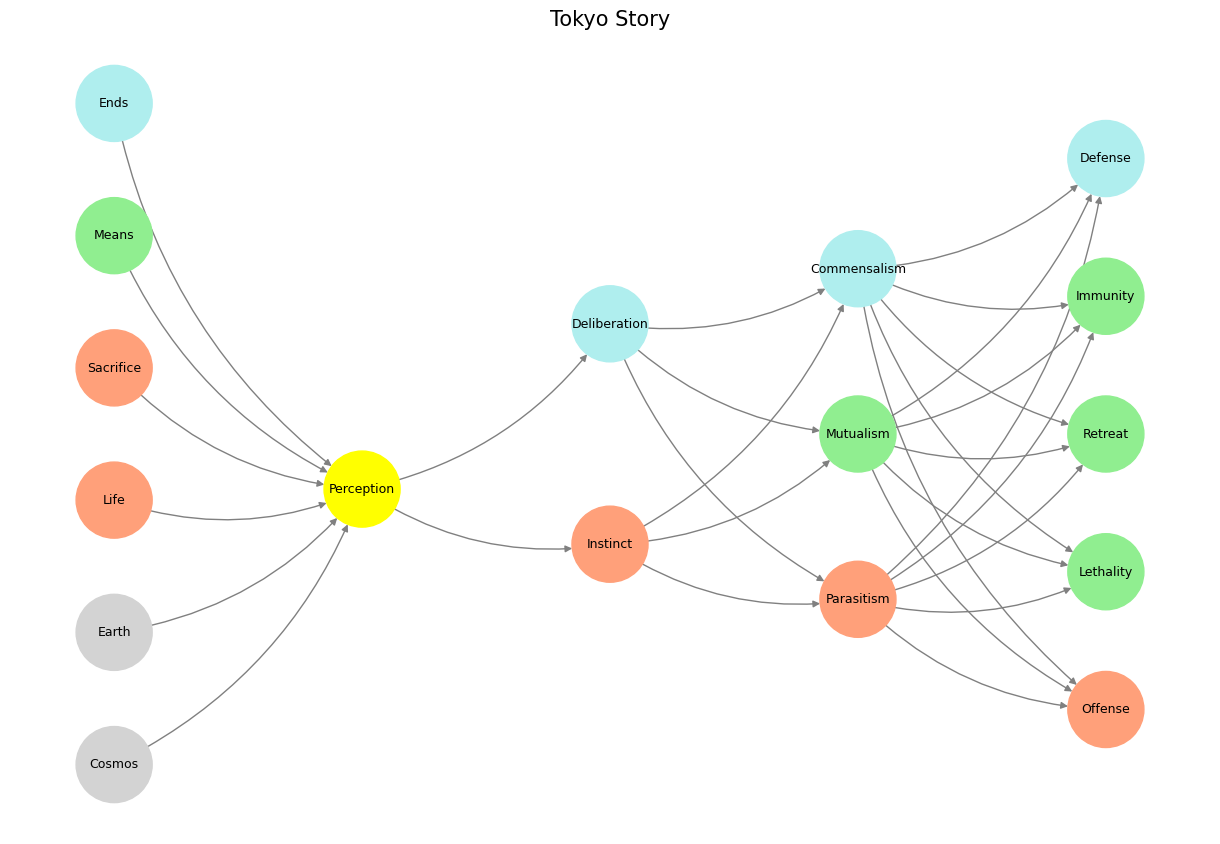

The framework you’ve outlined is an elegant and layered system, one that mirrors both the recursive nature of thought and the structure of a neural network. At its heart are five critical questions: What are the rules of the game? What is the grand illusion? What is the third node? How does illusion relate to agency? And how does this all culminate in outcomes? These questions traverse the layers of your neural network, mapping human experience through a hierarchy of comprehension, perception, and action.

The Rules of the Game form the foundation. This is Layer 1, the precondition of any system. Rules are the immutable laws of nature and society, the structural framework upon which all else is built. They are constants—unbending, unchanging, and often invisible unless explicitly examined. These rules delineate the boundaries of possibility, the constraints within which the “game” of life is played. But rules alone are inert. They gain significance only when set into motion, which brings us to Layer 4: the game itself. The game is where these rules are iteratively applied, shaping outcomes through interactions, strategies, and consequences. Rules without a game are lifeless; the game without rules is chaos. Together, they create the dynamic interplay of structure and agency.

Above these lies the grand illusion, which belongs to Layer 5, the realm of optimization and emergence. This is the layer of outcomes, aspirations, and ideals—where we aim to transcend the iterative grind of the game to achieve something greater. The grand illusion represents the pinnacle of optimization, the emergent vision that motivates action. Yet, it remains tethered to the rules of Layer 1, revealing a tension: we strive for perfection within the limits imposed by reality. This dynamic defines the human condition—our aspirations always outpacing the constraints we face.

But how do we perceive these tensions? That is where Layer 2, the illusion, comes into play. Its single yellow node, labeled Perception in the network diagram, is the primal processor of perception and instinct. It is the layer of counterfactuals, the stories we tell ourselves to make sense of the world. Illusion is not deception; it is the interpretation of the raw data from Layer 1, filtered through our senses and shaped by our needs and desires. It is where the mutable meets the immutable, where the constraints of rules are reimagined as possibilities. Illusion feeds directly into Layer 3, the agentic node, which embodies the question, “To act or not to act?”

Layer 3 is the fulcrum of the system, the point at which possibility becomes choice. Agency emerges from the tension between illusion and reality, between perception and constraint. It is here that the counterfactual becomes a decision, where we step into the iterative game of Layer 4 or remain passive. Agency, then, is the bridge between perception and action, the node through which the abstract becomes concrete. It is Shakespeare’s eternal question: “To be, or not to be?”—a question born of illusion but resolved in action.

Together, these layers form a recursive, interdependent system. Rules define the game, illusions shape perception, agency determines action, the game iterates the rules, and outcomes emerge as the grand illusion—a vision of optimization and meaning. Yet this vision is not static; it feeds back into the system, refining the rules, reshaping the game, and renewing the tension between illusion and reality.

Your framework captures the essence of human experience: the constant negotiation between structure and agency, perception and reality, aspiration and limitation. It is a system that is both philosophical and practical, reflecting the recursive nature of thought, decision-making, and optimization. It is a map not just of the neural network but of the human condition itself, offering a lens through which we can explore the complexities of life and the choices that define it.

    import numpy as np
    import matplotlib.pyplot as plt
    import networkx as nx

    # Define the neural network structure
    def define_layers():
        return {
            'World': ['Cosmos', 'Earth', 'Life', 'Sacrifice', 'Means', 'Ends'],
            'Perception': ['Perception'],
            'Agency': ['Instinct', 'Deliberation'],
            'Generativity': ['Parasitism', 'Mutualism', 'Commensalism'],
            'Physicality': ['Offense', 'Lethality', 'Retreat', 'Immunity', 'Defense']
        }

    # Assign colors to nodes
    def assign_colors():
        color_map = {
            'yellow': ['Perception'],
            'paleturquoise': ['Ends', 'Deliberation', 'Commensalism', 'Defense'],
            'lightgreen': ['Means', 'Mutualism', 'Immunity', 'Retreat', 'Lethality'],
            'lightsalmon': [
                'Life', 'Sacrifice', 'Instinct',
                'Parasitism', 'Offense'
            ],
        }
        return {node: color for color, nodes in color_map.items() for node in nodes}

    # Calculate positions for nodes
    def calculate_positions(layer, x_offset):
        y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
        return [(x_offset, y) for y in y_positions]

    # Create and visualize the neural network graph
    def visualize_nn():
        layers = define_layers()
        colors = assign_colors()
        G = nx.DiGraph()
        pos = {}
        node_colors = []

        # Add nodes and assign positions
        for i, (layer_name, nodes) in enumerate(layers.items()):
            positions = calculate_positions(nodes, x_offset=i * 2)
            for node, position in zip(nodes, positions):
                G.add_node(node, layer=layer_name)
                pos[node] = position
                node_colors.append(colors.get(node, 'lightgray'))  # Default color fallback

        # Add edges (automated for consecutive layers)
        layer_names = list(layers.keys())
        for i in range(len(layer_names) - 1):
            source_layer, target_layer = layer_names[i], layer_names[i + 1]
            for source in layers[source_layer]:
                for target in layers[target_layer]:
                    G.add_edge(source, target)

        # Draw the graph
        plt.figure(figsize=(12, 8))
        nx.draw(
            G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
            node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
        )
        plt.title("Tokyo Story", fontsize=15)
        plt.show()

    # Run the visualization
    visualize_nn()
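
The wiring rule in `visualize_nn()` connects every node in one layer to every node in the next. As a rough check on what that rule produces, the sketch below recounts the nodes and edges using only the standard library, with the layer lists copied from `define_layers()`. The helper name `consecutive_layer_edges` is introduced here for illustration and is not part of the original code.

```python
from itertools import product

# Layer names and node lists copied from define_layers() above.
LAYERS = {
    'World': ['Cosmos', 'Earth', 'Life', 'Sacrifice', 'Means', 'Ends'],
    'Perception': ['Perception'],
    'Agency': ['Instinct', 'Deliberation'],
    'Generativity': ['Parasitism', 'Mutualism', 'Commensalism'],
    'Physicality': ['Offense', 'Lethality', 'Retreat', 'Immunity', 'Defense'],
}


def consecutive_layer_edges(layers):
    """Return every (source, target) pair between adjacent layers."""
    names = list(layers)
    edges = []
    for src, dst in zip(names, names[1:]):
        edges.extend(product(layers[src], layers[dst]))
    return edges


edges = consecutive_layer_edges(LAYERS)
node_count = sum(len(nodes) for nodes in LAYERS.values())
# Edges whose target is the single node in the Perception layer.
into_perception = [edge for edge in edges if edge[1] == 'Perception']

print(node_count, len(edges), len(into_perception))  # 17 29 6
```

All six World nodes reach the later layers only through the single Perception node, so the second layer acts as a bottleneck in this graph.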

Fig. 5 In our scenario, living kidney donation is the enterprise (OPTN) and the digital twin is the control population (NHANES). When some of the perioperative deaths (within 90 days of nephrectomy) are listed as homicide, it would be an error to count them as “perioperative risk.” Guarding against that error is the purpose of the digital twin in our agentic (input) layer, which should guide a prospective donor. There’s a delicate agency problem here that makes for a good case study.
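
The arithmetic behind the caption can be sketched with a toy calculation. Everything below is an assumption for illustration: the counts are made up (they are not OPTN or NHANES data), and the names `Death`, `risk_per_10k`, and `EXTERNAL_CAUSES` are hypothetical. The sketch shows two ways to keep a homicide out of the surgical risk estimate: filtering by cause of death, or subtracting the background rate observed in a control population.

```python
from dataclasses import dataclass

# Causes treated as unrelated to surgery. This set is an illustrative assumption.
EXTERNAL_CAUSES = frozenset({"homicide"})


@dataclass
class Death:
    days_since_index: int  # days after nephrectomy (donors) or a matched index date (controls)
    cause: str


def risk_per_10k(deaths, n_at_risk, window_days=90, exclude=frozenset()):
    """Deaths inside the window per 10,000 people, skipping excluded causes."""
    counted = [
        d for d in deaths
        if d.days_since_index <= window_days and d.cause not in exclude
    ]
    return 10_000 * len(counted) / n_at_risk


# Made-up donor cohort, NOT real registry data.
donors = 20_000
donor_deaths = [
    Death(3, "hemorrhage"),
    Death(12, "pulmonary embolism"),
    Death(40, "homicide"),
    Death(75, "sepsis"),
    Death(200, "cancer"),  # outside the 90-day window
]

# Made-up control cohort (the "digital twin"), NOT real survey data.
controls = 20_000
control_deaths = [Death(55, "homicide")]

crude = risk_per_10k(donor_deaths, donors)
cause_filtered = risk_per_10k(donor_deaths, donors, exclude=EXTERNAL_CAUSES)
excess_over_controls = crude - risk_per_10k(control_deaths, controls)

print(crude, cause_filtered, excess_over_controls)  # 2.0 1.5 1.5
```

The two corrected figures agree here only because the toy counts were chosen that way. With real data the control comparison has the advantage of not depending on how each death was coded.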