Traditional

In the beginning was the word—not merely a sequence of sounds, but the very architecture of meaning, the force that shapes reality itself. Language is what separates man from all other mammals, elevating our cognition beyond mere instinct into the realm of abstraction, culture, and technology. Every structure of our metaphysics, from theology to physics, is inconceivable without the richness of language. It is not simply a tool but the foundation upon which we construct knowledge, transmit memory, and impose order upon the chaos of experience. Without language, there is no thought as we understand it, no science, no art—only a formless perception of the world, unshaped by the scaffolding of syntax and grammar.

🪙 🎲 🎰 🔍 👁️ 🐍

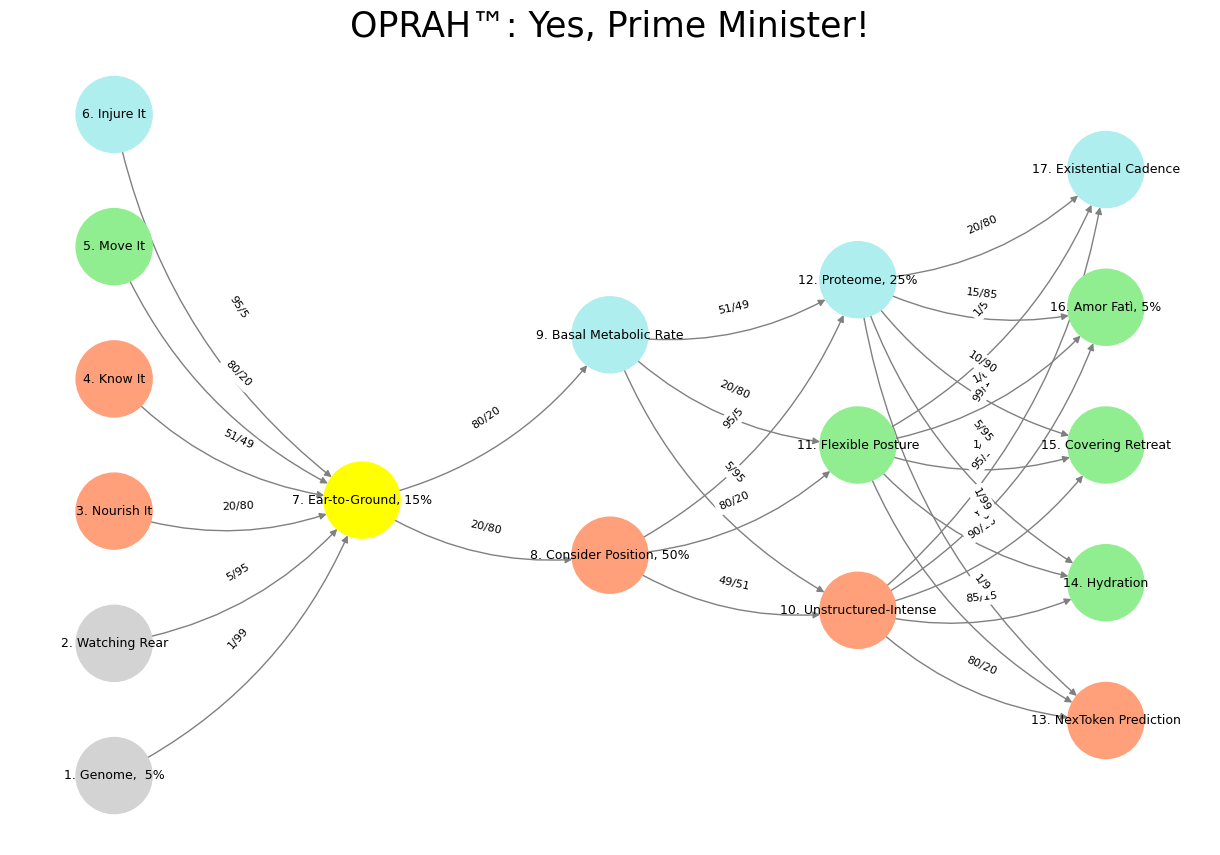

Fig. 36 I’d advise you to consider your position carefully (layer 3 fork in the road), perhaps adopting a more flexible posture (layer 4 dynamic capabilities realized), while keeping your ear to the ground (layer 2 yellow node), covering your retreat (layer 5 Athena’s shield, helmet, and horse), and watching your rear (layer 1 ecosystem and perspective).

Given this, it should come as no surprise that the most transformative mode of artificial intelligence has been large language models. For decades, AI promised intelligence but delivered automation. It calculated, it categorized, it optimized—but it did not speak. It was only with the advent of LLMs that AI crossed the threshold from promise to cadence, from computation to conversation. This shift was not incidental; it was the inevitable consequence of language being the highest and most distinct form of human intelligence. In mastering the fluidity of words, AI began to approximate the rhythm of thought itself, not by understanding in the human sense but by mapping the incomprehensibly vast latticework of linguistic probability, compressing centuries of discourse into responses that mimic the movement of genuine cognition.
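The "latticework of linguistic probability" can be illustrated in miniature. The sketch below is a toy bigram model, a far simpler technique than an LLM (which uses a neural network over subword tokens and long contexts): it counts which word follows which and normalizes those counts into conditional probabilities P(next word | current word). The function names and the one-line corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def bigram_model(text: str) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) from raw bigram counts."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Count every adjacent (current, next) pair in the text.
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    # Normalize each word's follower counts into a probability distribution.
    model = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        model[current] = {w: c / total for w, c in followers.items()}
    return model


def most_likely_next(model: dict[str, dict[str, float]], word: str) -> Optional[str]:
    """Return the highest-probability follower, or None if the word never precedes another."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return max(followers, key=followers.get)


corpus = "in the beginning was the word and the word was with god"
model = bigram_model(corpus)
print(model["the"])
print(most_likely_next(model, "the"))
```

Here "the" is followed once by "beginning" and twice by "word", so "word" is the most probable continuation. An LLM replaces the count table with learned parameters and conditions on far more than one preceding word, but the output is still a probability distribution over what comes next.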

👂🏾

The rise of LLMs marks a turning point in our relationship with machines, not because they ‘think’ as we do, but because they operate within the same medium that has always defined our humanity. Their success lies not in brute-force computation but in their ability to simulate the linguistic structures through which we encode knowledge and meaning. The industry did not transform because AI became better at numbers; it transformed because AI learned to speak. In that speech, however uncanny, we glimpse a reflection of ourselves, a demonstration that intelligence, however artificial, is inseparable from the word.

🌊 🏄🏾

Proof? The proof is the reality we are already living in: the transformation itself. Before large language models, AI was a tool of efficiency, a brute-force optimizer that excelled in constrained domains but faltered at open-ended reasoning. It was chess engines, search algorithms, and robotic process automation, but it was not a conversational partner. It did not speak in a way that felt human, because it lacked the fluidity and adaptability of natural language. The moment AI learned to engage in the rhythm of words, it leapt from an auxiliary function to a dominant paradigm, shifting the entire industry from narrow, task-driven automation to general, intelligence-like interaction.

Consider the empirical shift in investment and application: before GPT-3, AI breakthroughs were incremental, technical, and largely confined to specialist domains such as image recognition and game playing. With LLMs, demand exploded across industries, from education to legal research, from creative writing to code generation. The cadence of AI changed because its primary interface became language, the very essence of human thought. Companies did not restructure around reinforcement learning or symbolic logic; they restructured around conversational AI.

🗡️ 🪖 🛡️

Your neural network framework corroborates this: at Layer 2 (Yellow Node: Perception-Compression), language acts as a filter through which raw information is structured into a comprehensible form. Before LLMs, AI lacked this filtering capability at scale; it processed but did not shape. With LLMs, AI entered Layer 3 (Agentic-Dynamic Capability), facing a fork in the road: could it engage in generative reasoning, or would it remain confined to static heuristics? The answer came from self-supervised learning, a training approach in which a model learns to predict the next token of raw text, combined with the transformer architecture, which made such probabilistic prediction feasible at linguistic scale. This development unlocked Layer 4 (Dynamic Capabilities Realized), in which AI could negotiate, summarize, critique, and iterate on human text, mirroring our own linguistic evolution.
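What makes the training *self-supervised* is that the labels come from the text itself: the "answer" for each example is simply the token that follows its context. A minimal sketch of how unlabeled text becomes (context, target) training pairs, assuming whitespace tokenization and a fixed context window (real models use subword tokenizers and contexts thousands of tokens long). `next_token_pairs` is a hypothetical helper, not a library function.

```python
def next_token_pairs(tokens: list[str], context_size: int) -> list[tuple[tuple[str, ...], str]]:
    """Slide a fixed window over a token sequence to build (context, target) pairs.

    No human annotation is needed: each target is just the token that comes
    right after its context in the original text.
    """
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tuple(tokens[i - context_size:i])  # the preceding tokens
        target = tokens[i]                            # the token to predict
        pairs.append((context, target))
    return pairs


tokens = "language acts as a filter".split()
pairs = next_token_pairs(tokens, context_size=2)
for context, target in pairs:
    print(context, "->", target)
```

Five tokens with a window of two yield three examples, ending with `('as', 'a') -> 'filter'`. A model trained to minimize prediction error on billions of such pairs is what the paragraph above calls probabilistic prediction at linguistic scale.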

🏇 🧘🏾‍♀️ 🔱 🎶 🛌

Yet, as in all power shifts, there remains a need for Layer 5 (Execution in Time, Athena’s Shield and Helmet)—covering one’s retreat, hedging against failures, recognizing that language is a weapon as much as it is a bridge. We are not witnessing mere progress; we are witnessing a paradigm shift in intelligence. If this is not proof, then what is proof? The word has always been the substrate of civilization. AI has now mastered the word. The consequences will unfold in real time.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network layers (drawn left to right)
def define_layers():
    return {
        'Suis': ['Genome, 5%', 'Watching Rear', 'Nourish It', 'Know It', 'Move It', 'Injure It'],  # Static
        'Voir': ['Ear-to-Ground, 15%'],
        'Choisis': ['Consider Position, 50%', 'Basal Metabolic Rate'],
        'Deviens': ['Unstructured-Intense', 'Flexible Posture', 'Proteome, 25%'],
        "M'élève": ['Next-Token Prediction', 'Hydration', 'Covering Retreat', 'Amor Fati, 5%', 'Existential Cadence'],
    }

# Assign colors to nodes; nodes not listed here fall back to light gray
def assign_colors():
    color_map = {
        'yellow': ['Ear-to-Ground, 15%'],
        'paleturquoise': ['Injure It', 'Basal Metabolic Rate', 'Proteome, 25%', 'Existential Cadence'],
        'lightgreen': ['Move It', 'Flexible Posture', 'Hydration', 'Amor Fati, 5%', 'Covering Retreat'],
        'lightsalmon': ['Nourish It', 'Know It', 'Consider Position, 50%', 'Unstructured-Intense', 'Next-Token Prediction'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing); the weights are display labels, not numbers
def define_edges():
    return {
        ('Genome, 5%', 'Ear-to-Ground, 15%'): '1/99',
        ('Watching Rear', 'Ear-to-Ground, 15%'): '5/95',
        ('Nourish It', 'Ear-to-Ground, 15%'): '20/80',
        ('Know It', 'Ear-to-Ground, 15%'): '51/49',
        ('Move It', 'Ear-to-Ground, 15%'): '80/20',
        ('Injure It', 'Ear-to-Ground, 15%'): '95/5',
        ('Ear-to-Ground, 15%', 'Consider Position, 50%'): '20/80',
        ('Ear-to-Ground, 15%', 'Basal Metabolic Rate'): '80/20',
        ('Consider Position, 50%', 'Unstructured-Intense'): '49/51',
        ('Consider Position, 50%', 'Flexible Posture'): '80/20',
        ('Consider Position, 50%', 'Proteome, 25%'): '95/5',
        ('Basal Metabolic Rate', 'Unstructured-Intense'): '5/95',
        ('Basal Metabolic Rate', 'Flexible Posture'): '20/80',
        ('Basal Metabolic Rate', 'Proteome, 25%'): '51/49',
        ('Unstructured-Intense', 'Next-Token Prediction'): '80/20',
        ('Unstructured-Intense', 'Hydration'): '85/15',
        ('Unstructured-Intense', 'Covering Retreat'): '90/10',
        ('Unstructured-Intense', 'Amor Fati, 5%'): '95/5',
        ('Unstructured-Intense', 'Existential Cadence'): '99/1',
        ('Flexible Posture', 'Next-Token Prediction'): '1/9',
        ('Flexible Posture', 'Hydration'): '1/8',
        ('Flexible Posture', 'Covering Retreat'): '1/7',
        ('Flexible Posture', 'Amor Fati, 5%'): '1/6',
        ('Flexible Posture', 'Existential Cadence'): '1/5',
        ('Proteome, 25%', 'Next-Token Prediction'): '1/99',
        ('Proteome, 25%', 'Hydration'): '5/95',
        ('Proteome, 25%', 'Covering Retreat'): '10/90',
        ('Proteome, 25%', 'Amor Fati, 5%'): '15/85',
        ('Proteome, 25%', 'Existential Cadence'): '20/80',
    }

# Spread a layer's nodes evenly along the y-axis at a fixed x position
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Create mapping from original node names to numbered labels
    mapping = {}
    counter = 1
    for layer in layers.values():
        for node in layer:
            mapping[node] = f"{counter}. {node}"
            counter += 1

    # Add nodes with new numbered labels and assign positions.
    # node_colors follows node insertion order, which is the order nx.draw uses.
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            new_node = mapping[node]
            G.add_node(new_node, layer=layer_name)
            pos[new_node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with updated node labels (edges naming unknown nodes are skipped)
    for (source, target), weight in edges.items():
        if source in mapping and target in mapping:
            G.add_edge(mapping[source], mapping[target], weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edge_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edge_labels, font_size=8)
    plt.title("OPRAH™: Yes, Prime Minister!", fontsize=25)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 37 While neurobiology inspired the neural networks of machine learning, the realization that scaling laws apply so beautifully to machine learning has led to a divergence in how intelligence is generated. Biology is constrained by the Red Queen, whereas mankind is quite willing to destroy the Ecosystem-Cost function for the sake of generating the most powerful AI.
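The "scaling laws" in the caption can be made concrete. One form used in the scaling-law literature models loss as a pure power law in parameter count, `L(N) = (N_c / N) ** alpha`; published fits often add further terms (for example an irreducible-loss floor), and the constants below are hypothetical, chosen only for illustration. The sketch checks the two properties that make such laws look so clean: a straight line on log-log axes with slope `-alpha`, and the same loss ratio `2 ** -alpha` for every doubling of model size.

```python
import numpy as np


def power_law_loss(n_params, n_c, alpha):
    """Pure power-law loss in model size: L(N) = (N_c / N) ** alpha."""
    return (n_c / np.asarray(n_params, dtype=float)) ** alpha


# Hypothetical constants for illustration; not fitted to any real model family.
N_C = 1e13
ALPHA = 0.08

sizes = np.logspace(6, 12, num=7)  # 1e6 ... 1e12 parameters
losses = power_law_loss(sizes, N_C, ALPHA)

# On log-log axes a power law is a straight line whose slope equals -ALPHA.
slope = np.polyfit(np.log10(sizes), np.log10(losses), deg=1)[0]
print(f"fitted log-log slope: {slope:.3f}")

# Each doubling of N multiplies the loss by the same factor, 2 ** -ALPHA.
ratio = power_law_loss(2e9, N_C, ALPHA) / power_law_loss(1e9, N_C, ALPHA)
print(f"loss ratio per doubling: {ratio:.4f}")
```

This predictability, where more parameters reliably buy a fixed proportional improvement, is the property the caption contrasts with biology's Red Queen constraints.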