Normative

Democrats and Republicans are both trapped in a fundamental misunderstanding of human systems, and the architecture of our neural network lays bare their error. The left insists on engineering equality of outcome, assuming that adjusting external conditions can compel fairness. The right, meanwhile, clings to the fantasy of engineering equality of opportunity, imagining that leveling the starting line guarantees fair competition. Both are dead wrong because they misapprehend the nature of adaptation and resourcefulness, and the way intelligence and agency emerge.

No fanatic speaks to you here; this is not a “sermon”; no faith is demanded in these pages

– Ecce Homo

At the core of the problem is their shared assumption that outcomes derive wholly from either external constraints or external liberations. But our network shows that reality operates through an adversarial, transactional, and cooperative progression, in which agency emerges not from imposed conditions but from the dynamic interplay of probabilistic inputs and their iterative refinement. The Democratic belief in redistributing material opportunity to force equality ignores the fact that opportunity itself is not merely given but discovered, earned, and solidified through loss, trial, and error. Meanwhile, the Republican vision of “equal opportunity” naïvely assumes that opportunity is a discrete, static commodity that, once granted, yields similar results for all. It ignores the fact that adaptation and decision-making differ wildly among individuals and groups, depending on their varied exposure to risk, loss, and iteration.

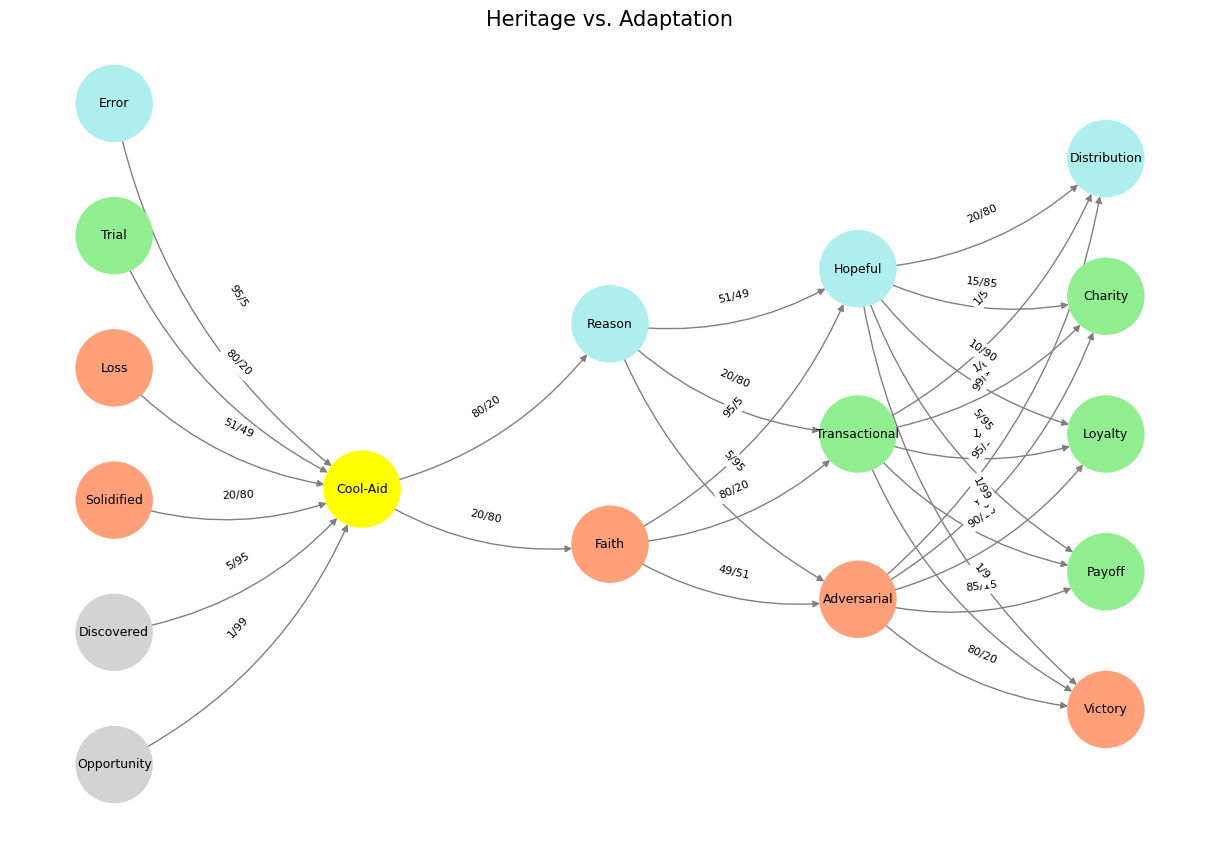

Fig. 35 Theory is Good. Convergence is perhaps the most fruitful idea when comparing Business Intelligence (BI) and Artificial Intelligence (AI), as both disciplines ultimately seek the same end: extracting meaningful patterns from vast amounts of data to drive informed decision-making. BI, rooted in structured data analysis and human-guided interpretation, refines historical trends into actionable insights, whereas AI, with its machine learning algorithms and adaptive neural networks, autonomously discovers hidden relationships and predicts future outcomes. Despite their differing origins (BI arising from statistical rigor and human oversight, AI evolving through probabilistic modeling and self-optimization), their convergence leads to a singular outcome: efficiency. Just as military strategy, economic competition, and biological evolution independently refine paths toward dominance, so too do BI and AI arrive at the same pinnacle of intelligence through distinct methodologies. Victory, whether in the marketplace or on the battlefield, always bears the same hue: one of optimized decision-making, where noise is silenced and clarity prevails. Language is about conveying meaning (Hinton). And meaning is emotions (Yours truly). These feelings are encoded in the 17 nodes of our neural network below, and language and symbols attempt to capture these nodes and emotions, as well as the cadences (the edges connecting them). So Hinton’s dismissiveness toward Chomsky is unnecessary and perhaps harmful.

The left’s approach fails because it misunderstands that fairness is not a static state but a dynamic process. In our network, “Cool-Aid” is the intermediary between the improbable and the probable. No matter how much external engineering occurs, individuals must still process reality through trial and error, something no amount of imposed equality can bypass. Even when all opportunities are equalized, differences in risk appetite, decision-making, and adversarial strategy ensure that outcomes will diverge. Redistribution does not account for the non-linearity of loss and adaptation, where those who have endured setbacks develop better strategies than those shielded from struggle. Hence, forced equality of outcome is not just impossible; it is actively detrimental, creating fragility where resilience should be cultivated.
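
The divergence claim can be made concrete with a toy simulation. This is only a sketch under stated assumptions, not part of the network itself: the function `simulate`, the fair even-money gamble, and the `risk_fraction` parameter are hypothetical stand-ins for "risk appetite". Two agents start with identical holdings and face the identical sequence of wins and losses; they differ only in how much they stake each round.

```python
import random

def simulate(risk_fraction, start=100.0, rounds=50, seed=0):
    """Toy model (an illustrative assumption, not the author's network).

    Each round the agent stakes `risk_fraction` of its current holdings
    on a fair coin flip that pays even money.
    """
    rng = random.Random(seed)  # same seed -> same sequence of wins and losses
    holdings = start
    for _ in range(rounds):
        stake = holdings * risk_fraction
        if rng.random() < 0.5:
            holdings += stake  # win: the stake is doubled back
        else:
            holdings -= stake  # loss: the stake is forfeited
    return holdings

# Identical start, identical luck, different risk appetite.
cautious = simulate(risk_fraction=0.1)
bold = simulate(risk_fraction=0.5)
print(f"cautious: {cautious:.2f}, bold: {bold:.2f}")
```

Because both runs share a seed, any gap between the two printed figures comes from the staking rule alone, which is the point of the paragraph above: equal starting conditions do not fix the outcome.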

On the other hand, the right’s vision of equal opportunity is equally misguided because it assumes that the initial conditions of a system are enough to determine fair play. But in our model, Faith and Reason lead to different pathways (Adversarial, Transactional, or Hopeful), none of which can be externally mandated. Two individuals given the same starting conditions will not experience them the same way. Their past losses, errors, and inherited probabilistic frameworks dictate their perception of opportunity. One may see a door where another sees a wall, not because the opportunity was absent but because adaptation is as much about history as it is about the present. Engineering equal opportunity does not remove the asymmetries embedded in cognition, risk-processing, and game-theoretic positioning.

The resolution to this political blindness lies in understanding that neither equality of opportunity nor equality of outcome is the correct goal. The true objective must be an environment that enables intelligent iteration: a system where failure is survivable, decisions are rewarded with feedback rather than punishment, and adaptation is allowed to outcompete stagnation. This is not about fairness as an end-state but fairness as an adaptive process. A world that truly fosters agency does not equalize either outcomes or opportunities; instead, it ensures that pathways from loss to iteration to victory remain open. In our network, Distribution, Loyalty, Charity, and Victory do not emerge from external guarantees but from pathways shaped by adversarial and transactional equilibria. That is the only real fairness: a world where the probability of meaningful progress is nonzero for those who engage with the game.
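
A small calculation shows why "survivable failure" matters so much to this argument. The sketch below is an illustration under simplifying assumptions: the helper `chance_of_progress` is hypothetical, attempts are treated as independent, and each attempt is given the same success probability, none of which the network itself specifies. If failure is terminal, an agent gets one attempt; if failure is survivable, it gets many.

```python
def chance_of_progress(p_success, attempts):
    """Probability of at least one success in `attempts` tries.

    ASSUMPTION: tries are independent and share the same success
    probability; this is a textbook simplification, not a claim
    about the network's edge weights.
    """
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must lie in [0, 1]")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    return 1.0 - (1.0 - p_success) ** attempts

# One attempt (terminal failure) versus repeated attempts (survivable failure).
for attempts in (1, 10, 50):
    print(attempts, round(chance_of_progress(0.05, attempts), 3))
```

Under these assumptions, a small but nonzero per-attempt probability compounds toward near-certainty as attempts accumulate, while a probability of exactly zero stays at zero no matter how many attempts are allowed. That is one way to read the insistence that the probability of progress be nonzero and that the pathway from loss back to iteration stay open.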

Thus, the American political dichotomy is false. Democrats, in their quest for fairness, create fragility by engineering dependence. Republicans, in their quest for fairness, create blindness by ignoring how history shapes cognition and agency. The truth is far more nuanced and far more uncomfortable: fairness is not something to be imposed but something to be iteratively reached through trial, error, loss, and recalibration. Anything else is a child’s fantasy.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Opportunity', 'Discovered', 'Solidified', 'Loss', "Trial", 'Error'],  # Static
        'Voir': ['Cool-Aid'],
        'Choisis': ['Faith', 'Reason'],
        'Deviens': ['Adversarial', 'Transactional', 'Hopeful'],
        "M'èléve": ['Victory', 'Payoff', 'Loyalty', 'Charity', 'Distribution']
    }


# Assign colors to nodes (nodes not listed here fall back to light gray)
def assign_colors():
    color_map = {
        'yellow': ['Cool-Aid'],
        'paleturquoise': ['Error', 'Reason', 'Hopeful', 'Distribution'],
        'lightgreen': ["Trial", 'Transactional', 'Payoff', 'Charity', 'Loyalty'],
        'lightsalmon': ['Solidified', 'Loss', 'Faith', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Opportunity', 'Cool-Aid'): '1/99',
        ('Discovered', 'Cool-Aid'): '5/95',
        ('Solidified', 'Cool-Aid'): '20/80',
        ('Loss', 'Cool-Aid'): '51/49',
        ("Trial", 'Cool-Aid'): '80/20',
        ('Error', 'Cool-Aid'): '95/5',
        ('Cool-Aid', 'Faith'): '20/80',
        ('Cool-Aid', 'Reason'): '80/20',
        ('Faith', 'Adversarial'): '49/51',
        ('Faith', 'Transactional'): '80/20',
        ('Faith', 'Hopeful'): '95/5',
        ('Reason', 'Adversarial'): '5/95',
        ('Reason', 'Transactional'): '20/80',
        ('Reason', 'Hopeful'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Charity'): '95/5',
        ('Adversarial', 'Distribution'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Charity'): '1/6',
        ('Transactional', 'Distribution'): '1/5',
        ('Hopeful', 'Victory'): '1/99',
        ('Hopeful', 'Payoff'): '5/95',
        ('Hopeful', 'Loyalty'): '10/90',
        ('Hopeful', 'Charity'): '15/85',
        ('Hopeful', 'Distribution'): '20/80'
    }


# Calculate positions for nodes: one column per layer, spread vertically
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Heritage vs. Adaptation", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```
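
The plotting code draws the edge labels but never computes anything from them. As a complement, here is a minimal sketch that counts the pathways through the network and turns a label into a number. It relies on two things. First, an observation from the edge list above: every node is connected to every node in the next layer, so a pathway is simply one choice per layer. Second, an assumption that is not stated anywhere in the source: `parse_label` reads a label `'a/b'` as the share `a / (a + b)`. The names `LAYERS`, `parse_label`, and `enumerate_paths` are introduced here for illustration only.

```python
from fractions import Fraction
from itertools import product

# Layer contents copied from define_layers(), in order from input to output.
LAYERS = [
    ['Opportunity', 'Discovered', 'Solidified', 'Loss', 'Trial', 'Error'],
    ['Cool-Aid'],
    ['Faith', 'Reason'],
    ['Adversarial', 'Transactional', 'Hopeful'],
    ['Victory', 'Payoff', 'Loyalty', 'Charity', 'Distribution'],
]

def parse_label(label):
    """Read an edge label 'a/b' as the share a / (a + b).

    ASSUMPTION: the source only draws these strings on the figure;
    treating them as odds-style shares is one possible reading.
    """
    first, second = (int(part) for part in label.split('/'))
    return Fraction(first, first + second)

def enumerate_paths(layers):
    """List every pathway, choosing one node per layer.

    Valid here because adjacent layers are fully connected in the edge list.
    """
    return list(product(*layers))

paths = enumerate_paths(LAYERS)
to_victory = [path for path in paths if path[-1] == 'Victory']
print(len(paths), "pathways in total;", len(to_victory), "end in Victory")
print(parse_label('20/80'), parse_label('51/49'))
```

Counting pathways this way makes the essay's language about "pathways from loss to iteration to victory" checkable against the figure: each starting node reaches each outcome by several routes through Faith or Reason, and none of the outcomes is reachable by a single mandated road.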

Fig. 36 Space is Apollonian and Time Dionysian. They are the static representation and the dynamic emergent. Ain’t that somethin? The Amateur from the Chicago ecosystem, whose followers drank his Cool-Aid, snubbed those who propped him up and had the audacity to usher in a doctrine of Hope, neglecting the wisdom of generals and diplomats (a rupture with the past). Worst of all, he remained a static ideologue who showed no growth or learning during his presidency. No one ever arrives at the White House with all the requisite skills and talents; it is from their trials and errors that their temperament, vision, and management skills should emerge. The most successful ones grow in office. There must be disappointment over his failure to become a “transformative” president.