Revolution

The open-source movement emerged from a philosophical commitment to democratizing technology, ensuring that knowledge, innovation, and digital infrastructure remained freely accessible to all. At its core, open source operates on principles of transparency, collaboration, and the collective development of software and systems. The Linux operating system, the Apache web server, and the Python programming language are all celebrated successes of this ethos, demonstrating the power of decentralized innovation. However, the movement has also been fraught with contradictions, particularly in contexts where financial incentives clash with ideological purity. The very openness that enables widespread adoption and iterative improvement can also undermine sustainability, leaving projects vulnerable to exploitation by commercial entities that appropriate community-driven innovation while withholding their own advancements. This tension is central to the evolving saga of OpenAI.

OpenAI, as its name suggests, was originally conceived as an open-source initiative. Elon Musk, a founding donor, claimed to have provided $15 million in seed funding with the expectation that the organization would publicly share its research, making cutting-edge AI models available for communal benefit. However, as the capabilities of AI systems advanced, particularly with the development of GPT-3 and subsequent models, OpenAI pivoted toward a more closed, for-profit structure. The rationale was pragmatic: the financial value of these models had become too great to give away. With vast commercialization opportunities on the table, releasing model weights and training data was no longer seen as an altruistic act but as a forfeiture of competitive advantage. OpenAI’s board has since attempted to straddle both worlds by maintaining a non-profit governance structure while operating a for-profit subsidiary, a contradiction that has led to legal challenges, including a lawsuit from Musk himself. Musk, who casts the shift as a betrayal of OpenAI’s founding ethos, has withdrawn his original lawsuit, refiled in federal court, and offered a $97 billion buyout bid, which the board rejected. Meanwhile, he has aggressively developed his own AI initiative, Grok, culminating in the recent release of Grok 3.

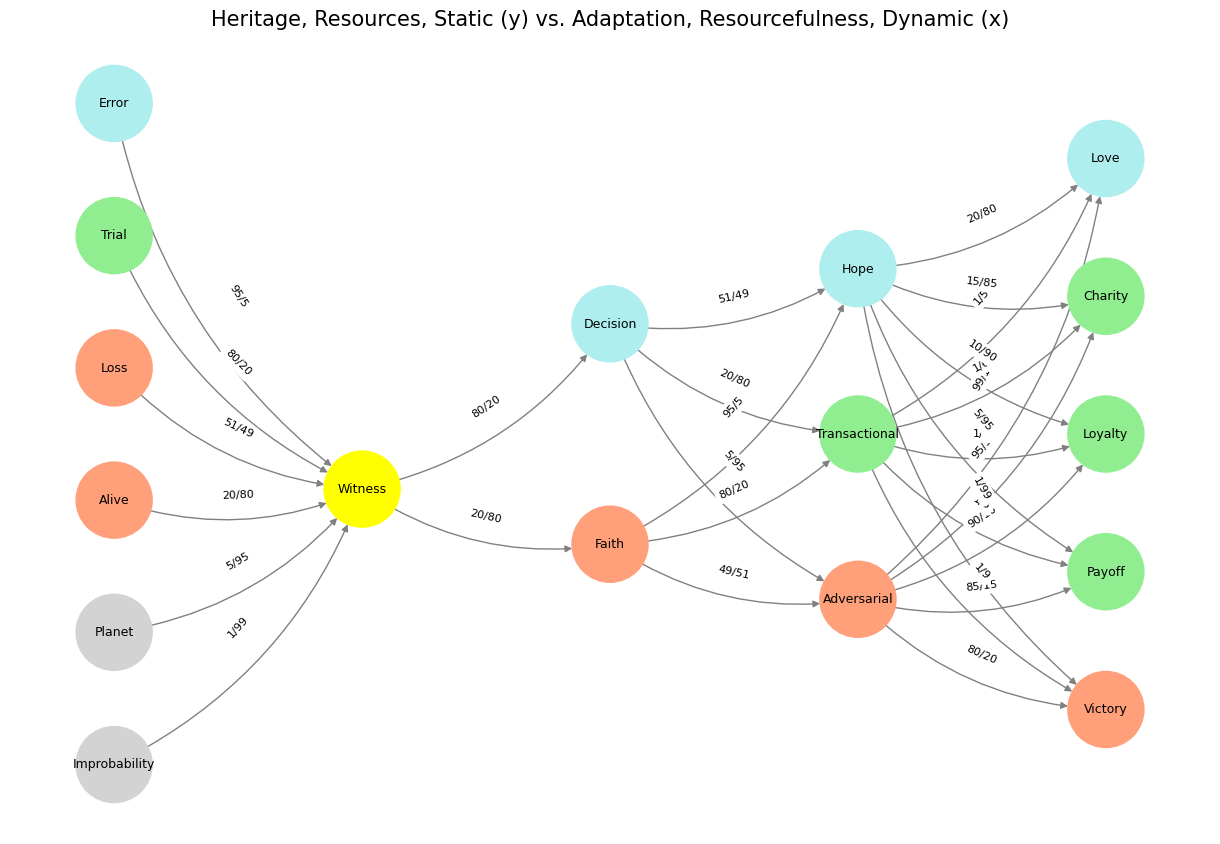

Fig. 22 What Exactly Is Identity? A node may represent goats (in general) and another sheep (in general). But the identity of any specific animal (as opposed to its species) is a network. For this reason we might have a “black sheep”, distinct in certain ways, perhaps more like a goat than other sheep. But that’s all dull stuff. Mistaken identity is often the fuel of comedy, usually when the adversarial is mistaken for the cooperative or even the transactional.

These developments highlight a fundamental economic reality: while open-source ideals thrive in certain technological spaces, they often collapse under the weight of high-stakes competition. OpenAI’s shift reflects the broader trend of organizations that initially champion openness but gradually retreat into proprietary models once financial imperatives take hold. This mirrors the historical trajectory of companies like Google, which initially branded itself as an open web advocate but later centralized control over search, cloud services, and AI. The cooperative equilibrium of open-source development, where shared contributions drive mutual benefit, can quickly morph into an adversarial landscape where information is hoarded for strategic advantage. In this sense, OpenAI’s transformation is less an aberration than a predictable stage in the life cycle of disruptive technological firms.

Sarah Kayongo’s thesis on microfinance innovation offers an intriguing lens through which to critique OpenAI’s trajectory. Her study of the Grameen Foundation examines how microfinance institutions innovate to improve financial inclusion, applying Dynamic Capabilities Theory to analyze how organizations sense opportunities, seize them, and transform their processes accordingly. One of her key findings challenges the traditional notion of competitive advantage as defined by Valuable, Rare, Imperfectly Imitable, and Non-Substitutable (VRIN) resources. Instead, she proposes a new model: Transferable, Valuable, Imitable, and Non-Substitutable (TVIN). This shift acknowledges that in certain contexts, the ability to transfer and diffuse innovation is more valuable than hoarding it as a proprietary asset.
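To make the contrast concrete, the two frameworks can be treated as checklists of required attributes. The sketch below is illustrative only: the attribute labels, the `satisfies` and `framework_differences` helpers, and the example resource are hypothetical and are not taken from Kayongo’s thesis. It shows that TVIN keeps Valuable and Non-Substitutable but swaps Rare and Imperfectly Imitable for Transferable and Imitable.

```python
# A minimal sketch: each framework is encoded as the set of attributes a
# resource must have. Labels are illustrative, not Kayongo's own notation.
VRIN = {"valuable", "rare", "imperfectly_imitable", "non_substitutable"}
TVIN = {"transferable", "valuable", "imitable", "non_substitutable"}


def satisfies(resource_attributes: set[str], framework: set[str]) -> bool:
    """Return True if the resource has every attribute the framework requires."""
    return framework <= resource_attributes


def framework_differences() -> tuple[set[str], set[str]]:
    """Return (attributes VRIN requires but TVIN drops, attributes TVIN adds)."""
    return VRIN - TVIN, TVIN - VRIN


# Hypothetical microfinance practice: valuable, hard to replace, and
# deliberately easy to copy and hand on to other institutions.
shared_practice = {"transferable", "valuable", "imitable", "non_substitutable"}

print(satisfies(shared_practice, VRIN))  # False: not rare, not hard to imitate
print(satisfies(shared_practice, TVIN))  # True
print(framework_differences())
```

Under this encoding, a resource built for diffusion fails the VRIN test by design, which is exactly the trade-off the TVIN model is meant to legitimize.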

This contrast is particularly striking when juxtaposed with OpenAI’s pivot. Whereas Grameen Foundation embraced TVIN logic, deliberately creating market-based solutions that could be shared and replicated across institutions, OpenAI has moved in the opposite direction. Initially conceived as a TVIN-style entity, with AI research meant to be disseminated freely, it has since reverted to a VRIN logic, treating its AI models as proprietary assets that must be shielded from imitation. This shift underscores a fundamental divergence between sectors. In microfinance, the goal is to maximize access and empower underserved populations, making diffusion an inherent good. In AI, however, diffusion threatens competitive advantage, and given the existential concerns surrounding advanced AI, unrestricted access could pose security risks. Unlike Grameen, which sought to create an ecosystem of shared financial tools, OpenAI has opted for exclusivity, reinforcing the traditional corporate power structures that the open-source movement originally sought to dismantle.

The dynamics at play in OpenAI’s evolution can also be mapped onto a game-theoretic framework, aligning with the cooperative, adversarial, and transactional equilibria that define strategic interactions. Initially, OpenAI’s model operated in a cooperative equilibrium, where researchers, developers, and stakeholders collaborated under the assumption that AI advancements would be a public good. This phase was short-lived, as competitive pressures and financial imperatives introduced a more transactional equilibrium—partnerships with Microsoft, monetization of API access, and closed-door development processes. Musk’s aggressive bid for control and OpenAI’s rejection of his offer signal the emergence of an outright adversarial equilibrium, where different factions within the AI space are vying for dominance rather than collaboration. The rise of Grok and Musk’s rapid AI development efforts suggest that the field is now entering a phase where multiple closed, proprietary models will compete rather than a single dominant AI emerging from an open, shared framework.
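The move from a cooperative to an adversarial equilibrium can be illustrated with a toy two-player game in which two AI labs each choose to keep their models open or closed. The payoff numbers below are invented for illustration and are not estimates of any real firm’s incentives, and the sketch does not model the transactional stage. With stag-hunt payoffs, mutual openness is one of two stable outcomes; once closing up against an open rival pays more than cooperating (a prisoner’s dilemma), mutual closure is the only pure-strategy equilibrium.

```python
from itertools import product

STRATEGIES = ("open", "closed")


def pure_nash_equilibria(payoffs):
    """Return the profiles where neither lab gains by deviating on its own.

    payoffs maps (row_strategy, col_strategy) -> (row_payoff, col_payoff).
    """
    equilibria = []
    for row, col in product(STRATEGIES, repeat=2):
        row_payoff, col_payoff = payoffs[(row, col)]
        # Row lab: no alternative strategy beats the current one, given col.
        row_best = all(row_payoff >= payoffs[(alt, col)][0] for alt in STRATEGIES)
        # Column lab: no alternative strategy beats the current one, given row.
        col_best = all(col_payoff >= payoffs[(row, alt)][1] for alt in STRATEGIES)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


# Hypothetical payoffs while models are cheap to share (a stag hunt):
# mutual openness pays best, but a lab that stays open alone gets nothing.
early = {
    ("open", "open"): (4, 4),
    ("open", "closed"): (0, 3),
    ("closed", "open"): (3, 0),
    ("closed", "closed"): (2, 2),
}

# Hypothetical payoffs once models are commercially valuable (a prisoner's
# dilemma): closing up against an open rival beats cooperating.
late = {
    ("open", "open"): (3, 3),
    ("open", "closed"): (0, 5),
    ("closed", "open"): (5, 0),
    ("closed", "closed"): (1, 1),
}

print(pure_nash_equilibria(early))  # [('open', 'open'), ('closed', 'closed')]
print(pure_nash_equilibria(late))   # [('closed', 'closed')]
```

The only change between the two matrices is how much a lab gains by closing up while its rival stays open; raising that temptation is enough to remove the cooperative outcome from the set of stable profiles.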

Ultimately, OpenAI’s transformation reveals a broader lesson about the limitations of open-source ideology when confronted with market realities. The name “OpenAI” now stands as an ironic artifact of its original mission, a reminder that even the most utopian technological initiatives can succumb to the pressures of exclusivity and capital accumulation. Kayongo’s framework, particularly her emphasis on how innovation can be designed for transferability, highlights the missed opportunity: OpenAI could have structured itself to allow for controlled diffusion, balancing openness with sustainable business practices. Instead, it has chosen to wall off its technology, reinforcing a pattern in which technological breakthroughs become the domain of the few rather than the many. Whether this shift was inevitable or a failure of governance remains an open question, but one thing is clear: the AI landscape, much like the microfinance world Kayongo studied, is shaped less by idealistic commitments and more by the pragmatic calculus of power, competition, and economic survival.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers.
# Layer names are French: Suis (I am), Voir (to see), Choisis (I choose),
# Deviens (I become), M'élève (I rise).
def define_layers():
    return {
        'Suis': ['Improbability', 'Planet', 'Alive', 'Loss', "Trial", 'Error'],  # Static
        'Voir': ['Witness'],
        'Choisis': ['Faith', 'Decision'],
        'Deviens': ['Adversarial', 'Transactional', 'Hope'],
        "M'élève": ['Victory', 'Payoff', 'Loyalty', 'Charity', 'Love']
    }


# Assign colors to nodes (nodes not listed here are drawn light gray)
def assign_colors():
    color_map = {
        'yellow': ['Witness'],
        'paleturquoise': ['Error', 'Decision', 'Hope', 'Love'],
        'lightgreen': ["Trial", 'Transactional', 'Payoff', 'Charity', 'Loyalty'],
        'lightsalmon': ['Alive', 'Loss', 'Faith', 'Adversarial', 'Victory'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Improbability', 'Witness'): '1/99',
        ('Planet', 'Witness'): '5/95',
        ('Alive', 'Witness'): '20/80',
        ('Loss', 'Witness'): '51/49',
        ("Trial", 'Witness'): '80/20',
        ('Error', 'Witness'): '95/5',
        ('Witness', 'Faith'): '20/80',
        ('Witness', 'Decision'): '80/20',
        ('Faith', 'Adversarial'): '49/51',
        ('Faith', 'Transactional'): '80/20',
        ('Faith', 'Hope'): '95/5',
        ('Decision', 'Adversarial'): '5/95',
        ('Decision', 'Transactional'): '20/80',
        ('Decision', 'Hope'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Charity'): '95/5',
        ('Adversarial', 'Love'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Charity'): '1/6',
        ('Transactional', 'Love'): '1/5',
        ('Hope', 'Victory'): '1/99',
        ('Hope', 'Payoff'): '5/95',
        ('Hope', 'Loyalty'): '10/90',
        ('Hope', 'Charity'): '15/85',
        ('Hope', 'Love'): '20/80'
    }


# Calculate positions for nodes: one column per layer, nodes spread vertically
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions (colors are appended in node-insertion order)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights, skipping any edge whose endpoints are not nodes
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Heritage, Resources, Static (y) vs. Adaptation, Resourcefulness, Dynamic (x)", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 23 Psilocybin is itself biologically inactive but is quickly converted by the body to psilocin, which has mind-altering effects similar, in some aspects, to those of other classical psychedelics. Effects include euphoria, hallucinations, changes in perception, a distorted sense of time, and perceived spiritual experiences. It can also cause adverse reactions such as nausea and panic attacks. In Nahuatl, the language of the Aztecs, the mushrooms were called teonanácatl, literally “divine mushroom.” Source: Wikipedia