Traditional

The age of the digital witness has transformed aviation accident investigation from a forensic labyrinth into an empirical goldmine. The events at Toronto Pearson (YYZ), captured in high-resolution video, embody the ultimate dream of the National Transportation Safety Board (NTSB) and its Canadian counterpart, the Transportation Safety Board of Canada (TSB). This is no longer the era of reconstructing accidents from wreckage, black boxes, and radar data alone. In this era, every fraction of a second of an aircraft's fate can be analyzed frame by frame. That gives investigators a vantage point once approximated only by cockpit voice recorders and flight data logs. The Delta plane's landing, explosion, and overturning on the tarmac were witnessed, recorded, and shared almost in real time. The event therefore marks the evolution of aviation accident reconstruction into a realm where every citizen is a potential data node.

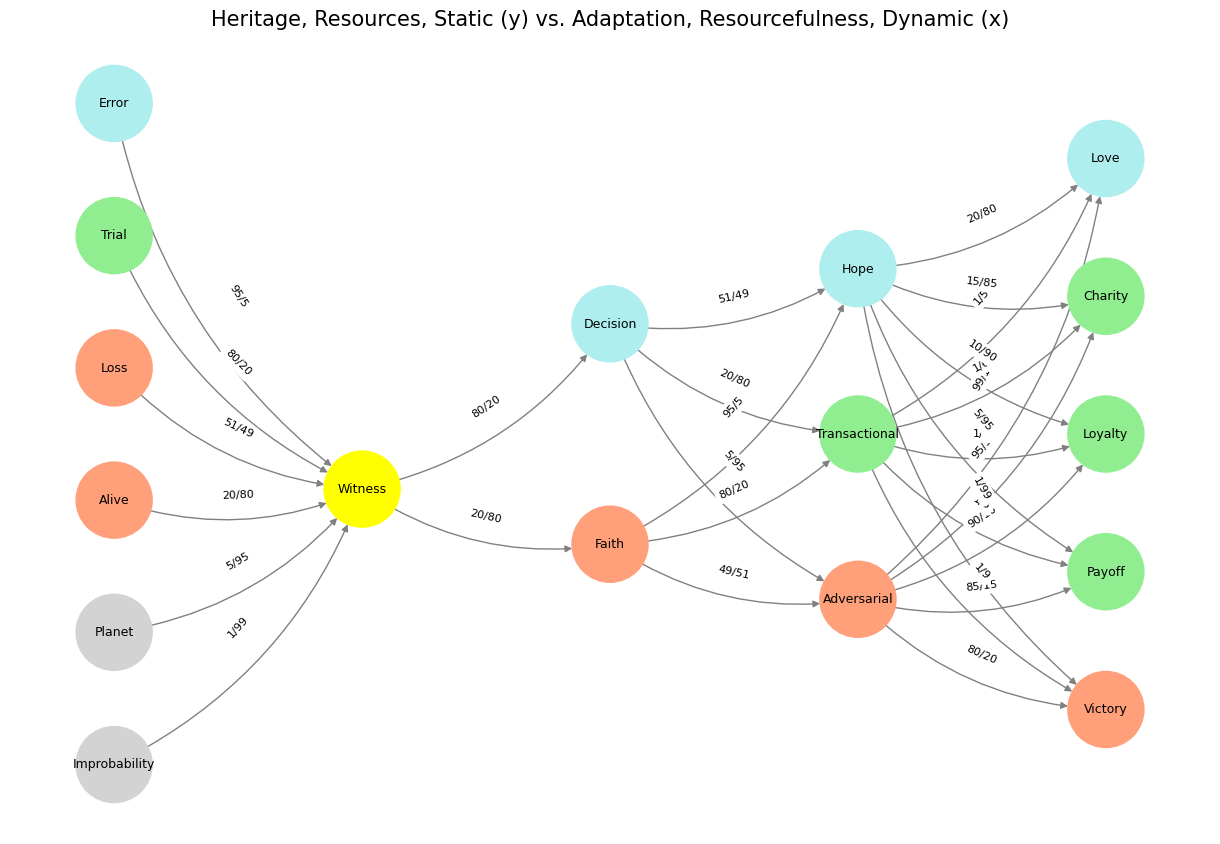

Fig. 33 Digital Witness. Heritage, Resources, Static (y) vs. Adaptation, Resourcefulness, Dynamic (x)

For investigators, such footage is akin to a perfectly executed experiment with real-world consequences. Static wreckage tells only the final chapter of a catastrophic sequence. High-quality video, by contrast, captures the entire process in motion: every flameout, every oscillation, every frame of visible aerodynamic stress. The human eye and, more importantly, computer vision algorithms can dissect the descent rate, the flare angle, and the precise moment at which kinetic energy was converted into structural rupture. Investigators once interpolated between recorded telemetry points. They can now cross-check those points against continuous visual evidence, greatly reducing the guesswork in their fluid dynamics simulations and impact trajectory models. A witness with a smartphone and a steady hand has thus become a critical forensic asset, bridging the gap between speculative hypothesis and hard evidence.
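The descent-rate measurement described above can be sketched in a few lines. The sketch assumes that a point on the aircraft has already been tracked frame by frame; the tracking itself, whether by hand or with an object tracker, is out of scope. `estimate_descent_rate` is a hypothetical helper. A fixed side-on camera, a uniform metres-per-pixel scale and the absence of lens distortion are simplifying assumptions. The track values are synthetic and were not measured from any footage.

```python
import numpy as np


def estimate_descent_rate(y_pixels, fps, metres_per_pixel):
    """Estimate a constant vertical speed in m/s (positive = descending).

    Hypothetical helper. Assumes a fixed camera viewing the flight path
    side-on, a uniform image scale and no lens distortion.
    """
    y = np.asarray(y_pixels, dtype=float)
    t = np.arange(len(y)) / fps  # timestamp of each frame in seconds
    # Image rows grow downwards, so negate them to get height above a reference.
    height_m = -y * metres_per_pixel
    # Least-squares straight line through all frames; the slope is vertical speed.
    slope, _intercept = np.polyfit(t, height_m, 1)
    return -slope


# Synthetic track: 30 fps, 0.05 m per pixel, tracked point drops 2 px per frame.
track = [400 + 2 * k for k in range(30)]
rate = estimate_descent_rate(track, fps=30, metres_per_pixel=0.05)
print(f"{rate:.1f} m/s")  # 2 px * 0.05 m * 30 fps = 3.0 m/s
```

The sketch fits a straight line over many frames rather than differencing two frames, which averages out pixel-level jitter in the tracked positions. A real reconstruction would also have to handle a scale that changes as the aircraft approaches or recedes from the camera.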

From a historical perspective, the transformation of aviation safety methodology is staggering. Accident reports once hinged on secondhand accounts, incomplete logbooks, and black-box transcripts that failed to capture the full scope of human and mechanical factors. Today, ubiquitous recording devices and cloud-based sharing mean that a complex aviation event is archived almost instantly in multiple independent locations. Each camera angle adds a redundant layer of validation. This decentralization of data makes the investigative process far less vulnerable to state suppression, missing evidence, or the bureaucratic inertia that sometimes accompanies formal aviation probes. Even where vested interests might seek to obscure culpability, public-domain footage forces a reckoning: an independently held record of what truly transpired that is difficult to dispute.

For the NTSB and TSB, this influx of raw visual data is both an opportunity and a challenge. Video evidence is indispensable, but it is not infallible. The human mind is prone to pareidolia (seeing patterns where none exist), and an untrained observer can mistake optical distortions for mechanical failure or pilot error. Investigators must contend with frame-rate limits, lens aberrations, and parallax effects that can distort a viewer's sense of scale and speed. Moreover, as deepfakes and algorithmic manipulation grow more sophisticated, forensic verification of video authenticity has become an essential part of accident investigation. The same digital tools that have made aircraft disasters more transparent also demand a level of skepticism and technical scrutiny that did not exist in the analog age.
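Two of these limits, frame rate and scale, can be quantified with simple arithmetic. The sketch below assumes an ideal pinhole camera, so it ignores lens aberrations entirely. It uses hypothetical values: a speed of 70 m/s, a focal length of 1500 px, and a span that appears 200 px wide. `frame_limits` and `pinhole_size_m` are illustrative helpers and are not part of any investigative toolkit.

```python
def frame_limits(speed_mps, fps):
    """Distance covered and timing uncertainty imposed by a camera's frame rate."""
    interval_s = 1.0 / fps
    return {
        "frame_interval_s": interval_s,
        # The aircraft moves this far between consecutive frames, unobserved.
        "distance_per_frame_m": speed_mps * interval_s,
        # An event between two frames can only be assigned to the nearer frame,
        # so its timing is uncertain by up to half an interval.
        "max_timing_error_s": interval_s / 2,
    }


def pinhole_size_m(size_px, distance_m, focal_length_px):
    """Real-world size from apparent size under an ideal pinhole camera model."""
    return size_px * distance_m / focal_length_px


# Hypothetical inputs, chosen for illustration only.
for fps in (30, 60):
    limits = frame_limits(speed_mps=70.0, fps=fps)
    print(fps, "fps:", round(limits["distance_per_frame_m"], 2), "m per frame")

# The same 200 px image, interpreted with a correct and an overestimated distance.
true_span = pinhole_size_m(200, distance_m=180.0, focal_length_px=1500.0)
guessed_span = pinhole_size_m(200, distance_m=200.0, focal_length_px=1500.0)
print(f"relative size error: {guessed_span / true_span - 1:.1%}")
```

In this example the aircraft moves about 2.3 m between frames at 30 fps. Overestimating the camera-to-aircraft distance by 20 m inflates every derived length by about 11%, and every speed derived from those lengths by the same factor.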

Despite these challenges, real-time digital witnesses signify a paradigm shift in how aviation safety evolves. Feeding immediate, high-fidelity visual data into accident analysis accelerates the feedback loop for regulatory agencies and aircraft manufacturers and shortens the timeline for corrective measures. Engineers and policymakers need not wait months for a comprehensive accident report. They can begin drawing preliminary inferences within hours by comparing real-world failure sequences against predictive models and prior incident databases. This shift from postmortem analysis to near-instantaneous learning strengthens the aviation industry's adaptive resilience. It helps ensure that lessons from catastrophe are not merely academic but are actively built into next-generation safety protocols.
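Comparing an observed failure sequence against model predictions and earlier cases can be illustrated with a root-mean-square error (RMSE) ranking. This is a minimal sketch under strong assumptions: every profile is sampled at the same instants and is already time-aligned. All names and numbers are hypothetical and are not drawn from any real model or incident database.

```python
import numpy as np


def rmse(a, b):
    """Root-mean-square difference between two equally sampled series."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sqrt(np.mean((a - b) ** 2)))


def rank_against_references(observed, references):
    """Return (name, RMSE) pairs sorted from closest to farthest match."""
    scores = {name: rmse(observed, profile) for name, profile in references.items()}
    return sorted(scores.items(), key=lambda item: item[1])


# Hypothetical descent rates (m/s), one sample per second before touchdown.
observed = [3.0, 3.2, 3.6, 4.1, 4.8]
references = {
    "model_nominal_flare": [3.0, 2.5, 2.0, 1.5, 1.0],
    "model_no_flare": [3.0, 3.3, 3.6, 4.0, 4.5],
    "hypothetical_case_A": [3.1, 3.1, 3.5, 4.2, 5.0],
}
for name, score in rank_against_references(observed, references):
    print(f"{name}: RMSE = {score:.2f} m/s")
```

Real comparisons would first need to align sequences in time, for example with dynamic time warping. They would also weigh many recorded variables rather than a single descent-rate channel.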

In a broader sense, the digital witness phenomenon extends beyond aviation and is reshaping forensic disciplines from criminal justice to military strategy. Every explosion, collision, and infrastructural failure is now observed not just by professionals but by an omnipresent network of devices, including phones, drones, and security cameras. All of them feed an ever-expanding reservoir of empirical documentation. The consequence is both liberating and unsettling: transparency is no longer optional, and selective ignorance is no longer an option either. We are fast approaching a world where human memory and institutional narratives are superseded by immutable, algorithmically enhanced digital recall. The dream of NTSB investigators today is merely the prologue to a much deeper transformation in how history itself is recorded and interpreted.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers; each layer is drawn as one column, left to right.
# Layer names are French: "(I) am", "to see", "(I) choose", "(I) become", "(I) rise".
def define_layers():
    return {
        'Suis': ['Improbability', 'Planet', 'Alive', 'Loss', 'Trial', 'Error'],  # Static
        'Voir': ['Witness'],
        'Choisis': ['Faith', 'Decision'],
        'Deviens': ['Adversarial', 'Transactional', 'Hope'],
        "M'élève": ['Victory', 'Payoff', 'Loyalty', 'Charity', 'Love'],
    }


# Assign colors to nodes; nodes not listed here fall back to light gray.
def assign_colors():
    color_map = {
        'yellow': ['Witness'],
        'paleturquoise': ['Error', 'Decision', 'Hope', 'Love'],
        'lightgreen': ['Trial', 'Transactional', 'Payoff', 'Charity', 'Loyalty'],
        'lightsalmon': ['Alive', 'Loss', 'Faith', 'Adversarial', 'Victory'],
    }
    # Invert {color: [nodes]} into {node: color}.
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing); they are stored as label strings.
def define_edges():
    return {
        ('Improbability', 'Witness'): '1/99',
        ('Planet', 'Witness'): '5/95',
        ('Alive', 'Witness'): '20/80',
        ('Loss', 'Witness'): '51/49',
        ('Trial', 'Witness'): '80/20',
        ('Error', 'Witness'): '95/5',
        ('Witness', 'Faith'): '20/80',
        ('Witness', 'Decision'): '80/20',
        ('Faith', 'Adversarial'): '49/51',
        ('Faith', 'Transactional'): '80/20',
        ('Faith', 'Hope'): '95/5',
        ('Decision', 'Adversarial'): '5/95',
        ('Decision', 'Transactional'): '20/80',
        ('Decision', 'Hope'): '51/49',
        ('Adversarial', 'Victory'): '80/20',
        ('Adversarial', 'Payoff'): '85/15',
        ('Adversarial', 'Loyalty'): '90/10',
        ('Adversarial', 'Charity'): '95/5',
        ('Adversarial', 'Love'): '99/1',
        ('Transactional', 'Victory'): '1/9',
        ('Transactional', 'Payoff'): '1/8',
        ('Transactional', 'Loyalty'): '1/7',
        ('Transactional', 'Charity'): '1/6',
        ('Transactional', 'Love'): '1/5',
        ('Hope', 'Victory'): '1/99',
        ('Hope', 'Payoff'): '5/95',
        ('Hope', 'Loyalty'): '10/90',
        ('Hope', 'Charity'): '15/85',
        ('Hope', 'Love'): '20/80',
    }


# Calculate positions for nodes: spread a layer evenly along y at a fixed x offset.
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []  # filled in node-insertion order, which matches G.nodes

    # Add nodes and assign positions, one column per layer
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with weights, skipping any edge whose endpoints are not in the graph
    for (source, target), weight in edges.items():
        if source in G.nodes and target in G.nodes:
            G.add_edge(source, target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d['weight'] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle='arc3,rad=0.2'
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("Heritage, Resources, Static (y) vs. Adaptation, Resourcefulness, Dynamic (x)", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```
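The figure assumes that every edge runs from one layer to the next, but `visualize_nn` never checks this. Its `if source in G.nodes and target in G.nodes` guard also silently drops any edge with a misspelled node. The hypothetical helper below is a sketch and not part of the original cell. It lists edges that skip a layer, point backwards, or name an unknown node. It is demonstrated on a trimmed copy of the layers. Passing `define_layers()` and `define_edges()` from the cell above should return an empty list, because every edge there connects adjacent layers.

```python
def non_adjacent_edges(layers, edges):
    """List edges that do not go from layer i to layer i + 1 (hypothetical helper).

    `layers` maps layer names to node lists in drawing order; `edges` is any
    iterable of (source, target) pairs, e.g. the keys of an edge dict.
    """
    layer_index = {node: i for i, nodes in enumerate(layers.values()) for node in nodes}
    flagged = []
    for source, target in edges:
        if source not in layer_index or target not in layer_index:
            flagged.append((source, target))  # references a node in no layer
        elif layer_index[target] - layer_index[source] != 1:
            flagged.append((source, target))  # skips a layer or points backwards
    return flagged


# Trimmed copy of three layers, plus one deliberately layer-skipping edge.
layers = {'Voir': ['Witness'], 'Choisis': ['Faith', 'Decision'], 'Deviens': ['Hope']}
edges = [('Witness', 'Faith'), ('Witness', 'Decision'), ('Faith', 'Hope'), ('Witness', 'Hope')]
print(non_adjacent_edges(layers, edges))  # [('Witness', 'Hope')]
```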

Fig. 34 While neurobiology inspired the neural networks of machine learning, the realization that scaling laws apply so cleanly to machine learning has caused machine intelligence to diverge from the way biology generates intelligence. Biology is constrained by the Red Queen, whereas humanity seems quite willing to destroy the Ecosystem-Cost function for the sake of building the most powerful AI.