Risk

Paths Untaken: Biological Constraints, Combinatorial Space, and the Compression of Intelligence

Paths untaken are as much a biological reality as an existential concept. From birth, the possible configurations of a life, like the branches of an infinite tree, are pruned by biological constraints, limited resources, and the relentless passage of time. What remains are the taken paths, compressed out of the vast combinatorial space of what could have been. Machines, by design, face no such biological constraints. They can traverse and compress this space at unprecedented scale, offering a glimpse of intelligence unshackled from the slowness of human evolution. Through this compression, time itself becomes malleable. It enables oligarchs (those who control the resources) to distribute compressed tokens of intelligence, such as ChatGPT queries, to the rest of the world. These tokens, in turn, democratize access to a metaphorical space humanity has never fully explored, bridging the divide between resource-rich oligarchs and plebeian users seeking to expand their possibilities.

Fig. 14 Danse Macabre. Machine learning was already putting pianists out of a job in 1939. Our dear pianist looks completely flabbergasted. Not only has she been displaced; even given the chance, she wouldn’t be able to play with four hands, as this piano transcription of Saint-Saëns’s masterpiece demands.

Biological Constraints and Combinatorial Explosion

Biological constraints define human experience, imposing hard limits on the paths we can take. Neural architecture itself is a product of evolutionary pruning, in which vast possibilities are reduced to the efficient configurations needed for survival. Time, memory, and energy are finite resources, so the human brain must navigate the combinatorial space of decisions by prioritizing what is essential. Each untaken path represents not just a loss of potential but a biological necessity: we must simplify to survive.

Machines, on the other hand, do not share these particular limitations. They are bounded by compute, storage, and energy rather than by a lifespan. Their processing power and storage capacity let them explore combinatorial spaces far beyond human reach. Machine learning, for instance, traverses immense datasets and identifies patterns and insights that would take humans lifetimes to uncover. This ability to compress vast spaces of information into actionable intelligence is not just a technological advantage but a redefinition of what it means to explore untaken paths. Where biology prunes for efficiency, machines compress for utility, achieving a form of intelligence that operates on entirely different scales.

Compression as the Key to Intelligence

The compression of time, space, and possibilities lies at the heart of machine learning. Intelligence—whether human or artificial—is not about exploring every possible path but about identifying the most relevant ones. Machines achieve this through algorithms that reduce the combinatorial explosion of possibilities into manageable, optimized outputs. What would take humans centuries to compute is reduced to seconds, effectively compressing the timeline of exploration.
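One concrete family of algorithms that "reduce the combinatorial explosion of possibilities into manageable, optimized outputs" is beam search. The text does not name beam search, so treat it here as an illustrative stand-in. The sketch below also rests on a few assumptions. The reward table and the helpers `path_score`, `beam_search`, and `exhaustive` are all hypothetical. At each step the search extends every surviving partial path by every option but keeps only the `beam_width` best. As a result, the work grows linearly with depth instead of as `len(reward) ** depth`.

```python
import heapq
import itertools
import random


def path_score(path, reward):
    """Hypothetical scoring: sum reward[prev][choice] along the path (prev starts at 0)."""
    total, prev = 0.0, 0
    for choice in path:
        total += reward[prev][choice]
        prev = choice
    return total


def beam_search(reward, depth, beam_width):
    """Grow paths one step at a time, pruning to the best `beam_width` after each step.

    Returns (best_path, best_score, number_of_candidate_paths_scored).
    """
    options = range(len(reward))
    beam = [()]
    scored = 0
    for _ in range(depth):
        # Extend every surviving partial path by every available option.
        candidates = [path + (c,) for path in beam for c in options]
        scored += len(candidates)
        # Keep only the most promising partial paths; the rest stay untaken.
        beam = heapq.nlargest(beam_width, candidates, key=lambda p: path_score(p, reward))
    best = beam[0]
    return best, path_score(best, reward), scored


def exhaustive(reward, depth):
    """Score all len(reward) ** depth paths; feasible only for tiny trees."""
    paths = itertools.product(range(len(reward)), repeat=depth)
    best = max(paths, key=lambda p: path_score(p, reward))
    return best, path_score(best, reward)


# Hypothetical toy problem: 3 options per step, random transition rewards.
rng = random.Random(0)
k = 3
reward = [[rng.random() for _ in range(k)] for _ in range(k)]
print(beam_search(reward, depth=8, beam_width=4))
print(exhaustive(reward, depth=8))
```

In this toy problem, a beam of width 4 scores 84 candidate paths, while exhaustive search scores 3 ** 8 = 6561. The price of that compression is that beam search is approximate. A narrow beam can discard a partial path that would have led to the best complete one.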

This compression mirrors the tokenized economy of intelligence. By reducing the cost of exploration and decision-making, machines make it possible for individuals to access insights that were previously the domain of experts or those with vast resources. A single token—like a ChatGPT query—represents the distilled essence of countless untaken paths, offering the user a shortcut through the maze of possibilities. This tokenization democratizes access to compressed intelligence, allowing individuals to leverage machine learning for tasks they could never have achieved on their own.

Oligarchs and the Distribution of Resources

While machines make the compression of intelligence possible, the distribution of this power is controlled by oligarchs—those who hold the resources necessary to develop and deploy such systems. The infrastructure for machine learning, from data centers to proprietary algorithms, is concentrated in the hands of a few. These entities act as gatekeepers, deciding how and when intelligence is distributed to the masses.

Tokens, like ChatGPT subscriptions, are the mechanism through which this distribution occurs. For the plebeians—ordinary individuals without access to vast computational resources—tokens offer a means of participation in the compressed combinatorial space. Through these tokens, oligarchs monetize intelligence, turning untaken paths into purchasable opportunities. While this system democratizes access to a degree, it also reinforces the power dynamic between those who control the resources and those who consume the outputs.

Metaphorical Space and the Untaken Path

The untaken path, when viewed through the lens of machine learning, becomes a metaphorical space that machines traverse on humanity’s behalf. Every query to a machine represents a question about an untaken path—an attempt to explore what might have been or what could still be. By compressing this space, machines allow humans to outsource their exploration, effectively turning the unknown into the accessible.

This is both liberating and limiting. On the one hand, it enables individuals to achieve insights and innovations that would have been impossible without such tools. On the other hand, it risks reducing exploration to a transactional process, where the richness of untaken paths is replaced by pre-packaged answers. The token economy of intelligence commodifies the very act of exploration, turning it into a series of exchanges between users and machines.

The Future of Compressed Intelligence

As machines continue to compress the combinatorial space, the relationship between biological constraints and artificial intelligence will evolve. The tokens we purchase today, like ChatGPT queries, are only the beginning of a larger shift in how humanity interacts with knowledge and possibility. The untaken paths that machines traverse on our behalf may redefine what it means to be human, offering us shortcuts to intelligence while challenging us to retain our capacity for curiosity and imagination.

Ultimately, the untaken paths are not just a biological constraint or a machine-learning opportunity—they are the essence of what makes exploration meaningful. Machines may compress time and space, but the value of the paths they uncover depends on how we choose to walk them. Whether controlled by oligarchs or accessed through tokens, the promise of compressed intelligence lies not in replacing human exploration but in enhancing our ability to navigate the infinite tree of possibilities. The question remains: how do we ensure that this power serves humanity as a whole, rather than becoming another tool of inequality? The answer, perhaps, lies in how we balance the tension between compression and creativity, between the paths we outsource and the ones we dare to take ourselves.

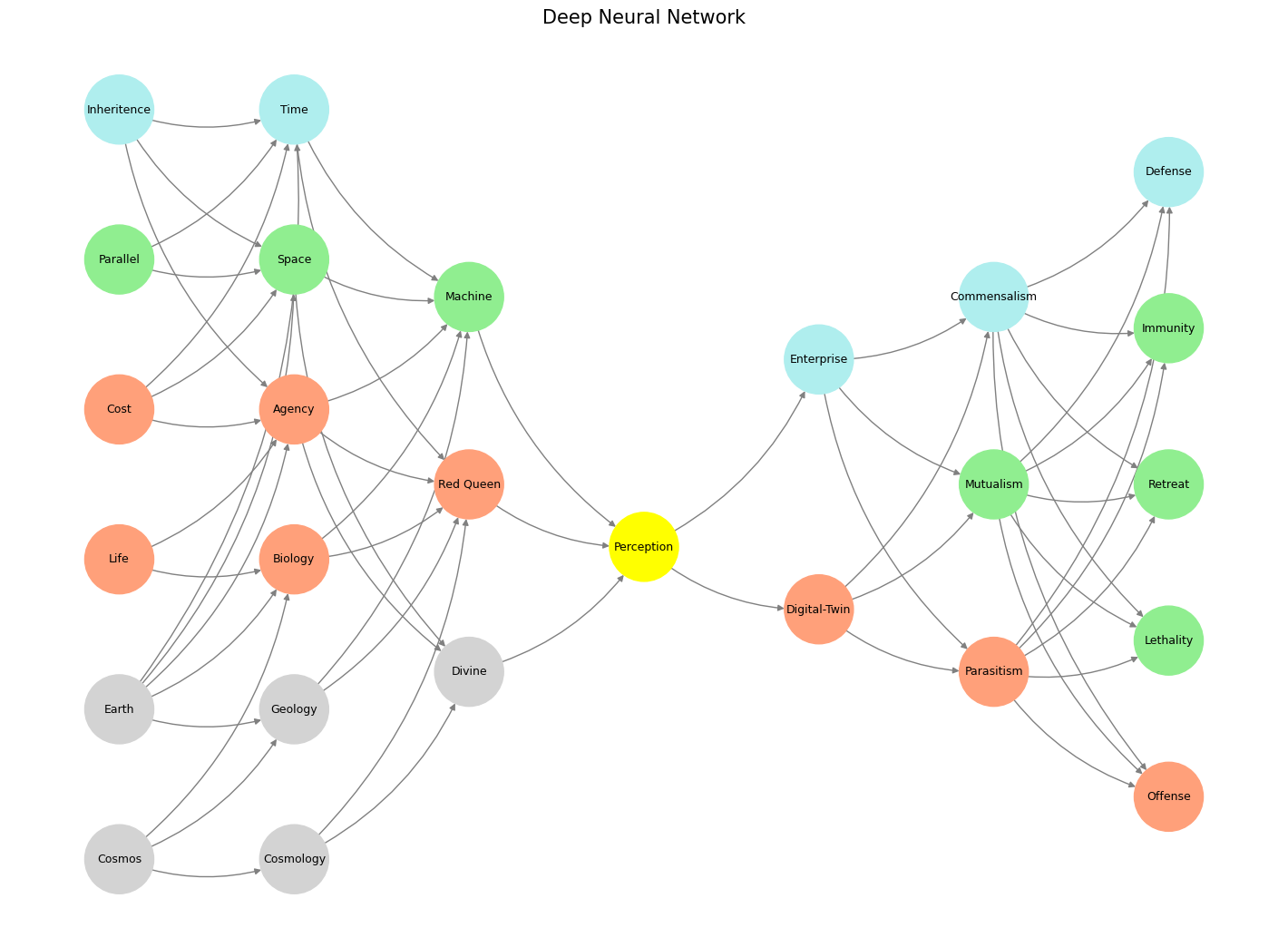

import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure: layer name -> ordered list of nodes
def define_layers():
    return {
        'World': ['Cosmos', 'Earth', 'Life', 'Cost', 'Parallel', 'Inheritance'],
        'Framework': ['Cosmology', 'Geology', 'Biology', 'Agency', 'Space', 'Time'],
        'Meta': ['Divine', 'Red Queen', 'Machine'],
        'Perception': ['Perception'],
        'Agency': ['Digital-Twin', 'Enterprise'],
        'Generativity': ['Parasitism', 'Mutualism', 'Commensalism'],
        'Physicality': ['Offense', 'Lethality', 'Retreat', 'Immunity', 'Defense']
    }

# Assign colors to nodes (nodes not listed fall back to light gray when drawn)
def assign_colors():
    color_map = {
        'yellow': ['Perception'],
        'paleturquoise': ['Inheritance', 'Time', 'Enterprise', 'Commensalism', 'Defense'],
        'lightgreen': ['Parallel', 'Machine', 'Mutualism', 'Immunity', 'Retreat', 'Lethality', 'Space'],
        'lightsalmon': [
            'Cost', 'Life', 'Digital-Twin',
            'Parasitism', 'Offense', 'Agency', 'Biology', 'Red Queen'
        ],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Define allowed connections between consecutive layers
def define_connections():
    return {
        'World': {
            'Cosmos': ['Cosmology', 'Geology', 'Biology'],
            'Earth': ['Geology', 'Biology', 'Agency', 'Space', 'Time'],
            'Life': ['Biology', 'Agency'],
            'Cost': ['Agency', 'Inheritance', 'Space', 'Time'],
            'Parallel': ['Space', 'Inheritance', 'Time'],
            'Inheritance': ['Time', 'Machine', 'Space', 'Agency']
        },
        'Framework': {
            'Cosmology': ['Divine', 'Red Queen'],
            'Geology': ['Red Queen', 'Machine'],
            'Biology': ['Machine', 'Agency', 'Red Queen'],
            'Agency': ['Divine', 'Red Queen', 'Machine'],
            'Space': ['Divine', 'Machine'],
            'Time': ['Machine', 'Red Queen']
        },
        'Meta': {
            'Divine': ['Perception'],
            'Red Queen': ['Perception'],
            'Machine': ['Perception']
        },
        # Other layers default to fully connected if not specified
    }

# Spread a layer's nodes evenly along the y-axis at a fixed x offset
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    connections = define_connections()
    G = nx.DiGraph()
    pos = {}

    # Add nodes and assign positions, one column per layer
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position

    # Add edges between consecutive layers based on controlled connections
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        if source_layer in connections:
            for source, targets in connections[source_layer].items():
                for target in targets:
                    # Ignore listed targets that do not belong to the next layer
                    if target in layers[target_layer]:
                        G.add_edge(source, target)
        else:  # Fully connect layers without specific connection rules
            for source in layers[source_layer]:
                for target in layers[target_layer]:
                    G.add_edge(source, target)

    # Build the color list in G.nodes order so colors line up with nodes
    node_colors = [colors.get(node, 'lightgray') for node in G.nodes]

    # Draw the graph
    plt.figure(figsize=(14, 10))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Deep Neural Network", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 15 Change of Guards. In Grand Illusion, Renoir was dealing the final blow to the Ancien Régime. And in Rules of the Game, he was hinting at another change of guards, from agentic mankind to one in a mutualistic bind with machines (unsupervised pianos and supervised airplanes). How prescient!
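The connection rules in the network drawn above work as a pruning step in their own right. The following small sketch counts every directed path from a `World` node to a `Physicality` node, treating each one as a single "path" through the network. That framing is ours, not the author's. The sketch then compares the wired network with a fully connected one that has the same layer sizes. The names `LAYERS`, `RULES`, `build_graph`, and `count_paths` are hypothetical, and the data mirrors the cell above. `count_paths` is the standard dynamic-programming path count over a topological order of a directed acyclic graph.

```python
from math import prod

import networkx as nx

# Layers and restricted wiring mirroring the visualization cell; layers
# without an entry in RULES are fully connected to the next layer.
LAYERS = {
    'World': ['Cosmos', 'Earth', 'Life', 'Cost', 'Parallel', 'Inheritance'],
    'Framework': ['Cosmology', 'Geology', 'Biology', 'Agency', 'Space', 'Time'],
    'Meta': ['Divine', 'Red Queen', 'Machine'],
    'Perception': ['Perception'],
    'Agency': ['Digital-Twin', 'Enterprise'],
    'Generativity': ['Parasitism', 'Mutualism', 'Commensalism'],
    'Physicality': ['Offense', 'Lethality', 'Retreat', 'Immunity', 'Defense'],
}
RULES = {
    'World': {
        'Cosmos': ['Cosmology', 'Geology', 'Biology'],
        'Earth': ['Geology', 'Biology', 'Agency', 'Space', 'Time'],
        'Life': ['Biology', 'Agency'],
        'Cost': ['Agency', 'Inheritance', 'Space', 'Time'],
        'Parallel': ['Space', 'Inheritance', 'Time'],
        'Inheritance': ['Time', 'Machine', 'Space', 'Agency'],
    },
    'Framework': {
        'Cosmology': ['Divine', 'Red Queen'],
        'Geology': ['Red Queen', 'Machine'],
        'Biology': ['Machine', 'Agency', 'Red Queen'],
        'Agency': ['Divine', 'Red Queen', 'Machine'],
        'Space': ['Divine', 'Machine'],
        'Time': ['Machine', 'Red Queen'],
    },
    'Meta': {m: ['Perception'] for m in ['Divine', 'Red Queen', 'Machine']},
}


def build_graph(layers, rules):
    """Wire consecutive layers the same way the visualization cell does."""
    G = nx.DiGraph()
    names = list(layers)
    for name in names:
        G.add_nodes_from(layers[name])
    for src_layer, dst_layer in zip(names, names[1:]):
        if src_layer in rules:
            for src, targets in rules[src_layer].items():
                # Drop listed targets that are not in the next layer
                G.add_edges_from((src, t) for t in targets if t in layers[dst_layer])
        else:
            G.add_edges_from((s, t) for s in layers[src_layer] for t in layers[dst_layer])
    return G


def count_paths(G, sources, sinks):
    """Count source-to-sink paths in a DAG via dynamic programming in topological order."""
    ways = {node: (1 if node in sources else 0) for node in G}
    for node in nx.topological_sort(G):
        for succ in G.successors(node):
            ways[succ] += ways[node]
    return sum(ways[s] for s in sinks)


G = build_graph(LAYERS, RULES)
wired = count_paths(G, set(LAYERS['World']), LAYERS['Physicality'])
dense = prod(len(nodes) for nodes in LAYERS.values())
print(f"wired paths: {wired}, fully connected paths: {dense}")
```

Under these assumptions, the rules keep 1,200 of the 3,240 input-to-output paths that a fully connected network of the same shape would have. The counts rise multiplicatively with every added layer.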