

Analysis

In designing the scenery and costumes...

To imagine music not as a timeline, not as notation, not as a sequence of abstract notes marching obediently across a five-line staff, but instead as a living, breathing tree—this is to relocate it from the cold cathedral of theory into the warm, dark soil of embodiment. It is to give music roots and bark and leaves, to let it whisper and creak, to let it reach downward into unseen depths and upward into light. The metaphor is not cosmetic; it is ontological. Music, when thought of as a tree, becomes something that doesn’t just happen in time but grows in space. Something that shelters other beings. Something that listens back. So if we begin this arboreal metaphor at the bottom, at the root system—where the music begins not in pitch, but in pressure—we are aligning ourselves with the oldest laws of sound. The laws that exist before culture, before notation, before even the idea of music as music. Sound begins in breath, in heartbeat, in atmospheric pressure pushing against membrane and bone. This is where music lives before it becomes beautiful. It is felt before it is understood. It is movement in air, tension in diaphragm, a mother humming to a child before language itself arrives. The root system of music is sub-audible and sub-rational. It is chant in a cave. It is thunder. It is sub-bass in a dark club that bypasses cognition and goes straight to the soma. These roots don’t express—they anchor. They feed from aquifers we will never name: physics, time, biology, trauma, ancestry. These are the unspeakable origins, and without them, nothing above the soil survives.

As vibration rises up from the ground, it begins to take on form. It meets material. It encounters resistance. It starts to resonate. Here, in the trunk of the tree, we move from the universal to the particular. We move from sound to resonance—that moment of recognition, of sympathetic vibration, when the body responds to the sound and says: yes, this too is me. This is not echo; it is agreement. It is the self negotiating with vibration and deciding what to hold, what to amplify, what to ignore. The trunk is thick and specific—it is the body of the cello, the chest of the singer, the architecture of the concert hall, the inner ear of the listener. It is where identity begins to take root in vibration. And this negotiation is never neutral. Resonance is political. Cultural. It reveals which structures can carry which kinds of sound. It reveals what a society is tuned to receive. If resonance is absent, the sound dies. If resonance is abundant, the sound grows, loops back, becomes itself. Identity emerges here not because we say who we are, but because the sound we release is caught and returned by the world around us. No resonance, no self.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network fractal
def define_layers():
    return {
        'Suis': ['Services', 'Agents', 'Principals', 'Risks', 'Data', 'Research'],  # Static
        'Voir': ['Info'],
        'Choisis': ['Digital', 'Analog'],
        'Deviens': ['War', 'Start-ups', 'Ventures'],
        "M'èléve": ['Exile', 'Epistemic', 'Vulnerable', 'Mythic', 'Talent']
    }


# Assign colors to nodes
def assign_colors():
    color_map = {  # Dynamic
        'yellow': ['Info'],
        'paleturquoise': ['Research', 'Analog', 'Ventures', 'Talent'],
        'lightgreen': ['Data', 'Start-ups', 'Epistemic', 'Mythic', 'Vulnerable'],
        'lightsalmon': [
            'Principals', 'Risks', 'Digital',
            'War', 'Exile'
        ],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges (automated for consecutive layers)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=15, connectionstyle="arc3,rad=0.2"
    )
    plt.title("US Research Ecosystem", fontsize=23)

    # ✅ Save the actual image *after* drawing it
    plt.savefig("../figures//us-research-ecosystem.jpeg", dpi=300, bbox_inches='tight')
    # plt.show()


# Run the visualization
visualize_nn()
```

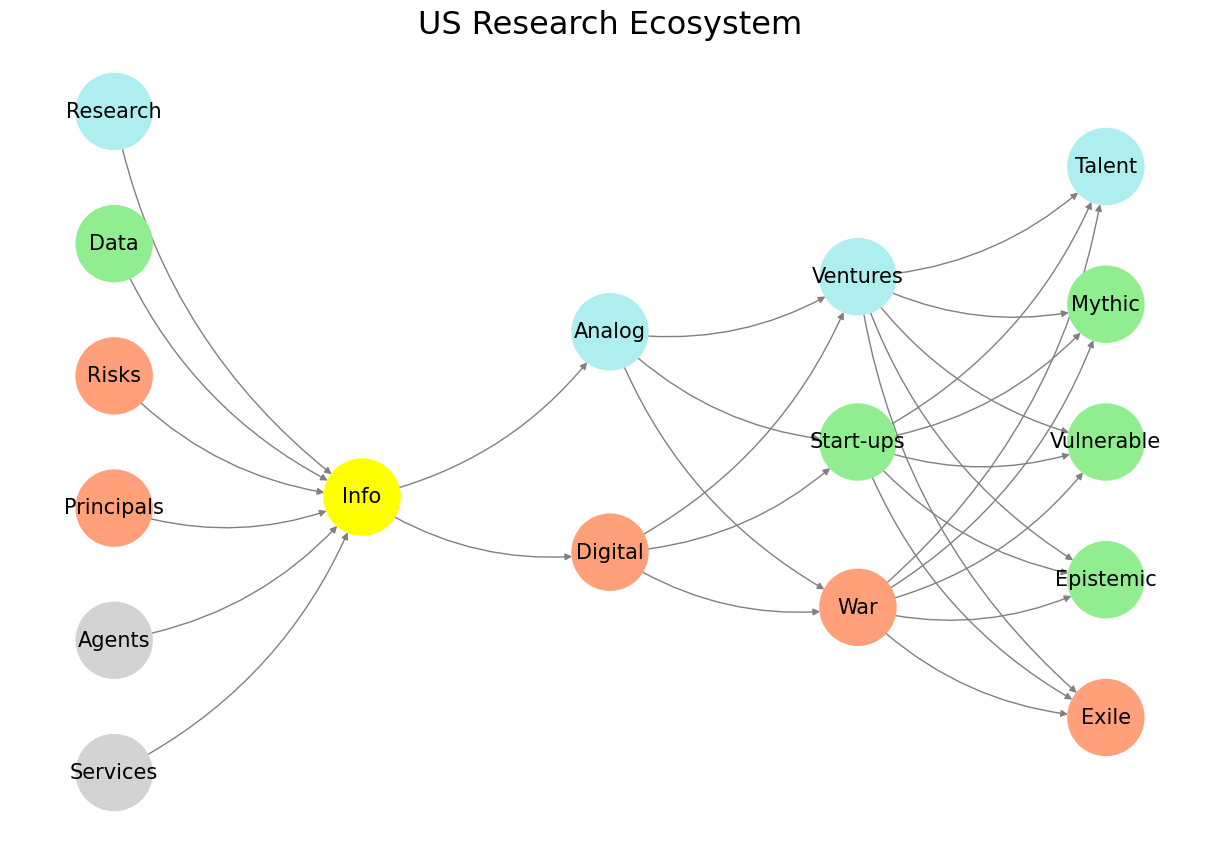

Fig. 3 Perverse Academic Incentives. Government agencies risk $200 billion in R&D per year. Start-ups emerging from this R&D draw in almost dollar-for-dollar venture capital investments. Most technological advancements scale up the most promising of these efforts into peace-time, war-time, and money-time products and implements. But infrastructure is another matter: no one is funding you to build it. NIH doesn’t give R01s for epistemic elegance. It wants data, statistical significance, and a manuscript in NEJM. MyST, however, shines not in raw data but in narrative explanation, cross-domain integration, reproducibility, and transparency. Until reproducibility gets financial incentives, MyST & .ipynb won’t have institutional traction.

As the trunk rises and bifurcates, we move into the branching systems—the binary theater of consonance and dissonance. These aren’t moral judgments. They are spatial relationships. Consonance means: we fit. Dissonance means: not yet. This part of the tree is where music starts to make decisions. It forks. It diverges. It creates expectations and then delays them. The intervals—thirds, fifths, tritones—are not just stacked frequencies, they are social forces. Consonance is the lullaby. Dissonance is the call to war. One seduces, the other provokes. But both are necessary. Without dissonance, music becomes a fossil—dead, static, ornamental. Dissonance keeps the sap moving. It agitates the harmony. It introduces risk. It creates a sense of future. It is not something to be resolved as quickly as possible, but something to be held. It is the necessary edge that keeps the canopy alive. Music with only consonance is a lie. Music with only dissonance is a scream. But music that knows how to move between them—that knows how to bend like a tree in the wind—that is music that lives.
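For readers who want the "stacked frequencies" side of thirds, fifths, and tritones made concrete, here is a minimal sketch. It compares each interval in twelve-tone equal temperament with one commonly cited just-intonation ratio. The tritone has no single agreed ratio, so 45/32 is an assumption, and `roughness_proxy` is a hypothetical, deliberately crude stand-in for dissonance, not an established measure.

```python
import math
from fractions import Fraction

# Semitone count and one commonly cited just-intonation ratio per interval.
# The tritone has no single agreed ratio; 45/32 is an assumption for illustration.
INTERVALS = {
    "major third": (4, Fraction(5, 4)),
    "perfect fifth": (7, Fraction(3, 2)),
    "tritone": (6, Fraction(45, 32)),
}


def cents(ratio):
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(float(ratio))


def roughness_proxy(ratio):
    """Crude, hypothetical proxy: numerator times denominator of the ratio."""
    return ratio.numerator * ratio.denominator


for name, (semitones, just) in INTERVALS.items():
    tempered = 2 ** (semitones / 12)  # twelve-tone equal temperament
    drift = cents(tempered) - cents(just)
    print(f"{name:13s} just={just}  drift={drift:+.2f} cents  proxy={roughness_proxy(just)}")
```

Under these assumptions the fifth has the simplest ratio and the tritone the most tangled one, which is one numerical shadow of "we fit" versus "not yet". It says nothing about how a given culture hears them.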

And then, higher still, in the delicate outcroppings of thought, we find the fractal branches—the recursive zones of ambiguity. Here is where suspensions live. The “sus” chords. The half-promises. The unclosed parentheses of harmony. These chords do not demand resolution; they suggest it, tease it, withhold it. A sus chord is not a fork—it’s a hesitation. It is the voice in the mind that says, “Wait, maybe…” And when these chords are layered, when they repeat within repeats, when they spiral into themselves, you get not structure but texture—a fractal. Jazz lives here. So does Afrobeat. So does any tradition that resists the tyranny of closure. Sus chords are not about being right or wrong; they are about becoming. They allow space for identity to uncoil, revise, renegotiate. This is where the music breathes not just between notes, but within them. Fractal branching is the music of the in-between—the unresolved—where the self is constantly folding back into itself, never done, never fixed.
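The ambiguity of a sus chord can be sketched in pitch-class arithmetic. The example below uses the standard semitone shapes for major, minor, sus2, and sus4 triads. The helper names (`pitch_classes`, `has_third`) are illustrative choices, not taken from any library. It shows two things: a sus chord has no third, so it is neither major nor minor, and a sus4 chord on one root contains the same notes as a sus2 chord a fourth higher.

```python
# Semitone offsets from the root for each triad shape.
CHORD_SHAPES = {
    "major": (0, 4, 7),
    "minor": (0, 3, 7),
    "sus2": (0, 2, 7),
    "sus4": (0, 5, 7),
}


def pitch_classes(root, shape):
    """Return the chord as a set of pitch classes (0 = C, 1 = C#, ... 11 = B)."""
    return frozenset((root + offset) % 12 for offset in CHORD_SHAPES[shape])


def has_third(shape):
    """True if the shape contains a minor (3) or major (4) third above the root."""
    return any(offset in (3, 4) for offset in CHORD_SHAPES[shape])


C, F = 0, 5

# A sus chord withholds the third that would declare it major or minor.
print({shape: has_third(shape) for shape in CHORD_SHAPES})

# Csus4 (C, F, G) and Fsus2 (F, G, C) are the same three notes.
print(pitch_classes(C, "sus4") == pitch_classes(F, "sus2"))
```

The second check is the folding-back in miniature: the same three notes answer to two different roots, depending on which one the ear decides to stand on.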

And above all of this, reaching toward the sky, filtering the light, shading the ground, we arrive at the canopy—the realm of mode. This is not “key.” This is not the technical description of pitch centers. This is world-making. Dorian isn’t just a minor scale with a raised sixth. It is a landscape. It is moss-covered stone and rain that never ends. Mixolydian is swagger and dust. Lydian floats like a dream over a desert. Aeolian collapses inward. Modes are ecosystems. They tell us what kind of creatures can live in a musical space. What kind of gestures are permitted. What kind of emotions can survive. Modes do not just support melody and rhythm—they define what they can be. A tree’s canopy is where photosynthesis happens. Where life bursts into visibility. Where music becomes habitable. The mode is where we live, musically. And just like a forest canopy changes with seasons, with weather, with light, so too can modes shift within a piece. A song may begin in Phrygian and drift slowly toward Ionian. That isn’t modulation—it’s migration. It’s climate change. It’s the tree reacting to wind.
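The technical description that this passage sets aside can still be stated compactly. As a minimal sketch, the seven diatonic modes can be generated by rotating the whole-step and half-step pattern of the major scale. The function name `mode_intervals` is an illustrative choice. The example then checks the claim made above about Dorian: it differs from Aeolian (the natural minor) only in its sixth degree, which sits a semitone higher.

```python
# Semitone steps of the major (Ionian) scale: W W H W W W H
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]

MODE_NAMES = ["Ionian", "Dorian", "Phrygian", "Lydian",
              "Mixolydian", "Aeolian", "Locrian"]


def mode_intervals(name):
    """Return the semitone offsets from the tonic for the named mode."""
    shift = MODE_NAMES.index(name)
    steps = MAJOR_STEPS[shift:] + MAJOR_STEPS[:shift]  # rotate the step pattern
    offsets = [0]
    for step in steps[:-1]:  # the final step only returns to the octave
        offsets.append(offsets[-1] + step)
    return offsets


dorian = mode_intervals("Dorian")
aeolian = mode_intervals("Aeolian")

# Scale degrees (1-based) where the two modes differ.
differing_degrees = [i + 1 for i, (d, a) in enumerate(zip(dorian, aeolian)) if d != a]

print("Dorian: ", dorian)
print("Aeolian:", aeolian)
print("Differing degrees:", differing_degrees)
```

One rotated step pattern yields all seven modes, so a drift from Phrygian toward Ionian can be read as the same set of steps heard from a different starting point.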

The power of this metaphor—the reason we should care—is that it reverses everything. It turns the story upside down. Music doesn’t start at the top, with an idea, with a melody, with some Platonic form of perfection descending from the clouds. Music begins below. It begins in pressure, in touch, in resonance. It grows upward through the layers of self and culture and choice, until it becomes something we can live in. It is not composed—it is grown. Not imposed—it is tended. The musician is not a creator so much as a gardener. A caretaker. A listener. The point is not to tell the tree what song to sing. The point is to listen to the song the tree is already humming, quietly, at the edge of perception. To prune where needed. To water what’s dry. To wait for the blossom, and then let it fall. In this model, music is not just an artifact. It is a living system. And we, if we are lucky, get to be part of its ecology. Not its master, not its audience—but its witness. So the next time you hear a sus chord held just a little too long, or feel your chest resonate to a low C that seems to come from beneath the floor, or find yourself suddenly inside a mode that feels like a memory you never lived—that’s the tree singing. That’s the music reminding you: you are not outside of it. You are inside it. You are in the canopy. Or maybe you’re still in the roots. Either way, listen. It is already happening.