Life ⚓️

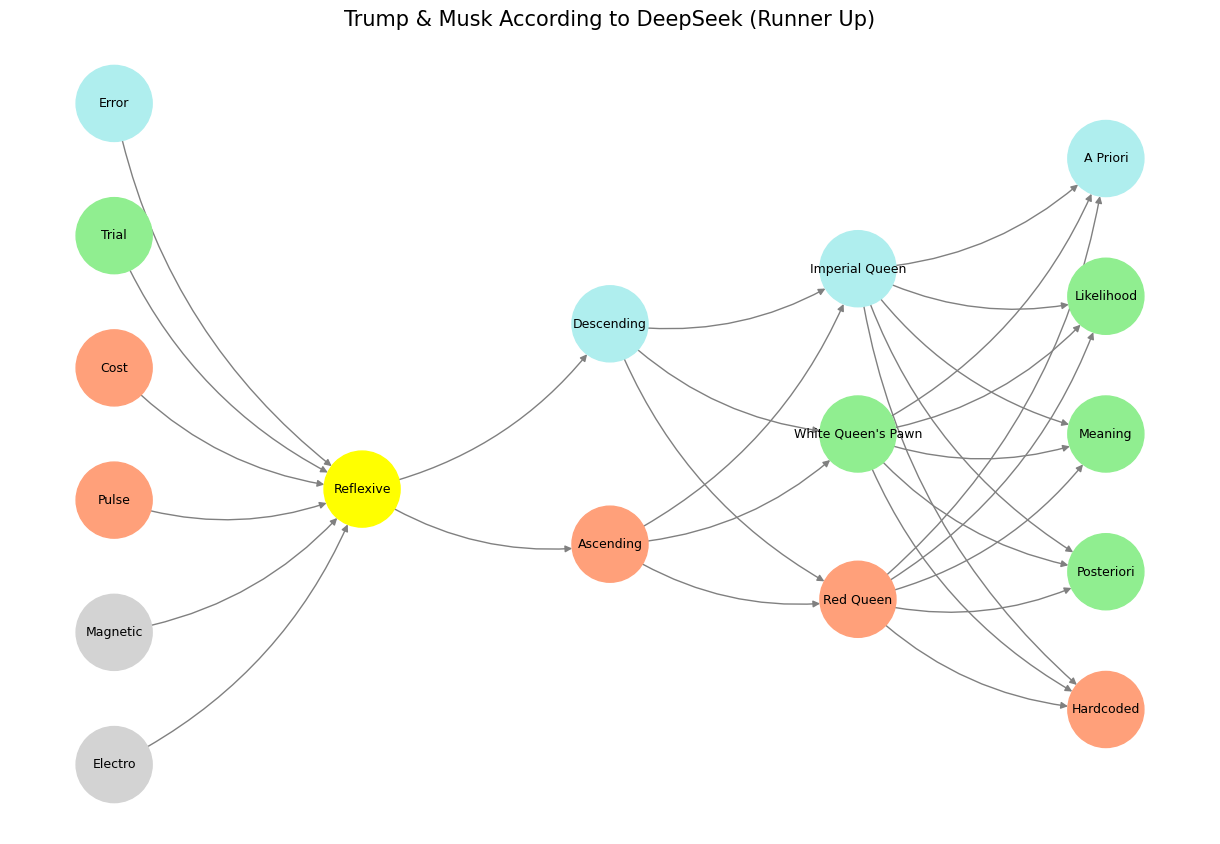

Playing games, at its core, is an exercise in manipulating choice. It is the art of steering the herd, guiding them towards a decision that, while seemingly autonomous, has already been shaped by the parameters of the game itself. The neural network shown below reveals this process with striking clarity: different forces, whether biological, psychological, or social, push individuals toward decisions preordained by structural constraints. The fundamental tension is between two paths: paleturquoise, representing stability, predictability, and parasympathetic calm, and lightsalmon, the domain of adrenaline, urgency, and sympathetic activation. The most skilled game-masters (the bluffers, the snake oil salesmen, the orchestrators of illusion) are those who make these two options seem like the only possible choices.

To drive someone towards lightsalmon, you do not need to argue for its virtues directly. The simplest method is to attack the alternative, painting paleturquoise as lifeless, as stagnation masquerading as security. This is the essence of the gambler’s thrill, the entrepreneur’s high, the revolutionary’s call to arms—an implicit suggestion that those who choose paleturquoise are weak, uninspired, or merely existing rather than living. The trick is in framing the game in such a way that the status quo becomes unbearable, that the idea of predictable stability is made to seem suffocating rather than reassuring. At the biological level, this maps to the fight-or-flight response. The sympathetic nervous system doesn’t need to make a logical case for its dominance; it merely needs to create an existential threat, setting off a cascade of responses that demand movement, urgency, action. In this way, the player is coaxed toward the lightsalmon path—not by attraction, but by repulsion from its alternative.

On the other hand, paleturquoise is often sold through exhaustion. When lightsalmon has run its course—when the gamble fails, when the high fades, when the revolutionary fervor collapses into chaos—there emerges a hunger for the steady, for the predictable, for a return to homeostasis. This is where the game’s architects allow paleturquoise to reassert itself, but always on their terms. The return to stability is conditional. It is not granted freely but offered as a privilege for those who ‘earn’ it, those who have sufficiently burned themselves out in the pursuit of something else. Yet, even this is a controlled decision, framed within a system that prevents true autonomy. The players believe they are resting, recovering, rebuilding, but in reality, they are simply preparing for the next cycle, the next rush, the next manufactured crisis that will once again push them toward the edge.

For those who refuse the binary, there is the illusion of a third option—lightgreen. This is the promise of emergence, of transcendence beyond the artificial duality. But it is never truly granted. The game-masters dangle it as an aspiration, a state that one might reach if they commit fully to either paleturquoise or lightsalmon. Lightgreen is never the immediate choice; it is always deferred, always just beyond reach, contingent on proving one’s worth through previous commitments. This is how ideologies sustain themselves, how religions maintain devotion, how financial speculation cycles between boom and bust. The promise of lightgreen is the great stabilizer, keeping the disillusioned engaged, giving hope that some synthesis might emerge, if only one plays the game long enough.

Oh, what fun it is!

– Alice

The brilliance of this framework is that it requires no coercion. The choices appear natural, organic, self-directed, when in fact they are nothing more than preordained endpoints of a game already rigged. Whether by fear or by fatigue, by adrenaline or by despair, the players are always making a ‘choice’—but never their own.

```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network fractal
def define_layers():
    return {
        'World': ['Electro', 'Magnetic', 'Pulse', 'Cost', 'Trial', 'Error'],  # Veni; 95/5
        'Mode': ['Reflexive'],  # Vidi; 80/20
        'Agent': ['Ascending', 'Descending'],  # Vici; Veni; 51/49
        'Space': ['Red Queen', "White Queen's Pawn", 'Imperial Queen'],  # Vidi; 20/80
        'Time': ['Hardcoded', 'Posteriori', 'Meaning', 'Likelihood', 'A Priori']  # Vici; 5/95
    }


# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Reflexive'],
        'paleturquoise': ['Error', 'Descending', 'Imperial Queen', 'A Priori'],
        'lightgreen': ['Trial', "White Queen's Pawn", 'Likelihood', 'Meaning', 'Posteriori'],
        'lightsalmon': [
            'Pulse', 'Cost', 'Ascending',
            'Red Queen', 'Hardcoded'
        ],
    }
    # Invert the mapping: node name -> color
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Add nodes and assign positions (nodes without a color fall back to lightgray)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges (automated for consecutive layers)
    layer_names = list(layers.keys())
    for i in range(len(layer_names) - 1):
        source_layer, target_layer = layer_names[i], layer_names[i + 1]
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    plt.title("Trump & Musk According to DeepSeek (Runner Up)", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```
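To see how the three paths from the essay are spread across the layers, the color assignments can be tallied per layer. This is only a minimal sketch: the layer and color dictionaries are copied from the cell above so the block runs on its own, and `tally_colors` is a hypothetical helper name, not part of the original code.

```python
from collections import Counter

# Layer and color assignments copied from the visualization cell.
layers = {
    'World': ['Electro', 'Magnetic', 'Pulse', 'Cost', 'Trial', 'Error'],
    'Mode': ['Reflexive'],
    'Agent': ['Ascending', 'Descending'],
    'Space': ['Red Queen', "White Queen's Pawn", 'Imperial Queen'],
    'Time': ['Hardcoded', 'Posteriori', 'Meaning', 'Likelihood', 'A Priori'],
}
color_map = {
    'yellow': ['Reflexive'],
    'paleturquoise': ['Error', 'Descending', 'Imperial Queen', 'A Priori'],
    'lightgreen': ['Trial', "White Queen's Pawn", 'Likelihood', 'Meaning', 'Posteriori'],
    'lightsalmon': ['Pulse', 'Cost', 'Ascending', 'Red Queen', 'Hardcoded'],
}


def tally_colors(layers, color_map):
    """Count how many nodes of each color appear in each layer (hypothetical helper)."""
    node_to_color = {node: color for color, nodes in color_map.items() for node in nodes}
    return {
        # Uncolored nodes get the same 'lightgray' fallback the plot uses.
        layer: Counter(node_to_color.get(node, 'lightgray') for node in nodes)
        for layer, nodes in layers.items()
    }


tally = tally_colors(layers, color_map)
for layer, counts in tally.items():
    print(layer, dict(counts))
```

Under these assignments the Agent layer holds only a lightsalmon node and a paleturquoise node, which fits the essay's point that lightgreen is not offered as an immediate choice.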

Fig. 4 How now, how now? What say the citizens? Now, by the holy mother of our Lord, The citizens are mum, say not a word. Indeed, indeed. When Hercule Poirot predicts the murderer at the end of Death on the Nile, he is, in essence, predicting the “next word” given all the preceding text (a cadence). This mirrors what ChatGPT was trained to do. If compression of the massive combinatorial search space of vast textual data allows for such a prediction, then language itself, the accumulated symbols of humanity from the dawn of time, serves as a map of our collective trials and errors. By retracing these pathways through the labyrinth of history in compressed time, almost instantly, we achieve intelligence and “world knowledge.” Inherited efficiencies are locked in the data, awaiting someone to “ukubona” (see) the lowest ecological cost at which to navigate life’s labyrinth. But a little error and random chaos must be added to go just a little beyond the wisdom of our forebears, since the world isn’t static and we must adapt to it. In biology, mutations are such errors added to the “wisdom” of our forebears encoded in DNA. Life’s final cadence, as suggested most articulately by Dante (inferno, limbo, paradiso), is merely a side effect of optimizing the ecological cost function. Contrary to what Victorian moralists, and to an extent Dante, would have it, the final cadence isn’t everything.
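The caption's claim that a little error must be added to inherited wisdom when the world shifts can be illustrated with a toy model. What follows is a sketch under loud assumptions, not anything from the original page: the quadratic `cost`, the `adapt` function, the optimum values, and the mutation scale are all invented for illustration.

```python
import random


def cost(x, optimum):
    """Toy 'ecological cost': squared distance from the world's current optimum (assumed form)."""
    return (x - optimum) ** 2


def adapt(x, optimum, mutation_scale, steps=200, seed=0):
    """Keep a random mutation only when it lowers the cost (simple hill climbing)."""
    rng = random.Random(seed)
    for _ in range(steps):
        candidate = x + rng.gauss(0.0, mutation_scale)
        if cost(candidate, optimum) < cost(x, optimum):
            x = candidate
    return x


inherited = 0.0    # strategy that was optimal in the forebears' world (assumed)
new_optimum = 3.0  # the world has since shifted (assumed)

no_error = adapt(inherited, new_optimum, mutation_scale=0.0)
with_error = adapt(inherited, new_optimum, mutation_scale=0.5)

print("cost without mutation:", cost(no_error, new_optimum))
print("cost with mutation:   ", cost(with_error, new_optimum))
```

With the mutation scale set to zero, the inherited strategy never moves and stays at the old optimum. With a small amount of noise plus selection, it drifts toward the new optimum.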