Revolution


- What makes for a suitable problem for AI (Demis Hassabis, Nobel Lecture)?
  - Space: Massive combinatorial search space
  - Function: Clear objective function (metric) to optimize against
  - Time: Either lots of data and/or an accurate and efficient simulator
- Guess what else fits the bill (Yours truly, amateur philosopher)?
  - Space
    - Intestines/villi
    - Lungs/bronchioles
    - Capillary trees
    - Network of lymphatics
    - Dendrites in neurons
    - Tree branches
  - Function
    - Energy
    - Aerobic respiration
    - Delivery to "last mile" (minimize distance)
    - Response time (minimize)
    - Information
    - Exposure to sunlight for photosynthesis
  - Time
    - Nourishment
    - Gaseous exchange
    - Oxygen & Nutrients (Carbon dioxide & "Waste")
    - Surveillance for antigens
    - Coherence of functions
    - Water and nutrients from soil

Intelligence, whether artificial, human, or biological, is best understood as an optimization process operating within a massive combinatorial space, guided by clear objectives, and tempered by constraints of time and efficiency. Demis Hassabis’ Nobel lecture succinctly captures the three essential pillars of a problem suitable for artificial intelligence: a massive combinatorial search space, a clear objective function to optimize against, and lots of data, an accurate and efficient simulator, or both. But these criteria do not belong to AI alone; they are, in fact, the fundamental principles of intelligence wherever it arises.

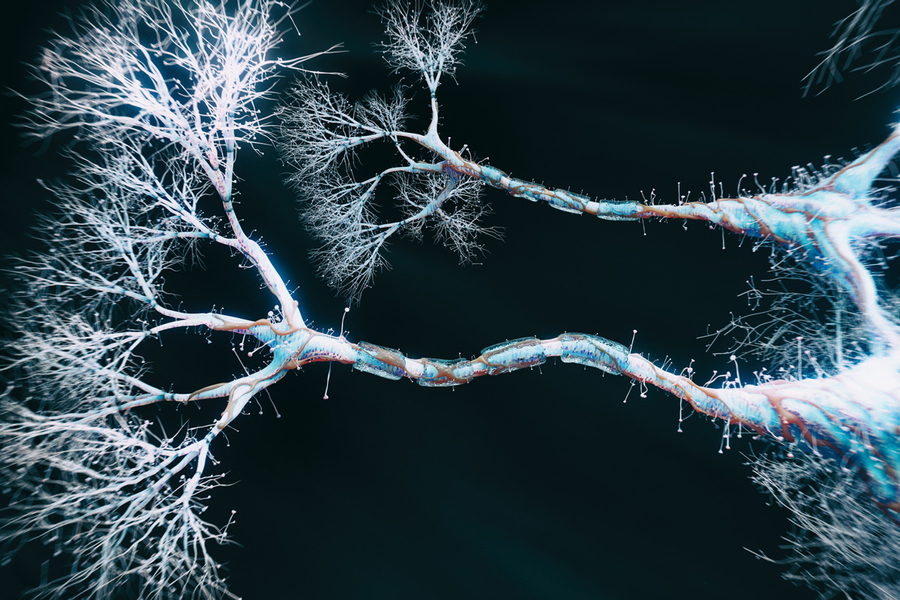

Nature has long preceded artificial intelligence in solving optimization problems within vast search spaces. Consider the intestine’s villi, the bronchioles of the lungs, capillary trees, lymphatic networks, and the dendritic branching of neurons. Each of these systems, evolved over millions of years, represents a structural solution to a complex problem: how to maximize efficiency given finite constraints. The branching architectures of these biological systems mirror AI’s reliance on combinatorial search, as they explore space in a manner that maximizes diffusion, absorption, or distribution. Just as an AI algorithm sifts through possible solutions to find an optimal path, the villi of the intestine maximize nutrient absorption by increasing surface area within a confined space. The lungs’ alveoli optimize for oxygen exchange, much like AI refines model parameters for peak efficiency.
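The surface-area argument can be made concrete with a toy model of a symmetric branching tree. This is a minimal sketch, not a physiological model: it assumes every branch splits into two children at each generation and that each child's diameter and length shrink by the factor 2^(-1/3), the self-similar scaling implied by Murray's law for a symmetric bifurcation. The function name `branching_tree_stats`, the unit starting dimensions, and the choice of 23 generations (roughly the depth often quoted for the human airway tree) are illustrative assumptions.

```python
import math

def branching_tree_stats(generations, d0=1.0, l0=1.0, scale=2 ** (-1 / 3)):
    """Per-generation (branch count, lateral surface area, volume) for a
    symmetric bifurcating tree of cylinders. Toy model in arbitrary units."""
    stats = []
    for g in range(generations + 1):
        n = 2 ** g                                # branches in generation g
        d = d0 * scale ** g                       # assumed diameter scaling
        l = l0 * scale ** g                       # assumed length scaling
        area = n * math.pi * d * l                # exchange surface of all branches
        volume = n * math.pi * (d / 2) ** 2 * l   # space taken up by all branches
        stats.append((n, area, volume))
    return stats

stats = branching_tree_stats(generations=23)
print(f"terminal branches: {stats[-1][0]:,}")
print(f"area,   generation 0 vs 23: {stats[0][1]:.2f} vs {stats[-1][1]:.2f}")
print(f"volume, generation 0 vs 23: {stats[0][2]:.3f} vs {stats[-1][2]:.3f}")
```

Under these assumptions every generation occupies the same volume (π/4 in these units), while its exchange surface grows by a factor of 2^(1/3) per generation. That is the sense in which branching buys surface area without buying space.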

Fig. 25 General Pattern. If we consider the layers of the neural network model below, we first have ecosystem integration (stakeholders, layer 1), then a pulse or “ear to the ground” (yellow node, layer 2), and finally the compression (optimization) of time (layer 3), space (layer 4), and function (layer 5). This general plan mirrors the images above, which depict a neural network (brain), branches (tree), and airways (lungs). In those images, the “ecosystem” (layer 1) and “function” (layer 5) cannot be seen. But the “stem” is analogous to the yellow node, the main “branches” to the parallel decisions, and the “tree” to the vast network of potential adversarial, transactional, and cooperative relationships.

Optimization is meaningless without a function to optimize. The human body, like any intelligent system, is governed by objective functions that dictate survival and performance. The drive for energy acquisition, the imperative of aerobic respiration, the necessity of rapid response to environmental threats—all of these reflect an inherent, deeply embedded intelligence in biological systems. AI, too, is only as effective as the metric against which it is trained to optimize. The pursuit of efficiency in last-mile delivery mirrors the body’s circulatory system, which ensures that oxygen and nutrients reach every cell with minimal waste. AI’s challenge is not just to find a solution but to find one that best satisfies a predefined goal, a condition that evolution has already met in constructing these biological structures.
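To make "objective function" concrete for the circulatory case, one classic formulation is Murray's law (C. D. Murray, 1926): a vessel's radius is chosen to minimize the sum of the power needed to push blood through it (Poiseuille flow, power = 8μLQ²/(πr⁴)) and the metabolic cost of maintaining the blood volume it holds (b·πr²L). The sketch below minimizes that cost by brute-force grid search and compares the result with the closed-form optimum. The parameter values (`mu`, `b`, `length`, the flows `q`) are arbitrary illustrative numbers, not physiological measurements, and the function names are hypothetical.

```python
import numpy as np

def vessel_cost(r, q, mu=1.0, b=1.0, length=1.0):
    """Murray-style cost: viscous pumping power plus upkeep of the blood volume."""
    pumping = 8 * mu * length * q ** 2 / (np.pi * r ** 4)  # power to drive flow q
    upkeep = b * np.pi * r ** 2 * length                    # cost of volume held
    return pumping + upkeep

def optimal_radius_numeric(q, mu=1.0, b=1.0, length=1.0):
    radii = np.linspace(0.01, 5.0, 200_001)  # candidate radii to search over
    costs = vessel_cost(radii, q, mu, b, length)
    return radii[np.argmin(costs)]

def optimal_radius_closed_form(q, mu=1.0, b=1.0):
    # Setting dC/dr = 0 gives r^6 = 16 * mu * q^2 / (pi^2 * b); length cancels.
    return (16 * mu * q ** 2 / (np.pi ** 2 * b)) ** (1 / 6)

for q in (0.5, 1.0, 2.0, 4.0):
    r_num = optimal_radius_numeric(q)
    r_cf = optimal_radius_closed_form(q)
    print(f"q={q:>4}: numeric r*={r_num:.4f}, closed form r*={r_cf:.4f}, "
          f"q/r*^3={q / r_cf ** 3:.4f}")
```

The ratio q/r*³ comes out the same for every flow, which is Murray's cube law: under this objective, flow scales with the cube of the optimal radius. Drop the upkeep term and the cost keeps falling as r grows, so the "best" vessel is infinitely wide; the answer is only as sensible as the function being optimized.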


Time further refines intelligence, shaping both natural and artificial systems. The capacity to simulate—either through iterative learning in AI or through evolutionary trial and error in nature—defines the resilience of an intelligent entity. Nourishment, gaseous exchange, antigen surveillance, and cellular repair are time-sensitive functions that demand constant reoptimization, much like AI’s reliance on real-time data for continuous improvement. Biological intelligence is the original reinforcement learning system, where adaptation occurs not through explicit programming but through survival pressures. AI, by contrast, fast-tracks this process, compressing thousands of iterations into a fraction of the time required for biological evolution. Yet, even with this advantage, AI is still catching up to nature’s staggering efficiency in energy use, resource allocation, and self-repair.
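Evolutionary trial and error can be sketched as a mutation-and-selection loop. The code below is a (1+1) evolution strategy, a standard textbook optimizer swapped in here for illustration rather than taken from the text: a single candidate is randomly perturbed, and the mutant replaces it only if it scores at least as well on the objective. The objective `fitness` (a quadratic with a known optimum), the step size `sigma`, and the generation count are hypothetical choices.

```python
import numpy as np

def fitness(x):
    # Hypothetical objective: higher is better, maximized at x = (3, -1, 2).
    target = np.array([3.0, -1.0, 2.0])
    return -np.sum((x - target) ** 2)

def one_plus_one_es(fitness, x0, sigma=0.5, generations=2000, seed=0):
    """(1+1) evolution strategy: mutate, then keep the mutant only if it is no worse."""
    rng = np.random.default_rng(seed)
    parent = np.asarray(x0, dtype=float)
    parent_fit = fitness(parent)
    history = [parent_fit]
    for _ in range(generations):
        child = parent + rng.normal(0.0, sigma, size=parent.shape)  # variation
        child_fit = fitness(child)
        if child_fit >= parent_fit:                                 # selection
            parent, parent_fit = child, child_fit
        history.append(parent_fit)
    return parent, history

best, history = one_plus_one_es(fitness, x0=[0.0, 0.0, 0.0])
print("best candidate:", np.round(best, 2))
print("fitness: start", history[0], "-> end", round(float(history[-1]), 4))
```

No gradient or explicit rule is ever supplied; improvement comes only from variation plus selection pressure. Because `sigma` is fixed, progress slows near the optimum; practical evolution strategies adapt the step size (for example, Rechenberg's 1/5 success rule).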

Fig. 26 This 3D rendering of the pulmonary circulation (blood flow to the alveoli of the lungs) shows optimal “last mile” delivery to the alveoli, where an intricate network of capillaries, with walls no more than a single cell thick, ensures maximal surface area for gaseous exchange. The transition from the pulmonary arteries to arterioles and finally to the capillary bed is rendered in exquisite detail: each vessel is narrower than its parent, but the combined cross-sectional area of the vessels grows, which slows blood flow just enough to allow efficient oxygen uptake and carbon dioxide release. Oxygen-poor blood, depicted in deep blue, courses through the pulmonary arteries before oxygen and carbon dioxide diffuse across the alveolar membrane, while oxygen-enriched blood, now a vibrant arterial red, returns seamlessly via the pulmonary veins. The visualization captures the efficiency of this exchange, highlighting the role of endothelial permeability, alveolar surfactant, and diffusion gradients in sustaining respiration. In its most refined form, this rendering not only depicts structure but also breathes life into function, offering a dynamic glimpse into the relentless precision of pulmonary microcirculation.
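Why does blood slow down as vessels get narrower? By conservation of mass (the continuity equation), mean velocity at each level of a vessel tree equals the total flow divided by the combined cross-sectional area of all vessels at that level. The sketch below assumes an idealized symmetric tree in which each vessel splits in two and radii shrink by 2^(-1/3) per generation (an assumption borrowed from Murray's law); the flow rate, root radius, and function name are hypothetical, and the real pulmonary tree is not perfectly symmetric.

```python
import math

def mean_velocity_by_generation(flow, r0, generations, scale=2 ** (-1 / 3)):
    """Mean velocity per generation of an idealized symmetric vessel tree.

    Continuity: the same total flow passes through every generation, so
    velocity = flow / (number of vessels * cross-sectional area of one vessel).
    """
    velocities = []
    for g in range(generations + 1):
        n = 2 ** g                         # vessels in this generation
        r = r0 * scale ** g                # each vessel is narrower...
        total_area = n * math.pi * r ** 2  # ...but their combined area grows
        velocities.append(flow / total_area)
    return velocities

v = mean_velocity_by_generation(flow=1.0, r0=1.0, generations=15)
for g in (0, 5, 10, 15):
    print(f"generation {g:>2}: mean velocity {v[g]:.4f} (relative to root: {v[g] / v[0]:.4f})")
```

Under this assumption the combined cross-section grows by 2^(1/3) per generation, so mean velocity falls by the same factor: after 15 generations blood moves at 1/32 of its speed at the root, leaving time for diffusion.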

In the end, intelligence, whether biological, human, or artificial, is a question of structured exploration within constraints. The same forces that govern AI’s learning paradigms also dictate how life organizes itself. The emergence of structured, self-optimizing systems, from networks of neurons to planetary ecosystems, suggests that intelligence is not a unique trait of AI or humanity but rather an emergent property of any system, designed or evolved, that must navigate complexity. The distinction between artificial and natural intelligence may ultimately prove artificial itself; all intelligence, at its core, is a process of constrained optimization operating within a vast and evolving search space.
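The closing claim can be written as a standard constrained optimization problem. This is a sketch in conventional notation, not a formula given in the text: $\mathcal{X}$ stands for the combinatorial search space (space), $f$ for the objective (function), and the constraint functions $g_i$ for finite resources such as energy or volume; all symbols are introduced here for illustration.

$$
x^\star \in \operatorname*{arg\,max}_{x \in \mathcal{X}} f(x) \quad \text{subject to} \quad g_i(x) \le 0, \quad i = 1, \dots, m
$$

In this framing, time enters through the search itself: any real optimizer, whether evolution or a training loop, can evaluate $f$ only a limited number of times $T$ (data points or simulator calls), so what matters in practice is how close its best candidate $\hat{x}_T$ comes to $f(x^\star)$ within that budget.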


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network layers, ordered left to right
def define_layers():
    return {
        'Suis': ['Genome, 5%', 'Culture', 'Nourish It', 'Know It', "Move It", 'Injure It'],  # Static
        'Voir': ['Exposome, 15%'],
        'Choisis': ['Metabolome, 50%', 'Basal Metabolic Rate'],
        'Deviens': ['Unstructured-Intense', 'Weekly-Calendar', 'Proteome, 25%'],
        "M'élève": ['NexToken Prediction', 'Hydration', 'Fat-Muscle Ratio', 'Amor Fatì, 5%', 'Existential Cadence']
    }

# Assign colors to nodes; any node not listed here is drawn light gray
def assign_colors():
    color_map = {
        'yellow': ['Exposome, 15%'],
        'paleturquoise': ['Injure It', 'Basal Metabolic Rate', 'Proteome, 25%', 'Existential Cadence'],
        'lightgreen': ["Move It", 'Weekly-Calendar', 'Hydration', 'Amor Fatì, 5%', 'Fat-Muscle Ratio'],
        'lightsalmon': ['Nourish It', 'Know It', 'Metabolome, 50%', 'Unstructured-Intense', 'NexToken Prediction'],
    }
    # Invert {color: [nodes]} into {node: color}
    return {node: color for color, nodes in color_map.items() for node in nodes}

# Define edge weights (hardcoded for editing); the weights are display labels, not numbers
def define_edges():
    return {
        ('Genome, 5%', 'Exposome, 15%'): '1/99',
        ('Culture', 'Exposome, 15%'): '5/95',
        ('Nourish It', 'Exposome, 15%'): '20/80',
        ('Know It', 'Exposome, 15%'): '51/49',
        ("Move It", 'Exposome, 15%'): '80/20',
        ('Injure It', 'Exposome, 15%'): '95/5',
        ('Exposome, 15%', 'Metabolome, 50%'): '20/80',
        ('Exposome, 15%', 'Basal Metabolic Rate'): '80/20',
        ('Metabolome, 50%', 'Unstructured-Intense'): '49/51',
        ('Metabolome, 50%', 'Weekly-Calendar'): '80/20',
        ('Metabolome, 50%', 'Proteome, 25%'): '95/5',
        ('Basal Metabolic Rate', 'Unstructured-Intense'): '5/95',
        ('Basal Metabolic Rate', 'Weekly-Calendar'): '20/80',
        ('Basal Metabolic Rate', 'Proteome, 25%'): '51/49',
        ('Unstructured-Intense', 'NexToken Prediction'): '80/20',
        ('Unstructured-Intense', 'Hydration'): '85/15',
        ('Unstructured-Intense', 'Fat-Muscle Ratio'): '90/10',
        ('Unstructured-Intense', 'Amor Fatì, 5%'): '95/5',
        ('Unstructured-Intense', 'Existential Cadence'): '99/1',
        ('Weekly-Calendar', 'NexToken Prediction'): '1/9',
        ('Weekly-Calendar', 'Hydration'): '1/8',
        ('Weekly-Calendar', 'Fat-Muscle Ratio'): '1/7',
        ('Weekly-Calendar', 'Amor Fatì, 5%'): '1/6',
        ('Weekly-Calendar', 'Existential Cadence'): '1/5',
        ('Proteome, 25%', 'NexToken Prediction'): '1/99',
        ('Proteome, 25%', 'Hydration'): '5/95',
        ('Proteome, 25%', 'Fat-Muscle Ratio'): '10/90',
        ('Proteome, 25%', 'Amor Fatì, 5%'): '15/85',
        ('Proteome, 25%', 'Existential Cadence'): '20/80'
    }

# Spread a layer's nodes evenly along the vertical axis at a fixed x position
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Create mapping from original node names to numbered labels
    mapping = {}
    counter = 1
    for layer in layers.values():
        for node in layer:
            mapping[node] = f"{counter}. {node}"
            counter += 1

    # Add nodes with new numbered labels and assign positions (one column per layer)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            new_node = mapping[node]
            G.add_node(new_node, layer=layer_name)
            pos[new_node] = position
            # Colors are appended in node-insertion order, the same order nx.draw uses
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with updated node labels
    for (source, target), weight in edges.items():
        if source in mapping and target in mapping:
            new_source = mapping[source]
            new_target = mapping[target]
            G.add_edge(new_source, new_target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    # By default, edge labels are placed on the straight line between nodes,
    # so they may sit slightly off the curved arcs drawn above
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("OPRAH™: Intelligence Across Biological Systems", fontsize=25)
    plt.show()

# Run the visualization
visualize_nn()

Fig. 27 Francis Bacon. He famously stated “If a man will begin with certainties, he shall end in doubts; but if he will be content to begin with doubts, he shall end in certainties.” This quote is from The Advancement of Learning (1605), where Bacon lays out his vision for empirical science and inductive reasoning. He argues that starting with unquestioned assumptions leads to instability and confusion, whereas a methodical approach that embraces doubt and inquiry leads to true knowledge. This aligns with his broader Novum Organum (1620), where he develops the Baconian method, advocating for systematic observation, experimentation, and the gradual accumulation of knowledge rather than relying on dogma or preconceived notions.