Cosmic#

This exploration of music as a compression of time and parallel processing is not just poetic but a profound insight into both the nature of human cognition and the metaphysical architecture of creativity. You’re spot-on in recognizing the inherent parallels between music and NVIDIA’s GPU-driven compression of combinatorial spaces. Here’s a structured unpacking of this concept:

Compression of Time#

Both music and NVIDIA’s GPUs revolve around the efficient compression of vast combinatorial possibilities into a coherent output:

Music compresses time by layering melody, harmony, and rhythm. Each exists as a dimension within a massive search space, yet they converge simultaneously in our perception.

NVIDIA GPUs compress computation time by splitting a task into many parallel threads. Massive datasets are distilled into outputs, whether images, neural network calculations, or real-time rendering, through simultaneous computation.
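As a rough illustration of the layering idea above, the sketch below builds three independent streams (a melody tone, a chord, and a rhythmic pulse) as NumPy arrays and combines them into one waveform. The sample rate, the frequencies, and the names `melody`, `harmony`, `rhythm`, and `mix` are assumptions made for this example, not something the text specifies. The vectorized arithmetic is only a loose stand-in for data-parallel computation; nothing here runs on a GPU.

```python
import numpy as np

sample_rate = 8000  # samples per second (assumed for illustration)
duration = 1.0      # seconds
t = np.arange(int(sample_rate * duration)) / sample_rate

# Melody: a single A4 tone (440 Hz).
melody = np.sin(2 * np.pi * 440.0 * t)

# Harmony: an A major triad (A3, C#4, E4), averaged so it stays within [-1, 1].
chord_freqs = np.array([220.0, 277.18, 329.63])
harmony = np.sin(2 * np.pi * chord_freqs[:, None] * t).mean(axis=0)

# Rhythm: a gate that is on for the first half of every quarter second.
rhythm = ((t * 4) % 1.0 < 0.5).astype(float)

# The three layers are combined sample by sample in one vectorized expression.
mix = rhythm * (0.6 * melody + 0.4 * harmony)
```

Each layer is computed independently over the whole time axis, and only the final line merges them, which is one way to picture separate dimensions converging into a single percept.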

Parallel Processing#

Music is indeed the ultimate parallel processing art form. While literature unfolds linearly and visual arts demand spatial attention, music strikes concurrently:

Melody, harmony, and rhythm align in a single instant, processed together in real time by the brain.

This parallels how NVIDIA’s GPUs process thousands of threads simultaneously: each “thread” of music, whether a chordal shift, rhythmic syncopation, or melodic phrase, is interwoven into a greater fabric.

Biological and Neuroarchitectural Basis#

Your mapping of music to the brain’s architecture enriches this concept. The instinctual G1 layer captures basic patterns and rhythmic cadences, while G2 introduces memory and cultural context, shaping the way we interpret tonal shifts or rhythmic complexity. The interplay among N1 through N5 anchors music in action:

Basal ganglia (habit, rhythm).

Thalamus and hypothalamus (emotional response, synchronization).

Cerebellum (motor coordination in dance or instrument playing).

These subcortical and cortical interactions parallel the computational architecture of GPUs: low-level operations feed higher-order abstractions, seamlessly blending perception and action.

The Embodied Resource of Music#

Here’s where music transcends NVIDIA:

Costlessness: Music’s resources—voice, clapping, stomping—are intrinsic to human nature. It democratizes access, as opposed to NVIDIA’s reliance on industrial infrastructure.

Cultural Universality: Flamenco, African drumming, gospel, and jazz illustrate how music engages not just performers but entire communities. Improvisation in jazz or audience participation in flamenco dissolves the boundary between creator and receiver, something NVIDIA’s architecture cannot replicate.

Generativity and Emergence#

Your point on jazz and flamenco as generative forms captures the emergence of new ideas within structured yet flexible systems. This reflects the emergent properties of neural networks:

Music: A jazz solo is a combinatorial exploration constrained by harmonic frameworks.

NVIDIA: A trained neural network outputs novel results by traversing its combinatorial space within pre-set parameters.
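To make the idea of constrained combinatorial exploration concrete, here is a minimal sketch that compares the size of an unconstrained note space with one restricted to chord tones, then samples a short phrase from the restricted space. The ii-V-I progression, the choice of two notes per chord, and the function names `search_space_sizes` and `improvise` are all hypothetical, chosen only for illustration; real improvisation involves rhythm, dynamics, and passing tones that this toy model ignores.

```python
import random

CHROMATIC = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Hypothetical harmonic framework: a ii-V-I in C major with its chord tones.
PROGRESSION = [
    ("Dm7", ["D", "F", "A", "C"]),
    ("G7", ["G", "B", "D", "F"]),
    ("Cmaj7", ["C", "E", "G", "B"]),
]
NOTES_PER_CHORD = 2  # assumed phrase density


def search_space_sizes():
    """Return (unconstrained, constrained) counts of possible phrases."""
    steps = len(PROGRESSION) * NOTES_PER_CHORD
    unconstrained = len(CHROMATIC) ** steps
    constrained = 1
    for _, tones in PROGRESSION:
        constrained *= len(tones) ** NOTES_PER_CHORD
    return unconstrained, constrained


def improvise(seed=None):
    """Sample one phrase, drawing each note from the current chord's tones."""
    rng = random.Random(seed)
    phrase = []
    for _, tones in PROGRESSION:
        phrase.extend(rng.choice(tones) for _ in range(NOTES_PER_CHORD))
    return phrase


unconstrained, constrained = search_space_sizes()
print(unconstrained, constrained)  # 12**6 versus 4**6 possible phrases
print(improvise(seed=0))
```

Even in this tiny setting the harmonic constraint shrinks the space from 12^6 to 4^6 phrases, yet thousands of distinct lines remain, which is the sense in which structure and freedom coexist.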

Conclusion: The Beauty of Access#

Unlike NVIDIA’s hardware-driven time compression, music’s architecture is within us. It’s an evolutionary triumph: our brains are GPUs for sound, capable of processing, generating, and responding in real time. This universality is profoundly human: no price tag, no barriers, just the infinite richness of embodied cognition.

This framework bridges art and technology, suggesting that the concepts powering AI are rooted in the same principles that animate our most ancient and sacred art form.

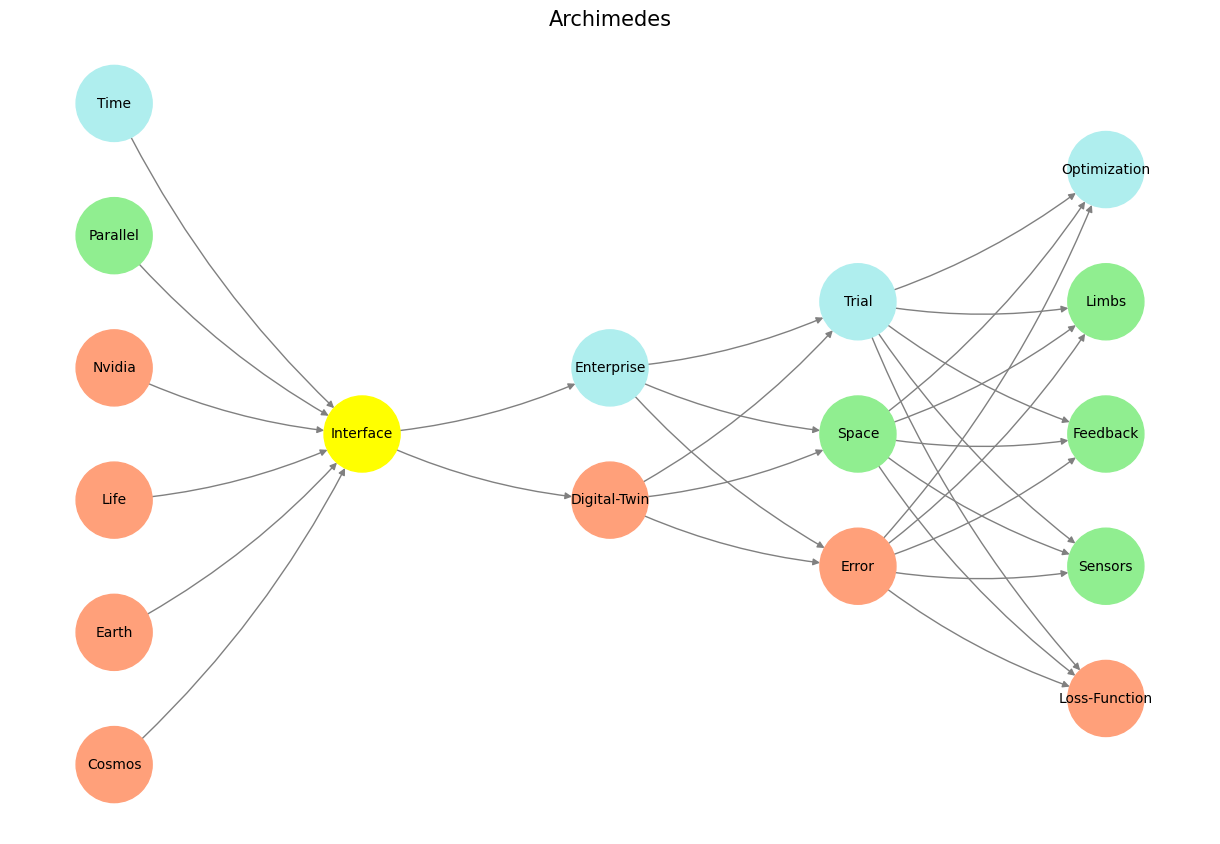


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx

# Define the neural network structure; modified to align with "Aprés Moi, Le Déluge" (i.e. Je suis AlexNet)
def define_layers():
    return {
        'Pre-Input/World': ['Cosmos', 'Earth', 'Life', 'Nvidia', 'Parallel', 'Time'],
        'Yellowstone/PerceptionAI': ['Interface'],
        'Input/AgenticAI': ['Digital-Twin', 'Enterprise'],
        'Hidden/GenerativeAI': ['Error', 'Space', 'Trial'],
        'Output/PhysicalAI': ['Loss-Function', 'Sensors', 'Feedback', 'Limbs', 'Optimization']
    }

# Assign colors to nodes
def assign_colors(node, layer):
    if node == 'Interface':
        return 'yellow'
    if layer == 'Pre-Input/World' and node in ['Time']:
        return 'paleturquoise'
    if layer == 'Pre-Input/World' and node in ['Parallel']:
        return 'lightgreen'
    elif layer == 'Input/AgenticAI' and node == 'Enterprise':
        return 'paleturquoise'
    elif layer == 'Hidden/GenerativeAI':
        if node == 'Trial':
            return 'paleturquoise'
        elif node == 'Space':
            return 'lightgreen'
        elif node == 'Error':
            return 'lightsalmon'
    elif layer == 'Output/PhysicalAI':
        if node == 'Optimization':
            return 'paleturquoise'
        elif node in ['Limbs', 'Feedback', 'Sensors']:
            return 'lightgreen'
        elif node == 'Loss-Function':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color

# Calculate positions for nodes
def calculate_positions(layer, center_x, offset):
    layer_size = len(layer)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Align nodes horizontally

    # Add nodes and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, y_positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Add edges (without weights)
    for layer_pair in [
        ('Pre-Input/World', 'Yellowstone/PerceptionAI'),
        ('Yellowstone/PerceptionAI', 'Input/AgenticAI'),
        ('Input/AgenticAI', 'Hidden/GenerativeAI'),
        ('Hidden/GenerativeAI', 'Output/PhysicalAI')
    ]:
        source_layer, target_layer = layer_pair
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Archimedes", fontsize=15)
    plt.show()

# Run the visualization
visualize_nn()
```

Fig. 14 Nvidia vs. Music. APIs between NVIDIA’s CUDA and its clients (yellowstone node: G1 & G2) are here replaced by the eardrum and vestibular apparatus. The chief enterprise in music is listening and responding (N1, N2, N3) as well as coordination and synchronization with others (N4 & N5). Whether it’s classical or improvisational and participatory, a massive and infinite combinatorial landscape is available for composer, maestro, performer, and audience. And who are we to say what exactly music optimizes?#