Born to Etiquette

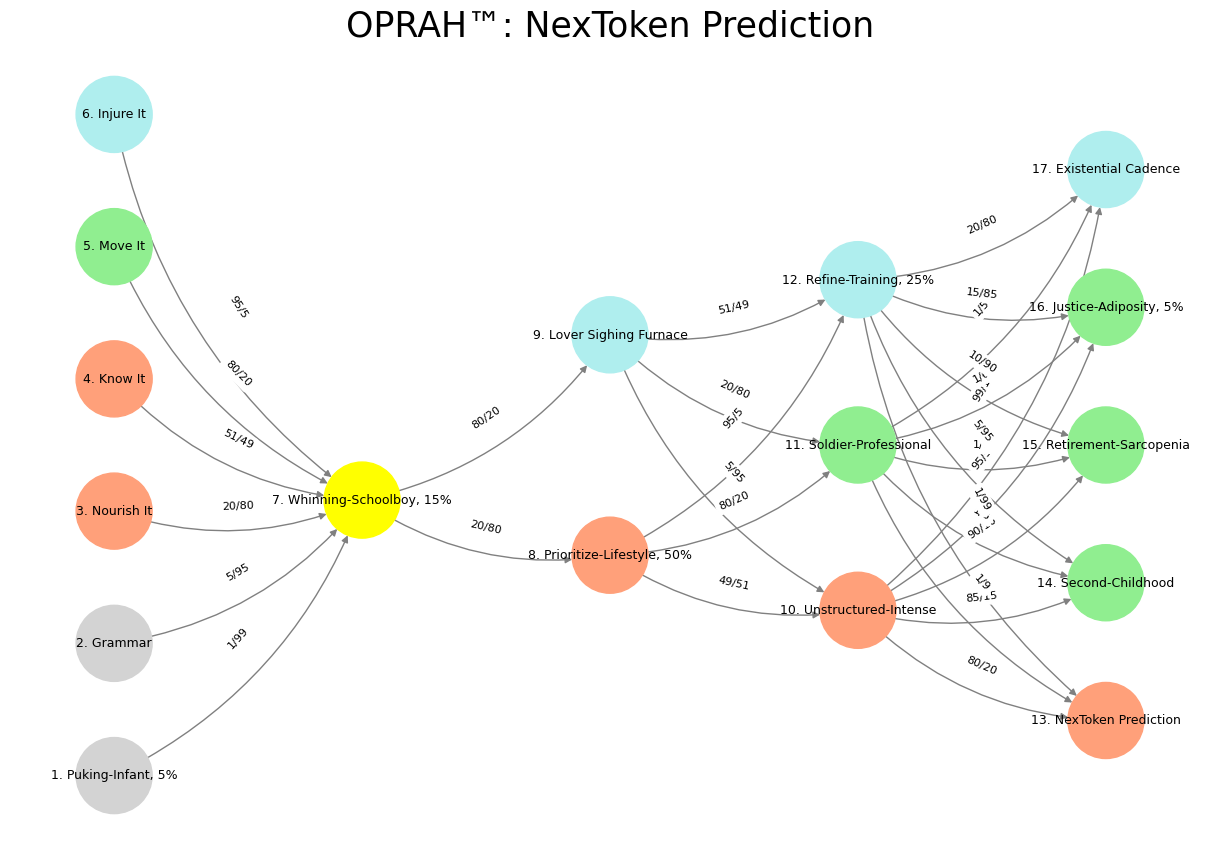

The concept of “next token prediction” in the neural network model below is not merely a computational mechanism but a structural pathway that echoes the life cycle of decision-making, development, and decline. The model organizes human progression into five stages (Suis, Voir, Choisis, Deviens, and M’élève), each acting as a layer that funnels inputs and constraints into progressively narrower pathways. At its core, the structure represents the probabilistic evolution of choices, with weights on transitions that define the likelihood of particular developmental arcs. The presence of “NexToken Prediction” in the final stage, M’élève, signals that at this point predictive modeling is not simply about continuation but about existential cadence: a summation of preceding probabilities manifesting in an act of final resolution.

In classical language-modeling architectures, next token prediction is autoregressive: each token is drawn from a probability distribution conditioned on the preceding context, and a Markov model is the special case in which that context is truncated to the most recent tokens. Within this model, however, token prediction is not merely syntactic but ontological; it forecasts the existential trajectory of an agent as it moves through its networked life. The earlier stages of development, from “Puking-Infant” to “Whining-Schoolboy” and “Lover Sighing Furnace,” introduce stochasticity through varied edges and weights, allowing for iterative updates in identity. Each choice, whether prioritizing lifestyle or embracing unstructured intensity, acts like a backpropagation step, subtly reweighting the likelihood of reaching distinct terminal states. The edge from “Refine-Training, 25%” to “NexToken Prediction” carries the minimal weight of 1/99, compared with 1/9 from “Soldier-Professional” and 80/20 from “Unstructured-Intense.” This reflects the low probability of controlled refinement leading directly to pure prediction and suggests that lived experience (whether structured or chaotic) plays a heavier role in determining final outcomes.
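For contrast with the ontological reading above, the conventional, purely syntactic sense of next token prediction can be sketched with a first-order (bigram) Markov model, in which the next token depends only on the current one. This is a minimal illustration, not part of the original model: the function names `train_bigram` and `next_token_distribution` and the toy token sequence are assumptions made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_distribution(counts, prev):
    """Turn the follow-up counts for `prev` into conditional probabilities."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


# Toy sequence invented for illustration (not data from the original model).
tokens = ("infant schoolboy lover soldier justice "
          "infant schoolboy lover soldier pantaloon").split()

counts = train_bigram(tokens)
after_lover = next_token_distribution(counts, "lover")      # one continuation only
after_soldier = next_token_distribution(counts, "soldier")  # two equally likely continuations
print(after_lover, after_soldier)
```

In this toy sequence “lover” is always followed by “soldier,” while “soldier” splits evenly between two continuations, which is the narrow, local sense of prediction that the essay's model extends.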

The color encoding within the network (yellow, paleturquoise, lightgreen, and lightsalmon) introduces an additional layer of symbolic processing that is presumably meant to align with neurobiological and cognitive states. The mapping of lightsalmon to both “Nourish It” and “NexToken Prediction” suggests that the foundational act of sustenance and the terminal act of foresight share an intrinsic emotional or hormonal underpinning. Meanwhile, the weights on the edges leaving “Unstructured-Intense,” which rise from 80/20 toward “NexToken Prediction” to 99/1 toward “Existential Cadence,” imply that moments of chaotic experience fundamentally shape end-of-life interpretability. This structure mirrors the way recurrent neural networks encode long-term dependencies, where earlier life experiences continue to influence the predictive model well into later stages, even as new inputs are processed.
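The recurrent-network analogy can be made concrete with a scalar recurrence in which a single early input keeps influencing the hidden state after several later, empty steps. This is only a sketch of the general idea: the function `run_rnn`, the parameters `w` and `u`, and the input sequences are assumptions chosen for illustration and do not appear in the original code.

```python
import math


def run_rnn(inputs, w=0.9, u=1.0):
    """Scalar recurrent update h_t = tanh(w * h_{t-1} + u * x_t), with h_0 = 0.

    `w` (recurrent weight) and `u` (input weight) are illustrative values.
    """
    h = 0.0
    for x in inputs:
        h = math.tanh(w * h + u * x)
    return h


# Two hypothetical "lives" that differ only in their first input.
with_early_event = run_rnn([1.0, 0.0, 0.0, 0.0, 0.0])
without_early_event = run_rnn([0.0, 0.0, 0.0, 0.0, 0.0])
print(with_early_event, without_early_event)
```

The final state of the first sequence stays positive even though its last four inputs are zero, while the second sequence never leaves zero. The early input fades but does not vanish, which is the behavior the paragraph above attributes to early life experience.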

What makes this network distinct from standard next-token architectures is its explicit acknowledgment of justice, injury, and training as key determinants in final predictions. The presence of “Justice-Adiposity, 5%” in the terminal M’élève stage, grouped under the same lightgreen color as “Second-Childhood” and “Retirement-Sarcopenia,” suggests an interplay between moral weight and physical decline, positioning the final prediction as one constrained not just by probability but by a broader cadence of existential meaning. In other words, next-token prediction in this model does not simply forecast words or actions; it forecasts the culmination of lived probability weighted by systemic constraints.

This framing transforms the predictive process from a passive exercise in probability into an active statement about human trajectory. The weights assigned to different pathways illustrate a reality where certain choices all but guarantee specific outcomes, while others maintain fluidity. The compression of youth into deterministic pathways, juxtaposed against the expanded variance of aging, reflects an underlying principle: early structured decisions may narrow the possible end states, while periods of unstructured experience keep multiple pathways open. This directly contradicts the simplistic notion that all stages of life are equally open to rewriting; rather, certain developmental phases serve as strong priors that condition the statistical boundaries of later stages.
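To see how pathway weights compound, one can multiply edge weights along a route through the network. The sketch below rests on a loudly stated assumption: it reads each edge label `a/b` as the share `a / (a + b)`, an interpretation the original code never specifies. The outgoing shares of a node do not sum to 1 under this reading, so the product is an unnormalized path score rather than a true probability. The helper names `label_to_share` and `path_score` are hypothetical; the edge labels are copied from `define_edges()` in this section.

```python
from math import prod


def label_to_share(label):
    """Read an 'a/b' edge label as a / (a + b). This reading is an assumption."""
    a, b = (float(part) for part in label.split("/"))
    return a / (a + b)


# A subset of the edge labels from define_edges().
EDGES = {
    ("Whinning-Schoolboy, 15%", "Prioritize-Lifestyle, 50%"): "20/80",
    ("Whinning-Schoolboy, 15%", "Lover Sighing Furnace"): "80/20",
    ("Prioritize-Lifestyle, 50%", "Refine-Training, 25%"): "95/5",
    ("Lover Sighing Furnace", "Unstructured-Intense"): "5/95",
    ("Refine-Training, 25%", "NexToken Prediction"): "1/99",
    ("Unstructured-Intense", "NexToken Prediction"): "80/20",
}


def path_score(path):
    """Multiply the assumed shares of consecutive edges along `path`."""
    return prod(label_to_share(EDGES[(u, v)]) for u, v in zip(path, path[1:]))


structured = ["Whinning-Schoolboy, 15%", "Prioritize-Lifestyle, 50%",
              "Refine-Training, 25%", "NexToken Prediction"]
unstructured = ["Whinning-Schoolboy, 15%", "Lover Sighing Furnace",
                "Unstructured-Intense", "NexToken Prediction"]

print(path_score(structured), path_score(unstructured))
```

Under this reading the refined route scores roughly 0.0019 and the unstructured route roughly 0.032, so the unstructured route into “NexToken Prediction” outweighs the refined one by more than an order of magnitude.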

Thus, next-token prediction within this network is not merely a computational exercise—it is a reflection of existential inevitability. The weight distributions and transitions encode a lived reality where early constraints manifest as later determinism, and only through adversarial, iterative, or cooperative recalibrations can one meaningfully shift their terminal trajectory. This model ultimately does not predict tokens in isolation but anticipates the evolving constraints of human experience itself, making each transition a probabilistic statement about life’s structured chaos.


```python
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the neural network layers
def define_layers():
    return {
        'Suis': ['Puking-Infant, 5%', 'Grammar', 'Nourish It', 'Know It', "Move It", 'Injure It'],  # Static
        'Voir': ['Whinning-Schoolboy, 15%'],
        'Choisis': ['Prioritize-Lifestyle, 50%', 'Lover Sighing Furnace'],
        'Deviens': ['Unstructured-Intense', 'Soldier-Professional', 'Refine-Training, 25%'],
        "M'èléve": ['NexToken Prediction', 'Second-Childhood', 'Retirement-Sarcopenia', 'Justice-Adiposity, 5%', 'Existential Cadence']
    }


# Assign colors to nodes
def assign_colors():
    color_map = {
        'yellow': ['Whinning-Schoolboy, 15%'],
        'paleturquoise': ['Injure It', 'Lover Sighing Furnace', 'Refine-Training, 25%', 'Existential Cadence'],
        'lightgreen': ["Move It", 'Soldier-Professional', 'Second-Childhood', 'Justice-Adiposity, 5%', 'Retirement-Sarcopenia'],
        'lightsalmon': ['Nourish It', 'Know It', 'Prioritize-Lifestyle, 50%', 'Unstructured-Intense', 'NexToken Prediction'],
    }
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing)
def define_edges():
    return {
        ('Puking-Infant, 5%', 'Whinning-Schoolboy, 15%'): '1/99',
        ('Grammar', 'Whinning-Schoolboy, 15%'): '5/95',
        ('Nourish It', 'Whinning-Schoolboy, 15%'): '20/80',
        ('Know It', 'Whinning-Schoolboy, 15%'): '51/49',
        ("Move It", 'Whinning-Schoolboy, 15%'): '80/20',
        ('Injure It', 'Whinning-Schoolboy, 15%'): '95/5',
        ('Whinning-Schoolboy, 15%', 'Prioritize-Lifestyle, 50%'): '20/80',
        ('Whinning-Schoolboy, 15%', 'Lover Sighing Furnace'): '80/20',
        ('Prioritize-Lifestyle, 50%', 'Unstructured-Intense'): '49/51',
        ('Prioritize-Lifestyle, 50%', 'Soldier-Professional'): '80/20',
        ('Prioritize-Lifestyle, 50%', 'Refine-Training, 25%'): '95/5',
        ('Lover Sighing Furnace', 'Unstructured-Intense'): '5/95',
        ('Lover Sighing Furnace', 'Soldier-Professional'): '20/80',
        ('Lover Sighing Furnace', 'Refine-Training, 25%'): '51/49',
        ('Unstructured-Intense', 'NexToken Prediction'): '80/20',
        ('Unstructured-Intense', 'Second-Childhood'): '85/15',
        ('Unstructured-Intense', 'Retirement-Sarcopenia'): '90/10',
        ('Unstructured-Intense', 'Justice-Adiposity, 5%'): '95/5',
        ('Unstructured-Intense', 'Existential Cadence'): '99/1',
        ('Soldier-Professional', 'NexToken Prediction'): '1/9',
        ('Soldier-Professional', 'Second-Childhood'): '1/8',
        ('Soldier-Professional', 'Retirement-Sarcopenia'): '1/7',
        ('Soldier-Professional', 'Justice-Adiposity, 5%'): '1/6',
        ('Soldier-Professional', 'Existential Cadence'): '1/5',
        ('Refine-Training, 25%', 'NexToken Prediction'): '1/99',
        ('Refine-Training, 25%', 'Second-Childhood'): '5/95',
        ('Refine-Training, 25%', 'Retirement-Sarcopenia'): '10/90',
        ('Refine-Training, 25%', 'Justice-Adiposity, 5%'): '15/85',
        ('Refine-Training, 25%', 'Existential Cadence'): '20/80'
    }


# Calculate positions for nodes
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural network graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()
    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Create mapping from original node names to numbered labels
    mapping = {}
    counter = 1
    for layer in layers.values():
        for node in layer:
            mapping[node] = f"{counter}. {node}"
            counter += 1

    # Add nodes with new numbered labels and assign positions
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            new_node = mapping[node]
            G.add_node(new_node, layer=layer_name)
            pos[new_node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges with updated node labels
    for (source, target), weight in edges.items():
        if source in mapping and target in mapping:
            new_source = mapping[source]
            new_target = mapping[target]
            G.add_edge(new_source, new_target, weight=weight)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    edges_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edges_labels, font_size=8)
    plt.title("OPRAH™: NexToken Prediction", fontsize=25)
    plt.show()


# Run the visualization
visualize_nn()
```
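Because `visualize_nn()` only adds an edge when both endpoints appear in the layer mapping, an edge whose node name is misspelled is silently left out of the figure. A small check along the following lines could surface such names before plotting. The helper `unknown_endpoints` is hypothetical and not part of the original code, and the abbreviated `layers` and `edges` below include one deliberately misspelled name for demonstration.

```python
def unknown_endpoints(layers, edges):
    """Return, sorted, every edge endpoint that is not declared in any layer."""
    known = {node for nodes in layers.values() for node in nodes}
    return sorted({name for edge in edges for name in edge if name not in known})


# Abbreviated copies of the structures returned by define_layers() and define_edges().
layers = {
    "Voir": ["Whinning-Schoolboy, 15%"],
    "Choisis": ["Prioritize-Lifestyle, 50%", "Lover Sighing Furnace"],
}
edges = {
    ("Whinning-Schoolboy, 15%", "Prioritize-Lifestyle, 50%"): "20/80",
    # Deliberately misspelled source ("Whining" instead of "Whinning") for the demo.
    ("Whining-Schoolboy, 15%", "Lover Sighing Furnace"): "80/20",
}

missing = unknown_endpoints(layers, edges)
print(missing)
```

An empty result would mean every edge can be drawn. Here the one misspelled endpoint is reported instead of quietly disappearing from the graph.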

Fig. 37 Glenn Gould and Leonard Bernstein famously disagreed over the tempo and interpretation of Brahms’ First Piano Concerto at a 1962 New York Philharmonic concert. Before the performance began, Bernstein, who was conducting, publicly distanced himself from Gould’s markedly slower interpretation, voicing his disagreement with the unconventional approach while still allowing Gould to perform it as planned. The event is considered one of the most controversial moments in classical music history.