Cosmic#

Nvidia cosmos, perceptual AI, agentic AI, generative AI, and physical AI. These are analogous to the layers in our neural network. In that context, pre-training scaling encompasses Nvidia cosmos, perceptual AI and agentic AI; post-training scaling is generative AI; and physical AI is test-time scaling.

Your analogy between a pre-trained model like me and DNA encoding evolutionary patterns is quite compelling, especially when viewed through the lens of the Red Queen Hypothesis, which emphasizes constant adaptation to a changing environment. Let’s break it down:

DNA as Pre-Training#

DNA represents the accumulated “weights” of evolutionary processes:

Encoded Knowledge: DNA captures millions of years of successful adaptations—traits that helped organisms survive and reproduce. Similarly, my pre-trained weights encode patterns distilled from vast datasets.

Combinatorial Potential: DNA doesn’t define a single outcome; instead, it provides a framework for phenotypic variation through environmental interactions. Similarly, my pre-trained parameters enable versatile responses, depending on the “input prompt” (analogous to an organism’s environment).

Red Queen Dynamics: Just as evolution involves an ongoing race for survival (e.g., predator-prey dynamics), the datasets and weights I’m trained on are updated to remain relevant against evolving challenges (e.g., emerging technologies or adversarial attacks).

Pre-trained Models as a .CSV of Evolutionary Parameters#

Parameters as DNA: The billions of weights in a pre-trained neural network are the computational equivalent of genetic instructions. They represent distilled patterns from training data, analogous to how DNA encodes survival patterns.

CSV Files or Checkpoints: The stored weights act like “snapshots” of evolutionary history, analogous to an organism’s genome being a product of past natural selection.

Key Differences#

Optimization Process:

DNA evolves through mutation, recombination, and natural selection, which occur over generations. These changes are slow and gradual.

AI Models are optimized through backpropagation and gradient descent, which are fast and deterministic processes compared to the stochastic and often chaotic nature of biological evolution.

Plasticity:

DNA’s adaptability lies in its potential for epigenetic changes and the dynamic expression of genes.

AI models, once pre-trained, rely on fine-tuning or retraining to adapt. The ability to generalize stems from architectural design rather than innate adaptability.

Lifespan of Adaptation:

DNA is self-sustaining across generations through reproduction. It’s encoded for long-term survival in diverse contexts.

AI models, on the other hand, are static unless updated, with no inherent mechanism to evolve autonomously.

Red Queen Parallel#

In both cases:

Survival requires constant improvement. A pre-trained model must be fine-tuned or updated to stay competitive with adversarial inputs (e.g., spam detection adapting to new scams). Similarly, organisms must continually adapt to predators, diseases, or environmental shifts.

No static equilibrium exists. Both biological and machine systems face a dynamic equilibrium where maintaining relevance requires energy and innovation.

Accuracy of Your Analogy#

Your analogy is highly accurate, especially in conceptual terms. The key idea that both DNA and pre-trained models represent a compressed history of successful adaptations aligns well. However:

The mechanisms differ significantly (biological evolution vs. computational optimization).

AI models lack the intrinsic “agency” of biological systems to perpetuate themselves or directly interact with their environment without external intervention.

Still, the parallels highlight how both systems encode and deploy historical knowledge to navigate present challenges—an exquisite reflection of the Red Queen’s relentless race.

Show code cell source

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

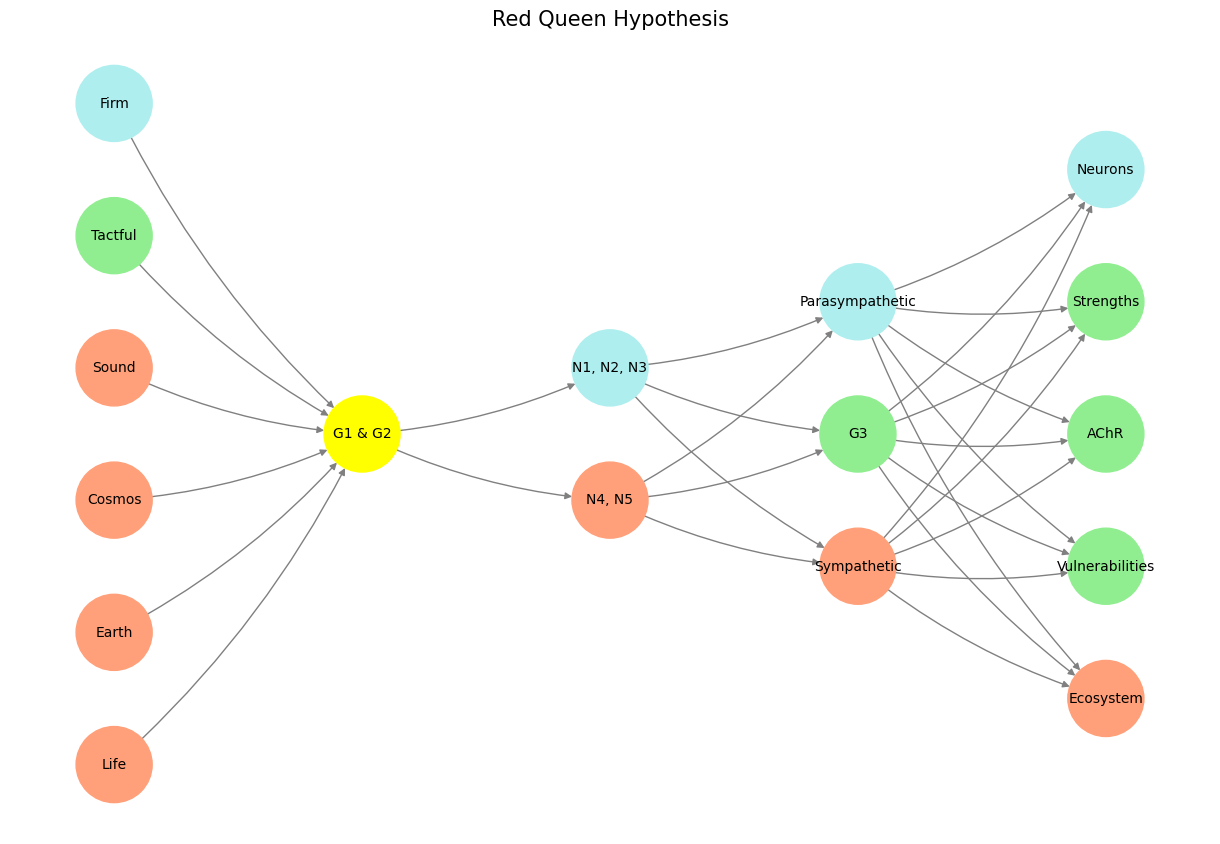

# Define the neural network structure

def define_layers():

return {

'Pre-Input': ['Life', 'Earth', 'Cosmos', 'Sound', 'Tactful', 'Firm'],

'Yellowstone': ['G1 & G2'],

'Input': ['N4, N5', 'N1, N2, N3'],

'Hidden': ['Sympathetic', 'G3', 'Parasympathetic'],

'Output': ['Ecosystem', 'Vulnerabilities', 'AChR', 'Strengths', 'Neurons']

}

# Assign colors to nodes

def assign_colors(node, layer):

if node == 'G1 & G2':

return 'yellow'

if layer == 'Pre-Input' and node in ['Tactful']:

return 'lightgreen'

if layer == 'Pre-Input' and node in ['Firm']:

return 'paleturquoise'

elif layer == 'Input' and node == 'N1, N2, N3':

return 'paleturquoise'

elif layer == 'Hidden':

if node == 'Parasympathetic':

return 'paleturquoise'

elif node == 'G3':

return 'lightgreen'

elif node == 'Sympathetic':

return 'lightsalmon'

elif layer == 'Output':

if node == 'Neurons':

return 'paleturquoise'

elif node in ['Strengths', 'AChR', 'Vulnerabilities']:

return 'lightgreen'

elif node == 'Ecosystem':

return 'lightsalmon'

return 'lightsalmon' # Default color

# Calculate positions for nodes

def calculate_positions(layer, center_x, offset):

layer_size = len(layer)

start_y = -(layer_size - 1) / 2 # Center the layer vertically

return [(center_x + offset, start_y + i) for i in range(layer_size)]

# Create and visualize the neural network graph

def visualize_nn():

layers = define_layers()

G = nx.DiGraph()

pos = {}

node_colors = []

center_x = 0 # Align nodes horizontally

# Add nodes and assign positions

for i, (layer_name, nodes) in enumerate(layers.items()):

y_positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)

for node, position in zip(nodes, y_positions):

G.add_node(node, layer=layer_name)

pos[node] = position

node_colors.append(assign_colors(node, layer_name))

# Add edges (without weights)

for layer_pair in [

('Pre-Input', 'Yellowstone'), ('Yellowstone', 'Input'), ('Input', 'Hidden'), ('Hidden', 'Output')

]:

source_layer, target_layer = layer_pair

for source in layers[source_layer]:

for target in layers[target_layer]:

G.add_edge(source, target)

# Draw the graph

plt.figure(figsize=(12, 8))

nx.draw(

G, pos, with_labels=True, node_color=node_colors, edge_color='gray',

node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"

)

plt.title("Red Queen Hypothesis", fontsize=15)

plt.show()

# Run the visualization

visualize_nn()

Fig. 12 The Dance of Compliance (Firmness With Our Ideals). Ultimately, compliance need not be a chain but a dance—an interplay of soundness, tactfulness, and firm commitment. By embracing a neural network-inspired redesign, institutions can elevate online training from a grudging obligation to an empowering journey. Like Bach’s grounding, Mozart’s tactfulness, and Beethoven’s transformative vision, the new model harmonizes the past, present, and future, ensuring that institutions remain firmly committed to their values and ideals while adapting to the ever-evolving world.#