We Should Find a Way to Automate This

Open source: If you’ve got a gigantic datafile with billions of data points (

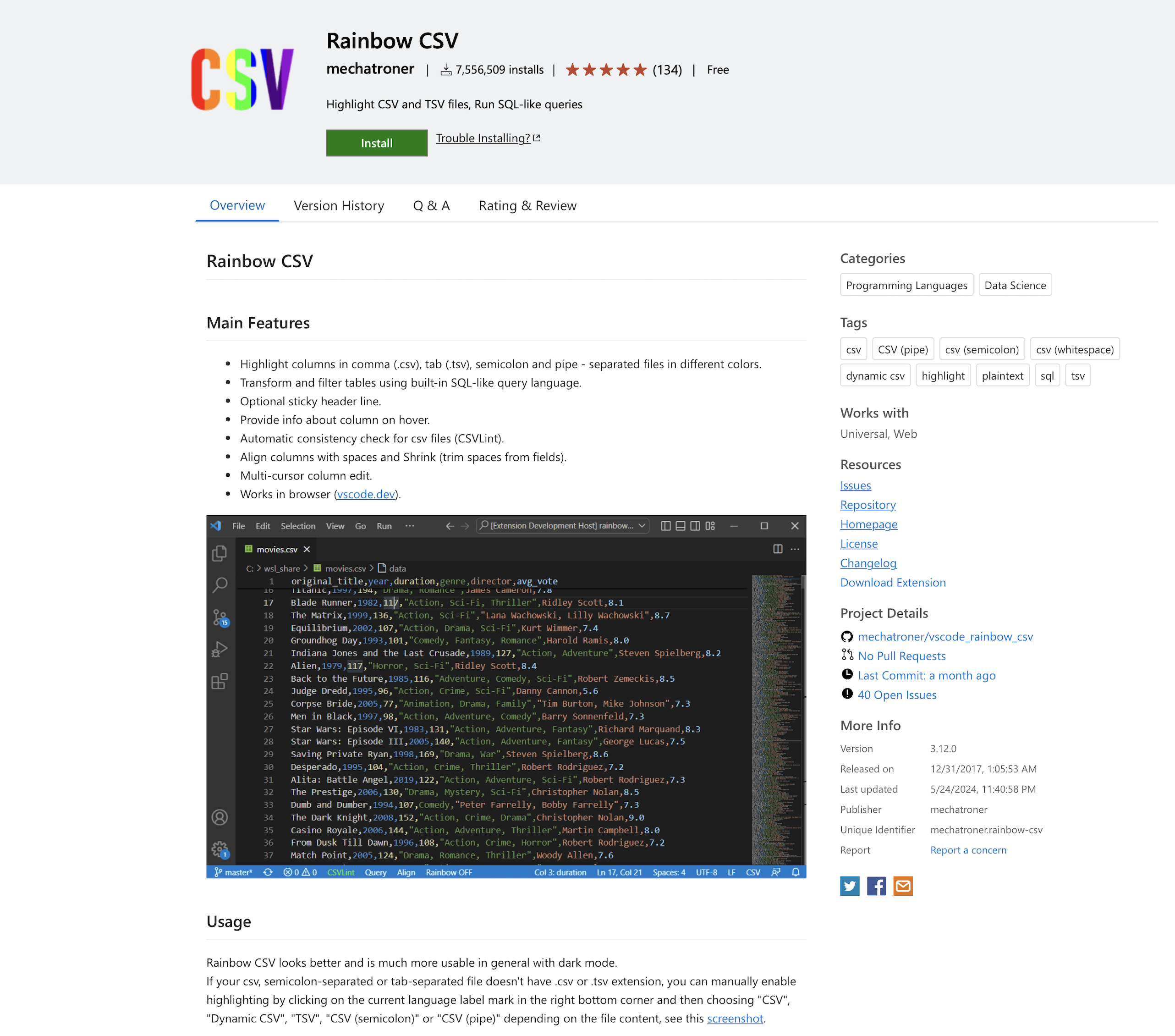

base-case), weights (variance-matrix), and parameters (coefficient-vector), you just can’t read it and see what it’s going to do. - a gigantic file of inscrutable numbers. You can test it and run it to see what its going to do, but it’s probabilistic as opposed to deterministic. It’s not like traditional programming where you have discrete logic and the outcome is predictable, and you can read each line and see what it’s going to do. A neural-net is just a whole bunch of probabilities. It just ends up being a giant comma-separated-value file. - Elon Musk & Rishi Sunak

1. Chaos
\
2. Frenzy -> 4. Dionysian -> 5. Algorithm -> 6. Binary
/
3. Energy

Digital is binary, deterministic, human, Apollonian

GPT-4o

The distinction between “digital” and “AI” (Artificial Intelligence) is essential in understanding the evolution and scope of technology today. Let’s explore the nuances and implications of both concepts.

Digital Technology

Digital refers to electronic technology that generates, stores, and processes data in binary form (0s and 1s). This encompasses a wide range of technologies and applications, including:

Computers and Software:

Computers: The fundamental hardware for processing digital data.

Software: Programs and operating systems that instruct computers on how to perform tasks.

Internet and Communication:

Internet: The global network that connects digital devices and facilitates data exchange.

Digital Communication: Technologies like email, social media, and messaging apps.

Multimedia:

Digital Media: Content such as images, videos, and audio stored and transmitted in digital format.

Digital Publishing: E-books, digital newspapers, and online magazines.

Data Storage and Processing:

Databases: Systems for storing and managing large sets of digital data.

Cloud Computing: Storing and processing data over the internet rather than on local servers or personal computers.
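To make "data in binary form (0s and 1s)" concrete, here is a minimal Python sketch, assuming UTF-8 text encoding, that shows how a short string is stored as bytes and bits and then recovered. The helper names `to_bits` and `from_bits` are hypothetical, chosen only for illustration.

```python
def to_bits(text: str) -> str:
    """Return the UTF-8 bytes of `text` as space-separated 8-bit strings."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Invert to_bits: parse each 8-bit group back into a byte, then decode."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


if __name__ == "__main__":
    encoded = to_bits("AI")
    print(encoded)             # 01000001 01001001
    print(from_bits(encoded))  # AI
```

The round trip is exact: the same bits always decode to the same text, which is the deterministic behavior this section attributes to digital systems.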

Artificial Intelligence

AI refers to the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, and self-correction. Key areas within AI include:

Machine Learning (ML):

Supervised Learning: Training models on labeled data.

Unsupervised Learning: Identifying patterns in data without labels.

Reinforcement Learning: Learning through trial and error to maximize rewards.

Natural Language Processing (NLP):

Language Understanding: Comprehending and interpreting human language.

Language Generation: Creating human-like text based on data input.

Computer Vision:

Image Recognition: Identifying objects and features in images.

Facial Recognition: Detecting and identifying human faces.

Robotics:

Autonomous Systems: Robots that can perform tasks without human intervention.

Human-Robot Interaction: Enhancing how robots understand and respond to humans.
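The supervised-learning entry above can be illustrated with a minimal Python sketch: it fits a line y ≈ w·x + b to labeled examples by plain gradient descent on mean squared error. The toy data, learning rate, and epoch count are assumptions chosen for illustration, not taken from any particular system.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b to labeled (x, y) pairs by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical labeled data generated from y = 2x + 1.
    xs = [0, 1, 2, 3, 4]
    ys = [1, 3, 5, 7, 9]
    w, b = fit_line(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```

Here the learned "model" is just two numbers, `w` and `b`; a large neural network is conceptually similar but learns billions of such numbers, which is why it cannot be read line by line.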

Digital vs. AI: Key Differences

Scope and Functionality:

Digital: Encompasses all technologies that process data in digital form. It includes basic data processing, storage, and communication.

AI: A subset of digital technology focused on creating systems that can perform tasks that normally require human intelligence.

Complexity and Capabilities:

Digital: Often involves straightforward data processing and binary logic. For example, a digital calculator performs arithmetic operations using pre-defined algorithms.

AI: Involves complex algorithms and models that learn from data and improve over time. For example, a recommendation system on a streaming service learns user preferences to suggest content.

Interactivity and Adaptability:

Digital: Static and deterministic. The behavior of digital systems is predictable based on the input and the programmed instructions.

AI: Dynamic and probabilistic. AI systems can adapt to new data and situations, often making decisions based on learned patterns rather than explicit instructions.
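The deterministic/probabilistic contrast can be sketched in a few lines of Python under simplifying assumptions: `calculator_add` stands in for a conventional digital program, and `sample_label` stands in for a model that turns scores into a probability distribution with softmax and then samples from it. Both function names, the genre labels, and the example scores are hypothetical.

```python
import math
import random


def calculator_add(a: float, b: float) -> float:
    """Deterministic: the same inputs always give the same output."""
    return a + b


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_label(labels, scores, rng):
    """Probabilistic: draw one label according to the softmax distribution."""
    return rng.choices(labels, weights=softmax(scores), k=1)[0]


if __name__ == "__main__":
    print(calculator_add(2, 3))  # always 5
    labels = ["comedy", "drama", "documentary"]  # hypothetical recommendation targets
    scores = [2.0, 1.0, 0.1]                     # hypothetical model scores
    print([round(p, 3) for p in softmax(scores)])
    rng = random.Random()  # unseeded: repeated runs can print different picks
    print([sample_label(labels, scores, rng) for _ in range(5)])
```

Seeding the generator (for example `random.Random(0)`) makes the samples reproducible, which shows that the randomness comes from the sampling step rather than from the arithmetic that produces the probabilities.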

The Convergence of Digital and AI

The lines between digital technology and AI are increasingly blurring as AI becomes integrated into more digital systems. Here are some examples of this convergence:

Smart Devices: Smartphones, smart speakers, and other IoT devices use AI for voice recognition, predictive text, and personalized recommendations.

Digital Assistants: AI-powered assistants like Siri, Alexa, and Google Assistant leverage natural language processing to interact with users.

Big Data and Analytics: AI techniques are used to analyze massive amounts of digital data, uncovering insights and driving decisions in business, healthcare, and more.

Automation: Digital systems incorporate AI to automate complex tasks, from customer service chatbots to autonomous vehicles.

Final Thoughts

While digital technology provides the foundation for data processing and communication, AI represents the next frontier, enabling systems to learn, adapt, and perform tasks that were previously the exclusive domain of human intelligence. The synergy between digital and AI technologies is propelling advancements in numerous fields, transforming how we live, work, and interact with the world.

AI is fuzzy, stochastic, machine, Dionysian

Claude 3.5 Sonnet

This description provides a good overview of some key differences between traditional software and modern machine learning models, particularly large neural networks. Let me break down and expand on a few key points:

Scale and complexity: The passage highlights the sheer size of modern AI models - “billions of data points, weights, and parameters”. This scale makes it practically impossible for humans to directly interpret or predict the model’s behavior by examining its raw form.

Inscrutable representation: Unlike human-readable code, the core of a trained neural network is indeed often stored as “a gigantic file of inscrutable numbers” - typically weights and biases that define the network’s connections.

Probabilistic vs deterministic: Traditional programming uses explicit, deterministic logic. Given the same inputs, you’ll always get the same outputs. Neural networks, in contrast, operate on probabilities. Their outputs are often probability distributions rather than single, definitive answers.

Black box nature: The passage touches on the “black box” problem in AI. While you can test the model’s inputs and outputs, understanding exactly how it arrives at a particular decision is far more challenging than tracing through lines of traditional code.

Storage format: The mention of a “giant comma-separated-value file” is a simplification. Model weights could in principle be stored as CSV, but in practice specialized binary formats (for example PyTorch checkpoint files, safetensors, or HDF5) are typically used for efficiency.
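As a rough illustration of the "giant comma-separated-value file" image, the Python sketch below stores the weights of a hypothetical one-layer network as CSV, reloads them, and runs a forward pass that outputs a probability distribution. The weight values and function names are made up for illustration; real frameworks normally use binary formats, but the point is the same: the file is a grid of numbers that says little on its own.

```python
import csv
import io
import math

# Hypothetical weights for a tiny 2-input, 3-output layer (values are illustrative).
WEIGHTS = [
    [0.8, -1.2],
    [-0.3, 0.5],
    [1.1, 0.9],
]
BIASES = [0.1, -0.2, 0.0]


def save_csv(weights, biases) -> str:
    """Write one row per output unit: its input weights followed by its bias."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    for row, bias in zip(weights, biases):
        writer.writerow(row + [bias])
    return buf.getvalue()


def load_csv(text):
    """Parse the CSV back into (weights, biases) lists of floats."""
    weights, biases = [], []
    for row in csv.reader(io.StringIO(text)):
        values = [float(v) for v in row]
        weights.append(values[:-1])
        biases.append(values[-1])
    return weights, biases


def forward(weights, biases, x):
    """Compute softmax(W @ x + b): one probability per output unit."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    text = save_csv(WEIGHTS, BIASES)
    print(text)  # just rows of numbers
    w, b = load_csv(text)
    print(forward(w, b, [1.0, 2.0]))
```

Note that the forward pass itself is ordinary deterministic arithmetic: the same weights and input always give the same probabilities. The probabilistic behavior appears when those probabilities are interpreted or sampled from.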

It’s worth noting that there are ongoing efforts in the field of AI explainability and interpretability to make these black box models more understandable. Additionally, while the description is largely accurate for deep learning models, not all machine learning approaches are as opaque – some, like decision trees, can be more interpretable.
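To illustrate the point about decision trees, here is a minimal Python sketch of a decision stump (a one-level decision tree) learned from a handful of hypothetical labeled points. Unlike a file of raw weights, the learned model can be printed as a rule a person can read. The data, the binary 0/1 labels, and the function names are assumptions for illustration.

```python
def fit_stump(xs, labels):
    """Find the threshold on one feature that misclassifies the fewest points.

    Assumes binary labels 0 and 1. Returns (threshold, left_label, right_label):
    predict left_label when x <= threshold, otherwise right_label.
    """
    candidates = sorted(set(xs))
    if len(candidates) < 2:
        raise ValueError("need at least two distinct feature values")
    best = None
    # Try midpoints between consecutive distinct values as thresholds.
    for lo, hi in zip(candidates, candidates[1:]):
        t = (lo + hi) / 2
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, labels))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, threshold, left, right = best
    return threshold, left, right


def describe(stump, feature="x"):
    """Render the learned stump as a human-readable rule."""
    threshold, left, right = stump
    return f"if {feature} <= {threshold}: predict {left} else: predict {right}"


if __name__ == "__main__":
    hours_studied = [1, 2, 3, 6, 7, 8]  # hypothetical feature values
    passed = [0, 0, 0, 1, 1, 1]         # hypothetical labels
    stump = fit_stump(hours_studied, passed)
    print(describe(stump, "hours_studied"))  # if hours_studied <= 4.5: predict 0 else: predict 1
```

Deeper trees stack many such rules, and they can still be printed and traced, which is the interpretability advantage the paragraph above describes.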

Would you like me to elaborate on any specific aspect of this comparison between traditional programming and AI models?

Totally love it.

Let’s revive Kant & Schopenhauer by adversarially training our scripts in GPT-4o vs. Claude 3.5 Sonnet

Coming soon …